Kyuho Bae

Inha University

Improved and efficient inter-vehicle distance estimation using road gradients of both ego and target vehicles

Apr 01, 2021

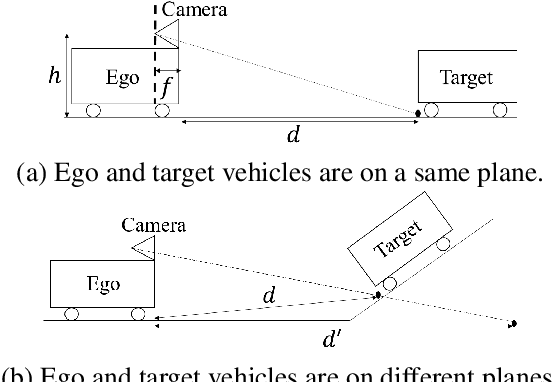

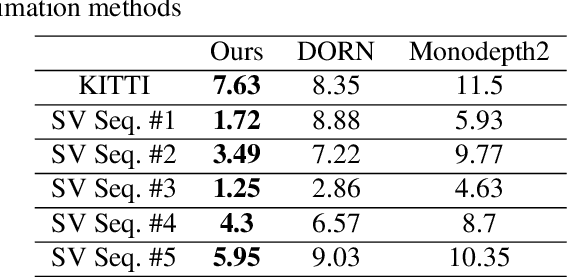

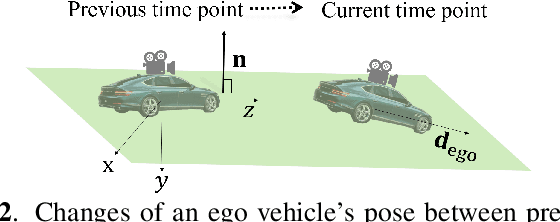

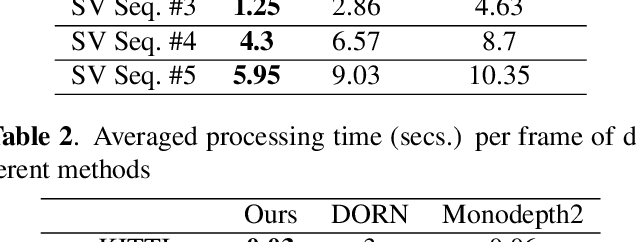

Abstract: In advanced driver assistance systems and autonomous driving, it is crucial to estimate the distances between an ego vehicle and target vehicles. Existing inter-vehicle distance estimation methods assume that the ego and target vehicles drive on the same ground plane. In practical driving environments, however, they may drive on different ground planes. This paper proposes an inter-vehicle distance estimation framework that accounts for slope changes in the road ahead by estimating the road gradients of *both* the ego vehicle and the target vehicles and by using a 2D object detection deep network. Numerical experiments demonstrate that the proposed method significantly improves both distance estimation accuracy and time complexity compared to deep learning-based depth estimation methods.

5D Light Field Synthesis from a Monocular Video

Dec 23, 2019

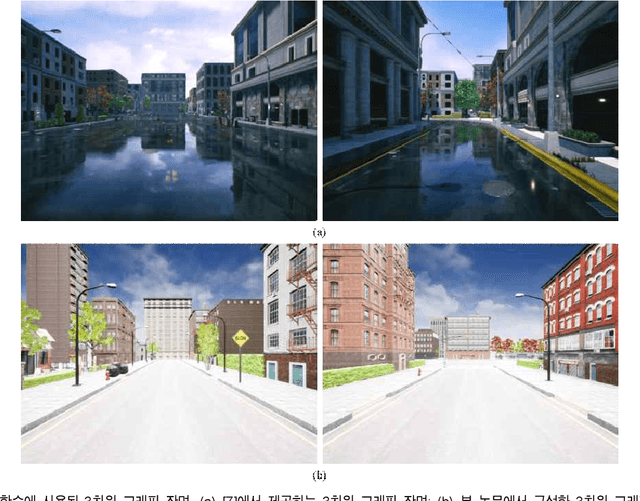

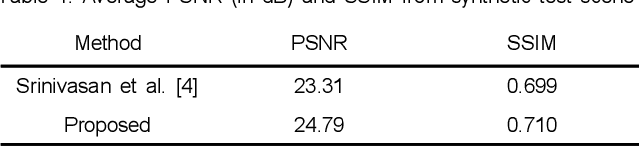

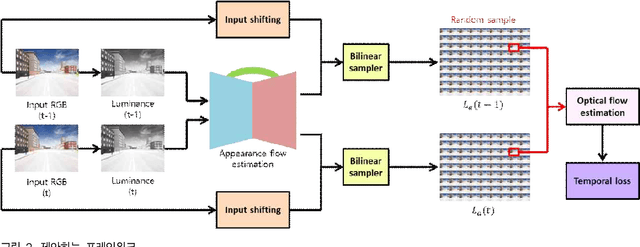

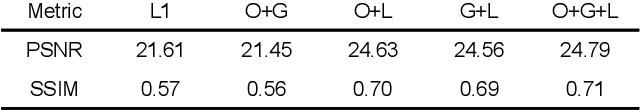

Abstract: Commercially available light field cameras have difficulty capturing 5D (4D + time) light field videos. They can either capture only still light field images or are too expensive for typical users to capture light field video. To tackle this problem, we propose a deep learning-based method for synthesizing a light field video from a monocular video. Because no light field dataset is available, we propose a new synthetic light field video dataset that renders photorealistic scenes using the UnrealCV rendering engine. The proposed deep learning framework synthesizes the light field video with a full set (9$\times$9) of sub-aperture images from a normal monocular video. The proposed network consists of three sub-networks: feature extraction, 5D light field video synthesis, and temporal consistency refinement. Experimental results show that our model can successfully synthesize light field video for both synthetic and real scenes and outperforms previous frame-by-frame methods quantitatively and qualitatively. The synthesized light field can be used for conventional light field applications, namely depth estimation, viewpoint change, and refocusing.
