Karim Armanious

Unsupervised Medical Image Translation Using Cycle-MedGAN

Mar 08, 2019

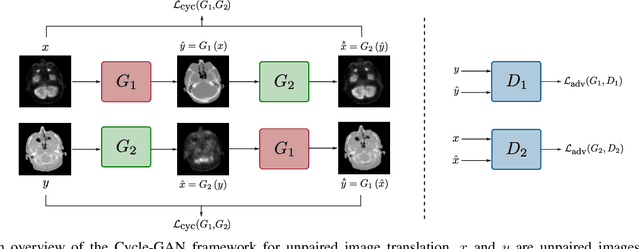

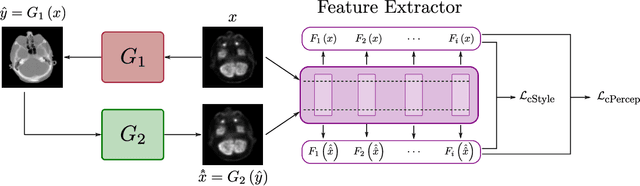

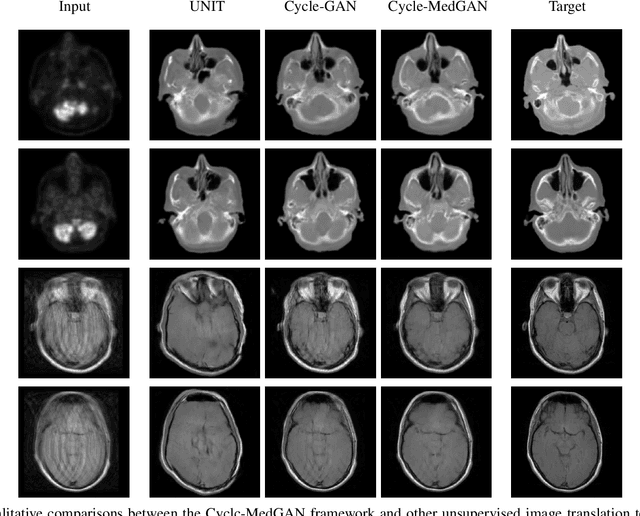

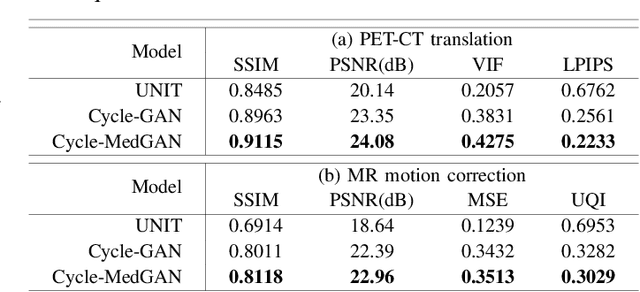

Abstract: Image-to-image translation is a new field in computer vision with multiple potential applications in the medical domain. However, supervised image translation frameworks require co-registered datasets that are paired in a pixel-wise sense. Such datasets are often difficult to acquire in realistic medical scenarios. On the other hand, unsupervised translation frameworks often result in blurred translated images with unrealistic details. In this work, we propose a new unsupervised translation framework titled Cycle-MedGAN. The proposed framework utilizes new non-adversarial cycle losses which direct the framework to minimize the textural and perceptual discrepancies in the translated images. Qualitative and quantitative comparisons against other unsupervised translation approaches demonstrate the performance of the proposed framework for PET-CT translation and MR motion correction.

An Adversarial Super-Resolution Remedy for Radar Design Trade-offs

Mar 04, 2019

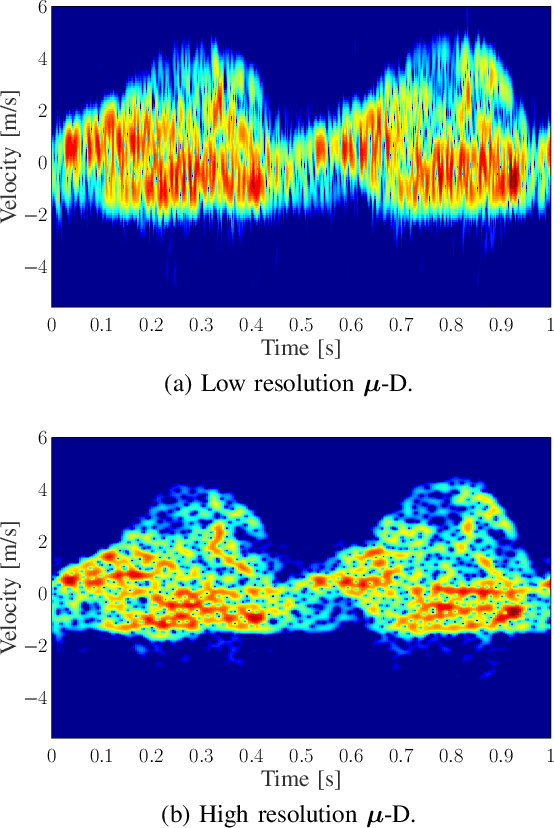

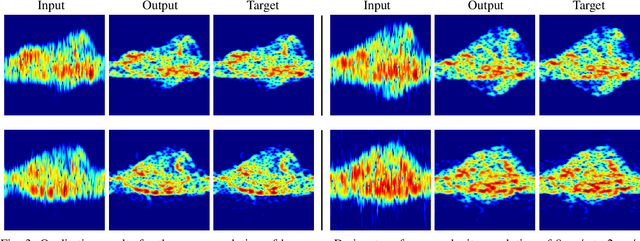

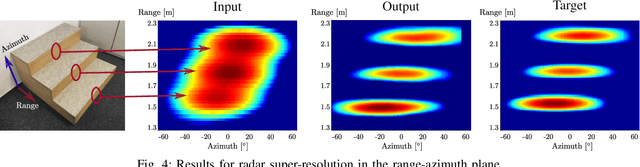

Abstract: Radar is of vital importance in many fields, such as autonomous driving, safety and surveillance applications. However, it suffers from stringent constraints on its design parametrization, leading to multiple trade-offs. For example, the bandwidth in FMCW radars is inversely proportional to both the maximum unambiguous range and the range resolution. In this work, we introduce a new method for circumventing radar design trade-offs. We propose the use of recent advances in computer vision, more specifically generative adversarial networks (GANs), to enhance low-resolution radar acquisitions into higher-resolution counterparts while maintaining the advantages of the low-resolution parametrization. The capability of the proposed method was evaluated on the velocity resolution and range-azimuth trade-offs in micro-Doppler signatures and FMCW uniform linear array (ULA) radars, respectively.
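The bandwidth trade-off mentioned in this abstract can be illustrated with the standard textbook FMCW relations. The sketch below is not taken from the paper: the function names are hypothetical, and it assumes a linear chirp, real (non-complex) sampling of the beat signal, and a fixed chirp duration and sampling rate.

```python
C = 299_792_458.0  # speed of light in m/s


def range_resolution(bandwidth_hz):
    """Smallest resolvable range difference: c / (2 * B)."""
    return C / (2.0 * bandwidth_hz)


def max_unambiguous_range(bandwidth_hz, chirp_time_s, sample_rate_hz):
    """Largest range whose beat frequency stays below Nyquist.

    Assumes real sampling, so the beat frequency is limited to fs / 2.
    The beat frequency for a target at range R is 2 * R * slope / c.
    """
    slope = bandwidth_hz / chirp_time_s  # chirp slope in Hz per second
    return C * (sample_rate_hz / 2.0) / (2.0 * slope)


if __name__ == "__main__":
    # Illustrative parameters only: 1 ms chirp, 1 MHz sampling rate.
    for bandwidth in (1e9, 2e9):
        print(
            f"B = {bandwidth / 1e9:.0f} GHz: "
            f"resolution = {range_resolution(bandwidth):.3f} m, "
            f"max range = {max_unambiguous_range(bandwidth, 1e-3, 1e6):.1f} m"
        )
```

Under these assumptions, doubling the bandwidth halves both quantities: the resolution cell becomes finer, but the maximum unambiguous range shrinks by the same factor.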

A Study of Human Body Characteristics Effect on Micro-Doppler-Based Person Identification using Deep Learning

Nov 17, 2018

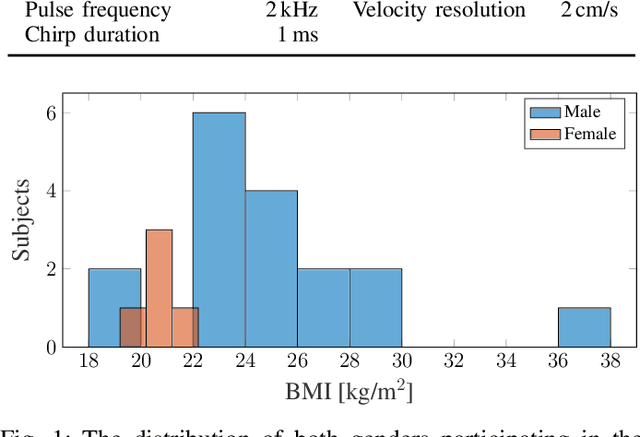

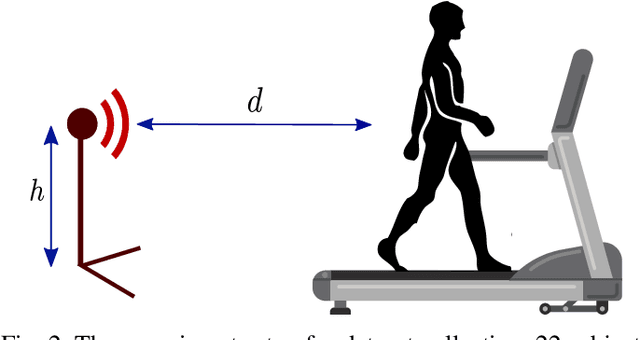

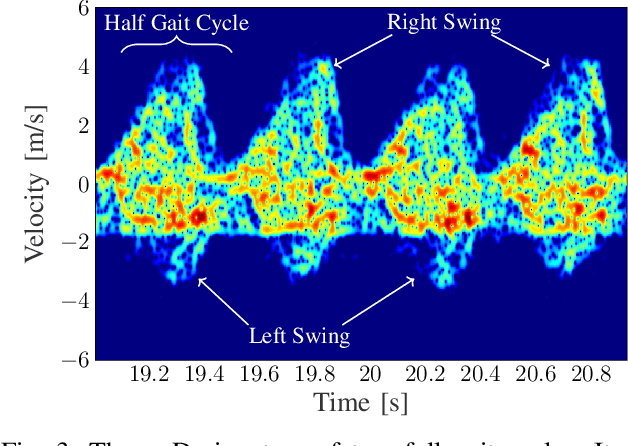

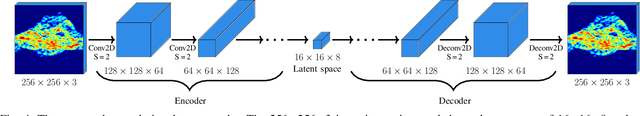

Abstract: Smart surveillance requires a sensing system that can capture accurate and detailed information about human walking style. Radar micro-Doppler ($\boldsymbol{\mu}$-D) analysis has proven to be a reliable metric for studying human locomotion. Thus, $\boldsymbol{\mu}$-D signatures can be used to identify humans based on their walking styles. Additionally, the signatures contain information about the radar cross section (RCS) of the moving subject. This paper investigates the effect of human body characteristics on human identification based on $\boldsymbol{\mu}$-D signatures. In our proposed experimental setup, a treadmill is used to collect $\boldsymbol{\mu}$-D signatures of 22 subjects with different genders and body characteristics. Convolutional autoencoders (CAE) are then used to extract the latent space representation from the $\boldsymbol{\mu}$-D signatures. This representation is then interpreted in two dimensions using t-distributed stochastic neighbor embedding (t-SNE). Our study shows that the body mass index (BMI) is correlated with the $\boldsymbol{\mu}$-D signature of the walking subject. A 50-layer deep residual network is then trained to identify the walking subject based on the $\boldsymbol{\mu}$-D signature. We achieve an accuracy of 98% on the test set with a high signal-to-noise ratio (SNR) and 84% in the case of different SNR levels.

Towards Adversarial Denoising of Radar Micro-Doppler Signatures

Nov 12, 2018

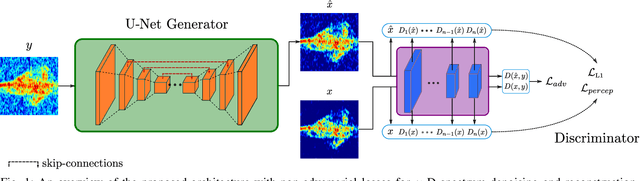

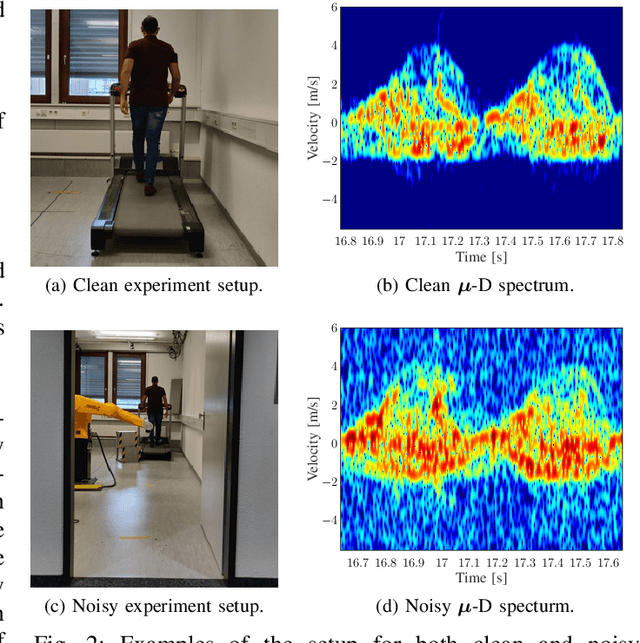

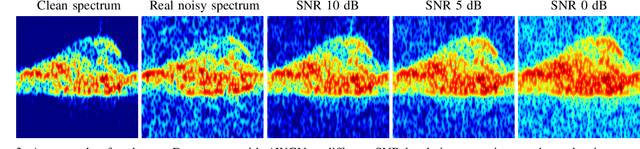

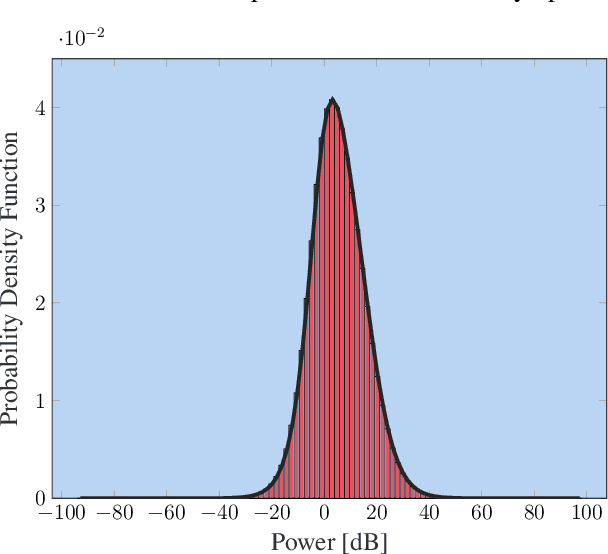

Abstract: Generative Adversarial Networks (GANs) are considered the state-of-the-art in the field of image generation. They learn the joint distribution of the training data and attempt to generate new data samples in high dimensional space following the same distribution as the input. Recent improvements in GANs opened the field to many other computer vision applications based on improving and changing the characteristics of the input image to follow some given training requirements. In this paper, we propose a novel technique for the denoising and reconstruction of the micro-Doppler ($\boldsymbol{\mu}$-D) spectra of walking humans based on GANs. Two sets of experiments were conducted on 22 subjects walking on a treadmill at an intermediate velocity using a 25 GHz CW radar. In one set, a clean $\boldsymbol{\mu}$-D spectrum is collected for each subject by placing the radar at a close distance to the subject. In the other set, variations are made to the experimental setup to introduce different noise and clutter effects on the spectrum by changing the distance and placing reflective objects between the radar and the target. Synthetic paired noisy and noise-free spectra were used for training, while validation was carried out on the real noisy measured data. Finally, qualitative and quantitative comparisons with other classical radar denoising approaches in the literature demonstrated that the proposed GAN framework performs better and is more robust to different noise levels.

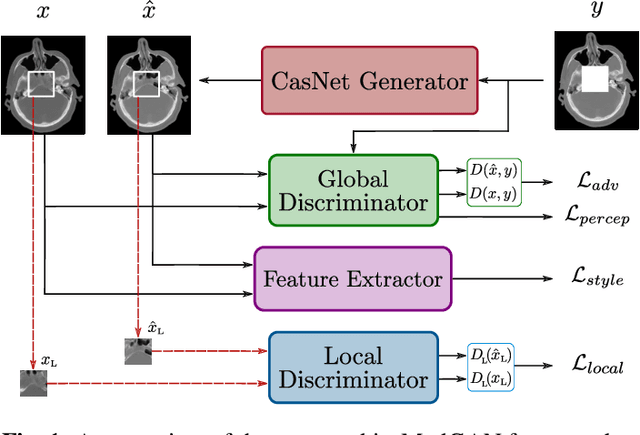

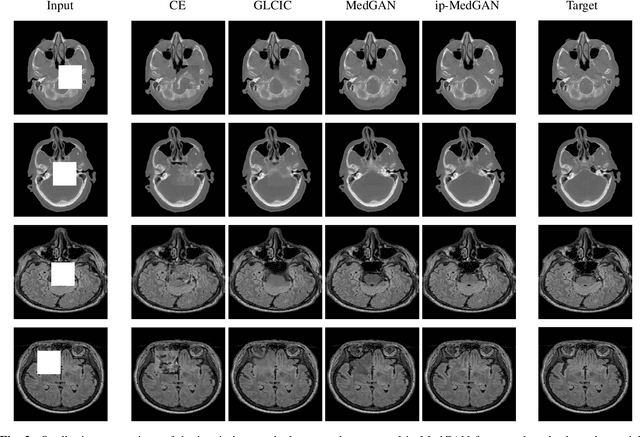

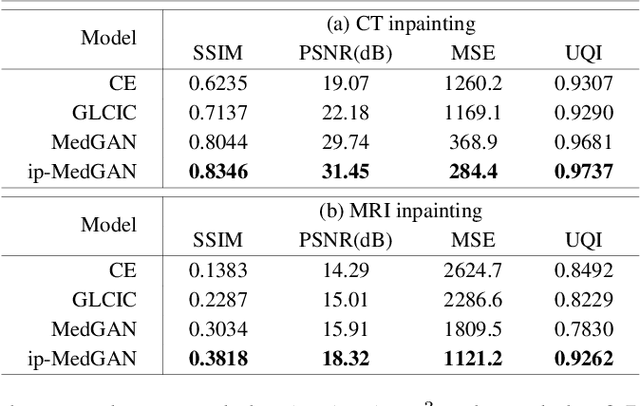

Adversarial Inpainting of Medical Image Modalities

Oct 15, 2018

Abstract: Numerous factors could lead to partial deterioration of medical images. For example, metallic implants lead to localized perturbations in MRI scans. This affects further post-processing tasks such as attenuation correction in PET/MRI or radiation therapy planning. In this work, we propose the inpainting of medical images via Generative Adversarial Networks (GANs). The proposed framework incorporates two patch-based discriminator networks with additional style and perceptual losses for the inpainting of missing information in a realistically detailed and contextually consistent manner. The proposed framework outperformed other natural image inpainting techniques both qualitatively and quantitatively on two different medical modalities.

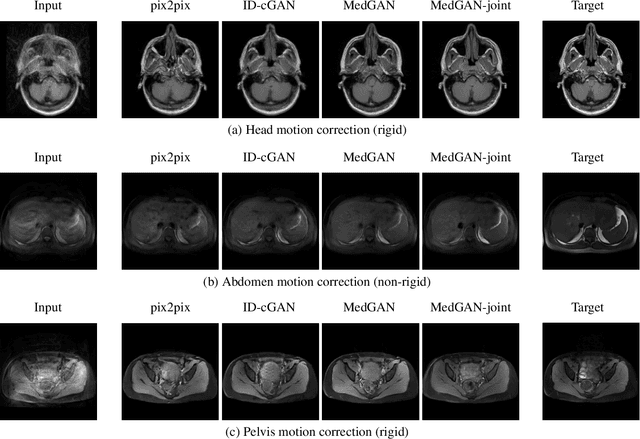

Retrospective correction of Rigid and Non-Rigid MR motion artifacts using GANs

Oct 06, 2018

Abstract: Motion artifacts are a primary source of magnetic resonance (MR) image quality deterioration with strong repercussions on diagnostic performance. Currently, MR motion correction is carried out either prospectively, with the help of motion tracking systems, or retrospectively, mainly by utilizing computationally expensive iterative algorithms. In this paper, we utilize a new adversarial framework, titled MedGAN, for the joint retrospective correction of rigid and non-rigid motion artifacts in different body regions and without the need for a reference image. MedGAN utilizes a unique combination of non-adversarial losses and a new generator architecture to capture the textures and fine-detailed structures of the desired artifact-free MR images. Quantitative and qualitative comparisons with other adversarial techniques illustrate the performance of the proposed model.

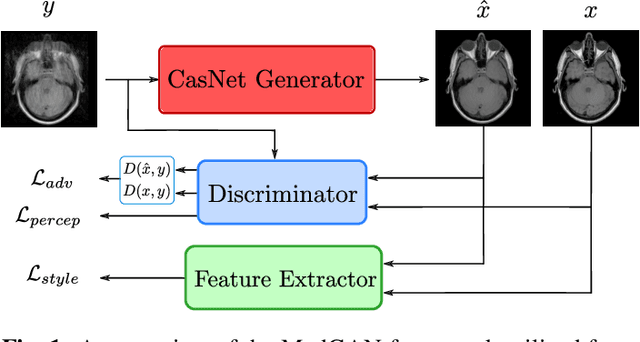

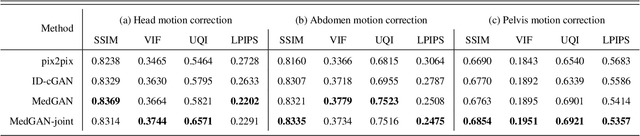

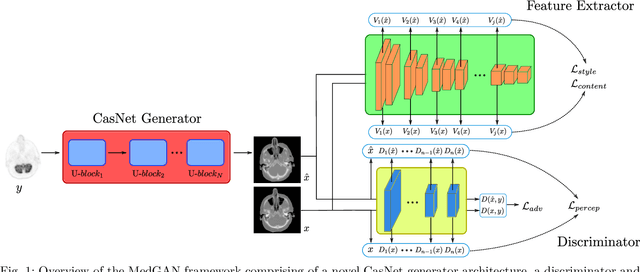

MedGAN: Medical Image Translation using GANs

Jun 17, 2018

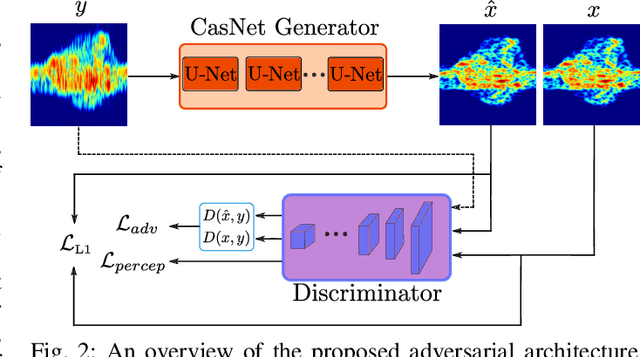

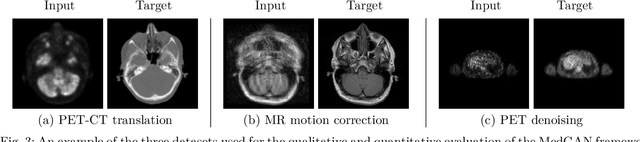

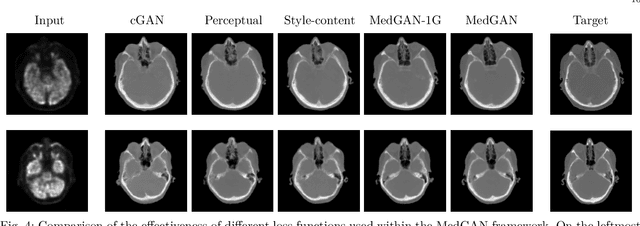

Abstract: Image-to-image translation is considered a next frontier in the field of medical image analysis, with numerous potential applications. However, recent advances in this field offer individualized solutions, either by utilizing specialized architectures which are task-specific or by suffering from limited capacities and thus requiring refinement through non-end-to-end training. In this paper, we propose a novel general-purpose framework for medical image-to-image translation, titled MedGAN, which operates in an end-to-end manner on the image level. MedGAN builds upon recent advances in the field of generative adversarial networks (GANs) by combining the adversarial framework with a unique combination of non-adversarial losses which captures the high- and low-frequency components of the desired target modality. Namely, we utilize a discriminator network as a trainable feature extractor which penalizes the discrepancy between the translated medical images and the desired modalities in the pixel and perceptual sense. Moreover, style-transfer losses are utilized to match the textures and fine structures of the desired target images to the outputs. Additionally, we present a novel generator architecture, titled CasNet, which enhances the sharpness of the translated medical outputs through progressive refinement via encoder-decoder pairs. To demonstrate the effectiveness of our approach, we apply MedGAN to three novel and challenging applications: PET-CT translation, correction of MR motion artefacts and PET image denoising. Qualitative and quantitative comparisons with state-of-the-art techniques have emphasized the superior performance of the proposed framework. MedGAN can be directly applied as a general framework for future medical translation tasks.
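As a rough illustration of the style-transfer losses mentioned in this abstract: in the neural style-transfer literature, such losses are commonly computed as the squared distance between Gram matrices of feature maps. The sketch below follows that common formulation and is not the MedGAN implementation; the function names, the plain-list feature layout and the normalization by the number of spatial positions are all assumptions made for illustration.

```python
def gram_matrix(features):
    """Channel-by-channel correlations of a feature map.

    `features` is a list of C channels, each a flat list of N activations
    (hypothetical layout chosen for this sketch). Entries are normalized
    by the number of spatial positions N.
    """
    n = len(features[0])
    return [
        [sum(a * b for a, b in zip(fi, fj)) / n for fj in features]
        for fi in features
    ]


def style_loss(feat_output, feat_target):
    """Squared Frobenius distance between the two Gram matrices."""
    g_out = gram_matrix(feat_output)
    g_tgt = gram_matrix(feat_target)
    return sum(
        (x - y) ** 2
        for row_out, row_tgt in zip(g_out, g_tgt)
        for x, y in zip(row_out, row_tgt)
    )


if __name__ == "__main__":
    target = [[1.0, 2.0], [3.0, 4.0]]    # 2 channels, 2 spatial positions
    shuffled = [[2.0, 1.0], [4.0, 3.0]]  # same activations, positions swapped
    different = [[1.0, 2.0], [4.0, 3.0]]
    print(style_loss(shuffled, target))
    print(style_loss(different, target))
```

Because the Gram matrix sums over spatial positions, this kind of loss is insensitive to where a pattern occurs and responds only to which channels co-activate, which is why it is usually paired with pixel-wise or perceptual terms that do constrain spatial layout.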
