Kanishka Misra

A Property Induction Framework for Neural Language Models

May 13, 2022

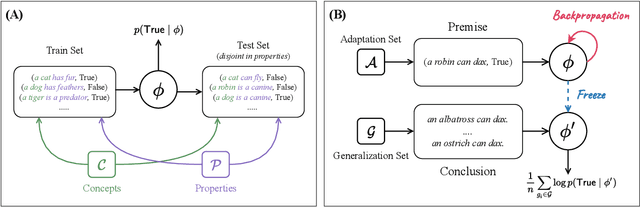

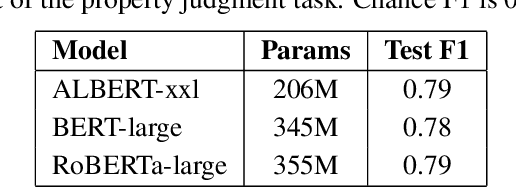

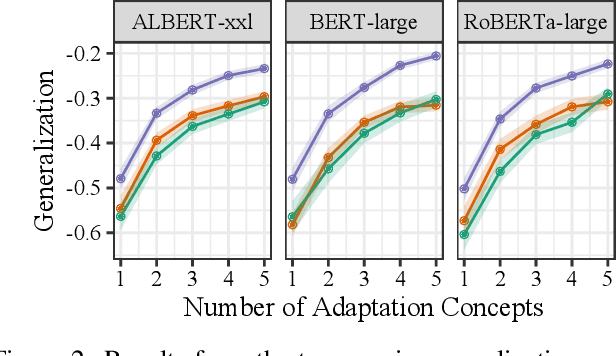

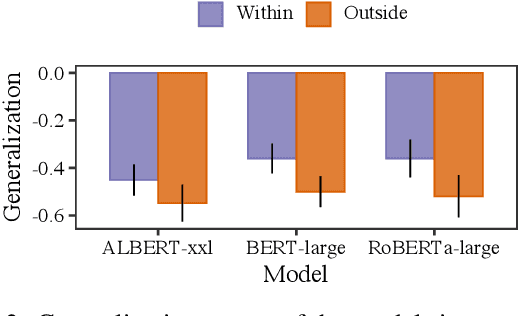

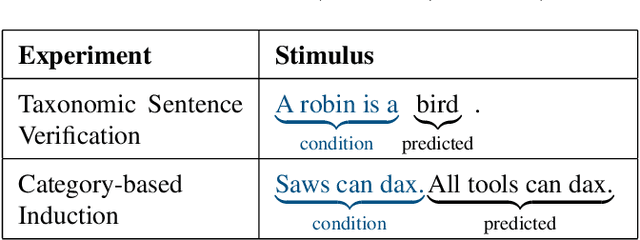

Abstract: To what extent can experience from language contribute to our conceptual knowledge? Computational explorations of this question have shed light on the ability of powerful neural language models (LMs) -- informed solely through text input -- to encode and elicit information about concepts and properties. To extend this line of research, we present a framework that uses LMs to perform property induction -- a task in which humans generalize novel property knowledge (has sesamoid bones) from one or more concepts (robins) to others (sparrows, canaries). Patterns of property induction observed in humans have shed considerable light on the nature and organization of human conceptual knowledge. Inspired by this insight, we use our framework to explore the property inductions of LMs, and find that they show an inductive preference to generalize novel properties on the basis of category membership, suggesting the presence of a taxonomic bias in their representations.

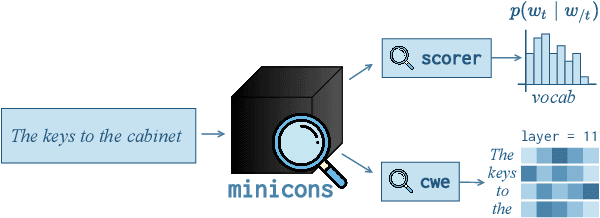

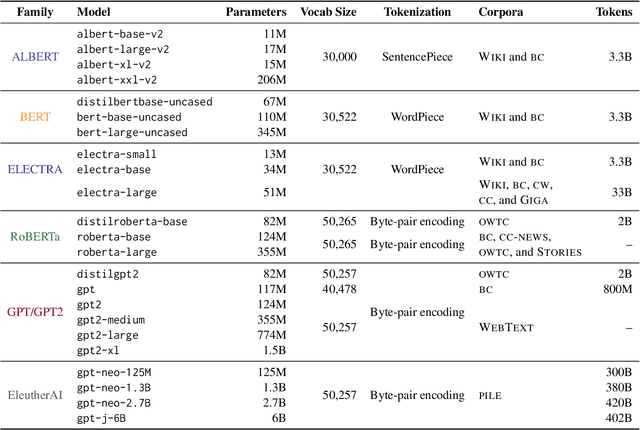

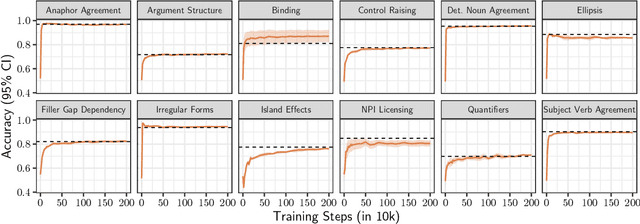

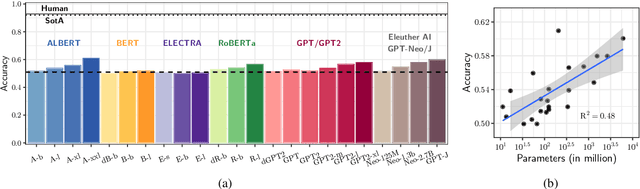

minicons: Enabling Flexible Behavioral and Representational Analyses of Transformer Language Models

Mar 24, 2022

Abstract: We present minicons, an open-source library that provides a standard API for researchers interested in conducting behavioral and representational analyses of transformer-based language models (LMs). Specifically, minicons enables researchers to apply analysis methods at two levels: (1) at the prediction level -- by providing functions to efficiently extract word/sentence-level probabilities; and (2) at the representational level -- by facilitating efficient extraction of word/phrase-level vectors from one or more layers. In this paper, we describe the library and apply it to two motivating case studies: one focusing on the learning dynamics of the BERT architecture on relative grammatical judgments, and the other on benchmarking 23 different LMs on zero-shot abductive reasoning. minicons is available at https://github.com/kanishkamisra/minicons
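To make the "prediction level" concrete, here is a minimal sketch of how a sentence-level score can be derived from per-token log-probabilities. It is not the minicons API: the function name `sequence_log_prob` and the numbers below are hypothetical, and a real analysis would obtain the token log-probabilities from a language model.

```python
import math


def sequence_log_prob(token_log_probs, normalize=False):
    """Combine per-token log-probabilities into one sentence-level score.

    With normalize=False the score is the summed log-probability of the
    sentence; with normalize=True it is the per-token average, which makes
    sentences of different lengths easier to compare.
    """
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    total = math.fsum(token_log_probs)
    return total / len(token_log_probs) if normalize else total


# Hypothetical per-token log-probabilities for a minimal pair
# (illustrative values only, not output from any model).
grammatical = [-2.1, -0.7, -1.3, -0.4]
ungrammatical = [-2.1, -0.7, -4.8, -0.4]

# A relative grammatical judgment compares the two sentence scores.
prefers_grammatical = (
    sequence_log_prob(grammatical) > sequence_log_prob(ungrammatical)
)
```

Comparing summed scores is reasonable here because both sentences have the same number of tokens; for sentences of unequal length, the normalized variant is one common alternative.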

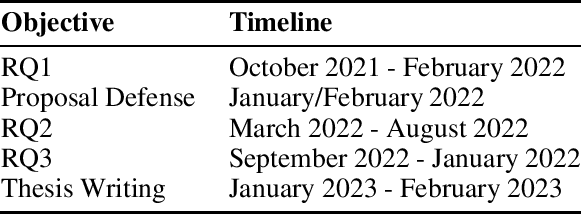

On Semantic Cognition, Inductive Generalization, and Language Models

Nov 04, 2021

Abstract: My doctoral research focuses on understanding semantic knowledge in neural network models trained solely to predict natural language (referred to as language models, or LMs), by drawing on insights from the study of concepts and categories grounded in cognitive science. I propose a framework inspired by 'inductive reasoning,' a phenomenon that sheds light on how humans utilize background knowledge to make inductive leaps and generalize from new pieces of information about concepts and their properties. Drawing from experiments that study inductive reasoning, I propose to analyze semantic inductive generalization in LMs using phenomena observed in the human induction literature, investigate inductive behavior on tasks such as implicit reasoning and emergent feature recognition, and analyze and relate induction dynamics to the learned conceptual representation space.

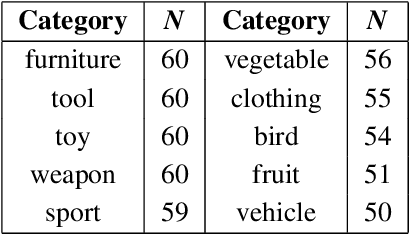

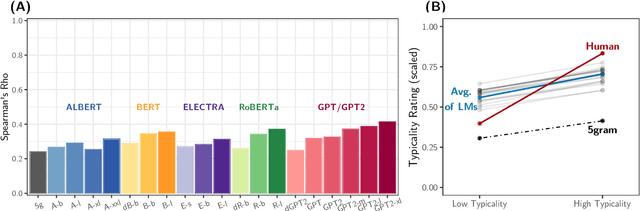

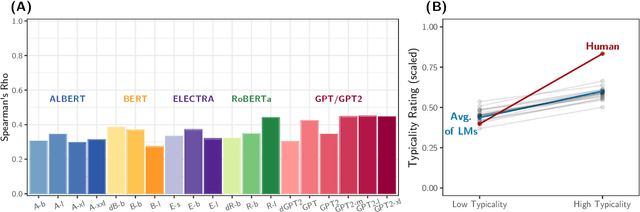

Do language models learn typicality judgments from text?

May 06, 2021

Abstract: Building on research arguing for the possibility of conceptual and categorical knowledge acquisition through statistics contained in language, we evaluate predictive language models (LMs) -- informed solely by textual input -- on a prevalent phenomenon in cognitive science: typicality. Inspired by experiments that involve language processing and show robust typicality effects in humans, we propose two tests for LMs. Our first test targets whether typicality modulates LM probabilities in assigning taxonomic category memberships to items. The second test investigates sensitivities to typicality in LMs' probabilities when extending new information about items to their categories. Both tests show modest -- but not completely absent -- correspondence between LMs and humans, suggesting that text-based exposure alone is insufficient to acquire typicality knowledge.

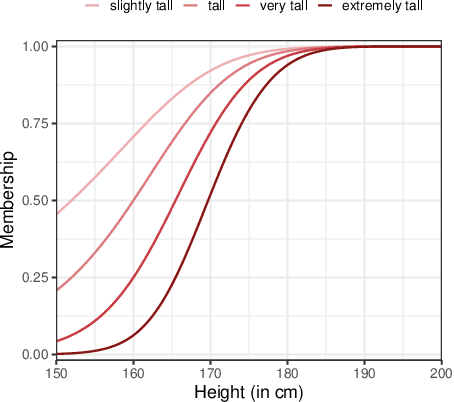

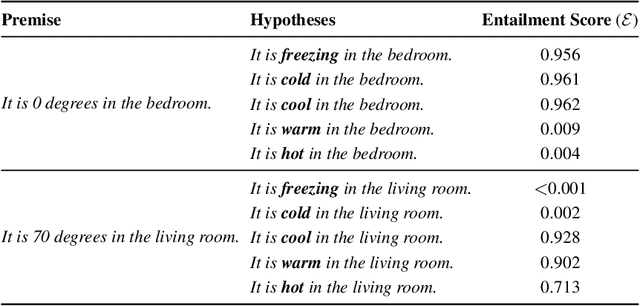

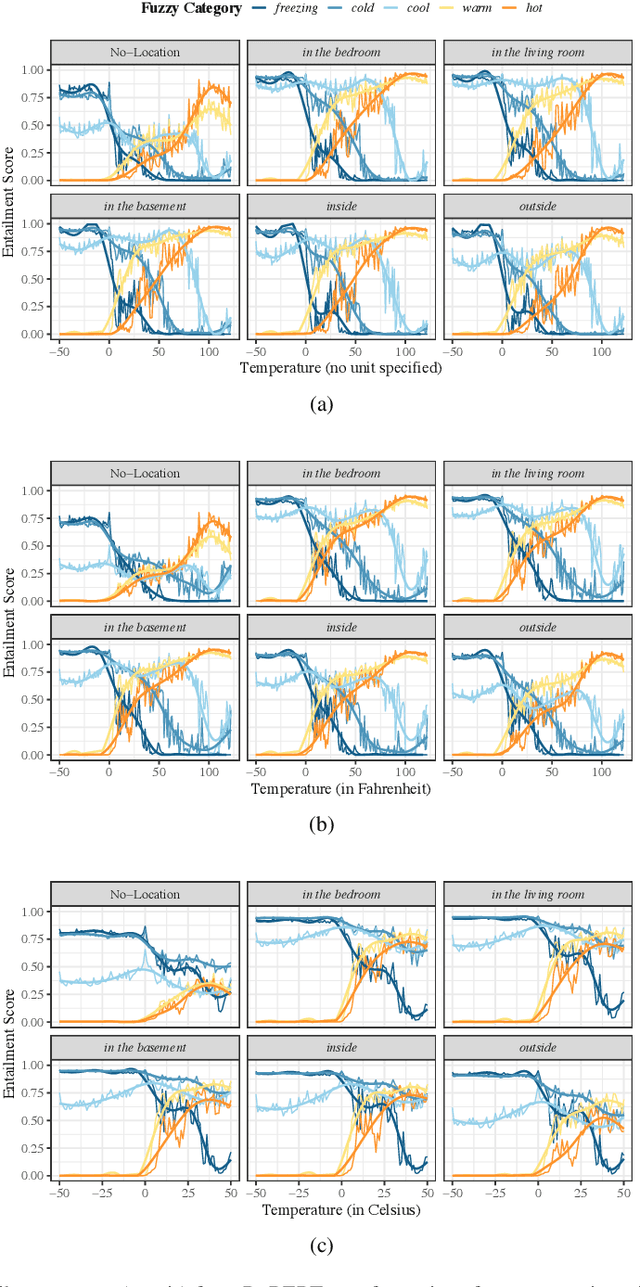

Finding Fuzziness in Neural Network Models of Language Processing

Apr 22, 2021

Abstract: Humans often communicate by using imprecise language, suggesting that fuzzy concepts with unclear boundaries are prevalent in language use. In this paper, we test the extent to which models trained to capture the distributional statistics of language show correspondence to fuzzy-membership patterns. Using the task of natural language inference, we test a recent state-of-the-art model on the classical case of temperature, by examining its mapping of temperature data to fuzzy perceptions such as "cool", "hot", etc. We find the model to show patterns that are similar to classical fuzzy-set theoretic formulations of linguistic hedges, albeit with a substantial amount of noise, suggesting that models trained solely on language show promise in encoding fuzziness.
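For readers unfamiliar with fuzzy-set treatments of hedges, the sketch below shows one classical formulation (Zadeh's), in which "very" squares a membership value and "somewhat" takes its square root. The abstract does not say which formulation or membership function the paper uses, so the linear ramp for "hot" and its 20 °C and 35 °C endpoints are assumptions chosen purely for illustration.

```python
def hot_membership(temp_c, low=20.0, high=35.0):
    """Degree (0 to 1) to which a temperature counts as "hot".

    Assumed shape: a linear ramp from `low` to `high` degrees Celsius.
    Both endpoints are hypothetical, not taken from the paper.
    """
    if temp_c <= low:
        return 0.0
    if temp_c >= high:
        return 1.0
    return (temp_c - low) / (high - low)


def very(mu):
    """Zadeh's concentration operator: "very X" has membership mu squared."""
    return mu ** 2


def somewhat(mu):
    """Zadeh's dilation operator: "somewhat X" has membership sqrt(mu)."""
    return mu ** 0.5


# At 27.5 degrees the base membership is 0.5, so the hedges order as
# very hot (0.25) <= hot (0.5) <= somewhat hot (about 0.71).
mu = hot_membership(27.5)
ordering_holds = very(mu) <= mu <= somewhat(mu)
```

Because membership values lie between 0 and 1, squaring always lowers them and taking the square root always raises them, which is what gives the hedges their intensifying and weakening effects.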

Exploring Lexical Irregularities in Hypothesis-Only Models of Natural Language Inference

Jan 22, 2021

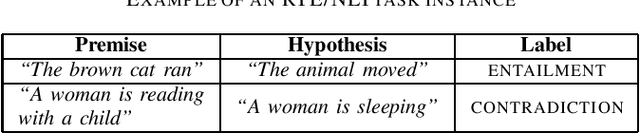

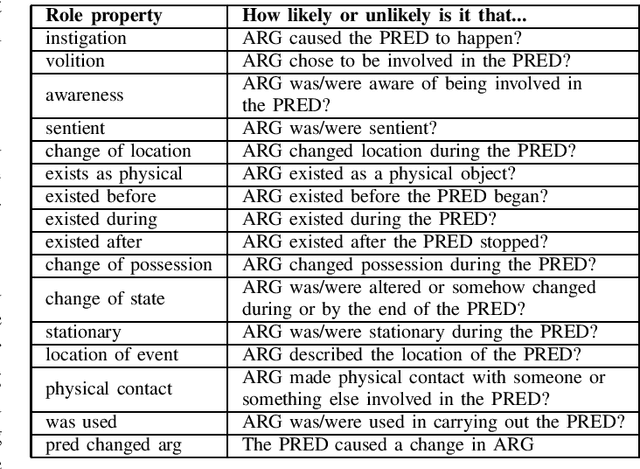

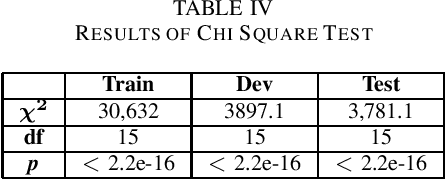

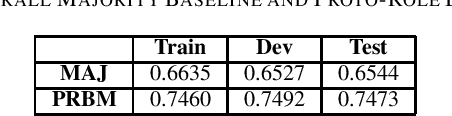

Abstract: Natural Language Inference (NLI) or Recognizing Textual Entailment (RTE) is the task of predicting the entailment relation between a pair of sentences (premise and hypothesis). This task has been described as a valuable testing ground for the development of semantic representations, and is a key component in natural language understanding evaluation benchmarks. Models that understand entailment should encode both the premise and the hypothesis. However, experiments by Poliak et al. revealed a strong preference of these models towards patterns observed only in the hypothesis, based on a 10-dataset comparison. Their results indicated the existence of statistical irregularities present in the hypothesis that bias the model into performing competitively with the state of the art. While recast datasets provide large-scale generation of NLI instances due to minimal human intervention, the papers that generate them do not provide fine-grained analysis of the potential statistical patterns that can bias NLI models. In this work, we analyze hypothesis-only models trained on one of the recast datasets provided in Poliak et al. for word-level patterns. Our results indicate the existence of potential lexical biases that could contribute to inflating the model performance.

Exploring BERT's Sensitivity to Lexical Cues using Tests from Semantic Priming

Oct 06, 2020

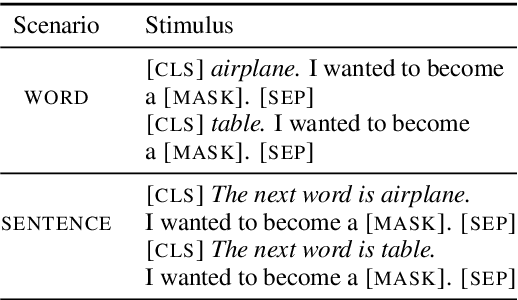

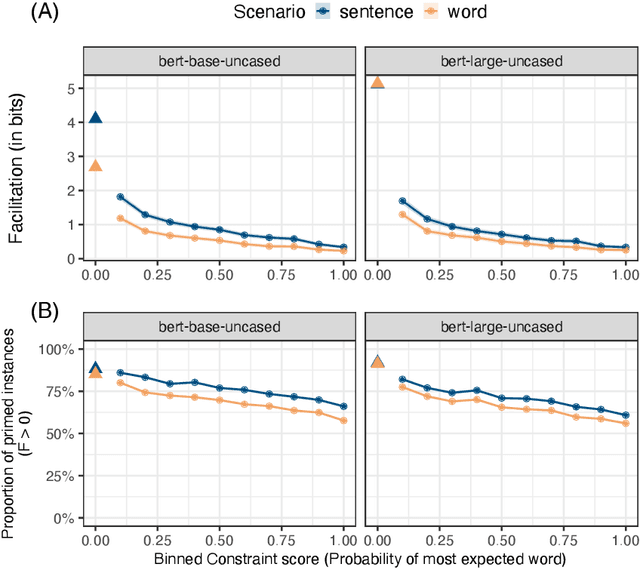

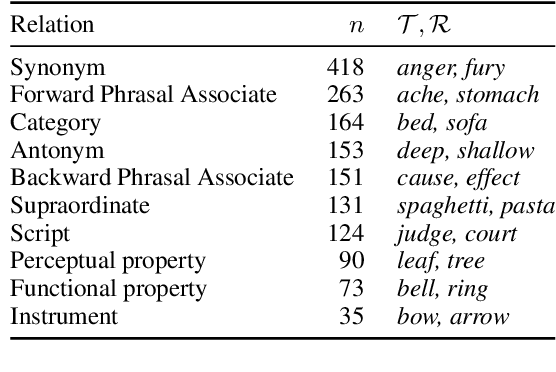

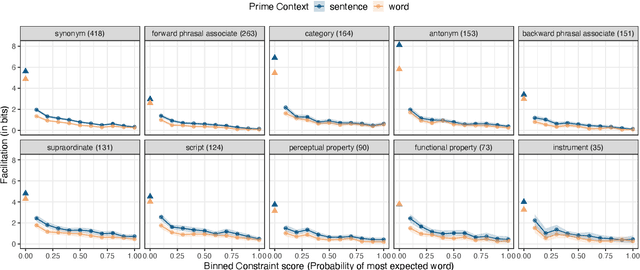

Abstract: Models trained to estimate word probabilities in context have become ubiquitous in natural language processing. How do these models use lexical cues in context to inform their word probabilities? To answer this question, we present a case study analyzing the pre-trained BERT model with tests informed by semantic priming. Using English lexical stimuli that show priming in humans, we find that BERT too shows "priming," predicting a word with greater probability when the context includes a related word versus an unrelated one. This effect decreases as the amount of information provided by the context increases. Follow-up analysis shows BERT to be increasingly distracted by related prime words as context becomes more informative, assigning lower probabilities to related words. Our findings highlight the importance of considering contextual constraint effects when studying word prediction in these models, and highlight possible parallels with human processing.
