Junchen Jin

Causal-HM: Restoring Physical Generative Logic in Multimodal Anomaly Detection via Hierarchical Modulation

Dec 25, 2025

Abstract:Multimodal Unsupervised Anomaly Detection (UAD) is critical for quality assurance in smart manufacturing, particularly in complex processes like robotic welding. However, existing methods often suffer from causal blindness, treating process modalities (e.g., real-time video, audio, and sensors) and result modalities (e.g., post-weld images) as equal feature sources, thereby ignoring the inherent physical generative logic. Furthermore, the heterogeneity gap between high-dimensional visual data and low-dimensional sensor signals frequently leads to critical process context being drowned out. In this paper, we propose Causal-HM, a unified multimodal UAD framework that explicitly models the physical Process to Result dependency. Specifically, our framework incorporates two key innovations: a Sensor-Guided CHM Modulation mechanism that utilizes low-dimensional sensor signals as context to guide high-dimensional audio-visual feature extraction, and a Causal-Hierarchical Architecture that enforces a unidirectional generative mapping to identify anomalies that violate physical consistency. Extensive experiments on our newly constructed Weld-4M benchmark across four modalities demonstrate that Causal-HM achieves a state-of-the-art (SOTA) I-AUROC of 90.7%. Code will be released after the paper is accepted.

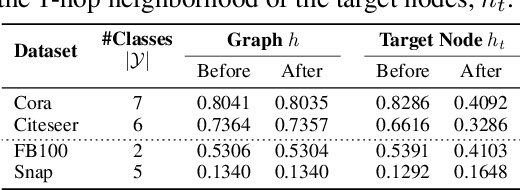

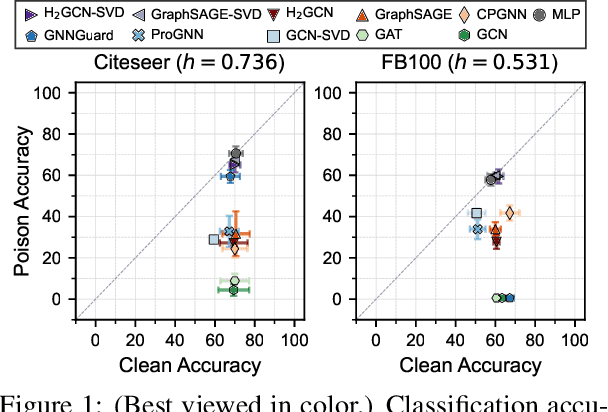

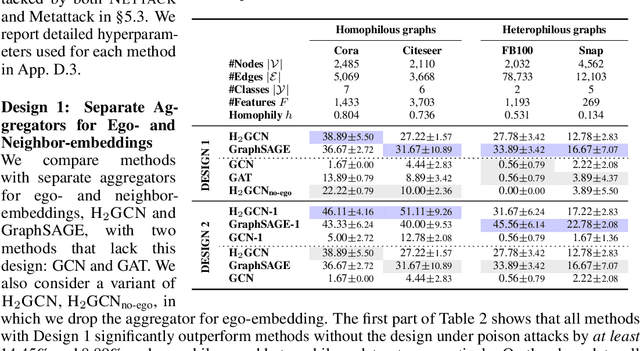

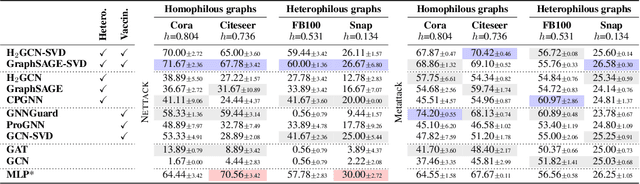

Improving Robustness of Graph Neural Networks with Heterophily-Inspired Designs

Jun 14, 2021

Abstract:Recent studies have exposed that many graph neural networks (GNNs) are sensitive to adversarial attacks, and can suffer from performance loss if the graph structure is intentionally perturbed. A different line of research has shown that many GNN architectures implicitly assume that the underlying graph displays homophily, i.e., connected nodes are more likely to have similar features and class labels, and perform poorly if this assumption is not fulfilled. In this work, we formalize the relation between these two seemingly different issues. We theoretically show that in the standard scenario in which node features exhibit homophily, impactful structural attacks always lead to increased levels of heterophily. Then, inspired by GNN architectures that target heterophily, we present two designs -- (i) separate aggregators for ego- and neighbor-embeddings, and (ii) a reduced scope of aggregation -- that can significantly improve the robustness of GNNs. Our extensive empirical evaluations show that GNNs featuring merely these two designs can achieve significantly improved robustness compared to the best-performing unvaccinated model with 24.99% gain in average performance under targeted attacks, while having smaller computational overhead than existing defense mechanisms. Furthermore, these designs can be readily combined with explicit defense mechanisms to yield state-of-the-art robustness with up to 18.33% increase in performance under attacks compared to the best-performing vaccinated model.
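The first design named in the abstract above, separate aggregators for ego- and neighbor-embeddings, can be illustrated with a small sketch. This is not the authors' implementation: the function names, the mean aggregator, the concatenation step, and the ReLU are all assumptions chosen for illustration, and the edge homophily ratio used here (fraction of edges joining same-label nodes) is one common measure that may differ from the one in the paper. The second design, a reduced scope of aggregation, is not specified in the abstract and is not sketched.

```python
from typing import Dict, Hashable, List, Tuple

Vector = List[float]


def edge_homophily(edges: List[Tuple[Hashable, Hashable]],
                   labels: Dict[Hashable, Hashable]) -> float:
    """Fraction of edges whose endpoints share a label (assumed measure)."""
    if not edges:
        return 0.0
    same = sum(1 for u, v in edges if labels[u] == labels[v])
    return same / len(edges)


def matvec(W: List[Vector], x: Vector) -> Vector:
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def mean_vectors(vectors: List[Vector], dim: int) -> Vector:
    """Element-wise mean; a zero vector if there are no inputs."""
    if not vectors:
        return [0.0] * dim
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def separate_aggregation_layer(features: Dict[Hashable, Vector],
                               neighbors: Dict[Hashable, List[Hashable]],
                               W_ego: List[Vector],
                               W_nbr: List[Vector]) -> Dict[Hashable, Vector]:
    """Hypothetical layer: the ego embedding and the mean neighbor embedding
    are transformed by different weights and concatenated, so the node's own
    features are never averaged together with its neighbors'."""
    out = {}
    for node, x in features.items():
        nbrs = [features[n] for n in neighbors.get(node, []) if n != node]
        nbr_mean = mean_vectors(nbrs, len(x))
        combined = matvec(W_ego, x) + matvec(W_nbr, nbr_mean)  # list concat
        out[node] = [max(0.0, a) for a in combined]  # ReLU
    return out


if __name__ == "__main__":
    identity = [[1.0, 0.0], [0.0, 1.0]]
    features = {0: [1.0, 0.0], 1: [1.0, 0.0], 2: [0.0, 1.0]}
    labels = {0: "a", 1: "a", 2: "b"}

    # Clean graph: only the same-class edge 0-1.
    print(edge_homophily([(0, 1)], labels))
    clean = separate_aggregation_layer(
        features, {0: [1], 1: [0], 2: []}, identity, identity)

    # Perturbed graph: an inserted cross-class edge 0-2 lowers homophily.
    print(edge_homophily([(0, 1), (0, 2)], labels))
    attacked = separate_aggregation_layer(
        features, {0: [1, 2], 1: [0], 2: [0]}, identity, identity)

    # The first half of node 0's output (the ego part) is unchanged.
    print(clean[0], attacked[0])
```

In this toy setup the inserted cross-class edge changes only the neighbor half of node 0's representation, which gives some intuition for why keeping the ego embedding separate might limit the damage of a structural perturbation.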
