John Lagergren

Few-Shot Learning Enables Population-Scale Analysis of Leaf Traits in Populus trichocarpa

Jan 24, 2023

Abstract: Plant phenotyping is typically a time-consuming and expensive endeavor, requiring large groups of researchers to meticulously measure biologically relevant plant traits, and is the main bottleneck in understanding plant adaptation and the genetic architecture underlying complex traits at population scale. In this work, we address these challenges by leveraging few-shot learning with convolutional neural networks (CNNs) to segment the leaf body and visible venation of 2,906 P. trichocarpa leaf images obtained in the field. In contrast to previous methods, our approach (i) does not require experimental or image pre-processing, (ii) uses the raw RGB images at full resolution, and (iii) requires very few samples for training (e.g., just eight images for vein segmentation). Traits relating to leaf morphology and vein topology are extracted from the resulting segmentations using traditional open-source image-processing tools, validated using real-world physical measurements, and used to conduct a genome-wide association study to identify genes controlling the traits. In this way, the current work is designed to provide the plant phenotyping community with (i) methods for fast and accurate image-based feature extraction that require minimal training data, and (ii) a new population-scale data set, including 68 different leaf phenotypes, for domain scientists and machine learning researchers. All of the few-shot learning code, data, and results are made publicly available.
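As a rough illustration of the trait-extraction step mentioned in the abstract, the sketch below computes a few simple morphological measurements from a binary leaf mask using NumPy. It is not the authors' pipeline: the function name `leaf_traits`, the `mm_per_pixel` scale parameter, and the particular traits chosen are assumptions made for illustration only.

```python
import numpy as np


def leaf_traits(mask, mm_per_pixel=1.0):
    """Compute simple shape traits from a binary segmentation mask.

    Hypothetical helper: `mask` is a 2-D array where nonzero pixels
    belong to the leaf; `mm_per_pixel` is an assumed image scale.
    """
    mask = np.asarray(mask, dtype=bool)
    rows = np.flatnonzero(mask.any(axis=1))  # rows containing leaf pixels
    cols = np.flatnonzero(mask.any(axis=0))  # columns containing leaf pixels
    if rows.size == 0:
        raise ValueError("mask contains no foreground pixels")

    # Axis-aligned bounding-box extent, converted to physical units.
    bbox_height = (rows[-1] - rows[0] + 1) * mm_per_pixel
    bbox_width = (cols[-1] - cols[0] + 1) * mm_per_pixel

    # Area is the pixel count scaled by the area of one pixel.
    area = float(mask.sum()) * mm_per_pixel ** 2

    return {
        "area": area,
        "bbox_height": bbox_height,
        "bbox_width": bbox_width,
        "aspect_ratio": bbox_height / bbox_width,
    }
```

Measurements like these only approximate leaf length and width when the leaf is aligned with the image axes; a real pipeline would more likely rely on an established image-processing library and calibrate the scale against physical measurements, as the abstract describes.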

Region Growing with Convolutional Neural Networks for Biomedical Image Segmentation

Sep 23, 2020

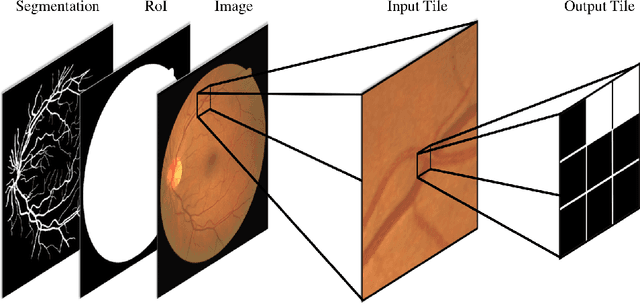

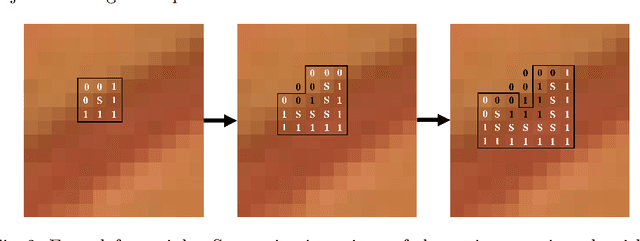

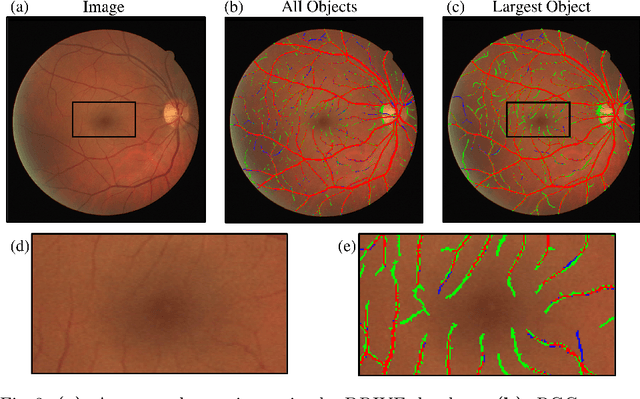

Abstract: In this paper, we present a methodology that uses convolutional neural networks (CNNs) for segmentation by iteratively growing predicted mask regions in each coordinate direction. The CNN is used to predict class probability scores in a small neighborhood of the center pixel in a tile of an image. We use a threshold on the CNN probability scores to determine whether pixels are added to the region, and the iteration continues until no new pixels are added. Our method is able to achieve high segmentation accuracy and preserve biologically realistic morphological features while leveraging small amounts of training data and maintaining computational efficiency. Using retinal blood vessel images from the DRIVE database, we found that our method is more accurate than a fully convolutional semantic segmentation CNN on several evaluation metrics.
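The iteration described in this abstract can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the paper's implementation: the CNN is replaced by a hypothetical stand-in, `center_intensity`, which simply returns the pixel values around the tile center, and the names `grow_region`, `predict`, `tile`, and `threshold` are illustrative. It also assumes a single-channel image scaled to [0, 1].

```python
import numpy as np


def center_intensity(tile_px):
    """Stand-in for the CNN (assumption): return the 3x3 block of
    intensities around the tile center as 'probability' scores."""
    mid = tile_px.shape[0] // 2
    return tile_px[mid - 1:mid + 2, mid - 1:mid + 2]


def grow_region(image, seeds, predict, tile=9, threshold=0.5):
    """Grow a boolean mask outward from seed pixels.

    `predict` maps a (tile x tile) patch to a small square array of
    scores for the neighborhood of the patch's center pixel.
    """
    h, w = image.shape
    half_t = tile // 2
    # Reflect-pad so that tiles centered on border pixels are full size.
    padded = np.pad(image, half_t, mode="reflect")

    mask = np.zeros((h, w), dtype=bool)
    for r, c in seeds:
        mask[r, c] = True
    frontier = list(seeds)

    # Repeat until an iteration adds no new pixels to the region.
    while frontier:
        next_frontier = []
        for r, c in frontier:
            # In padded coordinates this slice is centered on (r, c).
            probs = np.asarray(predict(padded[r:r + tile, c:c + tile]))
            half_h = probs.shape[0] // 2
            for dr in range(-half_h, half_h + 1):
                for dc in range(-half_h, half_h + 1):
                    rr, cc = r + dr, c + dc
                    if not (0 <= rr < h and 0 <= cc < w) or mask[rr, cc]:
                        continue
                    if probs[dr + half_h, dc + half_h] > threshold:
                        mask[rr, cc] = True
                        next_frontier.append((rr, cc))
        frontier = next_frontier
    return mask
```

Because only pixels adjacent to the current region are ever evaluated, bright pixels that are not connected to a seed are never added, which is one plausible reason such a scheme preserves connected, vessel-like structures. Swapping `center_intensity` for a trained model's prediction function would leave the loop unchanged.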
