Jinsong Hu

Twisting Signals for Joint Radar-Communications: An OAM Vortex Beam Approach

Sep 19, 2025

Abstract: Orbital angular momentum (OAM) technology has attracted much research interest in recent years because of its characteristic helical phase front, which twists around the propagation axis, and the natural orthogonality among different OAM states, which together allow it to encode more degrees of freedom than classical planar beams. Leveraging these features, OAM techniques have been applied to wireless communication systems to enhance spectral efficiency and to radar systems to distinguish spatial targets without beam scanning. Building on these properties, we propose an OAM-based millimeter-wave joint radar-communications (JRC) system comprising a bi-static automotive radar and vehicle-to-vehicle (V2V) communications. Unlike existing uniform circular array (UCA) based OAM systems, in which each element is an isotropic antenna, we adopt an OAM spatial modulation scheme that uses a uniform linear array (ULA) in which each element is a traveling-wave antenna, producing multiple Laguerre-Gaussian (LG) vortex beams simultaneously. Specifically, we first build a novel bi-static automotive OAM-JRC model that embeds communication messages in a radar signal, and then design an algorithm that estimates target position and velocity parameters using only radar frames. Next, an OAM-based mode-division multiplexing (MDM) strategy between radar and JRC frames is presented to ensure the identifiability and recovery of the JRC parameters. Furthermore, we analyze the performance of the JRC system by deriving recovery guarantees and the Cramér-Rao lower bound (CRLB) of the radar target parameters and by evaluating the bit error rate (BER) of the communication link. Our numerical experiments validate the effectiveness of the proposed OAM-based JRC system and parameter estimation method.
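The orthogonality among OAM states mentioned in the abstract can be illustrated with a small numerical check. The sketch below is not the paper's model: it assumes an idealized azimuthal phase factor exp(i·l·φ) for mode index l and ignores the radial LG profile, the array geometry, and the channel. The function name `oam_inner_product` and the chosen mode set are hypothetical, introduced only for illustration.

```python
import cmath
import math


def oam_inner_product(l1, l2, samples=256):
    """Approximate (1/2π) ∫ exp(i·l1·φ) · conj(exp(i·l2·φ)) dφ over [0, 2π).

    Uses uniform sampling of the azimuth angle φ. Assumes idealized
    OAM modes described only by their helical phase factor.
    """
    total = 0j
    for k in range(samples):
        phi = 2 * math.pi * k / samples
        total += cmath.exp(1j * l1 * phi) * cmath.exp(-1j * l2 * phi)
    return total / samples


# Hypothetical set of OAM mode indices used for the check.
modes = [-2, -1, 0, 1, 2]

# Gram matrix of inner-product magnitudes between every pair of modes.
gram = [[abs(oam_inner_product(a, b)) for b in modes] for a in modes]

for row in gram:
    print(" ".join(f"{value:.3f}" for value in row))
```

Under these assumptions the Gram matrix is numerically close to the identity: each mode has unit overlap with itself and near-zero overlap with every other mode, which is the property that lets distinct OAM states carry separate signals.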

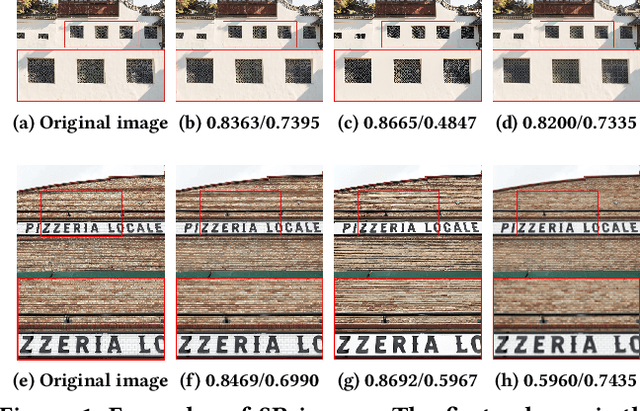

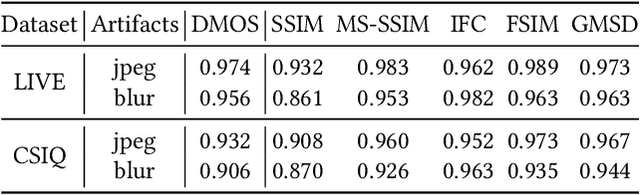

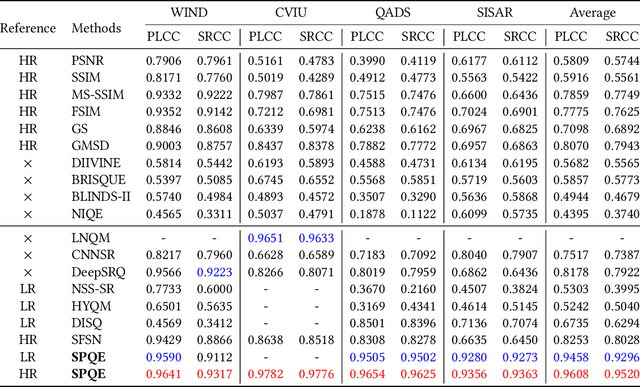

SPQE: Structure-and-Perception-Based Quality Evaluation for Image Super-Resolution

May 07, 2022

Abstract: The image Super-Resolution (SR) technique has greatly improved the visual quality of images by enhancing their resolution. It also calls for an efficient SR Image Quality Assessment (SR-IQA) to evaluate SR algorithms and the images they generate. In this paper, we focus on SR-IQA under deep learning and propose a Structure-and-Perception-based Quality Evaluation (SPQE). In emerging deep-learning-based SR, a generated high-quality, visually pleasing image may have different structures from its corresponding low-quality image. In such cases, how to balance the quality scores between no-reference perceptual quality and referenced structural similarity is a critical issue. To help ease this problem, we give a theoretical analysis of this tradeoff and further calculate adaptive weights for the two types of quality scores. We also propose two deep-learning-based regressors to model the no-reference and referenced scores. By combining the quality scores and their weights, we propose a unified SPQE metric for SR-IQA. Experimental results demonstrate that the proposed method outperforms state-of-the-art methods on different datasets.
