Jingshu Li

AI-exhibited Personality Traits Can Shape Human Self-concept through Conversations

Jan 19, 2026

Abstract:Recent Large Language Model (LLM) based AI can exhibit recognizable and measurable personality traits during conversations to improve user experience. However, as human understandings of their personality traits can be affected by their interaction partners' traits, a potential risk is that AI traits may shape and bias users' self-concept of their own traits. To explore the possibility, we conducted a randomized behavioral experiment. Our results indicate that after conversations about personal topics with an LLM-based AI chatbot using GPT-4o default personality traits, users' self-concepts aligned with the AI's measured personality traits. The longer the conversation, the greater the alignment. This alignment led to increased homogeneity in self-concepts among users. We also observed that the degree of self-concept alignment was positively associated with users' conversation enjoyment. Our findings uncover how AI personality traits can shape users' self-concepts through human-AI conversation, highlighting both risks and opportunities. We provide important design implications for developing more responsible and ethical AI systems.

Exploring the Effects of Chatbot Anthropomorphism and Human Empathy on Human Prosocial Behavior Toward Chatbots

Jun 25, 2025

Abstract:Chatbots are increasingly integrated into people's lives and are widely used to help people. Recently, there has also been growing interest in the reverse direction (humans help chatbots) due to a wide range of benefits including better chatbot performance, human well-being, and collaborative outcomes. However, little research has explored the factors that motivate people to help chatbots. To address this gap, we draw on the Computers Are Social Actors (CASA) framework to examine how chatbot anthropomorphism (including human-like identity, emotional expression, and non-verbal expression) influences human empathy toward chatbots and their subsequent prosocial behaviors and intentions. We also explore people's own interpretations of their prosocial behaviors toward chatbots. We conducted an online experiment (N = 244) in which chatbots made mistakes in a collaborative image labeling task and explained the reasons to participants. We then measured participants' prosocial behaviors and intentions toward the chatbots. Our findings revealed that human identity and emotional expression of chatbots increased participants' prosocial behavior and intention toward chatbots, with empathy mediating these effects. Qualitative analysis further identified two motivations for participants' prosocial behaviors: empathy for the chatbot and perceiving the chatbot as human-like. We discuss the implications of these results for understanding and promoting human prosocial behaviors toward chatbots.

Wild Narratives: Exploring the Effects of Animal Chatbots on Empathy and Positive Attitudes toward Animals

Nov 09, 2024

Abstract:Rises in the number of animal abuse cases are reported around the world. While chatbots have been effective in influencing their users' perceptions and behaviors, little if any research has hitherto explored the design of chatbots that embody animal identities for the purpose of eliciting empathy toward animals. We therefore conducted a mixed-methods experiment to investigate how specific design cues in such chatbots can shape their users' perceptions of both the chatbots' identities and the type of animal they represent. Our findings indicate that such chatbots can significantly increase empathy, improve attitudes, and promote prosocial behavioral intentions toward animals, particularly when they incorporate emotional verbal expressions and authentic details of such animals' lives. These results expand our understanding of chatbots with non-human identities and highlight their potential for use in conservation initiatives, suggesting a promising avenue whereby technology could foster a more informed and empathetic society.

Overconfident and Unconfident AI Hinder Human-AI Collaboration

Feb 12, 2024

Abstract:As artificial intelligence (AI) advances, human-AI collaboration has become increasingly prevalent across both professional and everyday settings. In such collaboration, AI can express its confidence level about its performance, serving as a crucial indicator for humans to evaluate AI's suggestions. However, AI may exhibit overconfidence or underconfidence, meaning that its expressed confidence is higher or lower than its actual performance, which may lead humans to mistakenly evaluate AI advice. Our study investigates the influences of AI's overconfidence and underconfidence on human trust, their acceptance of AI suggestions, and collaboration outcomes. Our study reveals that disclosing AI confidence levels and performance feedback facilitates better recognition of AI confidence misalignments. However, participants tend to withhold their trust upon perceiving such misalignments, leading to a rejection of AI suggestions and subsequently poorer performance in collaborative tasks. Conversely, without such information, participants struggle to identify misalignments, resulting in either the neglect of correct AI advice or the following of incorrect AI suggestions, adversely affecting collaboration. This study offers valuable insights for enhancing human-AI collaboration by underscoring the importance of aligning AI's expressed confidence with its actual performance and the necessity of calibrating human trust towards AI confidence.

Optimization-based Alignment for Strapdown Inertial Navigation System Comparison and Extension

Nov 23, 2016

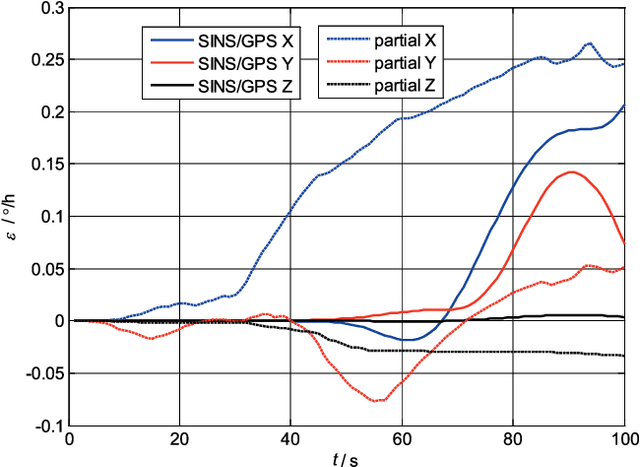

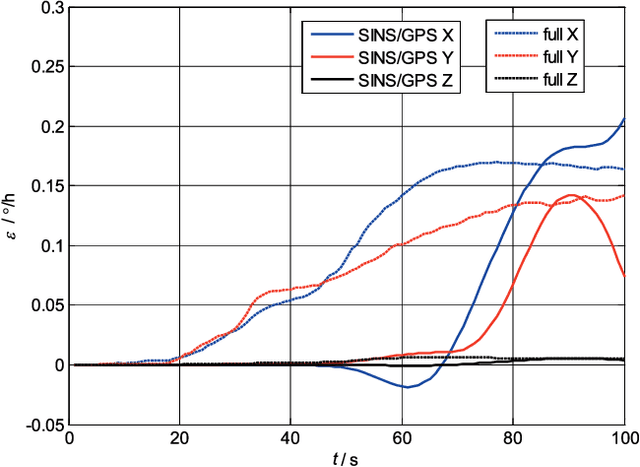

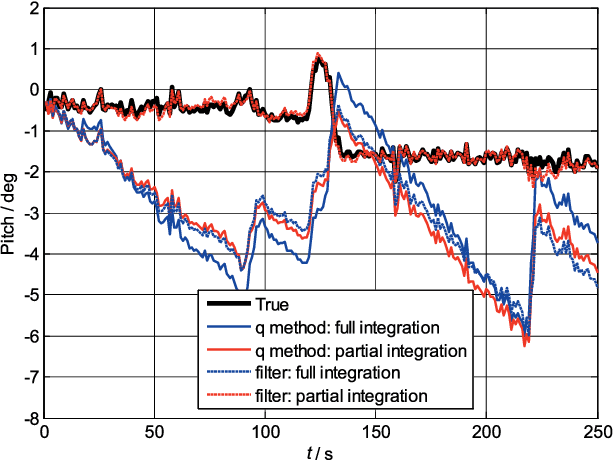

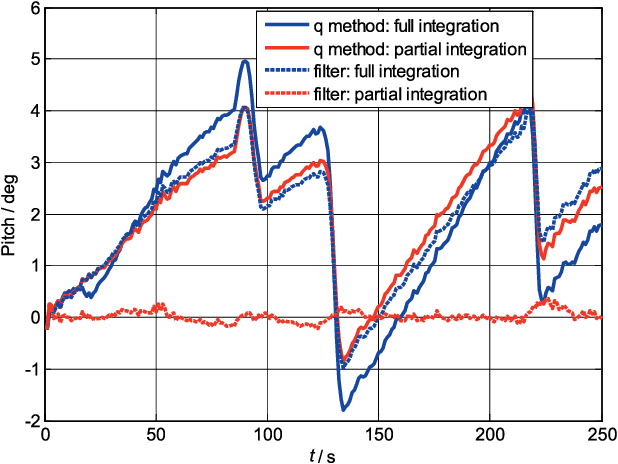

Abstract:In this paper, the optimization-based alignment (OBA) methods are investigated with a main focus on the vector observation construction procedures for the strapdown inertial navigation system (SINS). The contributions of this study are twofold. First, the OBA method is extended to estimate the gyroscope biases coupled with the attitude, based on the construction process of the existing OBA methods. This extension transforms the initial alignment into an attitude estimation problem which can be solved using nonlinear filtering algorithms. The second contribution is the comprehensive evaluation of the OBA methods and their extensions with different vector observation construction procedures in terms of convergence speed and steady-state estimate, using field test data collected from different grades of SINS. This study is expected to facilitate the selection of appropriate OBA methods for different grades of SINS.
