Jim Fleming

Reasoning and Generalization in RL: A Tool Use Perspective

Jul 03, 2019

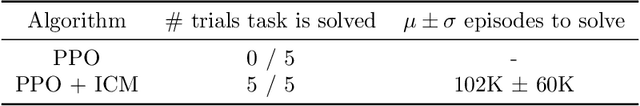

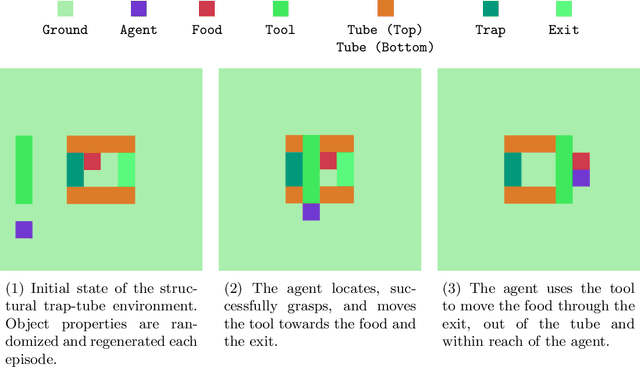

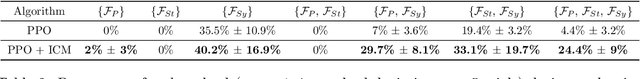

Abstract: Learning to use tools to solve a variety of tasks is an innate ability of humans and has also been observed in animals in the wild. However, the underlying mechanisms required to learn to use tools are abstract and widely contested in the literature. In this paper, we study tool use in the context of reinforcement learning and propose a framework for analyzing generalization inspired by a classic study of tool-using behavior, the trap-tube task. Recently, it has become common in reinforcement learning to measure generalization performance on a single test set of environments. We instead propose transfers that produce multiple test sets, each used to measure a specified type of generalization, inspired by abilities demonstrated by animal and human tool users. The source code to reproduce our experiments is publicly available at https://github.com/fomorians/gym_tool_use.

Contextual Recurrent Neural Networks

Feb 09, 2019

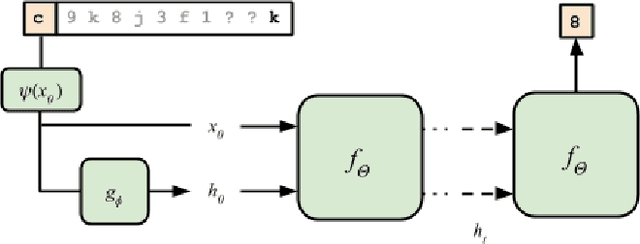

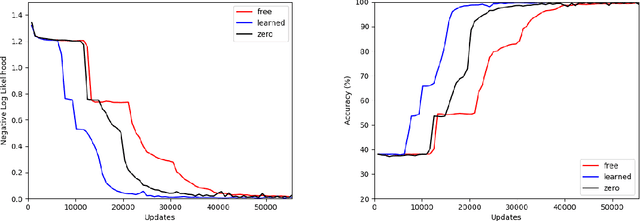

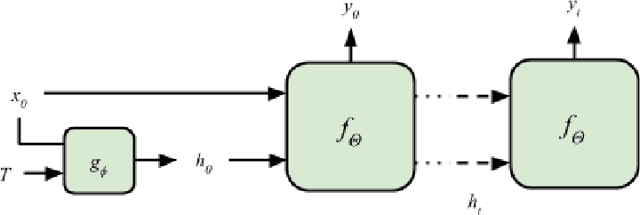

Abstract: There is an implicit assumption that by unfolding recurrent neural networks (RNNs) in finite time, the misspecification of choosing a zero value for the initial hidden state is mitigated by later time steps. This assumption has been shown to work in practice, and alternative initializations, though sometimes suggested, are often overlooked. In this paper, we propose a method of parameterizing the initial hidden state of an RNN. The resulting architecture, referred to as a Contextual RNN, can be trained end-to-end. Performance on an associative retrieval task is found to improve when the RNN's initial hidden state is conditioned on contextual information from the input sequence. Furthermore, we propose a novel method of conditionally generating sequences using the hidden state parameterization of the Contextual RNN.
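To make the idea of a parameterized initial hidden state concrete, here is a minimal pure-Python sketch. It is not the paper's implementation: the abstract does not say how the context is computed, so the mean-pooling context function, the names `initial_state`, `run_rnn`, and `W_c`, and all dimensions are assumptions chosen for illustration. The sketch only runs a forward pass and compares a zero initial state with a context-conditioned one.

```python
import math
import random


def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def rand_matrix(rows, cols, rng, scale=0.5):
    """Small random weight matrix (illustrative initialization only)."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


def initial_state(xs, W_c):
    """Hypothetical context function: mean-pool the inputs, then project.

    Assumption: the paper's actual context function may differ.
    """
    n = len(xs)
    context = [sum(x[i] for x in xs) / n for i in range(len(xs[0]))]
    return [math.tanh(v) for v in matvec(W_c, context)]


def run_rnn(xs, W_x, W_h, h0):
    """Plain tanh RNN: h_t = tanh(W_x x_t + W_h h_{t-1}), starting from h0."""
    h = h0
    for x in xs:
        pre = [a + b for a, b in zip(matvec(W_x, x), matvec(W_h, h))]
        h = [math.tanh(p) for p in pre]
    return h


rng = random.Random(0)
input_dim, hidden_dim = 3, 4
W_x = rand_matrix(hidden_dim, input_dim, rng)
W_h = rand_matrix(hidden_dim, hidden_dim, rng)
W_c = rand_matrix(hidden_dim, input_dim, rng)  # parameters of the initial state

# A toy input sequence of five time steps.
xs = [[rng.uniform(-1, 1) for _ in range(input_dim)] for _ in range(5)]

h_zero = run_rnn(xs, W_x, W_h, [0.0] * hidden_dim)     # conventional zero init
h_ctx = run_rnn(xs, W_x, W_h, initial_state(xs, W_c))  # context-conditioned init
```

In a trainable version, `W_c` would be learned jointly with `W_x` and `W_h`, which is what lets the whole model be trained end-to-end as the abstract describes.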
