Jean-François Lalonde

Physics-Based Rendering for Improving Robustness to Rain

Aug 27, 2019

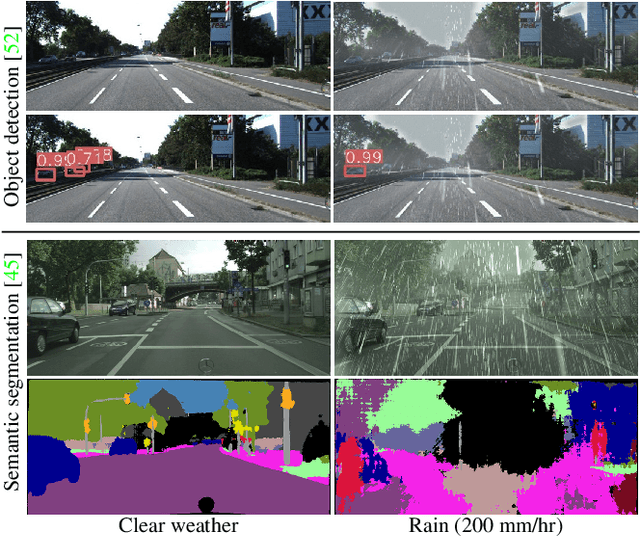

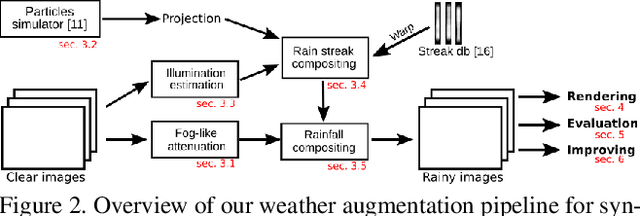

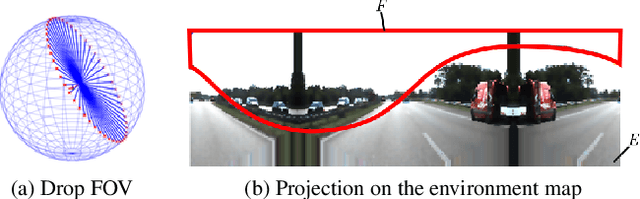

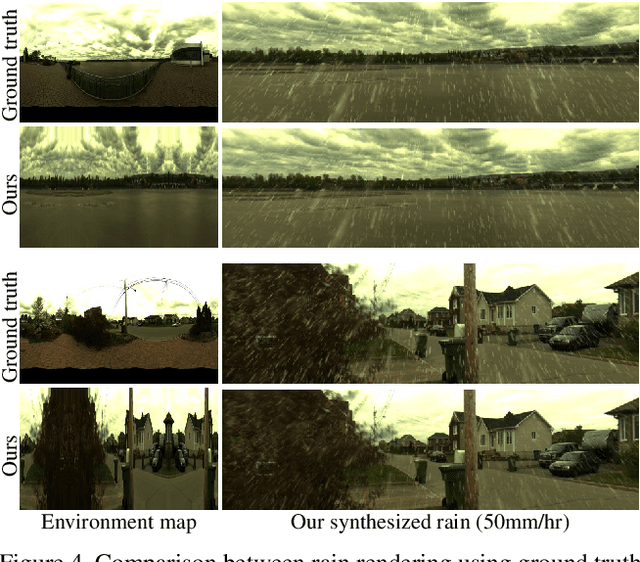

Abstract: To improve the robustness to rain, we present a physically-based rain rendering pipeline for realistically inserting rain into clear weather images. Our rendering relies on a physical particle simulator, an estimation of the scene lighting and an accurate rain photometric modeling to augment images with arbitrary amounts of realistic rain or fog. We validate our rendering with a user study, showing that our rain is judged 40% more realistic than the state of the art. Using our generated weather-augmented KITTI and Cityscapes datasets, we conduct a thorough evaluation of deep object detection and semantic segmentation algorithms and show that their performance decreases in degraded weather, on the order of 15% for object detection and 60% for semantic segmentation. Furthermore, we show that refining existing networks with our augmented images improves the robustness of both object detection and semantic segmentation algorithms. We experiment on nuScenes and measure an improvement of 15% for object detection and 35% for semantic segmentation compared to original rainy performance. Augmented databases and code are available on the project page.

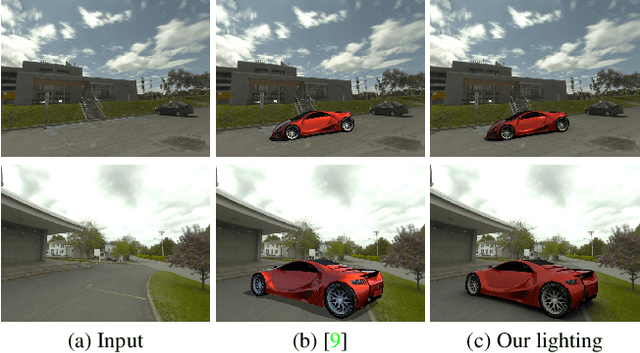

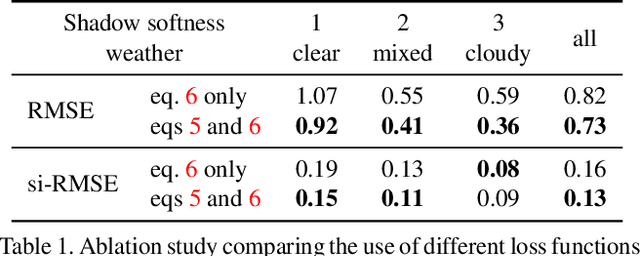

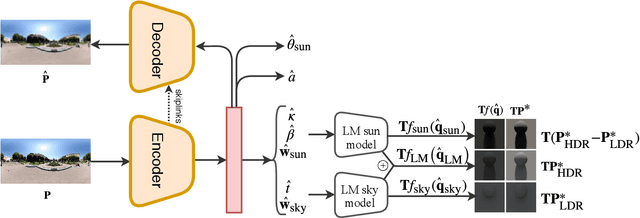

All-Weather Deep Outdoor Lighting Estimation

Jun 12, 2019

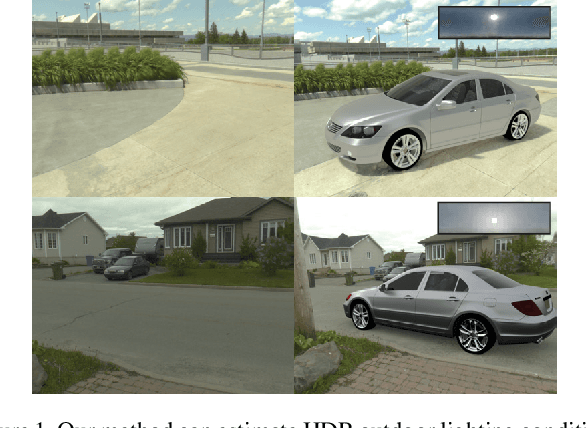

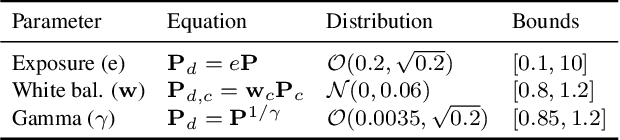

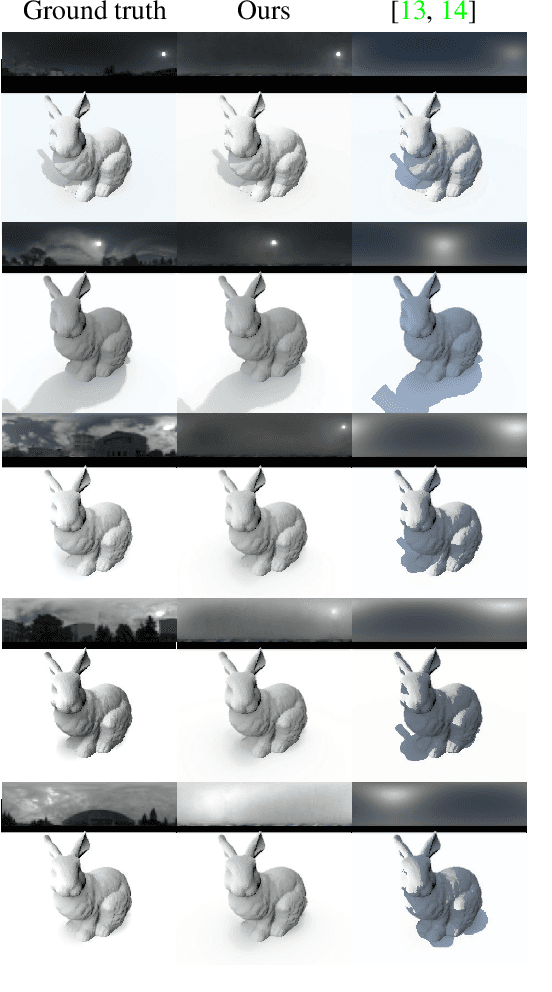

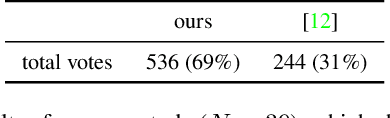

Abstract: We present a neural network that predicts HDR outdoor illumination from a single LDR image. At the heart of our work is a method to accurately learn HDR lighting from LDR panoramas under any weather condition. We achieve this by training another CNN (on a combination of synthetic and real images) to take as input an LDR panorama, and regress the parameters of the Lalonde-Matthews outdoor illumination model. This model is trained such that it a) reconstructs the appearance of the sky, and b) renders the appearance of objects lit by this illumination. We use this network to label a large-scale dataset of LDR panoramas with lighting parameters and use them to train our single image outdoor lighting estimation network. We demonstrate, via extensive experiments, that both our panorama and single image networks outperform the state of the art, and unlike prior work, are able to handle weather conditions ranging from fully sunny to overcast skies.

Fast Spatially-Varying Indoor Lighting Estimation

Jun 10, 2019

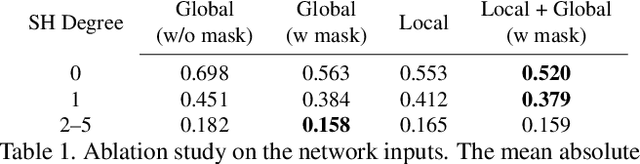

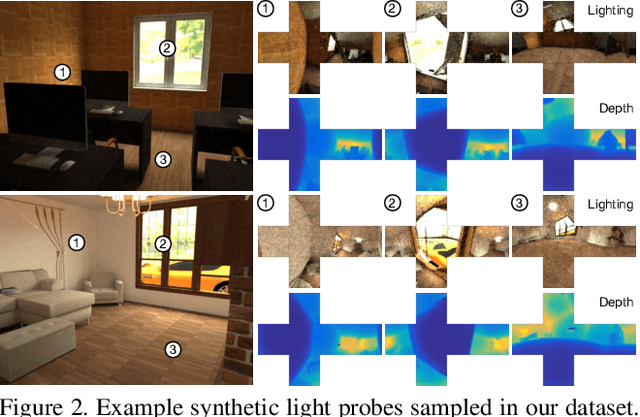

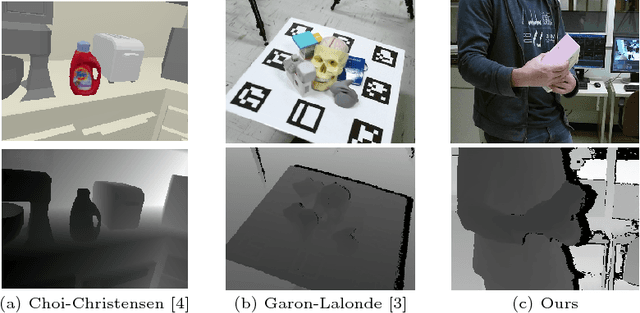

Abstract: We propose a real-time method to estimate spatially-varying indoor lighting from a single RGB image. Given an image and a 2D location in that image, our CNN estimates a 5th-order spherical harmonic representation of the lighting at the given location in less than 20ms on a laptop mobile graphics card. While existing approaches estimate a single, global lighting representation or require depth as input, our method reasons about local lighting without requiring any geometry information. We demonstrate, through quantitative experiments including a user study, that our results achieve lower lighting estimation errors and are preferred by users over the state of the art. Our approach can be used directly for augmented reality applications, where a virtual object is relit realistically at any position in the scene in real time.

* CVPR19
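To give a sense of the size of the lighting representation mentioned in the abstract above, the following is a minimal sketch that counts spherical harmonic coefficients. It assumes that "5th order" means all degrees from 0 through 5, and that one set of coefficients is stored per RGB channel; both are assumptions of this sketch, and the function name `sh_coefficient_count` is hypothetical.

```python
def sh_coefficient_count(max_degree: int, channels: int = 3) -> int:
    """Count real spherical harmonic coefficients up to max_degree.

    Degree l contributes 2*l + 1 basis functions, so degrees 0..L
    contribute (L + 1)**2 in total. The per-channel layout (default
    3 for RGB) is an assumption of this sketch.
    """
    if max_degree < 0:
        raise ValueError("max_degree must be non-negative")
    if channels < 1:
        raise ValueError("channels must be at least 1")
    per_channel = (max_degree + 1) ** 2
    return per_channel * channels


if __name__ == "__main__":
    # Coefficients for a single channel, degrees 0 through 5.
    print(sh_coefficient_count(5, channels=1))
    # Coefficients for three color channels.
    print(sh_coefficient_count(5))
```

Under these assumptions the network output is a short fixed-length vector per queried location, which is consistent with the real-time use case the abstract describes.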

Structural Decompositions for End-to-End Relighting

Jun 07, 2019

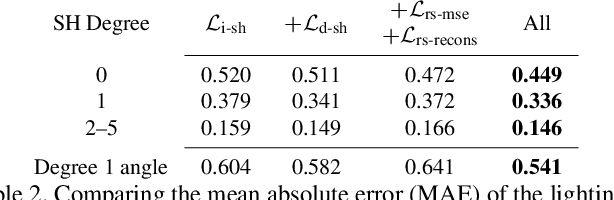

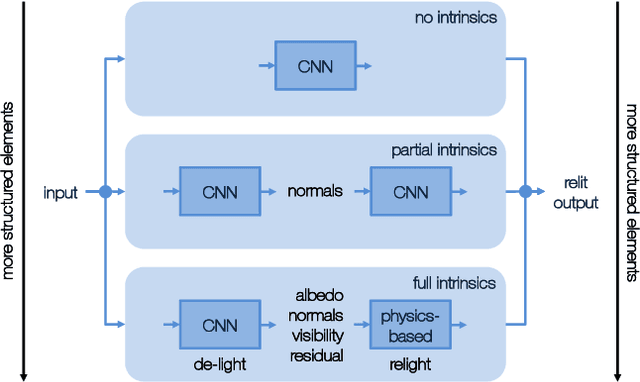

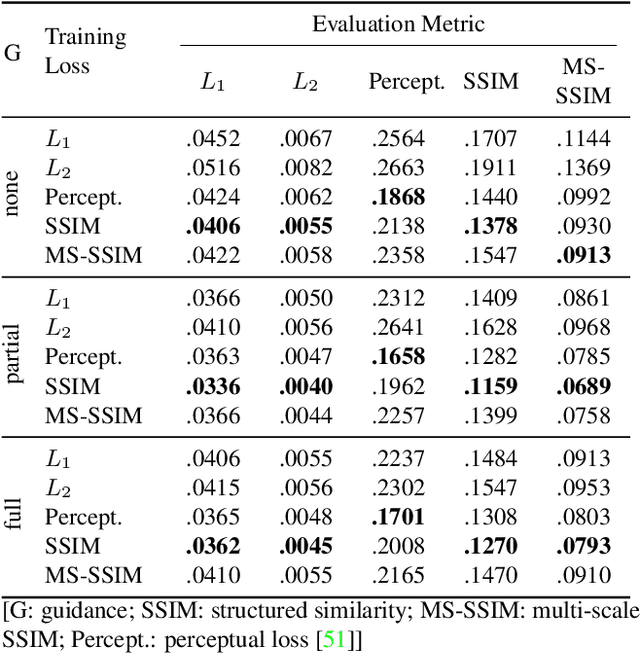

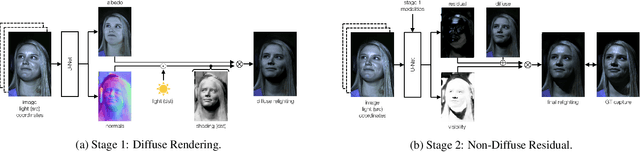

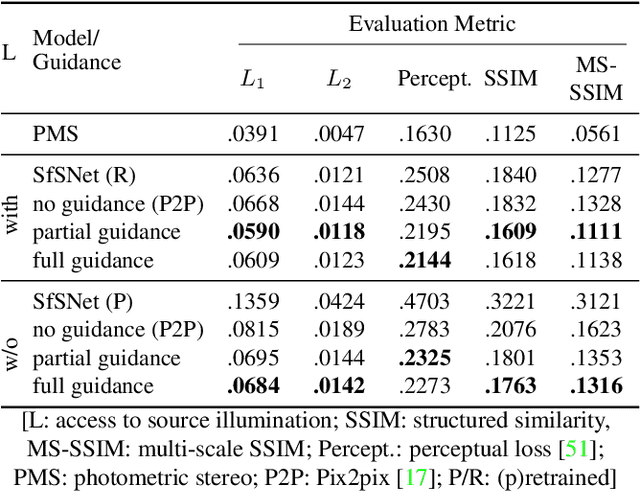

Abstract: Relighting is an essential step in artificially transferring an object from one image into another environment. For example, a believable teleconference in Augmented Reality requires a portrait recorded in the source environment to be displayed and relit consistently with the light configuration of the destination scene. In this paper, we investigate architectures for learning to both de-light and relight an image of a human face end-to-end. The architectures vary in how much they enforce physically-based image formation and rendering constraints. The most structured model decomposes the input image into intrinsic components according to a diffuse physics-based image formation model and augments the render to relight including non-diffuse effects. An intermediate model uses fewer intrinsic constraints and the least structured model makes no assumptions on the image formation. To train our models and evaluate the approach, we collected portraits of 21 subjects with various expressions and poses, each in a sequence of 32 individual light sources in a controlled light stage setup. Our method leads to precise and believable relighting results in challenging illumination conditions and poses, including when the subject is facing away from the camera. We compare our method to state-of-the-art relighting approaches and illustrate its superiority in a series of quantitative and qualitative experiments.

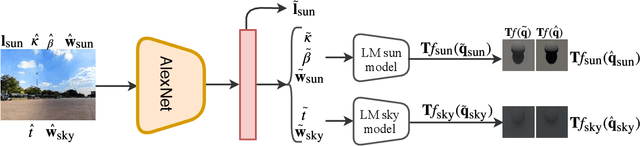

Deep Sky Modeling for Single Image Outdoor Lighting Estimation

May 10, 2019

Abstract: We propose a data-driven learned sky model, which we use for outdoor lighting estimation from a single image. As no large-scale dataset of images and their corresponding ground truth illumination is readily available, we use complementary datasets to train our approach, combining the vast diversity of illumination conditions of SUN360 with the radiometrically calibrated and physically accurate Laval HDR sky database. Our key contribution is to provide a holistic view of both lighting modeling and estimation, solving both problems end-to-end. From a test image, our method can directly estimate an HDR environment map of the lighting without relying on analytical lighting models. We demonstrate the versatility and expressivity of our learned sky model and show that it can be used to recover plausible illumination, leading to visually pleasant virtual object insertions. To further evaluate our method, we capture a dataset of HDR 360° panoramas and show through extensive validation that we significantly outperform previous state-of-the-art.

Deep Photovoltaic Nowcasting

Oct 15, 2018

Abstract: Predicting the short-term power output of a photovoltaic panel is an important task for the efficient management of smart grids. Short-term forecasting at the minute scale, also known as nowcasting, can benefit from sky images captured by regular cameras installed close to the solar panel. However, estimating the weather conditions from these images (sun intensity, cloud appearance and movement, etc.) is a very challenging task that the community has yet to solve with traditional computer vision techniques. In this work, we propose to learn the relationship between sky appearance and the future photovoltaic power output using deep learning. We train several variants of convolutional neural networks which take historical photovoltaic power values and sky images as input and estimate photovoltaic power in the very near future. In particular, we compare three different architectures based on: a multi-layer perceptron (MLP), a convolutional neural network (CNN), and a long short-term memory (LSTM) module. We evaluate our approach quantitatively on a dataset of photovoltaic power values and corresponding images gathered in Kyoto, Japan. Our experiments reveal that the MLP network, already used similarly in previous work, achieves an RMSE skill score of 7% over the commonly-used persistence baseline on the 1-minute future photovoltaic power prediction task. Our CNN-based network improves upon this with a 12% skill score. In contrast, our LSTM-based model, which can learn the temporal dependencies in the data, achieves a 21% RMSE skill score, thus outperforming all other approaches.
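The RMSE skill scores quoted in the abstract above compare a model against a persistence baseline. The sketch below shows one common way such a score is computed, assuming the usual definition `1 - RMSE_model / RMSE_baseline` and a persistence forecast that simply repeats the last observed value. The abstract does not spell out the exact formula used, so this definition and all function names here are assumptions, and the power values are made-up toy data.

```python
import math


def rmse(predictions, targets):
    """Root mean squared error between two equal-length sequences."""
    if len(predictions) != len(targets) or len(targets) == 0:
        raise ValueError("sequences must be non-empty and of equal length")
    squared = [(p - t) ** 2 for p, t in zip(predictions, targets)]
    return math.sqrt(sum(squared) / len(squared))


def persistence_predictions(series):
    """One-step persistence forecast: predict that the next value equals
    the current one. Returns predictions aligned with series[1:]."""
    return list(series[:-1])


def skill_score(model_rmse, baseline_rmse):
    """Relative RMSE improvement over a baseline (assumed definition).

    0 means no better than the baseline, 1 means a perfect forecast.
    """
    if baseline_rmse <= 0:
        raise ValueError("baseline RMSE must be positive")
    return 1.0 - model_rmse / baseline_rmse


if __name__ == "__main__":
    # Hypothetical 1-minute photovoltaic power readings (arbitrary units).
    power = [10.0, 12.0, 11.0, 15.0, 14.0]
    targets = power[1:]
    baseline = persistence_predictions(power)
    # Hypothetical model outputs for the same four target steps.
    model = [11.5, 11.2, 14.0, 14.3]
    score = skill_score(rmse(model, targets), rmse(baseline, targets))
    print(f"skill score: {score:.2%}")
```

Read this way, a 21% skill score means the model's RMSE is 21% lower than that of the persistence forecast on the same test data.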

A Framework for Evaluating 6-DOF Object Trackers

Sep 06, 2018

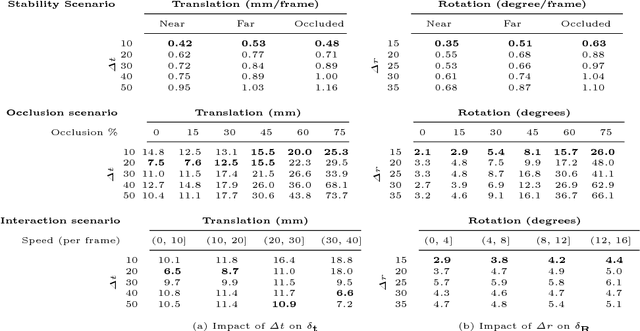

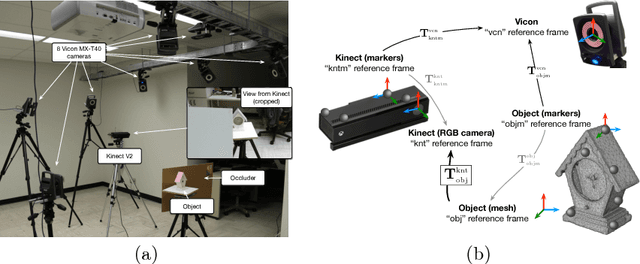

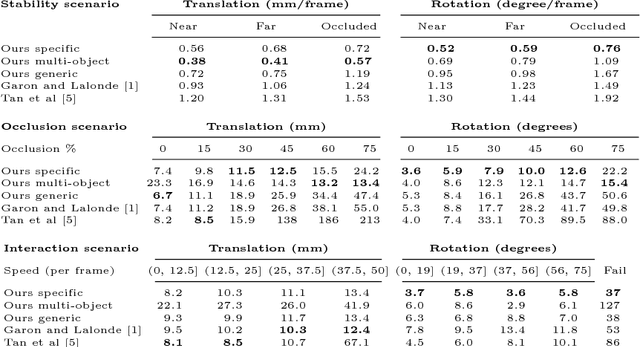

Abstract: We present a challenging and realistic novel dataset for evaluating 6-DOF object tracking algorithms. Existing datasets show serious limitations (notably, unrealistic synthetic data, or real data with large fiducial markers), preventing the community from obtaining an accurate picture of the state of the art. Using a data acquisition pipeline based on a commercial motion capture system for acquiring accurate ground truth poses of real objects with respect to a Kinect V2 camera, we build a dataset which contains a total of 297 calibrated sequences. They are acquired in three different scenarios to evaluate the performance of trackers: stability, robustness to occlusion and accuracy during challenging interactions between a person and the object. We conduct an extensive study of a deep 6-DOF tracking architecture and determine a set of optimal parameters. We enhance the architecture and the training methodology to train a 6-DOF tracker that can robustly generalize to objects never seen during training, and demonstrate favorable performance compared to previous approaches trained specifically on the objects to track.

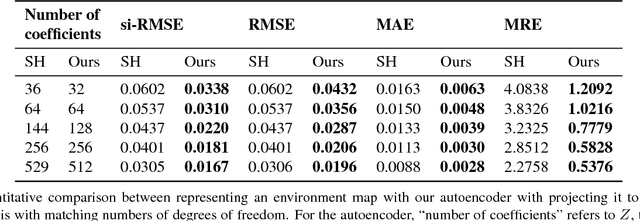

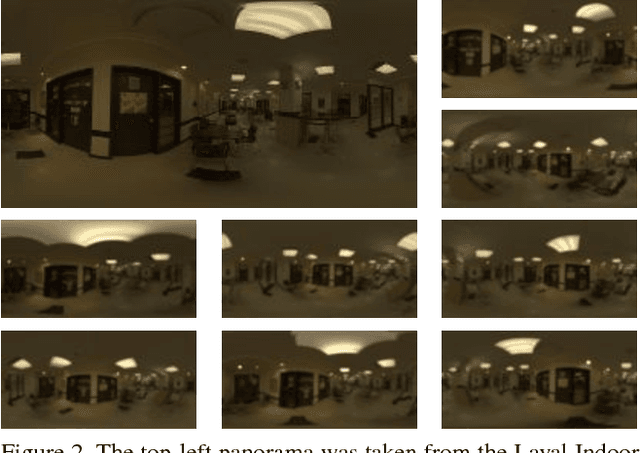

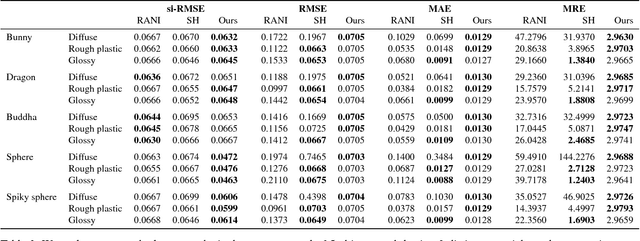

Learning to Estimate Indoor Lighting from 3D Objects

Aug 13, 2018

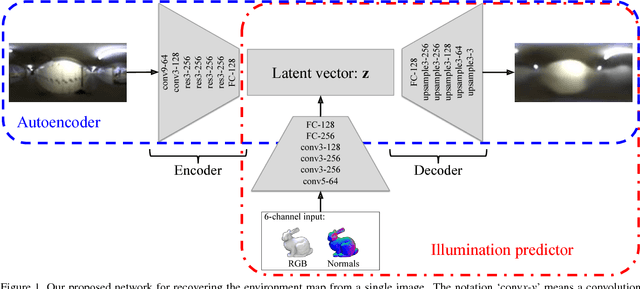

Abstract: In this work, we propose a step towards a more accurate prediction of the environment light given a single picture of a known object. To achieve this, we developed a deep learning method that is able to encode the latent space of indoor lighting using few parameters and that is trained on a database of environment maps. This latent space is then used to generate predictions of the light that are both more realistic and accurate than previous methods. To achieve this, our first contribution is a deep autoencoder which is capable of learning the feature space that compactly models lighting. Our second contribution is a convolutional neural network that predicts the light from a single image of a known object. To train these networks, our third contribution is a novel dataset that contains 21,000 HDR indoor environment maps. The results indicate that the predictor can generate plausible lighting estimations even from diffuse objects.

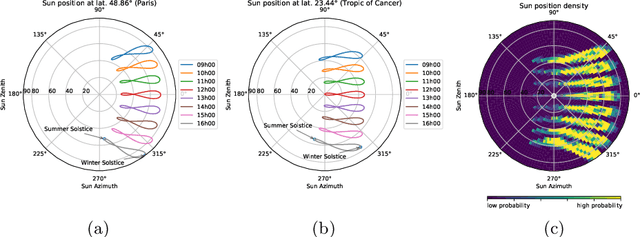

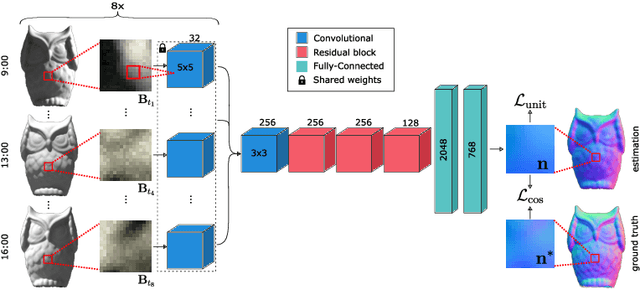

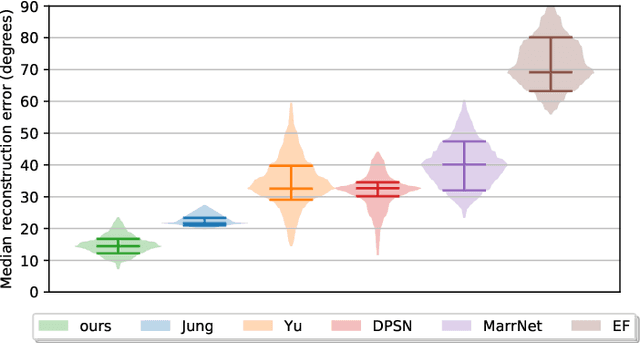

Deep Photometric Stereo on a Sunny Day

May 10, 2018

Abstract: Photometric Stereo in outdoor illumination remains a challenging, ill-posed problem. Indeed, it has been shown that the scene structure cannot be recovered unambiguously from the photometric cues alone when it is lit by the sun over the course of a single day. In this paper, we present a CNN-based technique for photometric stereo on a single sunny day. We solve the ambiguity issue by combining photometric cues with prior knowledge on material properties, local surface geometry and the natural variations in outdoor lighting. To train the CNN, we create a dataset of realistic synthetic renders with both diffuse and specular materials. Given eight outdoor images taken during a single sunny day, our method robustly estimates the scene surface normals. Our approach does not require precise geolocation to work and significantly outperforms several state-of-the-art methods on images with real lighting. This shows that our CNN can efficiently combine learned priors and photometric cues available during a single sunny day.

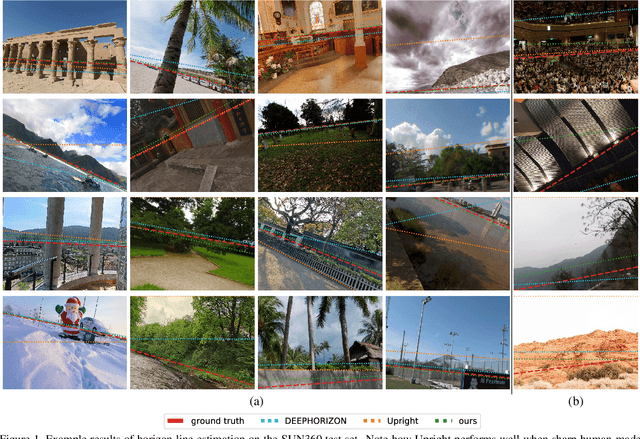

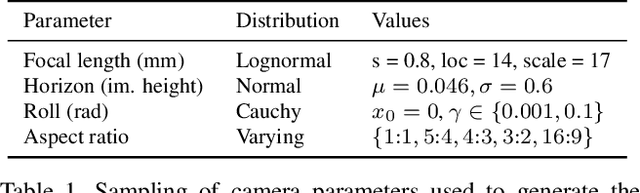

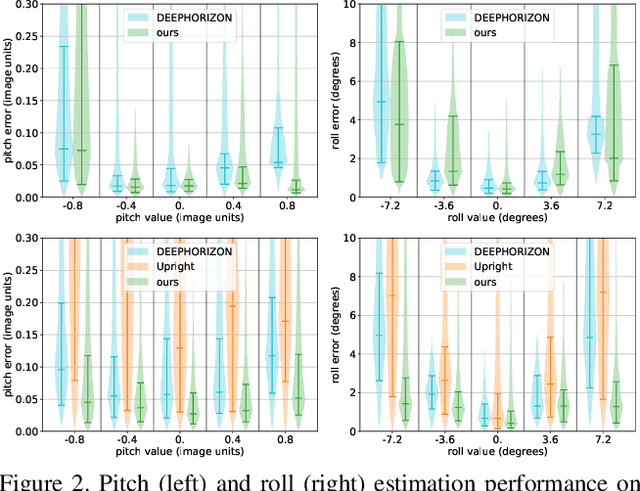

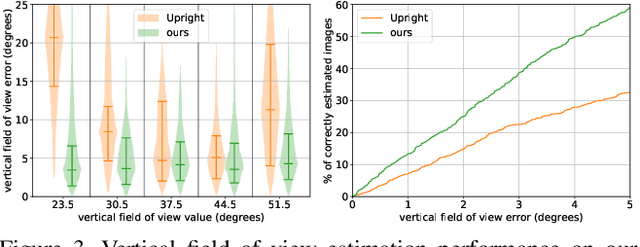

A Perceptual Measure for Deep Single Image Camera Calibration

Apr 22, 2018

Abstract: Most current single image camera calibration methods rely on specific image features or user input, and cannot be applied to natural images captured in uncontrolled settings. We propose directly inferring camera calibration parameters from a single image using a deep convolutional neural network. This network is trained using automatically generated samples from a large-scale panorama dataset, and considerably outperforms other methods, including recent deep learning-based approaches, in terms of standard L2 error. However, we argue that in many cases it is more important to consider how humans perceive errors in camera estimation. To this end, we conduct a large-scale human perception study where we ask users to judge the realism of 3D objects composited with and without ground truth camera calibration. Based on this study, we develop a new perceptual measure for camera calibration, and demonstrate that our deep calibration network outperforms other methods on this measure. Finally, we demonstrate the use of our calibration network for a number of applications including virtual object insertion, image retrieval and compositing.
