Jaideep Srivastava

Continual Deterioration Prediction for Hospitalized COVID-19 Patients

Jan 19, 2021

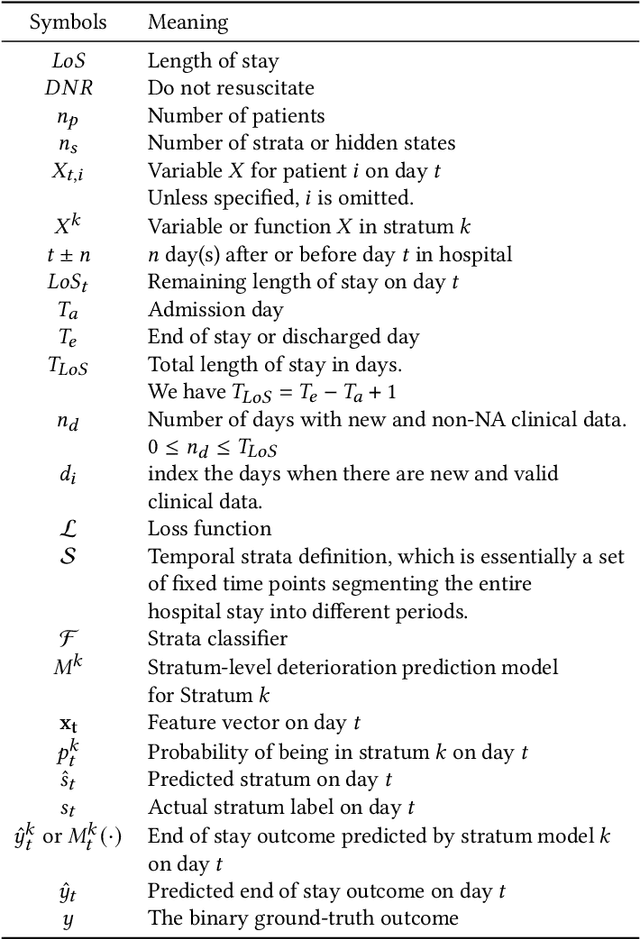

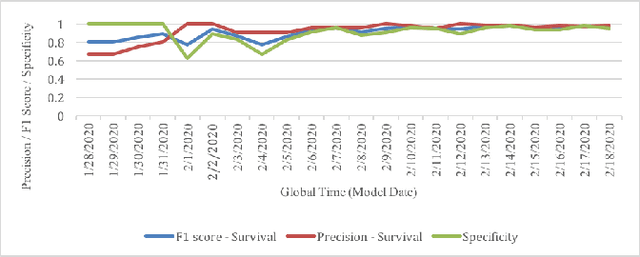

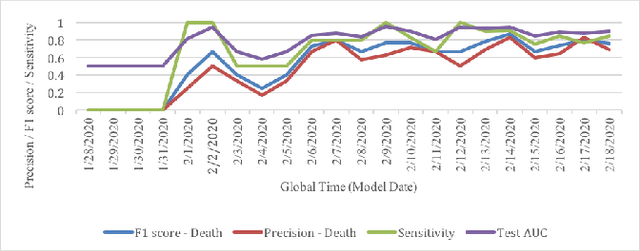

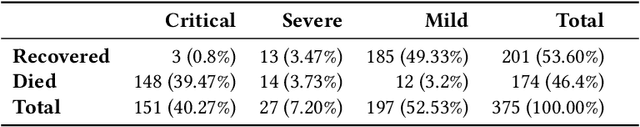

Abstract: By August 2020, COVID-19 had spread to almost every country in the world, causing millions of infections and hundreds of thousands of deaths. In this paper, we first verify the assumption that clinical variables could have time-varying effects on COVID-19 outcomes. Then, we develop a temporal stratification approach to make daily predictions of patients' outcomes at the end of the hospital stay. Training data is segmented by the remaining length of stay, which is a proxy for the patient's overall condition. Based on this, a sequence of predictive models is built, one for each time segment. Thanks to publicly shared data, we were able to build and evaluate prototype models. Preliminary experiments show 0.98 AUROC, 0.91 F1 score and 0.97 AUPR on continuous deterioration prediction, encouraging further development of the model as well as validation on different datasets. We also verify the key assumption that motivates our method: clinical variables could have time-varying effects on COVID-19 outcomes. That is to say, the feature importance of a variable in the predictive model varies across disease stages.
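The temporal stratification idea can be illustrated with a minimal sketch. The segment boundaries, the record fields (`remaining_days`, `deteriorated`) and the base-rate "model" are hypothetical placeholders, not taken from the paper; the abstract does not specify the actual segments, features or learner.

```python
from collections import defaultdict

# Hypothetical segment boundaries, in days of remaining stay (an assumption).
SEGMENT_EDGES = [3, 7, 14]

def segment_of(remaining_days):
    """Return the index of the time segment for a remaining length of stay."""
    for i, edge in enumerate(SEGMENT_EDGES):
        if remaining_days <= edge:
            return i
    return len(SEGMENT_EDGES)

def stratify(records):
    """Group daily records (dicts) by segment of remaining length of stay."""
    buckets = defaultdict(list)
    for rec in records:
        buckets[segment_of(rec["remaining_days"])].append(rec)
    return dict(buckets)

def fit_per_segment(records, fit):
    """Fit one model per segment using the caller-supplied `fit` function."""
    return {seg: fit(rows) for seg, rows in stratify(records).items()}

def fit_base_rate(rows):
    """Placeholder model: fraction of deteriorating outcomes in the segment."""
    return sum(r["deteriorated"] for r in rows) / len(rows)

records = [
    {"remaining_days": 1, "deteriorated": 1},
    {"remaining_days": 2, "deteriorated": 0},
    {"remaining_days": 5, "deteriorated": 0},
    {"remaining_days": 20, "deteriorated": 0},
]
models = fit_per_segment(records, fit_base_rate)
```

In practice `fit` would be replaced by a real classifier trained on clinical variables. How a segment is selected at prediction time, when the remaining length of stay is unknown, is not described in the abstract and is left out of this sketch.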

Learning Student Interest Trajectory for MOOC Thread Recommendation

Jan 16, 2021

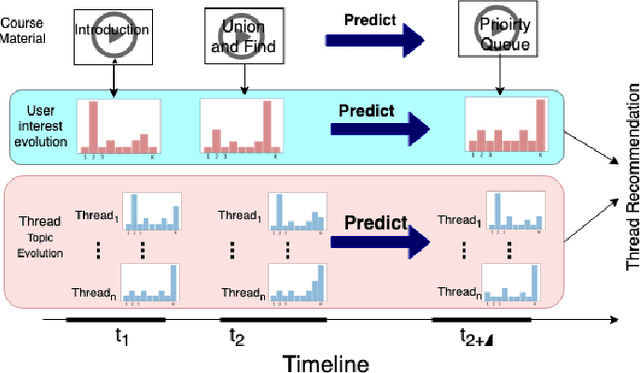

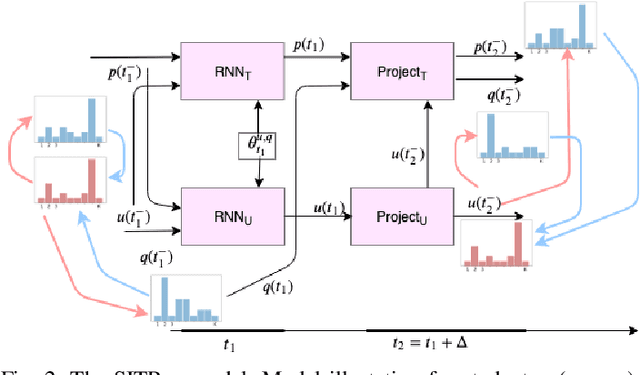

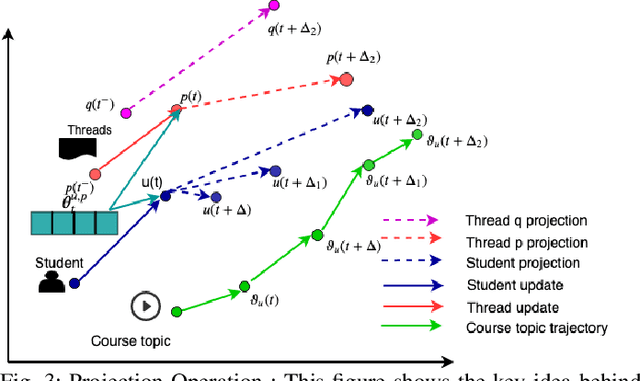

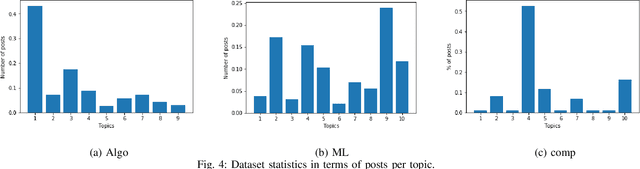

Abstract: In recent years, Massive Open Online Courses (MOOCs) have witnessed immense growth in popularity. Now, due to the recent COVID-19 pandemic, it is important to push the limits of online education. Discussion forums are a primary means of interaction among learners and instructors. However, with growing class size, students face the challenge of finding useful and informative discussion forums. This problem can be solved by matching the interests of students with thread contents. The fundamental challenge is that student interests drift as they progress through the course, and forum contents evolve as students or instructors update them. In our paper, we propose to predict future interest trajectories of students. Our model consists of two key operations: 1) an update operation and 2) a projection operation. The update operation models the inter-dependency between the evolution of student and thread using coupled Recurrent Neural Networks when the student posts on the thread. The projection operation learns to estimate future embeddings of students and threads. For students, the projection operation learns the drift in their interests caused by the change in the course topic they study. The projection operation for threads exploits how different posts induce varying interest levels in a student according to the thread structure. Extensive experimentation on three real-world MOOC datasets shows that our model significantly outperforms other baselines for thread recommendation.

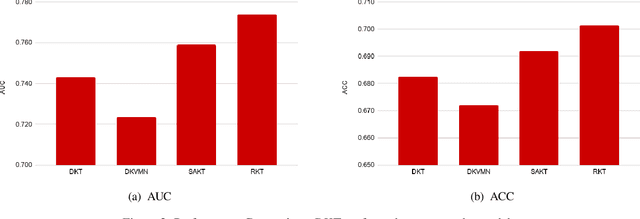

An Empirical Comparison of Deep Learning Models for Knowledge Tracing on Large-Scale Dataset

Jan 16, 2021

Abstract: Knowledge tracing (KT) is the problem of modeling each student's mastery of knowledge concepts (KCs) as (s)he engages with a sequence of learning activities. It is an active research area that helps provide learners with personalized feedback and materials. Various deep learning techniques have been proposed for solving KT. The recent release of a large-scale student performance dataset \cite{choi2019ednet} motivates an analysis of the performance of the deep learning approaches that have been proposed to solve KT. Our analysis can help in understanding which method to adopt when a large dataset related to student performance is available. We also show that incorporating contextual information, such as the relation between exercises and student forget behavior, further improves the performance of deep learning models.

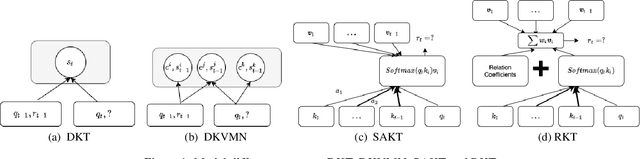

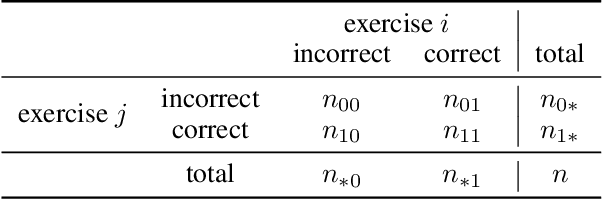

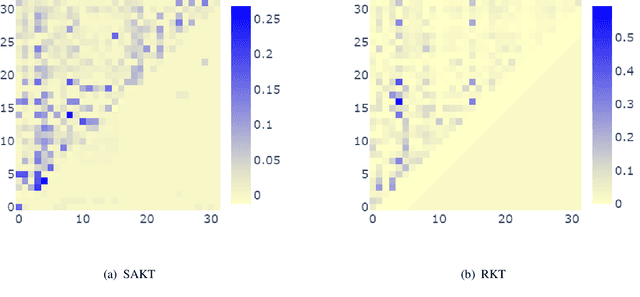

RKT : Relation-Aware Self-Attention for Knowledge Tracing

Aug 28, 2020

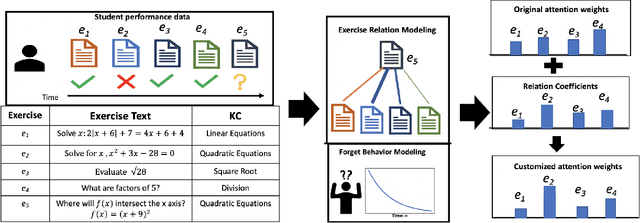

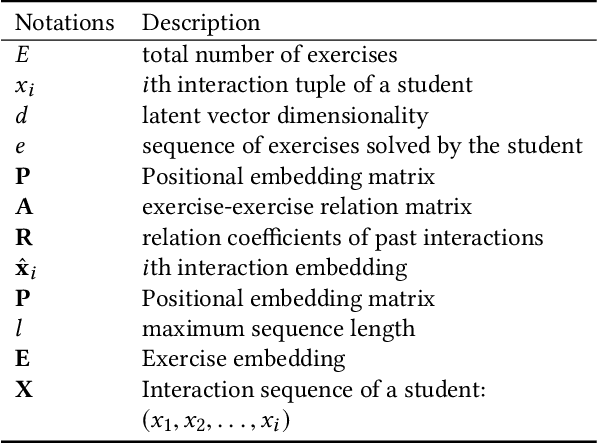

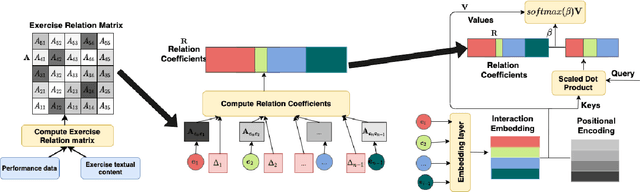

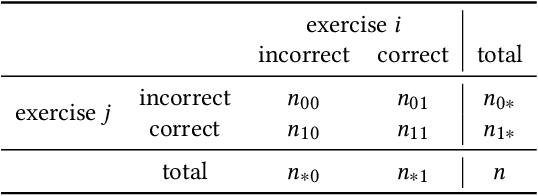

Abstract: The world has transitioned into a new phase of online learning in response to the recent COVID-19 pandemic. Now more than ever, it has become paramount to push the limits of online learning in every manner to keep the education system flourishing. One crucial component of online learning is Knowledge Tracing (KT). The aim of KT is to model a student's knowledge level based on their answers to a sequence of exercises, referred to as interactions. Students acquire their skills while solving exercises, and each such interaction has a distinct impact on the student's ability to solve a future exercise. This *impact* is characterized by 1) the relation between exercises involved in the interactions and 2) student forget behavior. Traditional studies on knowledge tracing do not explicitly model both components jointly to estimate the impact of these interactions. In this paper, we propose a novel Relation-aware self-attention model for Knowledge Tracing (RKT). We introduce a relation-aware self-attention layer that incorporates the contextual information. This contextual information integrates both the exercise relation information, obtained through their textual content as well as student performance data, and the forget behavior information, obtained by modeling an exponentially decaying kernel function. Extensive experiments on three real-world datasets, among which two new collections are released to the public, show that our model outperforms state-of-the-art knowledge tracing methods. Furthermore, the interpretable attention weights help visualize the relation between interactions and temporal patterns in the human learning process.
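As an illustration of how exercise relations and an exponentially decaying forget kernel might adjust attention weights, here is a minimal sketch. The mixing scheme, the `mix` and `decay_scale` parameters, and all function names are assumptions made for this example; the abstract does not give the exact formulation used in RKT.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def forget_kernel(time_gaps, decay_scale):
    """Exponentially decaying weight per past interaction: exp(-gap / scale)."""
    return [math.exp(-gap / decay_scale) for gap in time_gaps]

def relation_aware_weights(attn_scores, exercise_relations, time_gaps,
                           decay_scale=1.0, mix=0.5):
    """Blend plain attention with relation and forgetting information.

    attn_scores: raw attention scores for each past interaction.
    exercise_relations: relation strength of each past exercise to the next one.
    time_gaps: time elapsed since each past interaction.
    """
    attention = softmax(attn_scores)
    decay = forget_kernel(time_gaps, decay_scale)
    context = softmax([r + f for r, f in zip(exercise_relations, decay)])
    # Convex combination, so the result still sums to one.
    return [mix * a + (1.0 - mix) * c for a, c in zip(attention, context)]

# With equal scores and relations, more recent interactions get more weight.
weights = relation_aware_weights([0.0, 0.0, 0.0], [0.0, 0.0, 0.0], [10.0, 5.0, 1.0])
```

Because both terms are normalized before blending, the combined weights remain a valid distribution over past interactions, which keeps them interpretable in the way the abstract describes.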

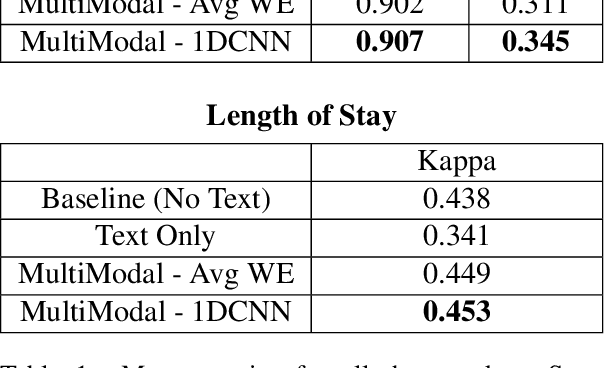

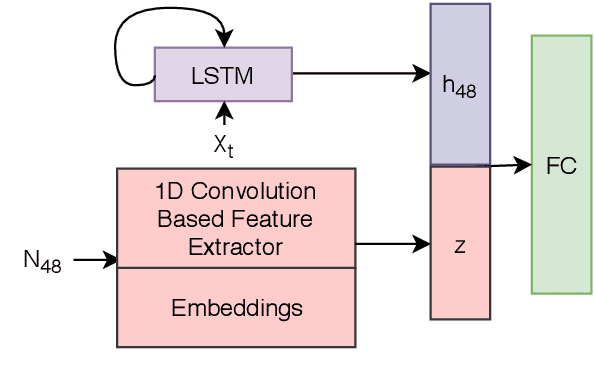

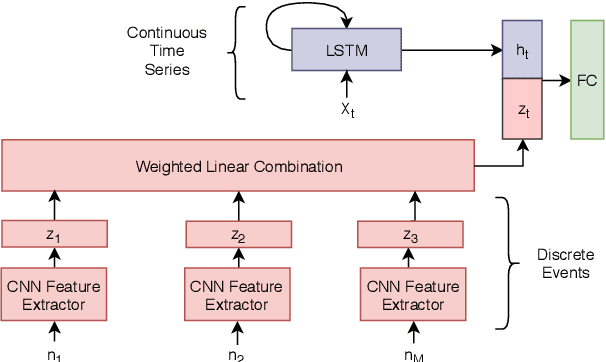

Using Clinical Notes with Time Series Data for ICU Management

Sep 12, 2019

Abstract: Monitoring patients in the ICU is a challenging and high-cost task. Hence, predicting the condition of patients during their ICU stay can help provide better acute care and plan the hospital's resources. There has been continuous progress in machine learning research for ICU management, and most of this work has focused on using time series signals recorded by ICU instruments. In our work, we show that adding clinical notes as another modality improves the performance of the model for three benchmark tasks that play an important role in ICU management: in-hospital mortality prediction, modeling decompensation, and length of stay forecasting. While the time-series data is measured at regular intervals, doctor notes are charted at irregular times, making it challenging to model them together. We propose a method to model them jointly, achieving considerable improvement across benchmark tasks over the baseline time-series model. Our implementation can be found at https://github.com/kaggarwal/ClinicalNotesICU.
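The alignment problem between regularly sampled signals and irregularly charted notes can be made concrete with a small sketch. This carries the most recent note forward to each regular time step; it is one simple alignment strategy chosen for illustration, not the joint model proposed in the paper, and the function name and data layout are hypothetical.

```python
def latest_note_per_step(step_hours, notes):
    """For each regular time step, return the latest note charted at or before it.

    step_hours: increasing list of time-step timestamps (hours since admission).
    notes: list of (hour, text) tuples sorted by hour.
    Returns a list the same length as step_hours; None where no note exists yet.
    """
    aligned, idx, current = [], 0, None
    for t in step_hours:
        # Advance through all notes charted up to and including this step.
        while idx < len(notes) and notes[idx][0] <= t:
            current = notes[idx][1]
            idx += 1
        aligned.append(current)
    return aligned

notes = [(0.5, "admission note"), (2.0, "nursing note"), (3.7, "radiology report")]
aligned = latest_note_per_step([0, 1, 2, 3, 4], notes)
```

Each time step then has at most one associated note, so a text encoder's output could be paired with the time-series features at that step.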

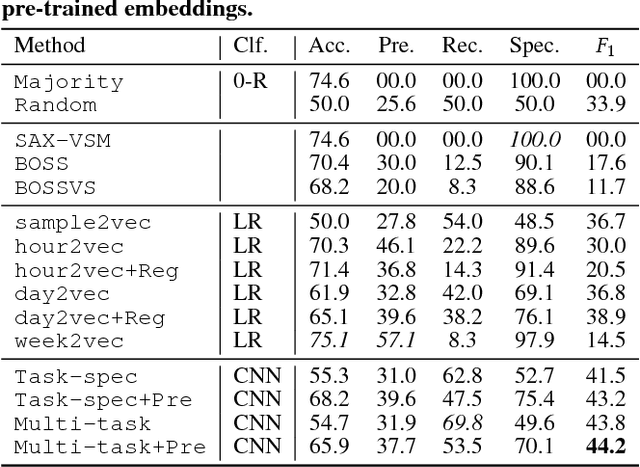

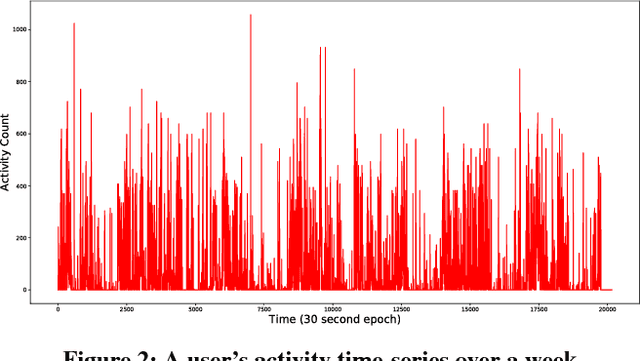

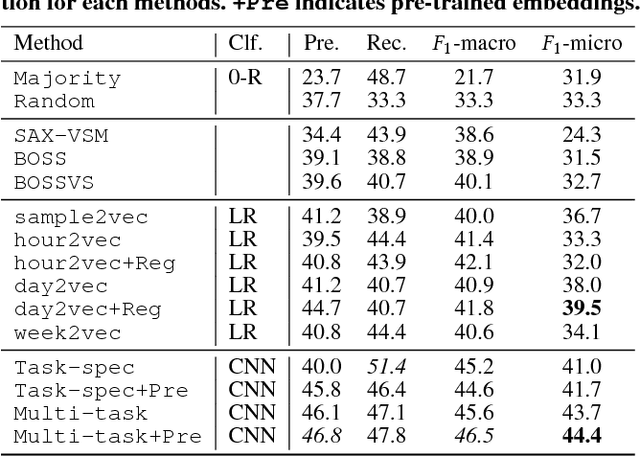

Adversarial Unsupervised Representation Learning for Activity Time-Series

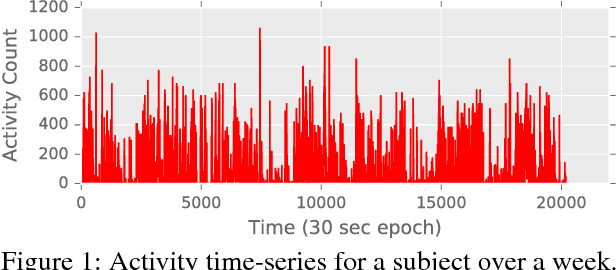

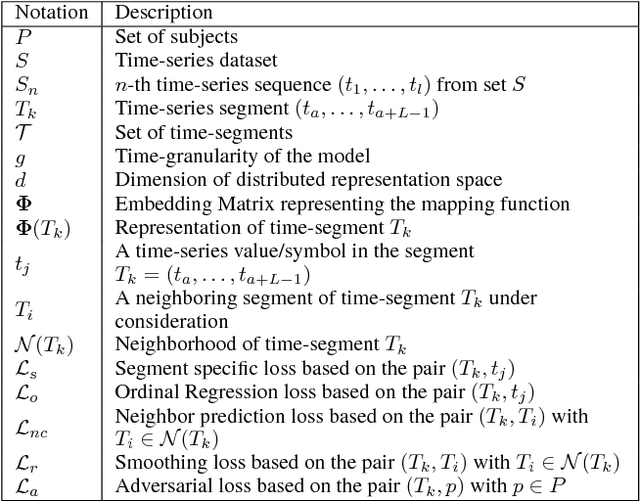

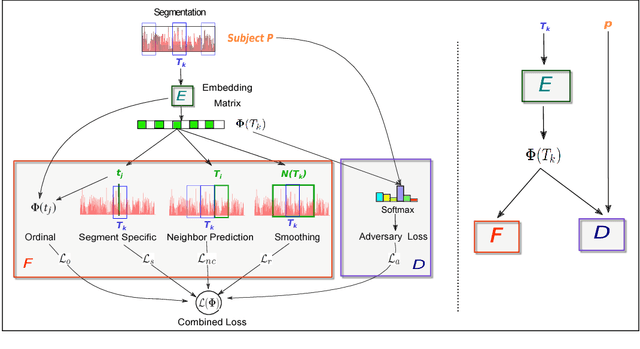

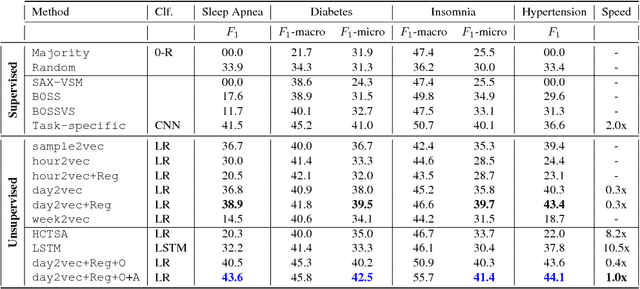

Nov 14, 2018

Abstract: Sufficient physical activity and restful sleep play a major role in the prevention and cure of many chronic conditions. Being able to proactively screen and monitor such chronic conditions would be a big step forward for overall health. The rapid increase in the popularity of wearable devices provides a significant new data source, making it possible to track the user's lifestyle in real time. In this paper, we propose a novel unsupervised representation learning technique called activity2vec that learns and "summarizes" the discrete-valued activity time-series. It learns the representations with three components: (i) the co-occurrence and magnitude of the activity levels in a time-segment, (ii) the neighboring context of the time-segment, and (iii) the promotion of subject-invariance with adversarial training. We evaluate our method on four disorder prediction tasks using linear classifiers. Empirical evaluation demonstrates that our proposed method scales and performs better than many strong baselines. The adversarial regime helps improve the generalizability of our representations by promoting subject-invariant features. We also show that using the representations at the level of a day works best, since human activity is structured in terms of daily routines.

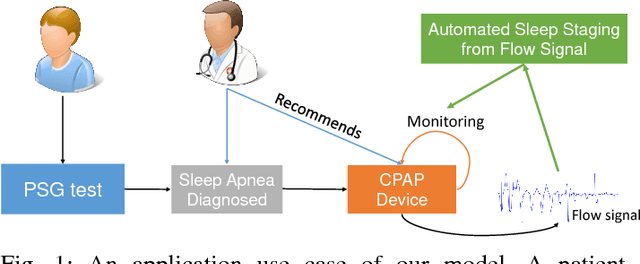

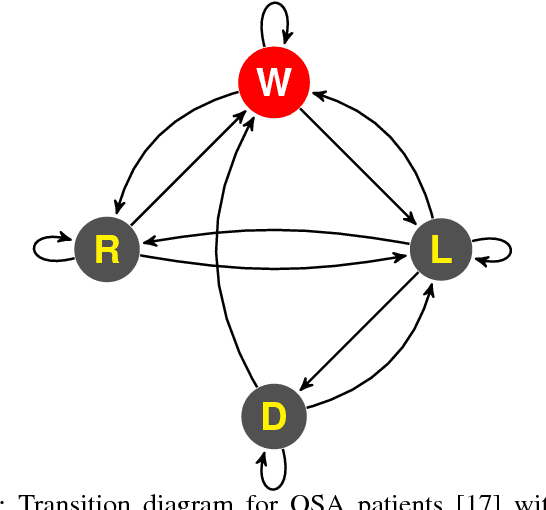

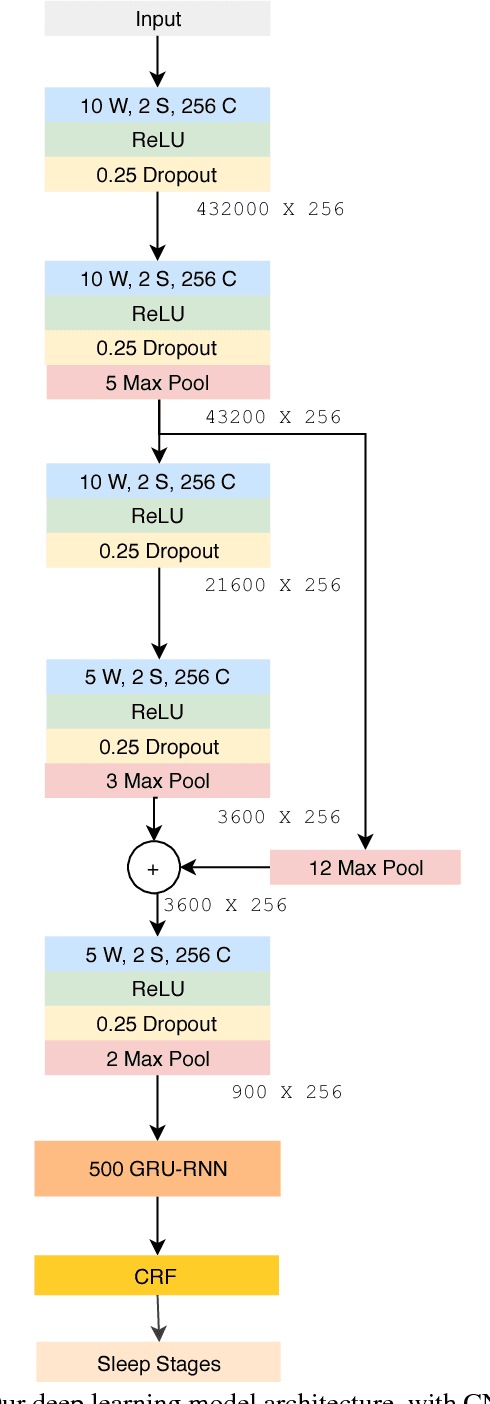

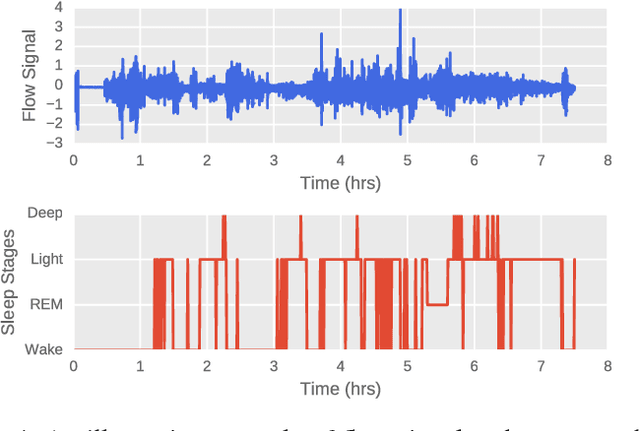

A Structured Learning Approach with Neural Conditional Random Fields for Sleep Staging

Oct 28, 2018

Abstract: Sleep plays a vital role in human health, both mental and physical. Sleep disorders like sleep apnea are increasing in prevalence, with the rapid increase in factors like obesity. Sleep apnea is most commonly treated with Continuous Positive Airway Pressure (CPAP) therapy. Presently, however, there is no mechanism to monitor a patient's progress with CPAP. Accurate detection of sleep stages from the CPAP flow signal is crucial for such a mechanism. We propose, for the first time, an automated sleep staging model based only on the flow signal. Deep neural networks have recently shown high accuracy on sleep staging by eliminating handcrafted features. However, these methods focus exclusively on extracting informative features from the input signal, without paying much attention to the dynamics of sleep stages in the output sequence. We propose an end-to-end framework that uses a combination of deep convolutional and recurrent neural networks to extract high-level features from the raw flow signal, with a structured output layer based on a conditional random field to model the temporal transition structure of the sleep stages. Our model improves upon previous methods by 10%, and its structured output layer can be added to previous deep learning methods for sleep staging. We also show that our method can be used to accurately track sleep metrics, such as sleep efficiency calculated from sleep stages, which can be deployed for monitoring the response of sleep apnea patients to CPAP therapy. Apart from the technical contributions, we expect this study to motivate new research questions in sleep science.
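To show why a structured output layer matters, the following sketch runs Viterbi decoding over per-epoch label scores with a transition score matrix, which is the standard inference step for a linear-chain conditional random field. The two-label setup and all score values are made up for illustration; in the framework described above, the emission scores would come from the neural network and the transitions would be learned.

```python
def viterbi(emissions, transitions):
    """Most likely label sequence under a linear-chain model.

    emissions[t][k]: score of label k at step t (e.g. from a neural network).
    transitions[j][k]: score of moving from label j to label k.
    """
    n_labels = len(emissions[0])
    best = list(emissions[0])  # best score of any path ending in each label
    backptrs = []
    for scores in emissions[1:]:
        ptrs, new_best = [], []
        for k in range(n_labels):
            prev = max(range(n_labels), key=lambda j: best[j] + transitions[j][k])
            ptrs.append(prev)
            new_best.append(best[prev] + transitions[prev][k] + scores[k])
        backptrs.append(ptrs)
        best = new_best
    # Trace the best path backwards from the best final label.
    label = max(range(n_labels), key=lambda k: best[k])
    path = [label]
    for ptrs in reversed(backptrs):
        label = ptrs[label]
        path.append(label)
    return path[::-1]

# Hypothetical scores: label 0 = wake, label 1 = sleep.
emissions = [[2.0, 0.0], [0.4, 0.6], [2.0, 0.0]]
sticky = [[1.0, -1.0], [-1.0, 1.0]]    # staying in a stage is favored
neutral = [[0.0, 0.0], [0.0, 0.0]]     # no transition structure

smoothed = viterbi(emissions, sticky)      # [0, 0, 0]
independent = viterbi(emissions, neutral)  # [0, 1, 0]
```

With neutral transitions the decoder simply follows the per-epoch maximum and flips stage for a single epoch; with transitions that favor staying in a stage, the weakly supported flip is smoothed away.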

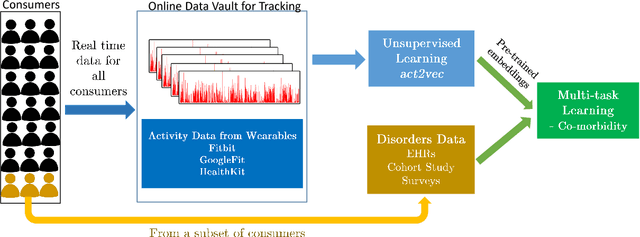

Co-Morbidity Exploration on Wearables Activity Data Using Unsupervised Pre-training and Multi-Task Learning

Dec 27, 2017

Abstract: Physical activity and sleep play a major role in the prevention and management of many chronic conditions. It is not a trivial task to understand their impact on chronic conditions. Currently, data from electronic health records (EHRs), sleep lab studies, and activity/sleep logs are used. The rapid increase in the popularity of wearable health devices provides a significant new data source, making it possible to track the user's lifestyle in real time through web interfaces, potentially for both consumers and their healthcare providers. However, at present there is a gap between lifestyle data (e.g., sleep, physical activity) and clinical outcomes normally captured in EHRs. This is a critical barrier to the use of this new source of signal for healthcare decision making. Applying deep learning to wearables data provides a new opportunity to overcome this barrier. To address the problem of the unavailability of clinical data from a major fraction of subjects and unrepresentative subject populations, we propose a novel unsupervised (task-agnostic) time-series representation learning technique called act2vec. act2vec learns useful features by taking into account the co-occurrence of activity levels along with the periodicity of human activity patterns. The learned representations are then exploited to boost the performance of disorder-specific supervised learning models. Furthermore, since many disorders are often related to each other, a phenomenon referred to as co-morbidity, we use a multi-task learning framework to exploit the shared structure of disorder-inducing lifestyle choices partially captured in the wearables data. Empirical evaluation using actigraphy data from 4,124 subjects shows that our proposed method performs and generalizes substantially better than the conventional time-series symbolic representational methods and task-specific deep learning models.

Impact of Physical Activity on Sleep: A Deep Learning Based Exploration

Jul 24, 2016

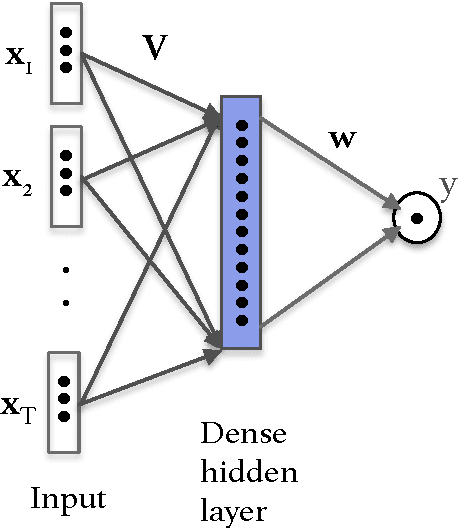

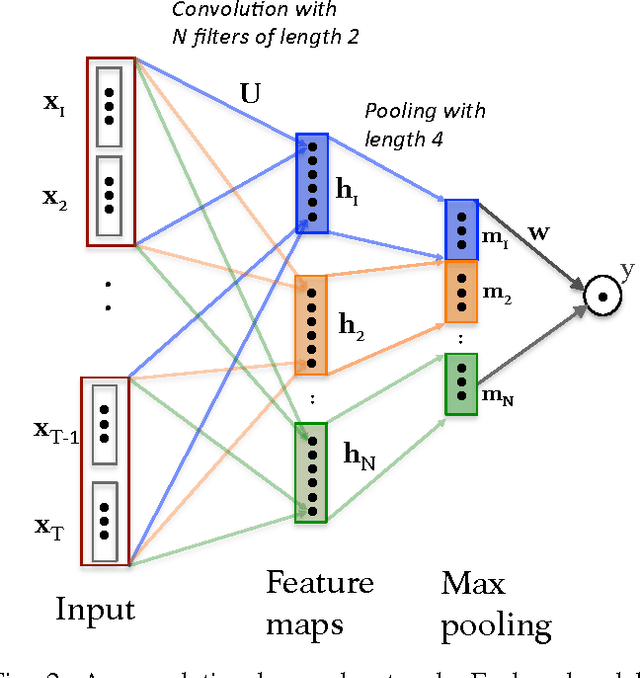

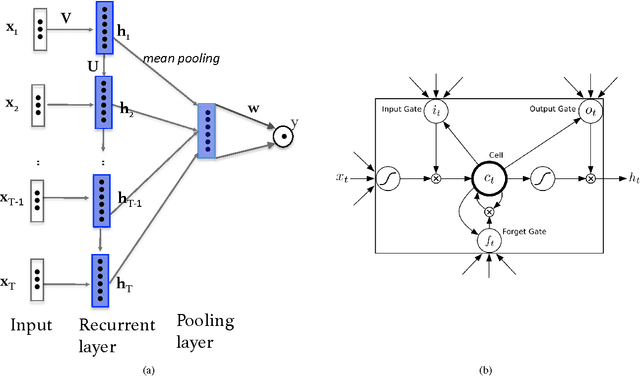

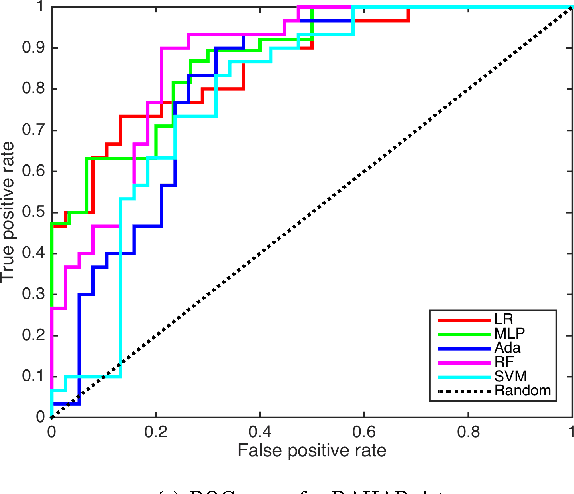

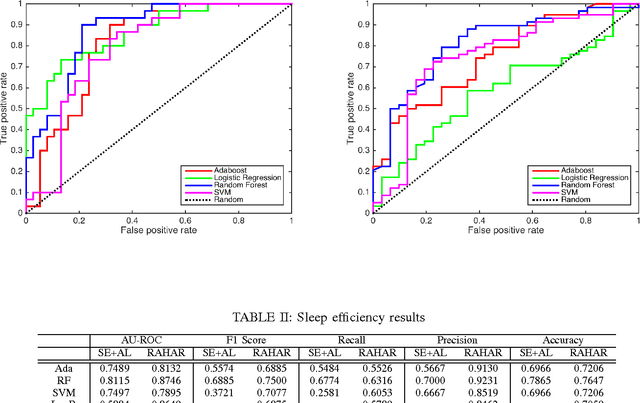

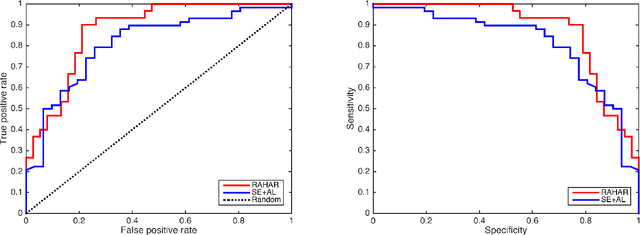

Abstract: The importance of sleep is paramount for maintaining physical, emotional and mental wellbeing. Though the relationship between sleep and physical activity is known to be important, it is not yet fully understood. The explosion in popularity of actigraphy and wearable devices provides a unique opportunity to understand this relationship. Leveraging this information source requires new tools to be developed to facilitate data-driven research for sleep and activity patient-recommendations. In this paper we explore the use of deep learning to build sleep quality prediction models based on actigraphy data. We first use deep learning as a pure model building device by performing human activity recognition (HAR) on raw sensor data, and using deep learning to build sleep prediction models. We compare the deep learning models with those built using classical approaches, i.e. logistic regression, support vector machines, random forest and AdaBoost. Secondly, we exploit the ability of deep learning to handle high dimensional datasets. We explore several deep learning models on the raw wearable sensor output without performing HAR or any other feature extraction. Our results show that using a convolutional neural network on the raw wearables output improves the predictive value of sleep quality from physical activity by an additional 8% compared to state-of-the-art non-deep learning approaches, which themselves show a 15% improvement over current practice. Moreover, utilizing deep learning on raw data eliminates the need for data pre-processing and simplifies the overall workflow to analyze actigraphy data for sleep and physical activity research.

Robust Automated Human Activity Recognition and its Application to Sleep Research

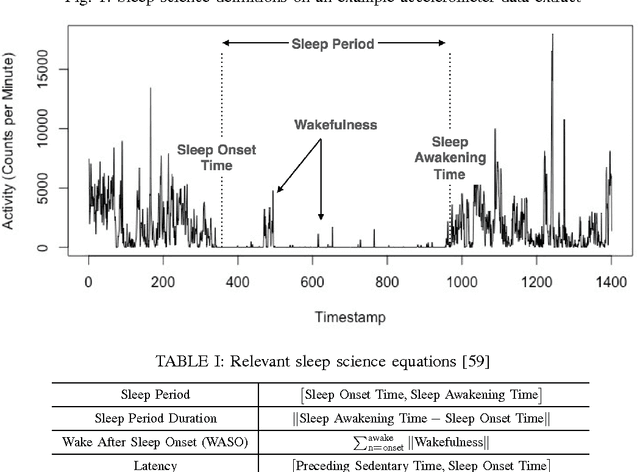

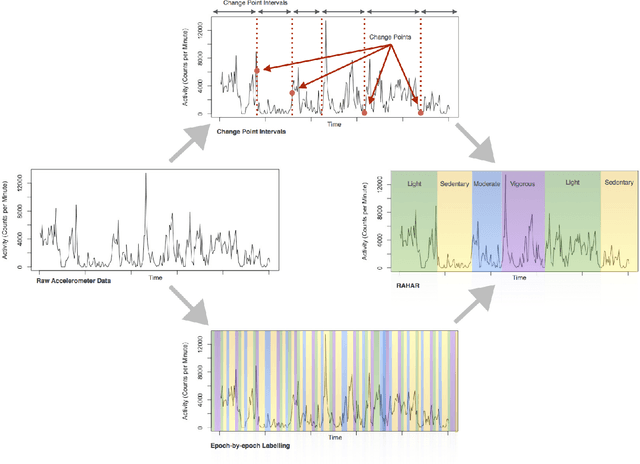

Jul 19, 2016

Abstract: Human Activity Recognition (HAR) is a powerful tool for understanding human behaviour. Applying HAR to wearable sensors can provide new insights by enriching the feature set in health studies, and enhance the personalisation and effectiveness of health, wellness, and fitness applications. Wearable devices provide an unobtrusive platform for user monitoring, and due to their increasing market penetration, feel intrinsic to the wearer. The integration of these devices in daily life provides a unique opportunity for understanding human health and wellbeing. This is referred to as the "quantified self" movement. The analyses of complex health behaviours such as sleep traditionally require time-consuming manual interpretation by experts. This manual work is necessary due to the erratic periodicity and persistent noisiness of human behaviour. In this paper, we present a robust automated human activity recognition algorithm, which we call RAHAR. We test our algorithm in the application area of sleep research by providing a novel framework for evaluating sleep quality and examining the correlation between sleep quality and an individual's physical activity. Our results improve the state-of-the-art procedure in sleep research by 15 percent for area under ROC and by 30 percent for F1 score on average. However, application of RAHAR is not limited to sleep analysis and can be used for understanding other health problems such as obesity, diabetes, and cardiac diseases.
