Jaeyong Chung

Training Multi-bit Quantized and Binarized Networks with A Learnable Symmetric Quantizer

Apr 01, 2021

Abstract: Quantizing the weights and activations of deep neural networks is essential for deploying them on resource-constrained devices or on cloud platforms for at-scale services. While binarization is a special case of quantization, this extreme case often leads to several training difficulties and necessitates specialized models and training methods. As a result, recent quantization methods do not provide binarization, thus losing the most resource-efficient option, and quantized and binarized networks have been distinct research areas. We examine binarization difficulties in a quantization framework and find that all we need to enable binary training is a symmetric quantizer, good initialization, and careful hyperparameter selection. These techniques also lead to substantial improvements in multi-bit quantization. We demonstrate our unified quantization framework, denoted as UniQ, on the ImageNet dataset with various architectures such as ResNet-18, ResNet-34, and MobileNetV2. For multi-bit quantization, UniQ outperforms existing methods and achieves state-of-the-art accuracy. In binarization, the achieved accuracy is comparable to existing state-of-the-art methods even without modifying the original architectures.
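
To illustrate why a symmetric quantizer lets binarization fall out as the 1-bit case, here is a minimal sketch. It is not taken from the paper: the function name `symmetric_quantize`, the fixed `step` argument (which would be a learnable parameter in training), and the half-step level placement are assumptions made for illustration only.

```python
import math

def symmetric_quantize(x, step, bits):
    """Quantize x to one of 2**bits levels placed symmetrically around zero.

    Assumed level layout (illustrative, not the paper's exact definition):
    levels sit at (k + 0.5) * step for integer k in [-2**(bits-1), 2**(bits-1) - 1],
    so there is no zero level and the positive and negative levels mirror each other.
    """
    half = 2 ** (bits - 1)
    k = math.floor(x / step)             # index of the bin containing x
    k = max(-half, min(half - 1, k))     # clip to the representable range
    return (k + 0.5) * step

# With bits=1 the only levels are -step/2 and +step/2, i.e. a scaled sign function.
binary_levels = sorted({symmetric_quantize(v, 1.0, 1) for v in (-3.0, -0.3, 0.3, 3.0)})
# With bits=2 there are four mirrored levels.
two_bit_levels = sorted({symmetric_quantize(v / 10, 1.0, 2) for v in range(-30, 31)})
```

Under these assumptions the same function covers both multi-bit quantization and binarization, because the 1-bit level set is just the two mirrored values around zero.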

INsight: A Neuromorphic Computing System for Evaluation of Large Neural Networks

Aug 05, 2015

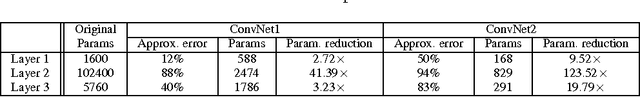

Abstract: Deep neural networks have demonstrated impressive results in various cognitive tasks such as object detection and image classification. In order to execute large networks, Von Neumann computers store the large number of weight parameters in external memories, and processing elements are time-shared, which leads to power-hungry I/O operations and processing bottlenecks. This paper describes a neuromorphic computing system that is designed from the ground up for the energy-efficient evaluation of large-scale neural networks. The computing system consists of a non-conventional compiler, a neuromorphic architecture, and a space-efficient microarchitecture that leverages existing integrated circuit design methodologies. The compiler factorizes a trained, feedforward network into a sparsely connected network, compresses the weights linearly, and generates a time delay neural network, reducing the number of connections. The connections and units in the simplified network are mapped to silicon synapses and neurons. We demonstrate an implementation of the neuromorphic computing system based on a field-programmable gate array that performs MNIST handwritten digit classification with 97.64% accuracy.
