Jadson Castro Gertrudes

The use of Multi-domain Electroencephalogram Representations in the building of Models based on Convolutional and Recurrent Neural Networks for Epilepsy Detection

Apr 24, 2025

Abstract: Epilepsy, affecting approximately 50 million people globally, is characterized by abnormal brain activity and remains challenging to treat. The diagnosis of epilepsy relies heavily on electroencephalogram (EEG) data, where specialists manually analyze epileptiform patterns across pre-ictal, ictal, post-ictal, and interictal periods. However, the manual analysis of EEG signals is prone to variability between experts, emphasizing the need for automated solutions. Although previous studies have explored preprocessing techniques and machine learning approaches for seizure detection, there is a gap in understanding how the representation of EEG data (time, frequency, or time-frequency domains) impacts the predictive performance of deep learning models. This work addresses this gap by systematically comparing deep neural networks trained on EEG data in these three domains. Through the use of statistical tests, we identify the optimal data representation and model architecture for epileptic seizure detection. The results demonstrate that frequency-domain data achieves detection metrics exceeding 97%, providing a robust foundation for more accurate and reliable seizure detection systems.
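As a rough illustration of the three representations the abstract compares, the sketch below derives frequency-domain and time-frequency views from a single time-domain segment. It is not the authors' pipeline: the synthetic 10 Hz signal, the 256 Hz sampling rate, the window and hop sizes, and the helper names `to_frequency_domain` and `to_time_frequency_domain` are all assumptions chosen for the example.

```python
import numpy as np


def to_frequency_domain(signal, fs):
    """Return (freqs, magnitude) of the one-sided FFT spectrum."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    return freqs, np.abs(spectrum)


def to_time_frequency_domain(signal, window=64, hop=32):
    """Return short-time Fourier magnitudes, shape (n_frames, window // 2 + 1)."""
    taper = np.hanning(window)  # reduces spectral leakage at frame edges
    starts = range(0, len(signal) - window + 1, hop)
    frames = np.stack([signal[s:s + window] * taper for s in starts])
    return np.abs(np.fft.rfft(frames, axis=1))


# Assumed toy input: 2 seconds of a 10 Hz sinusoid sampled at 256 Hz.
fs = 256
t = np.arange(2 * fs) / fs
segment = np.sin(2 * np.pi * 10 * t)           # time-domain representation

freqs, magnitude = to_frequency_domain(segment, fs)   # frequency domain
spectrogram = to_time_frequency_domain(segment)       # time-frequency domain
peak_hz = freqs[np.argmax(magnitude)]
```

Each of the three arrays (`segment`, `magnitude`, `spectrogram`) could then serve as the input to a separate model, which is the kind of comparison the abstract describes.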

Rethinking Default Values: a Low Cost and Efficient Strategy to Define Hyperparameters

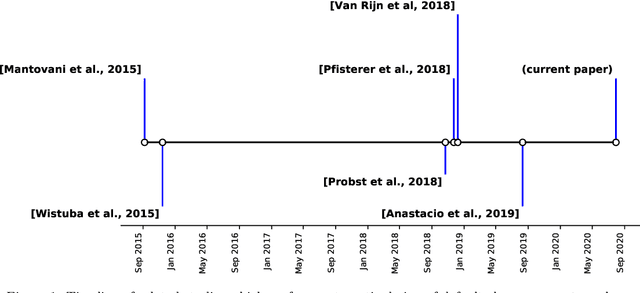

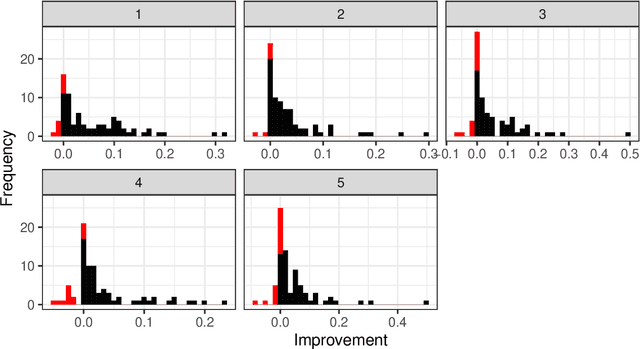

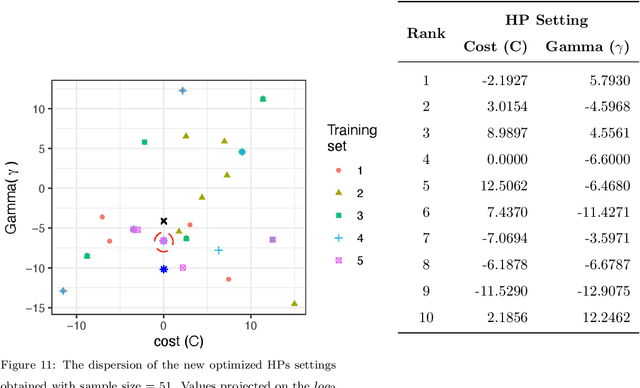

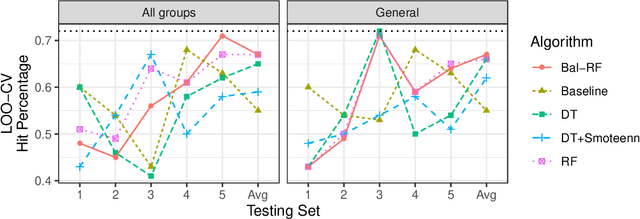

Aug 19, 2020

Abstract: Machine Learning (ML) algorithms have been successfully employed by a vast range of practitioners with different backgrounds. One reason for ML's popularity is its capability to consistently deliver accurate results, which can be further boosted by adjusting hyperparameters (HP). However, some practitioners have limited knowledge of the algorithms and do not take advantage of suitable HP settings. In general, HP values are defined by trial and error, by tuning, or by using default values. Trial and error is very subjective, time-consuming, and dependent on the user's experience. Tuning techniques search for HP values able to maximize the predictive performance of induced models for a given dataset, but with the drawbacks of a high computational cost and target specificity. To avoid tuning costs, practitioners use default values suggested by the algorithm developer or by tools implementing the algorithm. Although default values usually result in models with acceptable predictive performance, different implementations of the same algorithm can suggest distinct default values. To maintain a balance between tuning and using default values, we propose a strategy to generate new optimized default values. Our approach is grounded on a small set of optimized values able to obtain predictive performance better than the default settings provided by popular tools. The HP candidates are estimated through a pool of promising values tuned from a small and informative set of datasets. After performing a large experiment and a careful analysis of the results, we concluded that our approach delivers better default values. Besides, it leads to competitive solutions when compared with the use of tuned values, while being easier to use and having a lower cost. Based on our results, we also extracted simple rules to guide practitioners in deciding whether to use our new methodology or a tuning approach.
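The idea of deriving a new default from a pool of tuned candidates can be sketched roughly as follows. The abstract does not state the selection rule, so this example assumes the simplest one: keep the candidate with the highest mean score across the datasets. The pool, the toy datasets, the scoring function `toy_score`, and the helper `select_default` are all hypothetical stand-ins, not the authors' method.

```python
import math
from statistics import mean


def select_default(candidates, datasets, evaluate):
    """Return the candidate with the highest mean score over all datasets.

    Assumed selection rule; the abstract does not specify one.
    """
    return max(
        candidates,
        key=lambda hp: mean(evaluate(hp, d) for d in datasets),
    )


def toy_score(hp, ideal_c):
    """Hypothetical score: 1.0 when C matches the dataset's ideal value,
    decaying with the distance between the two on a log10 scale."""
    distance = abs(math.log10(hp["C"]) - math.log10(ideal_c))
    return 1.0 / (1.0 + distance)


# Hypothetical pool of tuned configurations, e.g. one per tuning run.
pool = [{"C": 0.1}, {"C": 1.0}, {"C": 10.0}]

# Toy "datasets", each reduced to the C value that would suit it best.
datasets = [0.5, 1.0, 2.0, 8.0]

new_default = select_default(pool, datasets, toy_score)
print(new_default)
```

In a real setting `evaluate` would train and validate a model on each dataset, which is where the one-time cost of building the pool would be paid; practitioners would afterwards reuse the selected value without tuning.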
