J. de Curtò

Cross-Platform Evaluation of Reasoning Capabilities in Foundation Models

Oct 30, 2025

Abstract: This paper presents a comprehensive cross-platform evaluation of reasoning capabilities in contemporary foundation models, establishing an infrastructure-agnostic benchmark across three computational paradigms: HPC supercomputing (MareNostrum 5), cloud platforms (Nebius AI Studio), and university clusters (a node with eight H200 GPUs). We evaluate 15 foundation models across 79 problems spanning eight academic domains (Physics, Mathematics, Chemistry, Economics, Biology, Statistics, Calculus, and Optimization) through three experimental phases: (1) Baseline establishment: Six models (Mixtral-8x7B, Phi-3, LLaMA 3.1-8B, Gemma-2-9b, Mistral-7B, OLMo-7B) evaluated on 19 problems using MareNostrum 5, establishing methodology and reference performance; (2) Infrastructure validation: The 19-problem benchmark repeated on the university cluster (seven models, including the Falcon-Mamba state-space architecture) and on Nebius AI Studio (nine state-of-the-art models: Hermes-4 70B/405B, LLaMA 3.1-405B/3.3-70B, Qwen3 30B/235B, DeepSeek-R1, GPT-OSS 20B/120B) to confirm infrastructure-agnostic reproducibility; (3) Extended evaluation: Full 79-problem assessment on both the university cluster and Nebius platforms, probing generalization at scale across architectural diversity. The findings challenge conventional scaling assumptions, establish training data quality as more critical than model size, and provide actionable guidelines for model selection across educational, production, and research contexts. The tri-infrastructure methodology and 79-problem benchmark enable longitudinal tracking of reasoning capabilities as foundation models evolve.

Learning with Signatures

Apr 25, 2022

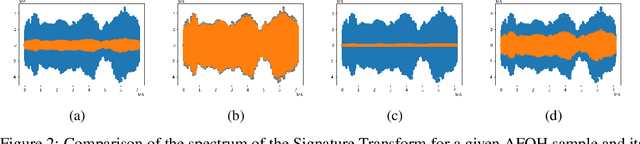

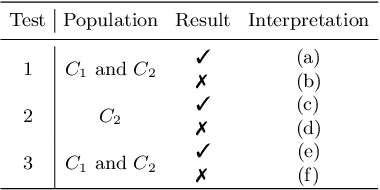

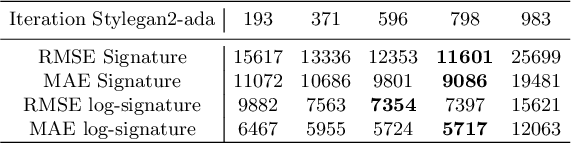

Abstract: In this work we investigate the use of the Signature Transform in the context of Learning. Under this assumption, we advance a supervised framework that provides state-of-the-art classification accuracy with very few labels, without the need for credit assignment and with minimal or no overfitting. We leverage tools from harmonic analysis through the signature and log-signature, and use RMSE and MAE Signature and log-signature as score functions. We develop a closed-form equation to compute probably good optimal scale factors. Classification is performed at the CPU level, orders of magnitude faster than other methods. We report results on AFHQ, MNIST and CIFAR10, achieving 100% accuracy on all tasks, assuming we can determine at test time which probably good optimal scale factor to use for each category.
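As a rough illustration of what a signature-based score might look like, the sketch below computes a depth-2 truncated signature of a piecewise-linear path in pure Python and compares two sets of paths by the RMSE and MAE of their element-wise mean signatures. This is not the authors' implementation: the function names are hypothetical, the truncation depth of 2 is chosen for brevity, and the definition of the score as a comparison of mean signatures is an assumption made for this example.

```python
import math


def signature_depth2(path):
    """Depth-2 truncated signature of a piecewise-linear path.

    path: list of points, each a list of d floats.
    Returns a flat list: d level-1 terms, then d*d level-2 terms (row-major).
    """
    d = len(path[0])
    level1 = [0.0] * d                      # running increment X_k - X_0
    level2 = [[0.0] * d for _ in range(d)]  # iterated integrals S^{ij}
    for prev, curr in zip(path, path[1:]):
        delta = [c - p for p, c in zip(prev, curr)]
        # Chen's identity for appending one linear segment
        for i in range(d):
            for j in range(d):
                level2[i][j] += level1[i] * delta[j] + 0.5 * delta[i] * delta[j]
        for i in range(d):
            level1[i] += delta[i]
    return level1 + [level2[i][j] for i in range(d) for j in range(d)]


def mean_signature(paths):
    """Element-wise mean of the signatures of a set of paths."""
    sigs = [signature_depth2(p) for p in paths]
    return [sum(col) / len(sigs) for col in zip(*sigs)]


def rmse_signature(paths_a, paths_b):
    """RMSE between mean signatures (assumed form of the score)."""
    a, b = mean_signature(paths_a), mean_signature(paths_b)
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


def mae_signature(paths_a, paths_b):
    """MAE between mean signatures (assumed form of the score)."""
    a, b = mean_signature(paths_a), mean_signature(paths_b)
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)
```

For images, each sample would first need to be turned into a path (for instance by treating rows as time steps), which is a further modelling choice not covered here. In practice, a dedicated signature library would likely be preferable to this hand-rolled version for higher depths.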

Signature and Log-signature for the Study of Empirical Distributions Generated with GANs

Mar 12, 2022

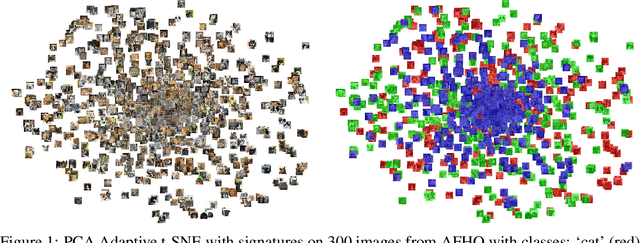

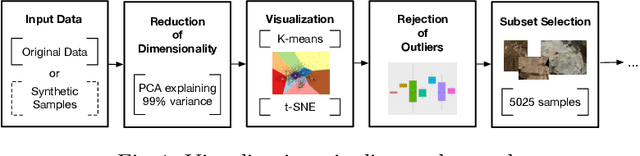

Abstract: In this paper, we develop a new and systematic method to explore and analyze samples taken by NASA Perseverance on the surface of the planet Mars. A PCA adaptive t-SNE, novel in this context, is proposed, along with statistical measures to study the goodness of fit of the sample distribution. We go beyond visualization by generating synthetic imagery using StyleGAN2-ADA that resembles the original terrain distribution. We also conduct synthetic image generation using the recently introduced Score-based Generative Modeling. We bring forward the use of the recently developed Signature Transform as a way to measure the similarity between image distributions and provide a detailed introduction and extensive evaluations. We pioneer RMSE and MAE Signature and log-signature as an alternative way to measure GAN convergence. Insights on state-of-the-art instance segmentation of the samples using a DeepLabv3 model are also given.
