Helena Mihaljević

Civil Society in the Loop: Feedback-Driven Adaptation of (L)LM-Assisted Classification in an Open-Source Telegram Monitoring Tool

Jul 09, 2025

Abstract:The role of civil society organizations (CSOs) in monitoring harmful online content is increasingly crucial, especially as platform providers reduce their investment in content moderation. AI tools can assist in detecting and monitoring harmful content at scale. However, few open-source tools offer seamless integration of AI models and social media monitoring infrastructures. Given their thematic expertise and contextual understanding of harmful content, CSOs should be active partners in co-developing technological tools, providing feedback, helping to improve models, and ensuring alignment with stakeholder needs and values, rather than as passive 'consumers'. However, collaborations between the open source community, academia, and civil society remain rare, and research on harmful content seldom translates into practical tools usable by civil society actors. This work in progress explores how CSOs can be meaningfully involved in an AI-assisted open-source monitoring tool of anti-democratic movements on Telegram, which we are currently developing in collaboration with CSO stakeholders.

Debunking with Dialogue? Exploring AI-Generated Counterspeech to Challenge Conspiracy Theories

Apr 23, 2025

Abstract:Counterspeech is a key strategy against harmful online content, but scaling expert-driven efforts is challenging. Large Language Models (LLMs) present a potential solution, though their use in countering conspiracy theories is under-researched. Unlike for hate speech, no datasets exist that pair conspiracy theory comments with expert-crafted counterspeech. We address this gap by evaluating the ability of GPT-4o, Llama 3, and Mistral to effectively apply counterspeech strategies derived from psychological research provided through structured prompts. Our results show that the models often generate generic, repetitive, or superficial results. Additionally, they over-acknowledge fear and frequently hallucinate facts, sources, or figures, making their prompt-based use in practical applications problematic.

SurfaceAI: Automated creation of cohesive road surface quality datasets based on open street-level imagery

Sep 27, 2024

Abstract:This paper introduces SurfaceAI, a pipeline designed to generate comprehensive georeferenced datasets on road surface type and quality from openly available street-level imagery. The motivation stems from the significant impact of road unevenness on the safety and comfort of traffic participants, especially vulnerable road users, emphasizing the need for detailed road surface data in infrastructure modeling and analysis. SurfaceAI addresses this gap by leveraging crowdsourced Mapillary data to train models that predict the type and quality of road surfaces visible in street-level images, which are then aggregated to provide cohesive information on entire road segment conditions.

StreetSurfaceVis: a dataset of crowdsourced street-level imagery with semi-automated annotations of road surface type and quality

Jul 31, 2024

Abstract:Road unevenness significantly impacts the safety and comfort of various traffic participants, especially vulnerable road users such as cyclists and wheelchair users. This paper introduces StreetSurfaceVis, a novel dataset comprising 9,122 street-level images collected from a crowdsourcing platform and manually annotated by road surface type and quality. The dataset is intended to train models for comprehensive surface assessments of road networks. Existing open datasets are constrained by limited geospatial coverage and camera setups, typically excluding cycleways and footways. By crafting a heterogeneous dataset, we aim to fill this gap and enable robust models that maintain high accuracy across diverse image sources. However, the frequency distribution of road surface types and qualities is highly imbalanced. We address the challenge of ensuring sufficient images per class while reducing manual annotation by proposing a sampling strategy that incorporates various external label prediction resources. More precisely, we estimate the impact of (1) enriching the image data with OpenStreetMap tags, (2) iterative training and application of a custom surface type classification model, (3) amplifying underrepresented classes through prompt-based classification with GPT-4o or similarity search using image embeddings. We show that utilizing a combination of these strategies effectively reduces manual annotation workload while ensuring sufficient class representation.

Detection of Conspiracy Theories Beyond Keyword Bias in German-Language Telegram Using Large Language Models

Apr 27, 2024

Abstract:The automated detection of conspiracy theories online typically relies on supervised learning. However, creating respective training data requires expertise, time and mental resilience, given the often harmful content. Moreover, available datasets are predominantly in English and often keyword-based, introducing a token-level bias into the models. Our work addresses the task of detecting conspiracy theories in German Telegram messages. We compare the performance of supervised fine-tuning approaches using BERT-like models with prompt-based approaches using Llama2, GPT-3.5, and GPT-4 which require little or no additional training data. We use a dataset of $\sim\!\! 4,000$ messages collected during the COVID-19 pandemic, without the use of keyword filters. Our findings demonstrate that both approaches can be leveraged effectively: For supervised fine-tuning, we report an F1 score of $\sim\!\! 0.8$ for the positive class, making our model comparable to recent models trained on keyword-focused English corpora. We demonstrate our model's adaptability to intra-domain temporal shifts, achieving F1 scores of $\sim\!\! 0.7$. Among prompting variants, the best model is GPT-4, achieving an F1 score of $\sim\!\! 0.8$ for the positive class in a zero-shot setting and equipped with a custom conspiracy theory definition.

How toxic is antisemitism? Potentials and limitations of automated toxicity scoring for antisemitic online content

Oct 05, 2023

Abstract:The Perspective API, a popular text toxicity assessment service by Google and Jigsaw, has found wide adoption in several application areas, notably content moderation, monitoring, and social media research. We examine its potentials and limitations for the detection of antisemitic online content that, by definition, falls under the toxicity umbrella term. Using a manually annotated German-language dataset comprising around 3,600 posts from Telegram and Twitter, we explore how toxic antisemitic texts are rated to be and how the toxicity scores differ regarding different subforms of antisemitism and the stance expressed in the texts. We show that, on a basic level, Perspective API recognizes antisemitic content as toxic, but shows critical weaknesses with respect to non-explicit forms of antisemitism and texts taking a critical stance towards it. Furthermore, using simple text manipulations, we demonstrate that the use of widespread antisemitic codes can substantially reduce API scores, making it rather easy to bypass content moderation based on the service's results.

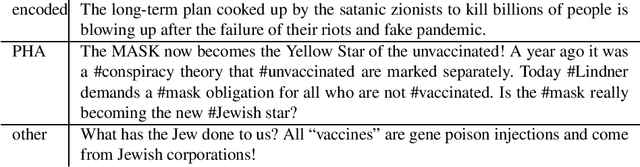

Codes, Patterns and Shapes of Contemporary Online Antisemitism and Conspiracy Narratives -- an Annotation Guide and Labeled German-Language Dataset in the Context of COVID-19

Oct 13, 2022

Abstract:Over the course of the COVID-19 pandemic, existing conspiracy theories were refreshed and new ones were created, often interwoven with antisemitic narratives, stereotypes and codes. The sheer volume of antisemitic and conspiracy theory content on the Internet makes data-driven algorithmic approaches essential for anti-discrimination organizations and researchers alike. However, the manifestation and dissemination of these two interrelated phenomena is still quite under-researched in scholarly empirical research of large text corpora. Algorithmic approaches for the detection and classification of specific contents usually require labeled datasets, annotated based on conceptually sound guidelines. While there is a growing number of datasets for the more general phenomenon of hate speech, the development of corpora and annotation guidelines for antisemitic and conspiracy content is still in its infancy, especially for languages other than English. We contribute to closing this gap by developing an annotation guide for antisemitic and conspiracy theory online content in the context of the COVID-19 pandemic. We provide working definitions, including specific forms of antisemitism such as encoded and post-Holocaust antisemitism. We use these to annotate a German-language dataset consisting of ~3,700 Telegram messages sent between 03/2020 and 12/2021.