Haotong Liang

Real-time experiment-theory closed-loop interaction for autonomous materials science

Oct 22, 2024

Abstract:Iterative cycles of theoretical prediction and experimental validation are the cornerstone of the modern scientific method. However, the proverbial "closing of the loop" in experiment-theory cycles is in practice usually ad hoc, often inherently difficult, or impractical to repeat on a systematic basis, beset by the scale or the time constraints of computation or the phenomena under study. Here, we demonstrate the Autonomous MAterials Search Engine (AMASE), where we enlist robot science to perform self-driving continuous cyclical interaction of experiments and computational predictions for materials exploration. In particular, we have applied the AMASE formalism to the rapid mapping of a temperature-composition phase diagram, a fundamental task for the search and discovery of new materials. Thermal processing and experimental determination of compositional phase boundaries in thin films are autonomously interspersed with real-time updating of the phase diagram prediction through the minimization of Gibbs free energies. AMASE was able to accurately determine the eutectic phase diagram of the Sn-Bi binary thin-film system on the fly from a self-guided campaign covering just a small fraction of the entire composition-temperature phase space, translating to a 6-fold reduction in the number of necessary experiments. This study demonstrates for the first time the possibility of real-time, autonomous, and iterative interactions of experiments and theory carried out without any human intervention.
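To make the idea of predicting a eutectic phase diagram from thermodynamics concrete, here is a minimal sketch. It is not the model used in AMASE; it assumes an ideal liquid solution with no solid solubility, in which case equating chemical potentials (the equilibrium condition that follows from Gibbs free energy minimization) gives the liquidus relation ln x = -(ΔH_fus/R)(1/T - 1/T_m) for each component. The function names and the melting points and heats of fusion for Sn and Bi are illustrative assumptions (approximate handbook values), so the result is only a rough estimate.

```python
import math

R = 8.314  # gas constant, J/(mol K)

def liquidus_fraction(T, T_melt, dH_fus):
    """Ideal-solution mole fraction of a component in the liquid that
    coexists with its pure solid at temperature T (in kelvin)."""
    return math.exp(-(dH_fus / R) * (1.0 / T - 1.0 / T_melt))

def ideal_eutectic(T_melt_a, dH_a, T_melt_b, dH_b, T_low=200.0):
    """Find the temperature where the two liquidus curves meet, i.e. where
    the two liquid mole fractions sum to one. Returns (T_e, x_b)."""
    def excess(T):
        return (liquidus_fraction(T, T_melt_a, dH_a)
                + liquidus_fraction(T, T_melt_b, dH_b) - 1.0)

    lo, hi = T_low, min(T_melt_a, T_melt_b)
    for _ in range(100):  # bisection; excess(T) increases with T
        mid = 0.5 * (lo + hi)
        if excess(mid) > 0.0:
            hi = mid
        else:
            lo = mid
    T_e = 0.5 * (lo + hi)
    return T_e, liquidus_fraction(T_e, T_melt_b, dH_b)

# Assumed, approximate inputs: melting points in K, heats of fusion in J/mol.
SN = (505.08, 7030.0)
BI = (544.55, 11300.0)

T_e, x_bi = ideal_eutectic(SN[0], SN[1], BI[0], BI[1])
print(f"ideal-model eutectic: T = {T_e:.1f} K, x_Bi = {x_bi:.2f}")
```

Because the ideal model ignores non-ideal mixing and solid solubility, its estimate should not be expected to match a measured eutectic point closely; refining such predictions against measurements is the role the abstract assigns to the experiment-theory loop.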

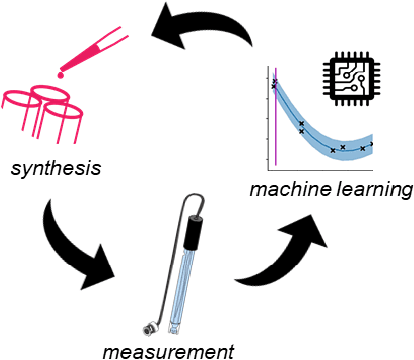

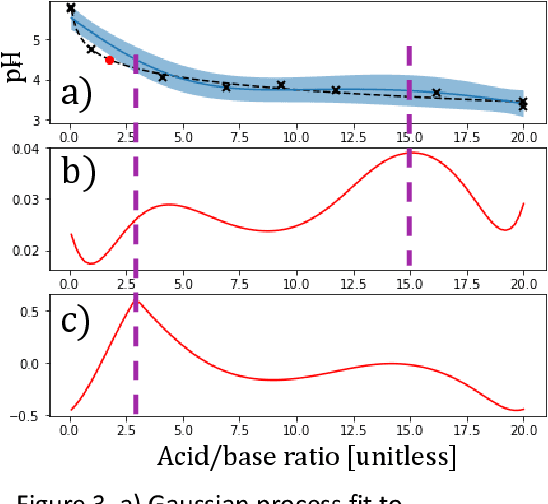

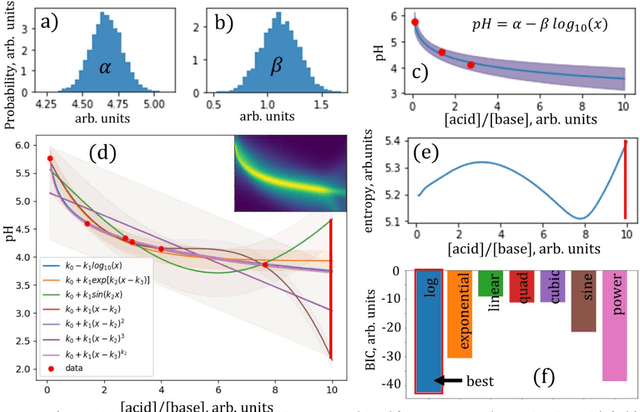

A Low-Cost Robot Science Kit for Education with Symbolic Regression for Hypothesis Discovery and Validation

Apr 13, 2022

Abstract:The next generation of physical science involves robot scientists - autonomous physical science systems capable of experimental design, execution, and analysis in a closed loop. Such systems have shown real-world success for scientific exploration and discovery, including the first discovery of a best-in-class material. To build and use these systems, the next generation workforce requires expertise in diverse areas including ML, control systems, measurement science, materials synthesis, decision theory, among others. However, education is lagging. Educators need a low-cost, easy-to-use platform to teach the required skills. Industry can also use such a platform for developing and evaluating autonomous physical science methodologies. We present the next generation in science education, a kit for building a low-cost autonomous scientist. The kit was used during two courses at the University of Maryland to teach undergraduate and graduate students autonomous physical science. We discuss its use in the course and its greater capability to teach the dual tasks of autonomous model exploration, optimization, and determination, with an example of autonomous experimental "discovery" of the Henderson-Hasselbalch equation.
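As an illustration of the relationship named at the end of this abstract, the Henderson-Hasselbalch equation states pH = pKa + log10([A-]/[HA]). The sketch below is not the kit's symbolic regression; it uses a plain least-squares line fit as a much simpler stand-in, applied to synthetic data generated from the equation itself. The function names and the pKa value are assumptions chosen for illustration.

```python
import math

def henderson_hasselbalch(pka, base_conc, acid_conc):
    """pH of a buffer from its pKa and the conjugate base / acid concentrations."""
    if base_conc <= 0 or acid_conc <= 0:
        raise ValueError("concentrations must be positive")
    return pka + math.log10(base_conc / acid_conc)

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

PKA = 4.76  # illustrative value, roughly that of acetic acid
ratios = [0.1, 0.3, 1.0, 3.0, 10.0]  # synthetic [A-]/[HA] ratios

xs = [math.log10(r) for r in ratios]
ys = [henderson_hasselbalch(PKA, r, 1.0) for r in ratios]

# If pH is linear in log10 of the ratio, the slope is 1 and the intercept is pKa.
slope, intercept = fit_line(xs, ys)
print(f"slope = {slope:.3f}, intercept = {intercept:.3f}")
```

With measured rather than synthetic pH values, the same fit would return a slope near 1 and an intercept near the buffer's pKa only to within experimental noise.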

Benchmarking Active Learning Strategies for Materials Optimization and Discovery

Apr 12, 2022

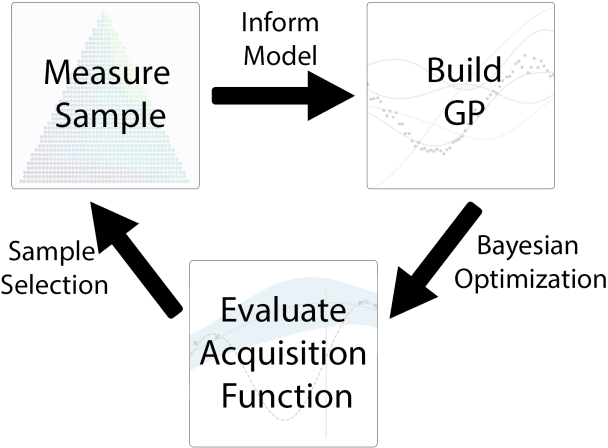

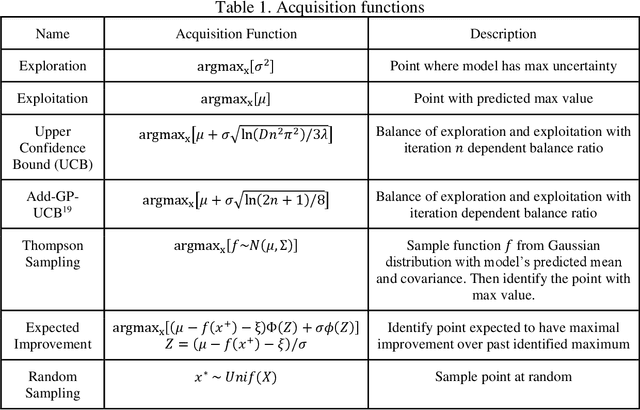

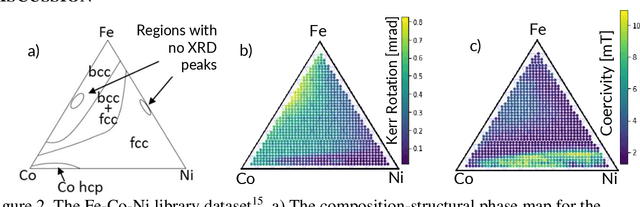

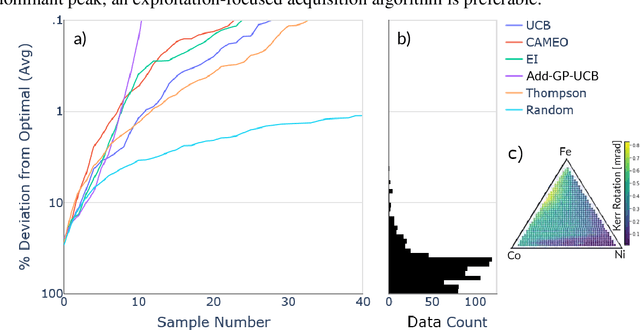

Abstract:Autonomous physical science is revolutionizing materials science. In these systems, machine learning controls experiment design, execution, and analysis in a closed loop. Active learning, the machine learning field of optimal experiment design, selects each subsequent experiment to maximize knowledge toward the user goal. Autonomous system performance can be further improved with implementation of scientific machine learning, also known as inductive bias-engineered artificial intelligence, which folds prior knowledge of physical laws (e.g., Gibbs phase rule) into the algorithm. As the number, diversity, and uses for active learning strategies grow, there is an associated growing necessity for real-world reference datasets to benchmark strategies. We present a reference dataset and demonstrate its use to benchmark active learning strategies in the form of various acquisition functions. Active learning strategies are used to rapidly identify materials with optimal physical properties within a ternary materials system. The data is from an actual Fe-Co-Ni thin-film library and includes previously acquired experimental data for materials compositions, X-ray diffraction patterns, and two functional properties of magnetic coercivity and the Kerr rotation. Popular active learning methods along with a recent scientific active learning method are benchmarked for their materials optimization performance. We discuss the relationship between algorithm performance, materials search space complexity, and the incorporation of prior knowledge.
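An acquisition function, as mentioned in this abstract, scores each candidate experiment from a surrogate model's predicted mean and uncertainty, and the next experiment is the candidate with the highest score. The sketch below shows two widely used choices, upper confidence bound and expected improvement, for a maximization goal. It does not reproduce the benchmarked strategies or the Fe-Co-Ni dataset; the function names and the prediction values are hypothetical.

```python
import math

def ucb(mean, std, kappa=2.0):
    """Upper confidence bound: larger kappa favors uncertain candidates."""
    return mean + kappa * std

def expected_improvement(mean, std, best):
    """Expected improvement over the best value observed so far,
    assuming a Gaussian predictive distribution."""
    if std == 0.0:
        return max(mean - best, 0.0)
    z = (mean - best) / std
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return (mean - best) * cdf + std * pdf

def select_next(predictions, acquisition):
    """Index of the candidate with the highest acquisition score."""
    return max(range(len(predictions)),
               key=lambda i: acquisition(*predictions[i]))

# Hypothetical (mean, std) predictions for three untested compositions.
preds = [(0.50, 0.05), (0.45, 0.30), (0.60, 0.01)]

i_ucb = select_next(preds, ucb)
i_ei = select_next(preds, lambda m, s: expected_improvement(m, s, best=0.55))
i_greedy = select_next(preds, lambda m, s: ucb(m, s, kappa=0.0))
print(i_ucb, i_ei, i_greedy)
```

In this toy pool both uncertainty-aware scores pick the high-variance second candidate, while the purely greedy choice (kappa set to 0) picks the candidate with the highest predicted mean, which is the exploration versus exploitation trade-off that benchmarks of this kind compare.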

CRYSPNet: Crystal Structure Predictions via Neural Network

Mar 31, 2020

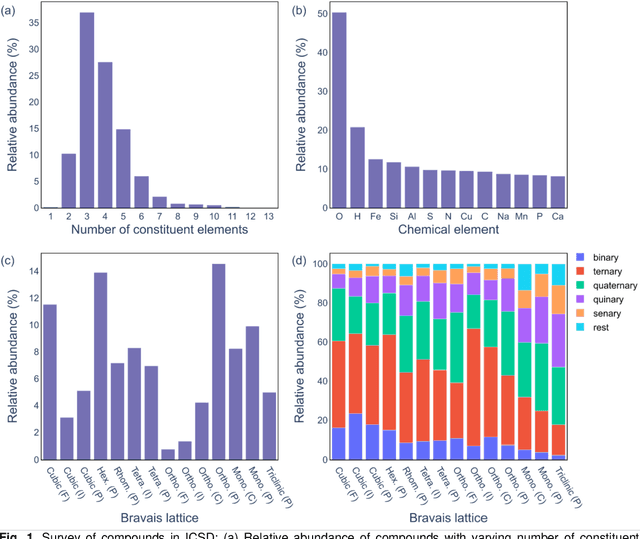

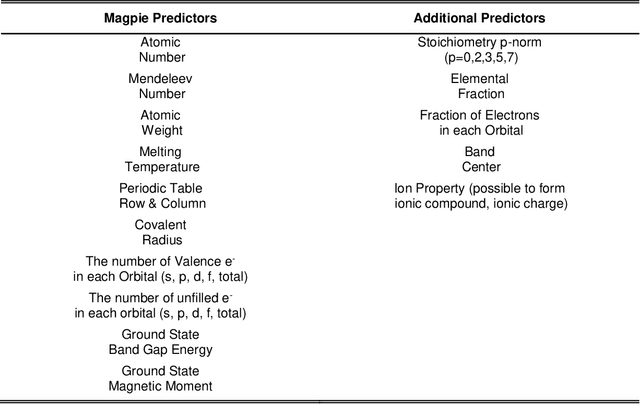

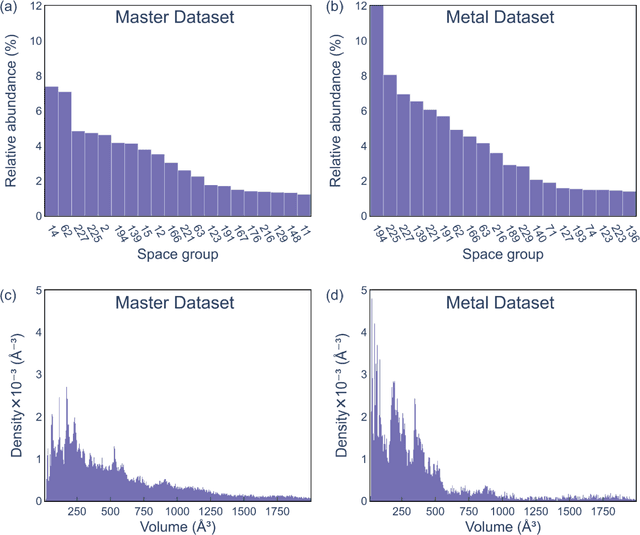

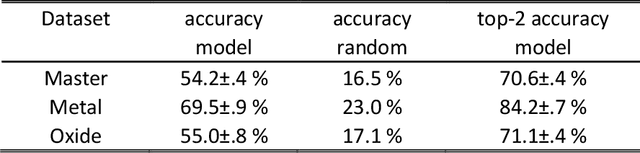

Abstract:Structure is the most basic and important property of crystalline solids; it determines directly or indirectly most materials characteristics. However, predicting the crystal structure of solids remains a formidable and not fully solved problem. Standard theoretical tools for this task are computationally expensive and at times inaccurate. Here we present an alternative approach utilizing machine learning for crystal structure prediction. We developed a tool called Crystal Structure Prediction Network (CRYSPNet) that can predict the Bravais lattice, space group, and lattice parameters of an inorganic material based only on its chemical composition. CRYSPNet consists of a series of neural network models, using as inputs predictors aggregating the properties of the elements constituting the compound. It was trained and validated on more than 100,000 entries from the Inorganic Crystal Structure Database. The tool demonstrates robust predictive capability and outperforms alternative strategies by a large margin. Made available to the public (at https://github.com/AuroraLHT/cryspnet), it can be used either as an independent prediction engine or as a method to generate candidate structures for further computational and/or experimental validation.
