Guillaume Drion

Montefiore Institute, University of Liège, Liège, Belgium

Parallelizable memory recurrent units

Jan 14, 2026

Abstract:With the emergence of massively parallel processing units, parallelization has become a desirable property for new sequence models. The ability to parallelize the processing of sequences with respect to the sequence length during training is one of the main factors behind the rise of the Transformer architecture. However, Transformers lack efficiency at sequence generation, as they need to reprocess all past timesteps at every generation step. Recently, state-space models (SSMs) emerged as a more efficient alternative. These new kinds of recurrent neural networks (RNNs) keep the efficient update of RNNs while gaining parallelization by getting rid of nonlinear dynamics (or recurrence). SSMs can reach state-of-the-art performance through the efficient training of potentially very large networks, but still suffer from limited representation capabilities. In particular, SSMs cannot exhibit persistent memory, or the capacity to retain information for an infinite duration, because of their monostability. In this paper, we introduce a new family of RNNs, the memory recurrent units (MRUs), that combine the persistent memory capabilities of nonlinear RNNs with the parallelizable computations of SSMs. These units leverage multistability as a source of persistent memory, while getting rid of transient dynamics for efficient computations. We then derive a specific implementation as proof-of-concept: the bistable memory recurrent unit (BMRU). This new RNN is compatible with the parallel scan algorithm. We show that BMRU achieves good results in tasks with long-term dependencies, and can be combined with state-space models to create hybrid networks that are parallelizable and have transient dynamics as well as persistent memory.
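To make the parallel scan mentioned in this abstract concrete, here is a minimal NumPy sketch for a generic linear recurrence h_t = a_t * h_{t-1} + b_t, the kind of update SSMs rely on. It is an illustration only and not the BMRU update, which the abstract does not specify. The function names and the scalar recurrence are assumptions chosen for the example.

```python
import numpy as np

def sequential_recurrence(a, b, h0=0.0):
    """Reference loop: h_t = a_t * h_{t-1} + b_t, one step at a time."""
    h = h0
    out = np.empty(len(b), dtype=float)
    for t in range(len(b)):
        h = a[t] * h + b[t]
        out[t] = h
    return out

def scan_recurrence(a, b, h0=0.0):
    """Same recurrence computed with a Hillis-Steele inclusive scan.

    Each element (A, B) stands for the affine map h -> A * h + B.
    Composing an earlier map (A1, B1) with a later one (A2, B2) gives
    (A2 * A1, A2 * B1 + B2), which is associative, so all prefixes can
    be combined in about log2(n) vectorized passes.
    """
    A = np.asarray(a, dtype=float).copy()
    B = np.asarray(b, dtype=float).copy()
    B[0] += A[0] * h0              # fold the initial state into step 0
    n = len(B)
    shift = 1
    while shift < n:
        new_A, new_B = A.copy(), B.copy()
        # combine the window ending at t - shift with the one ending at t
        new_B[shift:] = A[shift:] * B[:-shift] + B[shift:]
        new_A[shift:] = A[shift:] * A[:-shift]
        A, B = new_A, new_B
        shift *= 2
    return B                       # B[t] now equals h_t

rng = np.random.default_rng(0)
a = rng.uniform(0.1, 0.9, size=37)
b = rng.normal(size=37)
print(np.allclose(sequential_recurrence(a, b), scan_recurrence(a, b)))
```

Each pass of the `while` loop is a set of independent element-wise operations, which is what allows the work to be spread across a parallel device. A nonlinear update such as h_t = tanh(a_t * h_{t-1} + b_t) does not compose this way, which is the trade-off the abstract describes.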

Fast reconstruction of degenerate populations of conductance-based neuron models from spike times

Sep 16, 2025

Abstract:Neurons communicate through spikes, and spike timing is a crucial part of neuronal processing. Spike times can be recorded experimentally both intracellularly and extracellularly, and are the main output of state-of-the-art neural probes. On the other hand, neuronal activity is controlled at the molecular level by the currents generated by many different transmembrane proteins called ion channels. Connecting spike timing to ion channel composition remains an arduous task to date. To address this challenge, we developed a method that combines deep learning with a theoretical tool called Dynamic Input Conductances (DICs), which reduce the complexity of ion channel interactions into three interpretable components describing how neurons spike. Our approach uses deep learning to infer DICs directly from spike times and then generates populations of "twin" neuron models that replicate the observed activity while capturing natural variability in membrane channel composition. The method is fast, accurate, and works using only spike recordings. We also provide open-source software with a graphical interface, making it accessible to researchers without programming expertise.

Spike-based computation using classical recurrent neural networks

Jun 06, 2023

Abstract:Spiking neural networks are a type of artificial neural network in which communication between neurons is only made of events, also called spikes. This property allows neural networks to make asynchronous and sparse computations and therefore to drastically decrease energy consumption when run on specialized hardware. However, training such networks is known to be difficult, mainly due to the non-differentiability of the spike activation, which prevents the use of classical backpropagation. This is because state-of-the-art spiking neural networks are usually derived from biologically-inspired neuron models, to which machine learning methods are applied for training. Nowadays, research about spiking neural networks focuses on the design of training algorithms whose goal is to obtain networks that compete with their non-spiking version on specific tasks. In this paper, we attempt the symmetrical approach: we modify the dynamics of a well-known, easily trainable type of recurrent neural network to make it event-based. This new RNN cell, called the Spiking Recurrent Cell, therefore communicates using events, i.e. spikes, while being completely differentiable. Vanilla backpropagation can thus be used to train any network made of such RNN cells. We show that this new network can achieve performance comparable to other types of spiking networks on the MNIST benchmark and its variants, Fashion-MNIST and Neuromorphic-MNIST. Moreover, we show that this new cell makes the training of deep spiking networks achievable.

Warming-up recurrent neural networks to maximize reachable multi-stability greatly improves learning

Jun 02, 2021

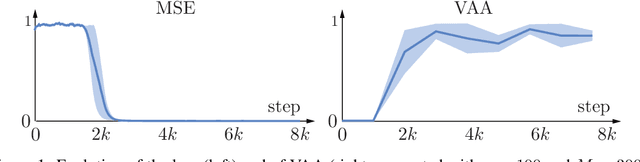

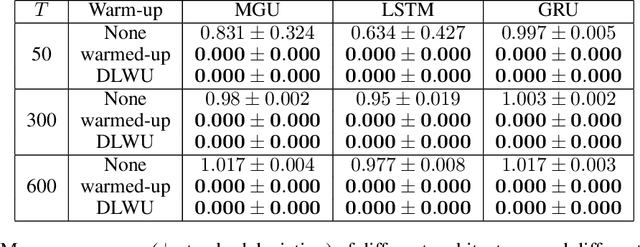

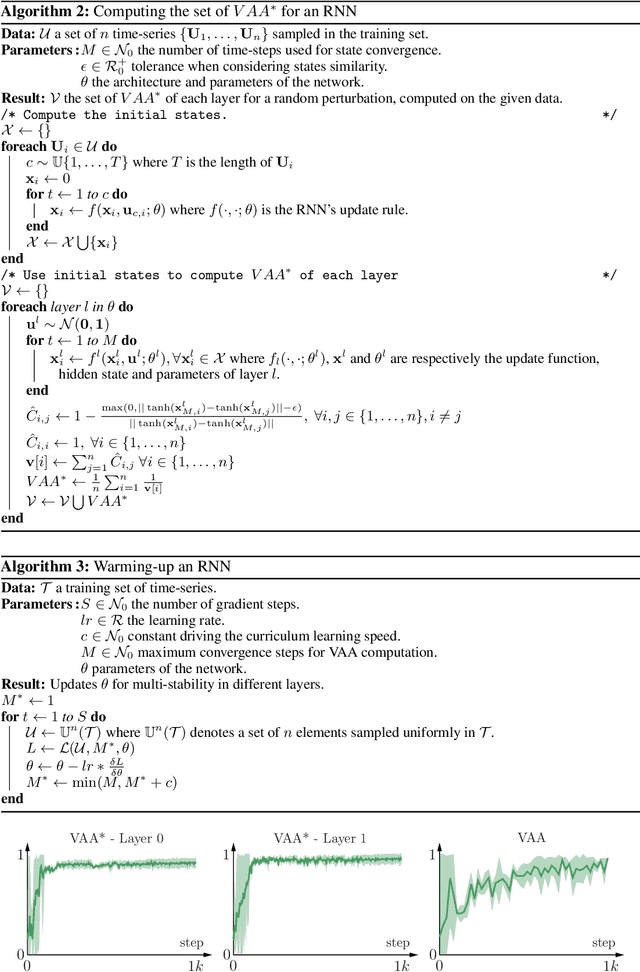

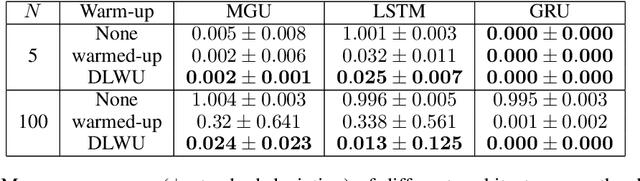

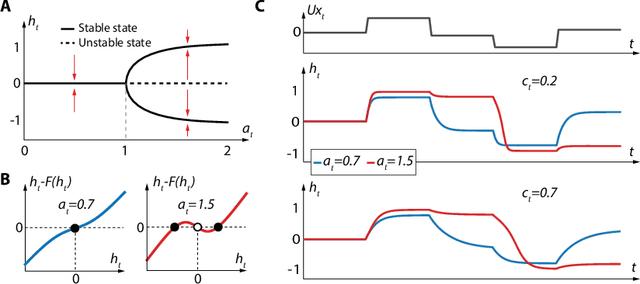

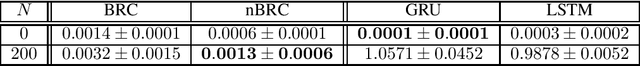

Abstract:Training recurrent neural networks is known to be difficult when time dependencies become long. Consequently, training standard gated cells such as gated recurrent units and long short-term memory on benchmarks where long-term memory is required remains an arduous task. In this work, we propose a general way to initialize any recurrent network connectivity through a process called "warm-up" to improve its capability to learn arbitrarily long time dependencies. This initialization process is designed to maximize network reachable multi-stability, i.e. the number of attractors within the network that can be reached through relevant input trajectories. Warming-up is performed before training, using stochastic gradient descent on a specifically designed loss. We show that warming-up greatly improves recurrent neural network performance on long-term memory benchmarks for multiple recurrent cell types, but can sometimes impede precision. We therefore introduce a parallel recurrent network structure with partial warm-up that is shown to greatly improve learning on long time-series while maintaining high levels of precision. This approach provides a general framework for improving learning abilities of any recurrent cell type when long-term memory is required.

A bio-inspired bistable recurrent cell allows for long-lasting memory

Jun 09, 2020

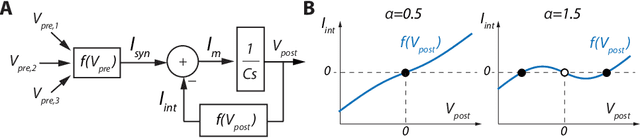

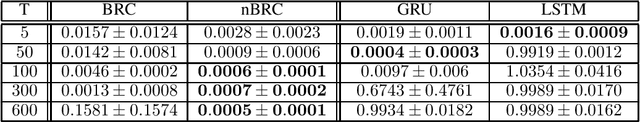

Abstract:Recurrent neural networks (RNNs) provide state-of-the-art performance in a wide variety of tasks that require memory. This performance can often be achieved thanks to gated recurrent cells such as gated recurrent units (GRU) and long short-term memory (LSTM). Standard gated cells share a layer internal state to store information at the network level, and long-term memory is shaped by network-wide recurrent connection weights. Biological neurons, on the other hand, are capable of holding information at the cellular level for an arbitrarily long amount of time through a process called bistability. Through bistability, cells can stabilize to different stable states depending on their own past state and inputs, which permits the durable storing of past information in neuron state. In this work, we take inspiration from biological neuron bistability to embed RNNs with long-lasting memory at the cellular level. This leads to the introduction of a new bistable biologically-inspired recurrent cell that is shown to strongly improve RNN performance on time-series which require very long memory, despite using only cellular connections (all recurrent connections are from neurons to themselves, i.e. a neuron state is not influenced by the state of other neurons). Furthermore, equipping this cell with recurrent neuromodulation makes it possible to link it to standard GRU cells, taking a step towards the biological plausibility of GRU.
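As a rough illustration of how bistability at the level of a single unit can store information, the sketch below simulates a scalar unit h_{t+1} = tanh(a * h_t + x_t) whose only recurrent connection is to itself. This toy update, the function name `run_unit`, and the parameter values are assumptions made for the example; it is not the recurrent cell introduced in the paper. For a > 1 the unit has two stable states and remembers the sign of a past input pulse, while for a < 1 it has a single stable state at zero and forgets.

```python
import numpy as np

def run_unit(a, inputs, h0=0.0):
    """Iterate h <- tanh(a * h + x) over a sequence of scalar inputs."""
    h = h0
    for x in inputs:
        h = np.tanh(a * h + x)
    return float(h)

# A short pulse followed by a long stretch with no input at all.
positive_pulse = [1.0] * 5 + [0.0] * 200
negative_pulse = [-1.0] * 5 + [0.0] * 200

# a = 2.0: self-feedback is strong enough for two stable states (bistable).
h_pos = run_unit(2.0, positive_pulse)
h_neg = run_unit(2.0, negative_pulse)

# a = 0.5: a single stable state at 0 (monostable), so the pulse fades away.
h_forget = run_unit(0.5, positive_pulse)

print(h_pos > 0.9, h_neg < -0.9, abs(h_forget) < 1e-6)
```

With the strong self-connection, the state settles near one of two fixed points of h = tanh(2h) and stays there for as long as the input remains zero, which is the kind of cellular memory the abstract refers to.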

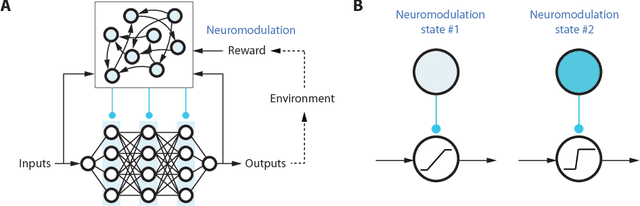

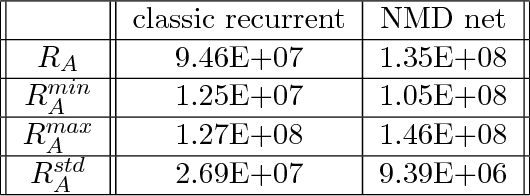

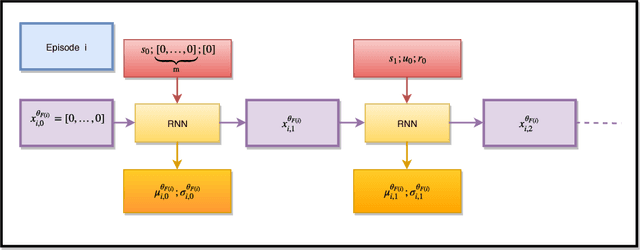

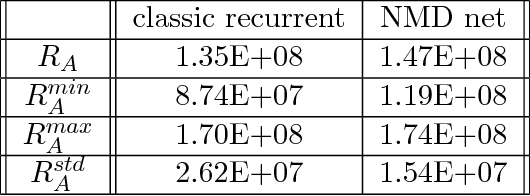

Introducing Neuromodulation in Deep Neural Networks to Learn Adaptive Behaviours

Jan 02, 2019

Abstract:In this paper, we propose a new deep neural network architecture, called NMD net, that has been specifically designed to learn adaptive behaviours. This architecture exploits a biological mechanism called neuromodulation that sustains adaptation in biological organisms. It has been introduced in a deep-reinforcement learning architecture for interacting with Markov decision processes in a meta-reinforcement learning setting where the action space is continuous. The deep-reinforcement learning architecture is trained using an advantage actor-critic algorithm. Experiments are carried out on several test problems. Results show that the neural network architecture with neuromodulation provides significantly better results than state-of-the-art recurrent neural networks which do not exploit this mechanism.
