Gregory Schroeder

ClearLines - Camera Calibration from Straight Lines

May 01, 2025

Abstract: The problem of calibration from straight lines is fundamental in geometric computer vision, with well-established theoretical foundations. However, its practical applicability remains limited, particularly in real-world outdoor scenarios. These environments pose significant challenges due to diverse and cluttered scenes, interrupted reprojections of straight 3D lines, and varying lighting conditions, making the task notoriously difficult. Furthermore, the field lacks a dedicated dataset to encourage the development of corresponding detection algorithms. In this study, we present a small dataset named "ClearLines" and, by detailing its creation process, provide practical insights that can serve as a guide for developing and refining straight 3D line detection algorithms.

Shadow Erosion and Nighttime Adaptability for Camera-Based Automated Driving Applications

Apr 11, 2025

Abstract: Enhancement of images from RGB cameras is of particular interest due to its wide and ever-increasing range of applications, such as medical imaging, satellite imaging, and automated driving. In autonomous driving, various techniques are used to enhance image quality under challenging lighting conditions. These include artificial augmentation to improve visibility in poor nighttime conditions, illumination-invariant imaging to reduce the impact of lighting variations, and shadow mitigation to ensure consistent image clarity in bright daylight. This paper proposes a pipeline for Shadow Erosion and Nighttime Adaptability in images for automated driving applications while preserving color and texture details. The Shadow Erosion and Nighttime Adaptability pipeline is compared to the widely used CLAHE technique and evaluated based on illumination uniformity and visual perception quality metrics. The results also demonstrate a significant improvement over CLAHE in enhancing a YOLO-based drivable area segmentation algorithm.

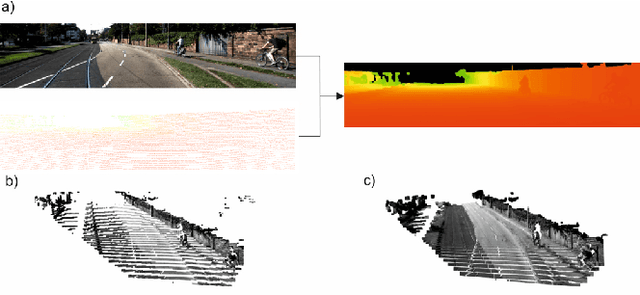

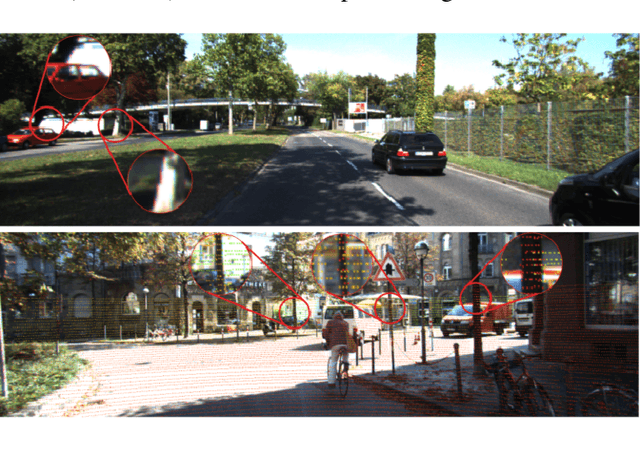

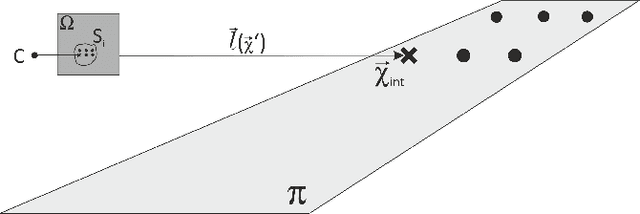

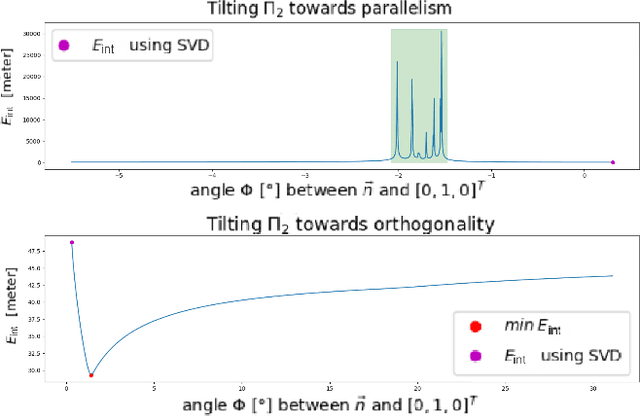

Deterministic Guided LiDAR Depth Map Completion

Jun 14, 2021

Abstract: Accurate dense depth estimation is crucial for autonomous vehicles to analyze their environment. This paper presents a non-deep-learning approach to densify a sparse LiDAR-based depth map using a guidance RGB image. To achieve this goal, the RGB image is first cleared of most of the camera-LiDAR misalignment artifacts. Afterward, it is over-segmented and a plane is approximated for each superpixel. If a superpixel is not well represented by a plane, a plane is approximated for a convex hull of most of the inliers. Finally, the pinhole camera model is used for the interpolation process and the remaining areas are interpolated. The work is evaluated on the KITTI depth completion benchmark, which validates the proposed approach and shows that it outperforms the state-of-the-art non-deep-learning methods as well as several deep-learning-based methods.
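The plane-per-superpixel step described above can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes an ideal, distortion-free pinhole camera and uses the fact that a 3D plane seen through such a camera has an inverse depth that is linear in pixel coordinates, 1/z = a*u + b*v + c. The function names (`fit_inverse_depth_plane`, `interpolate_depth`) are hypothetical, and superpixel segmentation, inlier selection, and the convex-hull fallback are left out.

```python
def _solve3(matrix, rhs):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    a = [matrix[i][:] + [rhs[i]] for i in range(3)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("degenerate sample layout (e.g. collinear pixels)")
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, 3):
            factor = a[r][col] / a[col][col]
            for k in range(col, 4):
                a[r][k] -= factor * a[col][k]
    x = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):
        tail = sum(a[i][k] * x[k] for k in range(i + 1, 3))
        x[i] = (a[i][3] - tail) / a[i][i]
    return tuple(x)


def fit_inverse_depth_plane(samples):
    """Least-squares fit of 1/z = a*u + b*v + c.

    samples: iterable of (u, v, z) sparse depth measurements with z > 0,
    assumed here to belong to a single superpixel.
    """
    ata = [[0.0] * 3 for _ in range(3)]
    aty = [0.0] * 3
    for u, v, z in samples:
        row = (float(u), float(v), 1.0)
        inv_z = 1.0 / z
        for i in range(3):
            aty[i] += row[i] * inv_z
            for j in range(3):
                ata[i][j] += row[i] * row[j]
    return _solve3(ata, aty)


def interpolate_depth(coeffs, u, v):
    """Depth of the fitted plane at pixel (u, v); None if the plane is not in front of the camera there."""
    a, b, c = coeffs
    inv_z = a * u + b * v + c
    return 1.0 / inv_z if inv_z > 0 else None


# Synthetic sparse samples lying exactly on one plane (made-up values).
true_coeffs = (1e-4, 2e-4, 0.05)
pixels = [(0, 0), (10, 0), (0, 10), (10, 10), (5, 3)]
samples = [(u, v, 1.0 / (true_coeffs[0] * u + true_coeffs[1] * v + true_coeffs[2]))
           for u, v in pixels]
coeffs = fit_inverse_depth_plane(samples)
dense_value = interpolate_depth(coeffs, 4, 7)
```

Fitting in inverse-depth space keeps the problem linear and avoids needing the intrinsics explicitly; a real pipeline would additionally need a robust estimator to reject samples that do not belong to the plane.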
