George Seif

Large Receptive Field Networks for High-Scale Image Super-Resolution

Apr 22, 2018

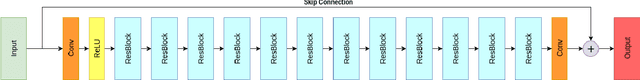

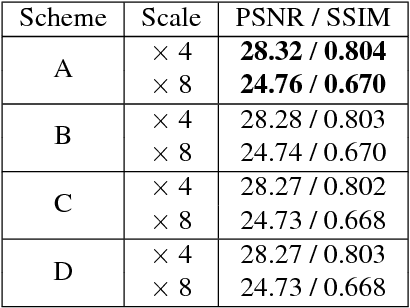

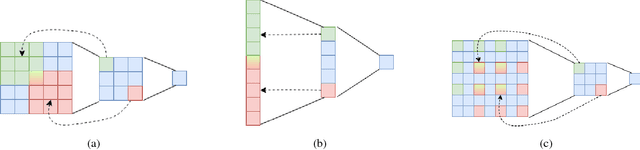

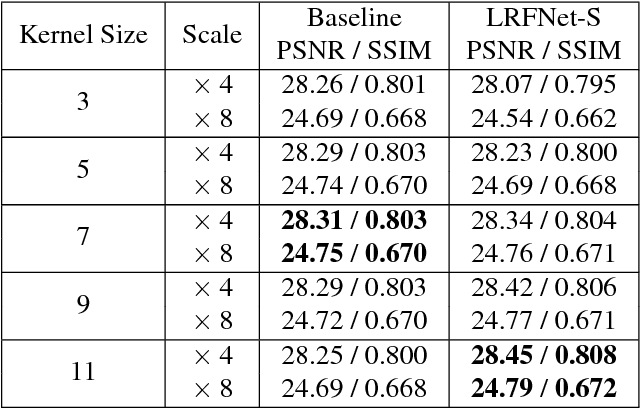

Abstract: Convolutional Neural Networks have been the backbone of recent rapid progress in Single-Image Super-Resolution. However, existing networks are very deep and have many parameters, which gives them a large memory footprint and makes them challenging to train. We propose Large Receptive Field Networks, which directly expand the receptive field of Super-Resolution networks without increasing depth or parameter count. In particular, we use two methods to expand the network receptive field: 1-D separable kernels and atrous convolutions. We conduct a range of experiments to study how various arrangement schemes of the 1-D separable kernels and atrous convolutions perform in terms of accuracy (PSNR / SSIM), parameter count, and speed, focusing on the more challenging high upscaling factors. Extensive benchmark evaluations demonstrate the effectiveness of our approach.
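To make the trade-off in the abstract concrete, the following minimal sketch compares receptive field and weight count for plain, atrous, and 1-D separable convolutions. The channel width (64), the depth (4 layers), and the helper names `receptive_field` and `conv2d_params` are illustrative assumptions, not the configuration used in the paper; the formulas are the standard ones for stride-1 convolutions without bias terms.

```python
def receptive_field(layers):
    """Receptive field along one axis for a stack of stride-1 convolutions.

    layers: list of (kernel_size, dilation) pairs, one per layer.
    Each layer widens the field by (kernel_size - 1) * dilation.
    """
    rf = 1
    for kernel_size, dilation in layers:
        rf += (kernel_size - 1) * dilation
    return rf


def conv2d_params(k_h, k_w, c_in, c_out):
    """Weight count of a 2-D convolution, ignoring bias terms."""
    return k_h * k_w * c_in * c_out


CHANNELS = 64  # assumed width, for illustration only
DEPTH = 4      # assumed number of stacked layers, for illustration only

# Plain 3x3 layers versus atrous 3x3 layers with dilation 2:
# same weights per layer, larger receptive field.
rf_plain = receptive_field([(3, 1)] * DEPTH)
rf_atrous = receptive_field([(3, 2)] * DEPTH)
params_3x3 = conv2d_params(3, 3, CHANNELS, CHANNELS)

# A 5x1 kernel followed by a 1x5 kernel covers a 5x5 window
# with far fewer weights than a full 5x5 kernel.
params_sep5 = (conv2d_params(5, 1, CHANNELS, CHANNELS)
               + conv2d_params(1, 5, CHANNELS, CHANNELS))
params_full5 = conv2d_params(5, 5, CHANNELS, CHANNELS)
rf_sep5 = receptive_field([(5, 1)] * DEPTH)

print("plain 3x3  : rf =", rf_plain, " params/layer =", params_3x3)
print("atrous 3x3 : rf =", rf_atrous, " params/layer =", params_3x3)
print("sep 5x1+1x5: rf =", rf_sep5, " params/pair  =", params_sep5)
print("full 5x5   : rf =", rf_sep5, " params/layer =", params_full5)
```

Under these assumptions, dilation doubles the growth of the receptive field per layer at no extra weight cost, and a separable 5x1 + 1x5 pair reaches the same spatial extent as a full 5x5 kernel with 40% of its weights.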
