Ganesh Jagadeesan

Characterizing Mamba's Selective Memory using Auto-Encoders

Dec 17, 2025

Abstract: State space models (SSMs) are a promising alternative to transformers for language modeling because they use fixed memory during inference. However, this fixed memory usage requires some information loss in the hidden state when processing long sequences. While prior work has studied the sequence length at which this information loss occurs, it does not characterize the types of information SSM language models (LMs) tend to forget. In this paper, we address this knowledge gap by identifying the types of tokens (e.g., parts of speech, named entities) and sequences (e.g., code, math problems) that are more frequently forgotten by SSM LMs. We achieve this by training an auto-encoder to reconstruct sequences from the SSM's hidden state, and we measure information loss by comparing inputs with their reconstructions. We perform experiments using the Mamba family of SSM LMs (130M to 1.4B parameters) on sequences ranging from 4 to 256 tokens. Our results show significantly higher rates of information loss on math-related tokens (e.g., numbers, variables), mentions of organization entities, and dialects other than Standard American English. We then examine how frequently these tokens appear in Mamba's pretraining data and find that less prevalent tokens tend to be the ones Mamba is most likely to forget. By identifying these patterns, our work provides clear direction for future research to develop methods that better control Mamba's ability to retain important information.

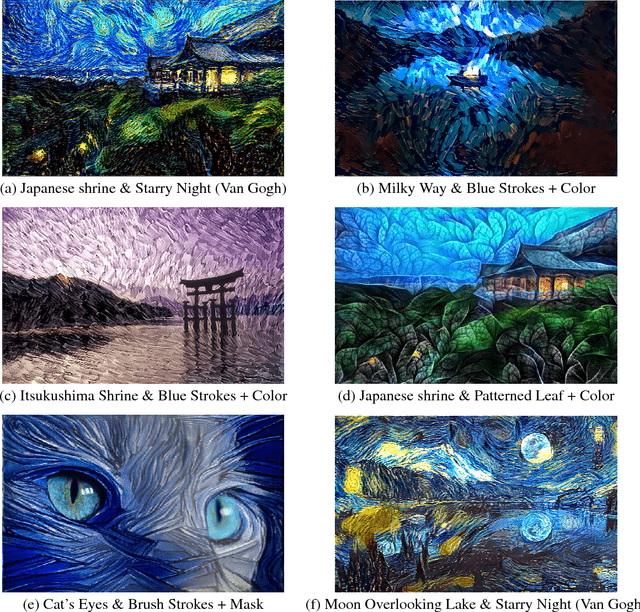

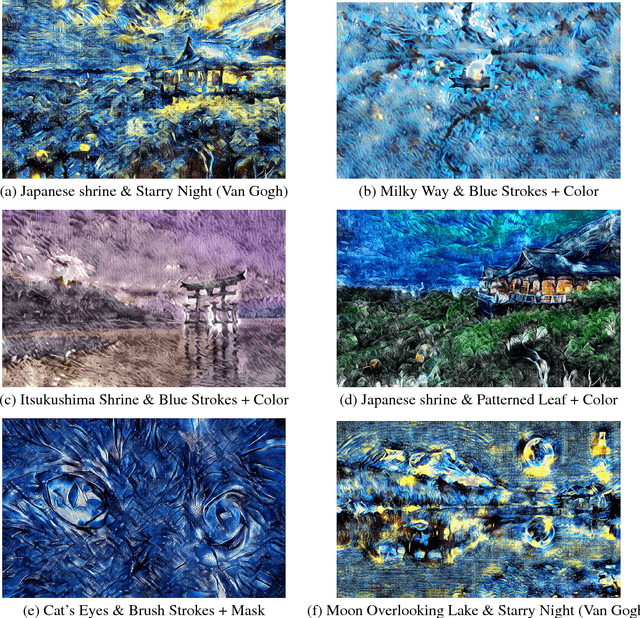

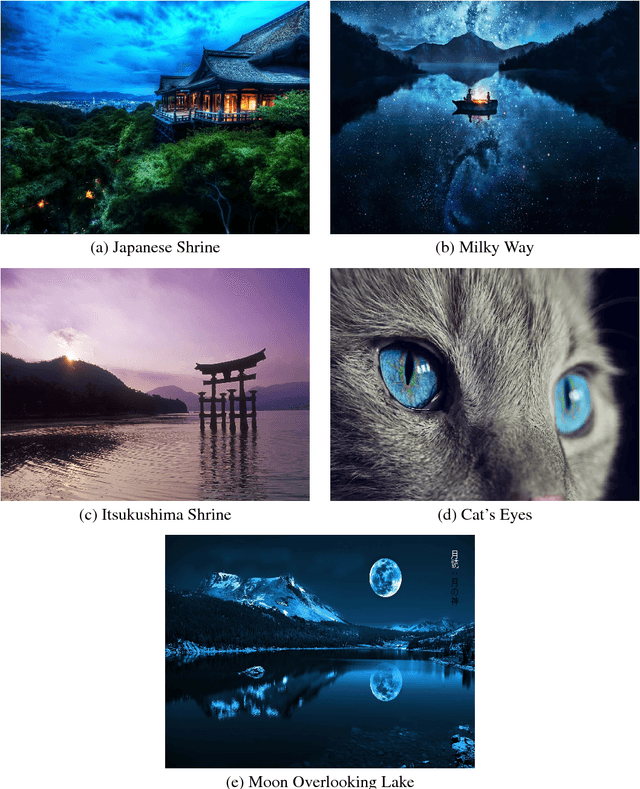

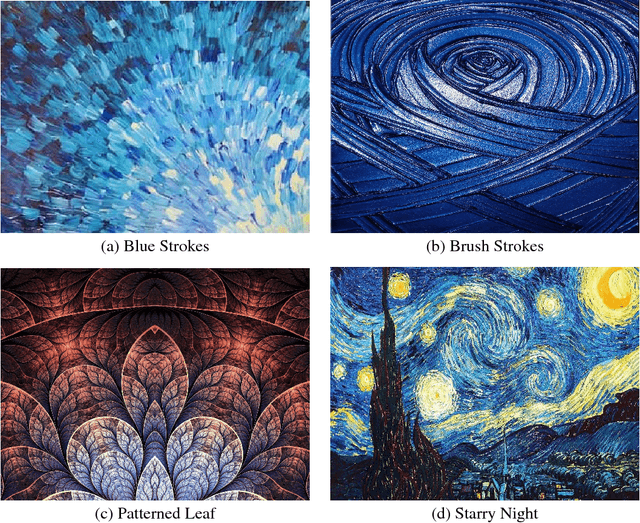

A Comprehensive Comparison between Neural Style Transfer and Universal Style Transfer

Jun 03, 2018

Abstract: Style transfer aims to transfer arbitrary visual styles to content images. We explore algorithms adapted from two papers that tackle style transfer without sacrificing generalization to unseen styles or visual quality. Most of the improvements focus on optimizing the algorithm for real-time style transfer while adapting to new styles with considerably fewer resources and constraints. We explore two approaches to style transfer: neural style transfer with improvements and universal style transfer. We compare these strategies and assess how well each produces visually appealing images. We also compare the images produced and discuss how they can be evaluated qualitatively.
