Gaëtan Caillaut

The LLM Pro Finance Suite: Multilingual Large Language Models for Financial Applications

Nov 07, 2025

Abstract: The financial industry's growing demand for advanced natural language processing (NLP) capabilities has highlighted the limitations of generalist large language models (LLMs) in handling domain-specific financial tasks. To address this gap, we introduce the LLM Pro Finance Suite, a collection of five instruction-tuned LLMs (ranging from 8B to 70B parameters) specifically designed for financial applications. Our approach focuses on enhancing generalist instruction-tuned models, leveraging their existing strengths in instruction following, reasoning, and toxicity control, while fine-tuning them on a curated, high-quality financial corpus comprising over 50% finance-related data in English, French, and German. We evaluate the LLM Pro Finance Suite on a comprehensive financial benchmark suite, demonstrating consistent improvement over state-of-the-art baselines in finance-oriented tasks and financial translation. Notably, our models maintain the strong general-domain capabilities of their base models, ensuring reliable performance across non-specialized tasks. This dual proficiency, enhanced financial expertise without compromise on general abilities, makes the LLM Pro Finance Suite an ideal drop-in replacement for existing LLMs in financial workflows, offering improved domain-specific performance while preserving overall versatility. We publicly release two 8B-parameter models to foster future research and development in financial NLP applications: https://huggingface.co/collections/DragonLLM/llm-open-finance.

Learning Pretopological Spaces to Model Complex Propagation Phenomena: A Multiple Instance Learning Approach Based on a Logical Modeling

May 03, 2018

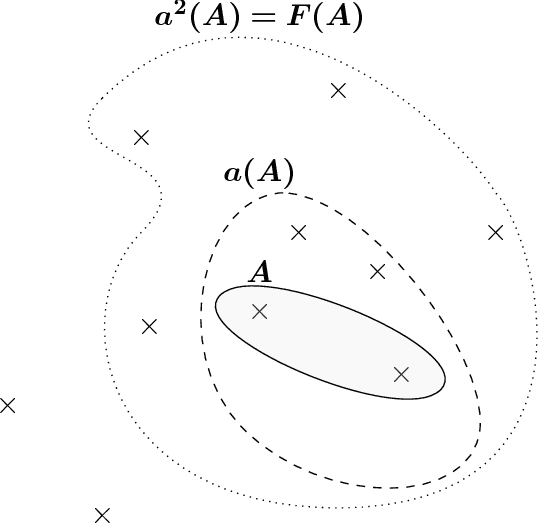

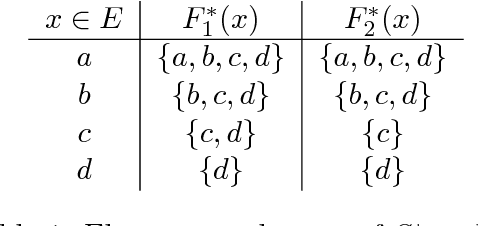

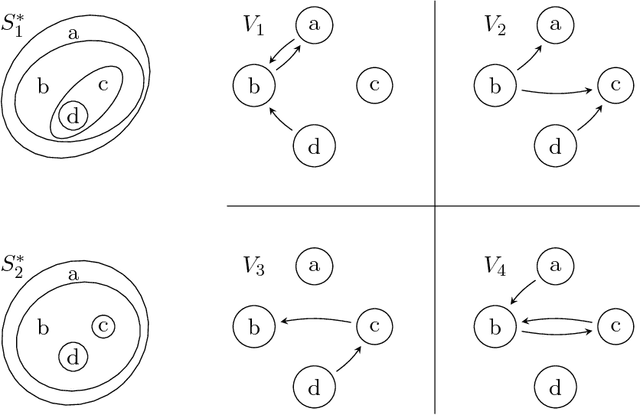

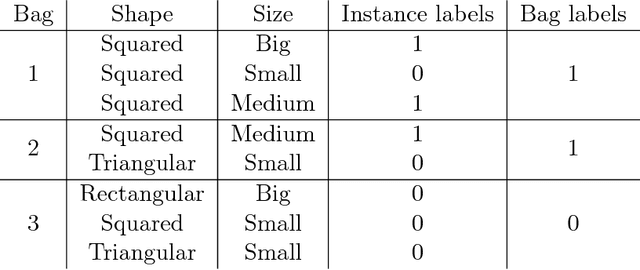

Abstract: This paper addresses the problem of learning the concept of "propagation" in the pretopology theoretical formalism. Our proposal is first to define the pseudo-closure operator (modeling the propagation concept) as a logical combination of neighborhoods. We show that learning such an operator falls within the Multiple Instance (MI) framework, where the learning process is performed on bags of instances instead of individual instances. Though this framework is well suited for this task, its use for learning a pretopological space leads to a set of bags exponential in size. To overcome this issue, we propose a learning method based on a low estimation of the bags covered by a concept under construction. As an experiment, percolation processes (typically forest fires) are simulated and the corresponding propagation models are learned from a subset of observations. The results show that the proposed MI approach is significantly more efficient on the task of propagation model recognition than existing methods.
