Fu Peng

Reliable Robustness Evaluation via Automatically Constructed Attack Ensembles

Nov 23, 2022

Abstract: An Attack Ensemble (AE), which combines multiple attacks, provides a reliable way to evaluate adversarial robustness. In practice, AEs are often constructed and tuned by human experts, which tends to be sub-optimal and time-consuming. In this work, we present AutoAE, a conceptually simple approach for automatically constructing AEs. In brief, AutoAE repeatedly adds to the ensemble the attack, together with its iteration steps, that maximizes ensemble improvement per additional iteration consumed. We show theoretically that AutoAE yields AEs provably within a constant factor of the optimal for a given defense. We then use AutoAE to construct two AEs for $l_{\infty}$ and $l_2$ attacks, and apply them without any tuning or adaptation to 45 top adversarial defenses on the RobustBench leaderboard. In all but one case we achieve equal or better (often better) robustness evaluation than existing AEs, and notably, in 29 cases we achieve better robustness evaluation than the best known one. This performance suggests that AutoAE is a reliable evaluation protocol for adversarial robustness and indicates the considerable potential of automatic AE construction. Code is available at https://github.com/LeegerPENG/AutoAE.
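The greedy rule described in the abstract (add the attack and step count with the best improvement per additional iteration) can be illustrated with a minimal sketch. This is not the AutoAE implementation from the linked repository. It assumes, purely for illustration, that each candidate is an (attack name, iteration steps) pair, that the set of test examples each candidate breaks has been precomputed, and that "ensemble improvement" means the number of newly broken examples. The names `greedy_ensemble`, `SUCCESS`, and the attack labels are hypothetical.

```python
from typing import Dict, List, Set, Tuple

Candidate = Tuple[str, int]  # (attack name, iteration steps), hypothetical encoding


def greedy_ensemble(success: Dict[Candidate, Set[int]], budget: int) -> List[Candidate]:
    """Greedily pick candidates by marginal gain per iteration step.

    `success[c]` is the (assumed precomputed) set of example indices that
    candidate `c` breaks on its own. `budget` caps the total iteration steps.
    """
    ensemble: List[Candidate] = []
    covered: Set[int] = set()  # examples already broken by the ensemble
    used = 0                   # iteration steps consumed so far
    while True:
        best, best_ratio = None, 0.0
        for cand, broken in success.items():
            steps = cand[1]
            if cand in ensemble or used + steps > budget:
                continue
            gain = len(broken - covered)  # newly broken examples
            ratio = gain / steps          # improvement per additional iteration
            if ratio > best_ratio:
                best, best_ratio = cand, ratio
        if best is None:  # nothing affordable improves the ensemble
            break
        ensemble.append(best)
        covered |= success[best]
        used += best[1]
    return ensemble


# Toy data: which of 8 test examples each candidate breaks (made up).
SUCCESS: Dict[Candidate, Set[int]] = {
    ("pgd", 10): {0, 1, 2, 3},
    ("pgd", 50): {0, 1, 2, 3, 4, 5},
    ("cw", 20): {4, 5, 6},
    ("fab", 40): {6, 7},
}

ensemble = greedy_ensemble(SUCCESS, budget=70)
print(ensemble)  # [('pgd', 10), ('cw', 20), ('fab', 40)]
```

In this toy run the cheap 10-step candidate is chosen first because it breaks the most examples per step, and the 50-step candidate is never added because the examples it breaks are covered more cheaply by others. The constant-factor guarantee stated in the abstract is a result of the paper and is not demonstrated by this sketch.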

Training Quantized Deep Neural Networks via Cooperative Coevolution

Dec 23, 2021

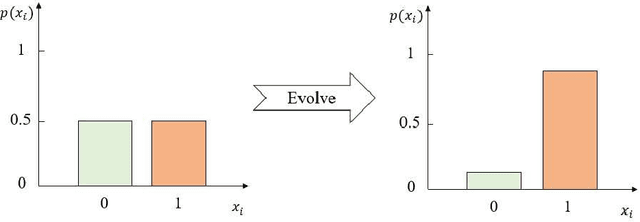

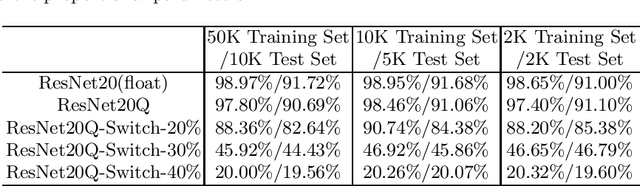

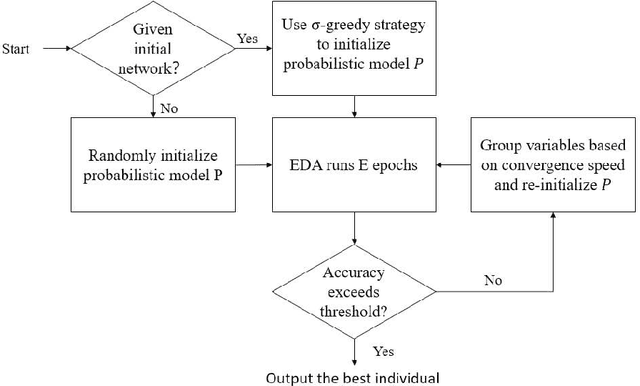

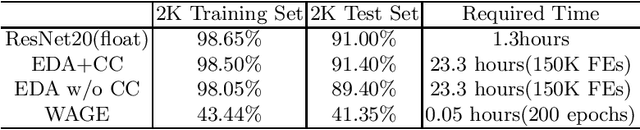

Abstract: Quantizing deep neural networks (DNNs) is a promising approach for deploying them on embedded devices. However, most existing methods do not quantize gradients, and the quantization process still involves many floating-point operations, which hinders further applications of quantized DNNs. To solve this problem, we propose a new heuristic method based on cooperative coevolution for quantizing DNNs. Under the framework of cooperative coevolution, we use an estimation of distribution algorithm to search for low-bit weights. Specifically, we first construct an initial quantized network from a pre-trained network instead of using random initialization, and then search from it within a restricted search space. To date, this is the largest discrete problem known to have been solved by evolutionary algorithms. Experiments show that our method can train a 4-bit ResNet-20 on the CIFAR-10 dataset without sacrificing accuracy.
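The ingredients named in the abstract (cooperative coevolution over groups of weights, an estimation of distribution algorithm per group, initialization from pre-trained weights, and a restricted search space) can be sketched on a toy problem. The following is an illustrative assumption, not the paper's method: it quantizes a six-weight linear model rather than a ResNet, uses a simple univariate EDA, and restricts each weight to the quantization levels adjacent to its initial one. `LEVELS`, `PRETRAINED`, the grouping scheme, and all function names are hypothetical.

```python
import random

LEVELS = [-1.0, -0.5, 0.0, 0.5, 1.0]  # hypothetical quantization grid


def nearest_level(w):
    """Index of the grid level closest to a full-precision weight."""
    return min(range(len(LEVELS)), key=lambda i: abs(LEVELS[i] - w))


def loss(idx, inputs, targets):
    """Squared error of a linear model whose weights are LEVELS[idx[j]]."""
    total = 0.0
    for x, t in zip(inputs, targets):
        y = sum(LEVELS[i] * xi for i, xi in zip(idx, x))
        total += (y - t) ** 2
    return total


def eda_group(context, group, init, inputs, targets, rng, pop=20, gens=10, top=5):
    """Optimize the variables in `group` with a univariate EDA, others held fixed."""
    # Restricted search space: stay within one level of the initial quantization.
    choices = {v: [i for i in (init[v] - 1, init[v], init[v] + 1)
                   if 0 <= i < len(LEVELS)] for v in group}
    probs = {v: [1.0 / len(choices[v])] * len(choices[v]) for v in group}
    best, best_loss = list(context), loss(context, inputs, targets)
    for _ in range(gens):
        population = []
        for _ in range(pop):
            cand = list(best)
            for v in group:  # sample each group variable from its distribution
                cand[v] = rng.choices(choices[v], weights=probs[v])[0]
            population.append((loss(cand, inputs, targets), cand))
        population.sort(key=lambda p: p[0])
        elite = population[:top]
        for v in group:  # re-estimate the distribution from the elite (smoothed)
            counts = [0.01 + sum(1 for _, c in elite if c[v] == ch)
                      for ch in choices[v]]
            total = sum(counts)
            probs[v] = [c / total for c in counts]
        if elite[0][0] < best_loss:  # keep the context only if it improves
            best_loss, best = elite[0]
    return best


def coevolve(pretrained, inputs, targets, group_size=2, cycles=3, seed=0):
    """Round pre-trained weights to the grid, then refine group by group."""
    rng = random.Random(seed)
    init = [nearest_level(w) for w in pretrained]
    context = list(init)
    groups = [list(range(i, min(i + group_size, len(init))))
              for i in range(0, len(init), group_size)]
    for _ in range(cycles):
        for g in groups:
            context = eda_group(context, g, init, inputs, targets, rng)
    return init, context


# Toy data: targets come from the full-precision (made-up) weights.
PRETRAINED = [0.8, -0.3, 0.2, -0.9, 0.45, 0.1]
_data_rng = random.Random(1)
INPUTS = [[_data_rng.uniform(-1, 1) for _ in PRETRAINED] for _ in range(30)]
TARGETS = [sum(w * xi for w, xi in zip(PRETRAINED, x)) for x in INPUTS]

init_idx, final_idx = coevolve(PRETRAINED, INPUTS, TARGETS)
print(loss(init_idx, INPUTS, TARGETS), loss(final_idx, INPUTS, TARGETS))
```

Because each group search keeps the current solution unless a sampled candidate is strictly better, the final loss in this sketch can never exceed the loss of the rounded pre-trained weights. How the paper groups weights, parameterizes its distribution, and evaluates fitness on a real network is not stated in the abstract and is not reproduced here.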
