Feyza Duman Keles

On the Fine-Grained Hardness of Inverting Generative Models

Sep 11, 2023

Abstract: The objective of generative model inversion is to identify a size-$n$ latent vector that produces a generative model output that closely matches a given target. This operation is a core computational primitive in numerous modern applications involving computer vision and NLP. However, the problem is known to be computationally challenging and NP-hard in the worst case. This paper aims to provide a fine-grained view of the landscape of computational hardness for this problem. We establish several new hardness lower bounds for both exact and approximate model inversion. In exact inversion, the goal is to determine whether a target is contained within the range of a given generative model. Under the strong exponential time hypothesis (SETH), we demonstrate that the computational complexity of exact inversion is lower bounded by $\Omega(2^n)$ via a reduction from $k$-SAT; this is a strengthening of known results. For the more practically relevant problem of approximate inversion, the goal is to determine whether a point in the model range is close to a given target with respect to the $\ell_p$-norm. When $p$ is a positive odd integer, under SETH, we provide an $\Omega(2^n)$ complexity lower bound via a reduction from the closest vector problem (CVP). Finally, when $p$ is even, under the exponential time hypothesis (ETH), we provide a lower bound of $2^{\Omega(n)}$ via a reduction from Half-Clique and Vertex-Cover.

On The Computational Complexity of Self-Attention

Sep 11, 2022

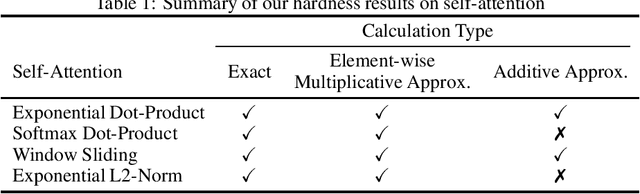

Abstract: Transformer architectures have led to remarkable progress in many state-of-the-art applications. However, despite their successes, modern transformers rely on the self-attention mechanism, whose time and space complexity is quadratic in the length of the input. Several approaches have been proposed to speed up self-attention mechanisms to achieve sub-quadratic running time; however, the large majority of these works are not accompanied by rigorous error guarantees. In this work, we establish lower bounds on the computational complexity of self-attention in a number of scenarios. We prove that the time complexity of self-attention is necessarily quadratic in the input length, unless the Strong Exponential Time Hypothesis (SETH) is false. This argument holds even if the attention computation is performed only approximately, and for a variety of attention mechanisms. As a complement to our lower bounds, we show that it is indeed possible to approximate dot-product self-attention using finite Taylor series in linear time, at the cost of having an exponential dependence on the polynomial order.
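To make the last sentence of the abstract concrete, here is a minimal sketch of the general idea, not the paper's actual construction. It assumes unscaled dot-product attention with $\exp(q \cdot k)$ weights and replaces the exponential with its order-$p$ Taylor polynomial, which can be written as an inner product of explicit feature vectors. The function names (`softmax_attention`, `taylor_features`, `taylor_attention`) are hypothetical and chosen only for illustration.

```python
import math
from itertools import product


def softmax_attention(Q, K, V):
    """Exact dot-product attention; visits every (query, key) pair."""
    out = []
    for q in Q:
        weights = [math.exp(sum(a * b for a, b in zip(q, k))) for k in K]
        total = sum(weights)
        out.append([sum(w * v[c] for w, v in zip(weights, V)) / total
                    for c in range(len(V[0]))])
    return out


def taylor_features(x, order):
    """Feature map phi with phi(x).phi(y) = sum_{j<=order} (x.y)^j / j!."""
    feats = []
    for j in range(order + 1):
        scale = 1.0 / math.sqrt(math.factorial(j))
        # All degree-j monomials of x, indexed by ordered j-tuples.
        for idx in product(range(len(x)), repeat=j):
            feats.append(scale * math.prod(x[i] for i in idx))
    return feats


def taylor_attention(Q, K, V, order=2):
    """Approximate attention in time linear in the number of tokens.

    An even order keeps the Taylor polynomial of exp strictly positive,
    so the normalizer below cannot vanish.
    """
    phi_K = [taylor_features(k, order) for k in K]
    m, dv = len(phi_K[0]), len(V[0])
    # One pass over the keys: S = phi(K)^T V and z = phi(K)^T 1.
    S = [[0.0] * dv for _ in range(m)]
    z = [0.0] * m
    for fk, v in zip(phi_K, V):
        for f in range(m):
            z[f] += fk[f]
            for c in range(dv):
                S[f][c] += fk[f] * v[c]
    # One pass over the queries, reusing S and z.
    out = []
    for q in Q:
        fq = taylor_features(q, order)
        denom = sum(a * b for a, b in zip(fq, z))
        out.append([sum(fq[f] * S[f][c] for f in range(m)) / denom
                    for c in range(dv)])
    return out
```

In this sketch the feature vector has $1 + d + d^2 + \dots + d^p$ entries for inputs of dimension $d$, so the cost per token grows exponentially with the polynomial order $p$, which mirrors the trade-off the abstract describes. The approximation is only close when the dot products $q \cdot k$ are small enough for the truncated series to be accurate.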
