Felix J. Herrmann

Power-scaled Bayesian Inference with Score-based Generative Models

Apr 15, 2025

Abstract:We propose a score-based generative algorithm for sampling from power-scaled priors and likelihoods within the Bayesian inference framework. Our algorithm enables flexible control over prior-likelihood influence without requiring retraining for different power-scaling configurations. Specifically, we focus on synthesizing seismic velocity models conditioned on seismic images. Our method enables sensitivity analysis by sampling from intermediate power posteriors, allowing us to assess the relative influence of the prior and likelihood on samples of the posterior distribution. Through a comprehensive set of experiments, we evaluate the effects of varying the power parameter in different settings: applying it solely to the prior, solely to the likelihood, and to both simultaneously. The results show that increasing the power of the likelihood up to a certain threshold improves the fidelity of posterior samples to the conditioning data (e.g., seismic images), while decreasing the prior power promotes greater structural diversity among samples. Moreover, we find that moderate scaling of the likelihood leads to a reduced shot-data residual, confirming its utility in posterior refinement.
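
To make the power-scaling concrete: if the prior and likelihood are raised to powers $\alpha$ and $\beta$, the target is $p_{\alpha,\beta}(x \mid y) \propto p(x)^{\alpha}\,p(y \mid x)^{\beta}$, and its score is $\alpha\,\nabla_x \log p(x) + \beta\,\nabla_x \log p(y \mid x)$, so changing the powers only reweights scores that are already available. The Python sketch below illustrates this under loudly stated assumptions: a 1D Gaussian prior and Gaussian likelihood replace the learned score network, a single noise level is used, and plain unadjusted Langevin dynamics stands in for the paper's score-based sampler. All function names are hypothetical.

```python
import numpy as np

# Assumed toy model: prior x ~ N(0, SIGMA_P^2), likelihood y | x ~ N(x, SIGMA_L^2).
SIGMA_P, SIGMA_L = 1.0, 0.5


def prior_score(x):
    # d/dx log p(x) for the Gaussian prior (stands in for a learned score network).
    return -x / SIGMA_P**2


def likelihood_score(x, y):
    # d/dx log p(y | x) for the Gaussian likelihood.
    return (y - x) / SIGMA_L**2


def power_posterior_score(x, y, alpha, beta):
    # log[p(x)^alpha p(y|x)^beta] = alpha log p(x) + beta log p(y|x) + const,
    # so the power-scaled score is a weighted sum of the two scores.
    return alpha * prior_score(x) + beta * likelihood_score(x, y)


def langevin_sample(y, alpha, beta, n_chains=5000, n_steps=2000, step=1e-3, seed=0):
    # Unadjusted Langevin dynamics driven by the power-scaled score.
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(n_chains)
    for _ in range(n_steps):
        noise = rng.standard_normal(n_chains)
        x = x + step * power_posterior_score(x, y, alpha, beta) + np.sqrt(2 * step) * noise
    return x


def analytic_power_posterior(y, alpha, beta):
    # In this Gaussian toy model the power posterior is Gaussian with these moments.
    precision = alpha / SIGMA_P**2 + beta / SIGMA_L**2
    return beta * y / SIGMA_L**2 / precision, np.sqrt(1.0 / precision)


samples = langevin_sample(y=1.0, alpha=1.0, beta=2.0)
print(samples.mean(), analytic_power_posterior(1.0, 1.0, 2.0)[0])
```

In this toy setting, raising beta pulls samples toward y = 1.0 and lowering alpha widens their spread, mirroring the qualitative trends described above; the sketch says nothing about how those trends play out quantitatively with learned diffusion priors.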

Well2Flow: Reconstruction of reservoir states from sparse wells using score-based generative models

Apr 07, 2025

Abstract:This study investigates the use of score-based generative models for reservoir simulation, with a focus on reconstructing spatially varying permeability and saturation fields in saline aquifers, inferred from sparse observations at two well locations. By modeling the joint distribution of permeability and saturation derived from high-fidelity reservoir simulations, the proposed neural network is trained to learn the complex spatiotemporal dynamics governing multiphase fluid flow in porous media. During inference, the framework effectively reconstructs both permeability and saturation fields by conditioning on sparse vertical profiles extracted from well log data. This approach introduces a novel methodology for incorporating physical constraints and well log guidance into generative models, significantly enhancing the accuracy and physical plausibility of the reconstructed subsurface states. Furthermore, the framework demonstrates strong generalization capabilities across varying geological scenarios, highlighting its potential for practical deployment in data-scarce reservoir management tasks.
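
One common way to condition a score-based model on sparse well data is to add the gradient of a likelihood defined only at the well locations to the learned prior score during sampling. The sketch below assumes the permeability and saturation fields are stacked as channels of an array of shape (channels, depth, width), that each well samples a full vertical column, and that well measurements carry Gaussian noise with standard deviation sigma. The function names, the guidance weight, and the noise model are illustrative assumptions, not necessarily how Well2Flow conditions its network.

```python
import numpy as np


def extract_well_profiles(field, well_columns):
    # Sample full vertical profiles at the well columns; works for arrays of shape
    # (depth, width) or (channels, depth, width), e.g. stacked permeability/saturation.
    return field[..., well_columns]


def masked_likelihood_score(x, y_wells, well_columns, sigma=0.1):
    # Gradient w.r.t. x of log N(y_wells | M x, sigma^2 I), where M extracts the
    # well columns; it is nonzero only at the wells and zero everywhere else.
    score = np.zeros_like(x)
    residual = y_wells - extract_well_profiles(x, well_columns)
    score[..., well_columns] = residual / sigma**2
    return score


def guided_score(x, y_wells, well_columns, prior_score_fn, sigma=0.1, weight=1.0):
    # Hypothetical guidance rule: learned prior score plus weighted well-data score.
    return prior_score_fn(x) + weight * masked_likelihood_score(x, y_wells, well_columns, sigma)
```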

Advancing Geological Carbon Storage Monitoring With 3D Digital Shadow Technology

Feb 11, 2025

Abstract:Geological Carbon Storage (GCS) is a key technology for achieving global climate goals by capturing and storing CO2 in deep geological formations. Its effectiveness and safety rely on accurate monitoring of subsurface CO2 migration using advanced time-lapse seismic imaging. A Digital Shadow framework integrates field data, including seismic and borehole measurements, to track CO2 saturation over time. Machine learning-assisted data assimilation techniques, such as generative AI and nonlinear ensemble Bayesian filtering, update a digital model of the CO2 plume while incorporating uncertainties in reservoir properties. Compared to 2D approaches, 3D monitoring enhances the spatial accuracy of GCS assessments, capturing the full extent of CO2 migration. This study extends the uncertainty-aware 2D Digital Shadow framework by incorporating 3D seismic imaging and reservoir modeling, improving decision-making and risk mitigation in CO2 storage projects.

Enhancing Robustness Of Digital Shadow For CO2 Storage Monitoring With Augmented Rock Physics Modeling

Feb 11, 2025

Abstract:To meet climate targets, the IPCC underscores the necessity of technologies capable of removing gigatonnes of CO2 annually, with Geological Carbon Storage (GCS) playing a central role. GCS involves capturing CO2 and injecting it into deep geological formations for long-term storage, requiring precise monitoring to ensure containment and prevent leakage. Time-lapse seismic imaging is essential for tracking CO2 migration but often struggles to capture the complexities of multi-phase subsurface flow. Digital Shadows (DS), leveraging machine learning-driven data assimilation techniques such as nonlinear Bayesian filtering and generative AI, provide a more detailed, uncertainty-aware monitoring approach. By incorporating uncertainties in reservoir properties, DS frameworks improve CO2 migration forecasts, reducing risks in GCS operations. However, data assimilation depends on assumptions regarding reservoir properties, rock physics models, and initial conditions, which, if inaccurate, can compromise prediction reliability. This study demonstrates that augmenting forecast ensembles with diverse rock physics models mitigates the impact of incorrect assumptions and improves predictive accuracy, particularly in differentiating between uniform and patchy saturation models.
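
Uniform and patchy saturation differ mainly in how the brine and CO2 bulk moduli are mixed before fluid substitution. The sketch below uses Wood's (Reuss) average for uniform saturation and, as a simplification, a Voigt average for patchy saturation, each followed by Gassmann's equation; it then augments an ensemble by drawing one of the two models per member. The moduli, porosity, and 50/50 draw are illustrative assumptions and the names are hypothetical; the study's own rock physics models may differ.

```python
import numpy as np

K_BRINE, K_CO2 = 2.5, 0.08            # GPa, assumed fluid bulk moduli
K_MIN, K_DRY, PHI = 37.0, 10.0, 0.25  # GPa, GPa, porosity fraction (assumed rock)


def fluid_modulus_uniform(s_co2):
    # Wood's (Reuss) average: fluids mixed at a fine scale (uniform saturation).
    return 1.0 / (s_co2 / K_CO2 + (1.0 - s_co2) / K_BRINE)


def fluid_modulus_patchy(s_co2):
    # Voigt average: a simple upper-bound stand-in for patchy saturation.
    return s_co2 * K_CO2 + (1.0 - s_co2) * K_BRINE


def gassmann(k_fluid):
    # Gassmann's equation for the bulk modulus of the fluid-saturated rock.
    b = 1.0 - K_DRY / K_MIN
    return K_DRY + b**2 / (PHI / k_fluid + (1.0 - PHI) / K_MIN - K_DRY / K_MIN**2)


def augment_ensemble(saturations, seed=0):
    # Map each member's CO2 saturation to a saturated bulk modulus, drawing the
    # rock physics model (uniform or patchy) at random for each member.
    saturations = np.asarray(saturations, dtype=float)
    rng = np.random.default_rng(seed)
    use_patchy = rng.random(saturations.shape[0]) < 0.5
    k_fl = np.where(use_patchy,
                    fluid_modulus_patchy(saturations),
                    fluid_modulus_uniform(saturations))
    return gassmann(k_fl), use_patchy
```

At intermediate saturations the two mixing rules give very different fluid moduli, so an ensemble that contains both keeps either hypothesis available to the data-assimilation step.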

Probabilistic Joint Recovery Method for CO$_2$ Plume Monitoring

Jan 30, 2025

Abstract:Reducing CO$_2$ emissions is crucial to mitigating climate change. Carbon Capture and Storage (CCS) is one of the few technologies capable of achieving net-negative CO$_2$ emissions. However, predicting fluid flow patterns in CCS remains challenging due to uncertainties in CO$_2$ plume dynamics and reservoir properties. Building on existing seismic imaging methods like the Joint Recovery Method (JRM), which lacks uncertainty quantification, we propose the Probabilistic Joint Recovery Method (pJRM). By estimating posterior distributions across surveys using a shared generative model, pJRM provides uncertainty information to improve risk assessment in CCS projects.

Machine learning-enabled velocity model building with uncertainty quantification

Nov 14, 2024

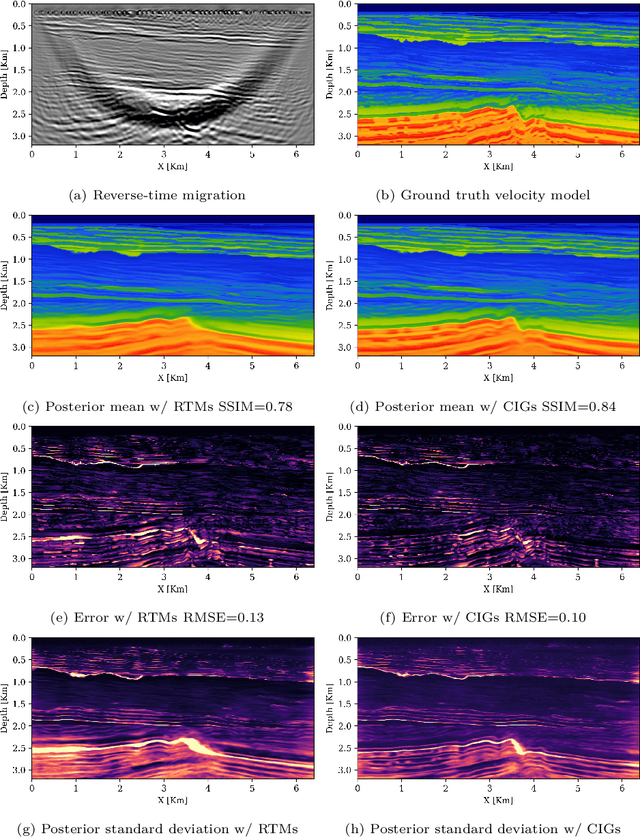

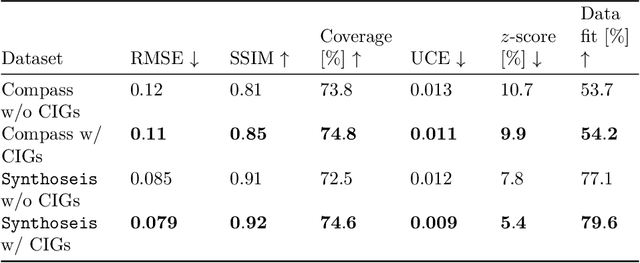

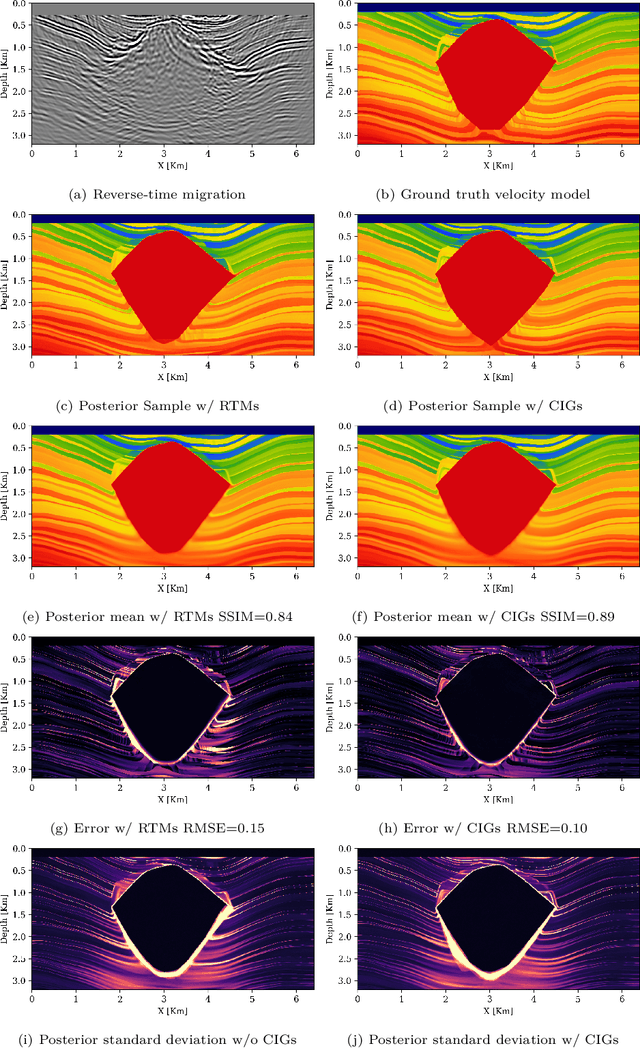

Abstract:Accurately characterizing migration velocity models is crucial for a wide range of geophysical applications, from hydrocarbon exploration to monitoring of CO2 sequestration projects. Traditional velocity model building methods such as Full-Waveform Inversion (FWI) are powerful but often struggle with the inherent complexities of the inverse problem, including noise, limited bandwidth, limited receiver aperture, and computational constraints. To address these challenges, we propose a scalable methodology that integrates generative modeling, in the form of diffusion networks, with physics-informed summary statistics, making it suitable for complicated imaging problems, including field datasets. By defining these summary statistics in terms of subsurface-offset image volumes for poor initial velocity models, our approach allows for computationally efficient generation of Bayesian posterior samples for migration velocity models that offer a useful assessment of uncertainty. To validate our approach, we introduce a battery of tests that measure the quality of the inferred velocity models, as well as the quality of the inferred uncertainties. With modern synthetic datasets, we reconfirm gains from using subsurface-image gathers as the conditioning observable. For complex velocity model building involving salt, we propose a new iterative workflow that refines amortized posterior approximations with salt flooding, and we demonstrate how the uncertainty in the velocity model can be propagated to the final product, reverse-time migrated images. Finally, we present a proof of concept on field datasets to show that our method can scale to industry-sized problems.

An uncertainty-aware Digital Shadow for underground multimodal CO2 storage monitoring

Oct 02, 2024

Abstract:Geological Carbon Storage (GCS) is arguably the only scalable net-negative CO2 emission technology available. While promising, subsurface complexities and heterogeneity of reservoir properties demand a systematic approach to quantify uncertainty when optimizing production and mitigating storage risks, which include assurances of Containment and Conformance of injected supercritical CO2. As a first step towards the design and implementation of a Digital Twin for monitoring underground storage operations, a machine learning-based data-assimilation framework is introduced and validated on carefully designed, realistic numerical simulations. As our implementation is based on Bayesian inference but does not yet support control and decision-making, we coin our approach an uncertainty-aware Digital Shadow. To characterize the posterior distribution for the state of CO2 plumes conditioned on multi-modal time-lapse data, the envisioned Shadow combines techniques from Simulation-Based Inference (SBI) and Ensemble Bayesian Filtering to establish probabilistic baselines and assimilate multi-modal data for GCS problems that are challenged by large degrees of freedom, nonlinear multi-physics, non-Gaussianity, and computationally expensive-to-evaluate fluid-flow and seismic simulations. To enable SBI for dynamic systems, a recursive scheme is proposed where the Digital Shadow's neural networks are trained on simulated ensembles for their state and observed data (well and/or seismic). Once training is completed, the system's state is inferred when time-lapse field data becomes available. In this computational study, we observe that a lack of knowledge on the permeability field can be factored into the Digital Shadow's uncertainty quantification. To our knowledge, this work represents the first proof of concept of an uncertainty-aware, in-principle scalable Digital Shadow.
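
The recursive scheme alternates between forecasting an ensemble, simulating data for each member, training a conditional model on the simulated (state, data) pairs, and evaluating it on the observed field data. The toy sketch below shows only that loop structure: a mild nonlinear map stands in for the fluid-flow simulator, noisy direct observation stands in for well and seismic modeling, and a linear regression of state on data replaces the conditional neural network, so the analysis step resembles an ensemble Kalman-type update rather than the paper's generative approach. All names are hypothetical.

```python
import numpy as np


def dynamics(x):
    # Hypothetical stand-in for the fluid-flow simulator: a mild nonlinear map.
    return x + 0.1 * np.tanh(x)


def observe(x, rng, noise=0.1):
    # Hypothetical stand-in for the well/seismic simulator: noisy direct observation.
    return x + noise * rng.standard_normal(x.shape)


def fit_conditional_mean(states, obs):
    # Stand-in for training a conditional network: least-squares fit of
    # E[x | y] ~ W y + b on the simulated (state, observation) pairs.
    design = np.hstack([obs, np.ones((obs.shape[0], 1))])
    coef, *_ = np.linalg.lstsq(design, states, rcond=None)
    return lambda y: np.hstack([y, np.ones((y.shape[0], 1))]) @ coef


def recursive_shadow(field_obs, n_ens=500, dim=3, seed=0):
    rng = np.random.default_rng(seed)
    ensemble = rng.standard_normal((n_ens, dim))      # probabilistic baseline
    estimates = []
    for y_field in field_obs:
        ensemble = dynamics(ensemble)                 # forecast step
        simulated = observe(ensemble, rng)            # simulate data per member
        cond_mean = fit_conditional_mean(ensemble, simulated)  # "training"
        estimates.append(cond_mean(y_field[None, :])[0])       # "inference"
        # Analysis: shift each member by the change in conditional mean, keeping
        # the regression residuals as the posterior spread.
        ensemble = cond_mean(y_field[None, :]) + (ensemble - cond_mean(simulated))
    return np.array(estimates)
```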

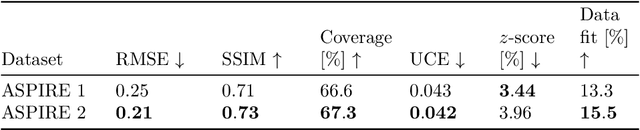

ASPIRE: Iterative Amortized Posterior Inference for Bayesian Inverse Problems

May 08, 2024

Abstract:Due to their uncertainty quantification, Bayesian solutions to inverse problems are the framework of choice in risk-averse applications. These benefits come at the cost of computations that are, in general, intractable. New advances in machine learning and variational inference (VI) have lowered the computational barrier by learning from examples. Two VI paradigms have emerged that represent different tradeoffs: amortized and non-amortized. Amortized VI can produce fast results, but because it generalizes to many observed datasets, it produces suboptimal inference results. Non-amortized VI is slower at inference but finds better posterior approximations since it is specialized towards a single observed dataset. Current amortized VI techniques run into a sub-optimality wall that cannot be overcome without more expressive neural networks or extra training data. We present a solution that enables iterative improvement of amortized posteriors using the same network architectures and training data. Our method requires extra computations, but these remain frugal since they are based on physics-hybrid methods and summary statistics. Importantly, these computations remain mostly offline, so our method maintains cheap and reusable online evaluation while bridging the approximation gap between these two paradigms. We denote our proposed method ASPIRE (Amortized posteriors with Summaries that are Physics-based and Iteratively REfined). We first validate our method on a stylized problem with a known posterior, then demonstrate its practical use on a high-dimensional and nonlinear transcranial medical imaging problem with ultrasound. Compared with the baseline and previous methods from the literature, our method stands out as a computationally efficient and high-fidelity method for posterior inference.
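
Physics-based summary statistics can be formed from gradients of the data likelihood. Assuming, for illustration, a linear forward operator A and Gaussian noise, the sketch below computes the gradient of the log-likelihood at a fiducial model x_fid. Evaluating it at x_fid = 0 gives an adjoint (migration-like) image, and re-evaluating it at an improved estimate is one plausible reading of iterative refinement. The operator, noise model, and names are assumptions, not the paper's implementation.

```python
import numpy as np


def loglik(x, y, A, sigma):
    # Gaussian log-likelihood (up to a constant) for the linear model y = A x + noise.
    r = y - A @ x
    return -0.5 * (r @ r) / sigma**2


def gradient_summary(y, A, x_fid, sigma=0.1):
    # Physics-based summary: gradient of the log-likelihood w.r.t. x at x_fid.
    # With x_fid = 0 this reduces to the adjoint image A^T y / sigma^2.
    return A.T @ (y - A @ x_fid) / sigma**2
```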

BEACON: Bayesian Experimental design Acceleration with Conditional Normalizing flows $-$ a case study in optimal monitor well placement for CO$_2$ sequestration

Mar 28, 2024

Abstract:CO$_2$ sequestration is a crucial engineering solution for mitigating climate change. However, the uncertain nature of reservoir properties necessitates rigorous monitoring of CO$_2$ plumes to prevent risks such as leakage, induced seismicity, or breaching licensed boundaries. To address this, project managers use borehole wells for direct CO$_2$ and pressure monitoring at specific locations. Given the high costs associated with drilling, it is crucial to strategically place a limited number of wells to ensure maximally effective monitoring within budgetary constraints. Our approach for selecting well locations integrates fluid-flow solvers for forecasting plume trajectories with generative neural networks for quantifying uncertainty in plume inference. Our methodology is extensible to three-dimensional domains and is developed within a Bayesian framework for optimal experimental design, ensuring scalability and mathematical optimality. We use a realistic case study to verify these claims, demonstrating our method's application in a large-scale domain and its optimal performance compared with baseline well placement.
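
As a much simpler stand-in for the approach above, the sketch below places wells greedily to maximize the expected information gain (EIG) of a linear-Gaussian model, where each well observes the state at one grid cell and the EIG has the closed form $\tfrac12 \log\det(I + H\Gamma H^\top/\sigma^2)$. This replaces the fluid-flow forecasts and generative networks with an analytic prior covariance $\Gamma$; the covariance, noise level, and function names are hypothetical.

```python
import numpy as np


def toy_prior_covariance(n=30, length_scale=3.0):
    # Hypothetical 1D prior: uncertainty grows with distance from an injector at
    # cell 0, and nearby cells are correlated through a squared-exponential kernel.
    idx = np.arange(n)
    std = np.sqrt(0.1 + idx / (n - 1))
    corr = np.exp(-((idx[:, None] - idx[None, :]) ** 2) / (2 * length_scale**2))
    return std[:, None] * corr * std[None, :]


def eig_linear_gaussian(prior_cov, wells, noise_var):
    # EIG = 0.5 logdet(I + H Gamma H^T / noise_var); with point observations,
    # H Gamma H^T is the covariance sub-block at the selected cells.
    if not wells:
        return 0.0
    sub = prior_cov[np.ix_(wells, wells)]
    _, logdet = np.linalg.slogdet(np.eye(len(wells)) + sub / noise_var)
    return 0.5 * logdet


def greedy_well_placement(prior_cov, n_wells, noise_var=0.01):
    # Add one well at a time, each time picking the cell that maximizes the EIG.
    chosen, history = [], []
    for _ in range(n_wells):
        candidates = [i for i in range(prior_cov.shape[0]) if i not in chosen]
        best = max(candidates,
                   key=lambda i: eig_linear_gaussian(prior_cov, chosen + [i], noise_var))
        chosen.append(best)
        history.append(eig_linear_gaussian(prior_cov, chosen, noise_var))
    return chosen, history


wells, eig_history = greedy_well_placement(toy_prior_covariance(), n_wells=4)
```

Greedy selection is not guaranteed to be optimal for this objective, but it shows how a fixed well budget trades off prior uncertainty against redundancy between nearby wells.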

Probabilistic Bayesian optimal experimental design using conditional normalizing flows

Feb 28, 2024

Abstract:Bayesian optimal experimental design (OED) seeks to conduct the most informative experiment under budget constraints to update the prior knowledge of a system to its posterior from the experimental data in a Bayesian framework. Such problems are computationally challenging because (1) some optimality criterion, which typically involves a double integration with respect to both the system parameters and the experimental data, must be evaluated repeatedly at high cost; (2) they suffer from the curse of dimensionality when the system parameters and design variables are high-dimensional; and (3) the optimization is combinatorial and highly non-convex if the design variables are binary, often leading to non-robust designs. To make the solution of the Bayesian OED problem efficient, scalable, and robust for practical applications, we propose a novel joint optimization approach. This approach performs simultaneous (1) training of a scalable conditional normalizing flow (CNF) to efficiently maximize the expected information gain (EIG) of a jointly learned experimental design and (2) optimization of a probabilistic formulation of the binary experimental design with a Bernoulli distribution. We demonstrate the performance of our proposed method for a practical MRI data acquisition problem, one of the most challenging Bayesian OED problems, which has high-dimensional (320 $\times$ 320) parameters at high image resolution, high-dimensional (640 $\times$ 386) observations, and binary mask designs to select the most informative observations.
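
A standard way to let a conditional density estimator maximize EIG is the variational lower bound $\mathrm{EIG} \ge \mathbb{E}_{p(x,y)}[\log q(x \mid y) - \log p(x)]$, which is tight when $q$ equals the true posterior. The sketch below, which assumes this bound is the relevant objective, checks it on a scalar linear-Gaussian problem with a Gaussian $q$ standing in for the CNF; inflating or shrinking the variance of $q$ lowers the estimate, as the bound predicts. The Bernoulli design relaxation is not modeled, and all names are hypothetical.

```python
import numpy as np


def gaussian_logpdf(x, mean, var):
    # Log-density of N(mean, var) evaluated elementwise.
    return -0.5 * (np.log(2 * np.pi * var) + (x - mean) ** 2 / var)


def eig_lower_bound(q_var_scale=1.0, prior_var=1.0, noise_var=0.25, n=200_000, seed=0):
    # Monte Carlo estimate of E[log q(x|y) - log p(x)] for y = x + noise, where q is
    # the exact Gaussian posterior with its variance multiplied by q_var_scale.
    rng = np.random.default_rng(seed)
    x = rng.normal(0.0, np.sqrt(prior_var), n)
    y = x + rng.normal(0.0, np.sqrt(noise_var), n)
    post_var = 1.0 / (1.0 / prior_var + 1.0 / noise_var)
    post_mean = post_var * y / noise_var
    log_q = gaussian_logpdf(x, post_mean, q_var_scale * post_var)
    log_p = gaussian_logpdf(x, 0.0, prior_var)
    return np.mean(log_q - log_p)


# Closed-form EIG for this model: 0.5 * log(1 + prior_var / noise_var).
exact_eig = 0.5 * np.log(1.0 + 1.0 / 0.25)
print(eig_lower_bound(), exact_eig)
```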
