Felipe Cadar Chamone

A Weighted Sparse Sampling and Smoothing Frame Transition Approach for Semantic Fast-Forward First-Person Videos

Mar 13, 2018

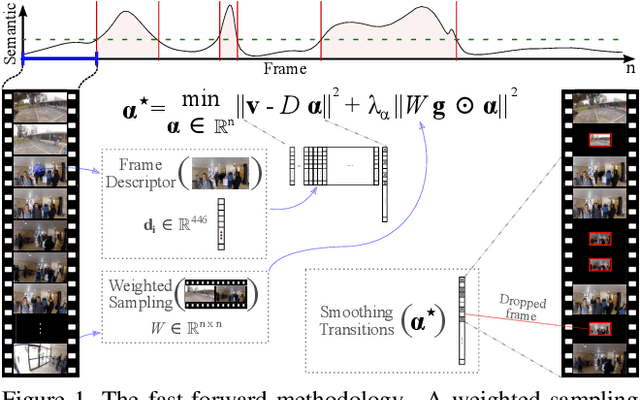

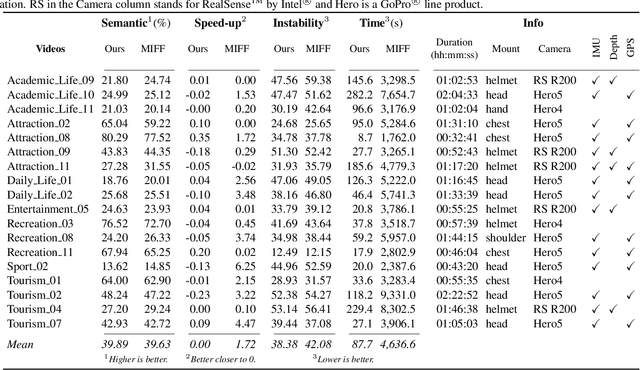

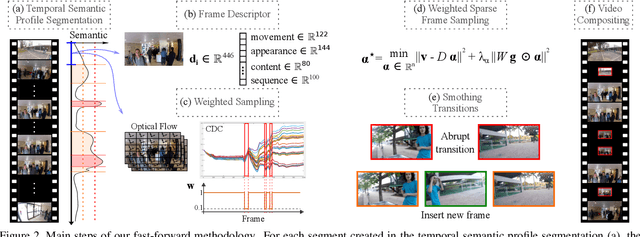

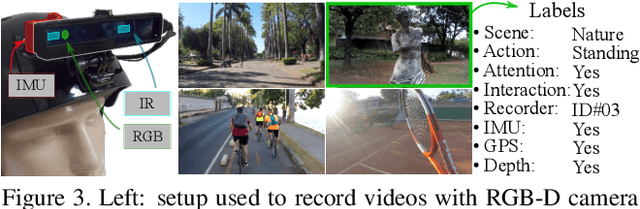

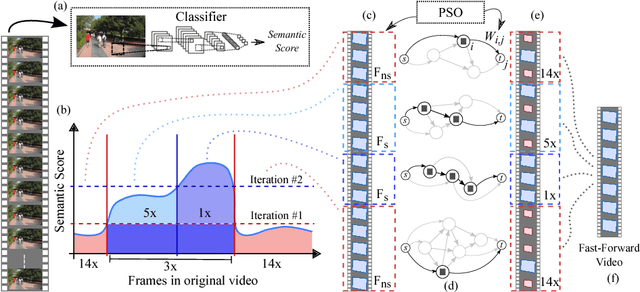

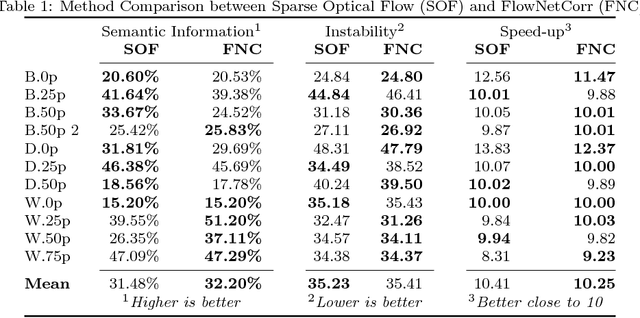

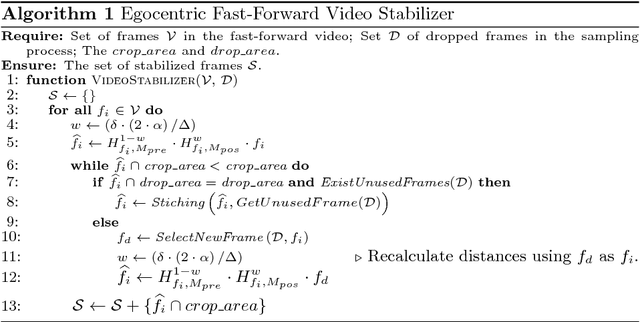

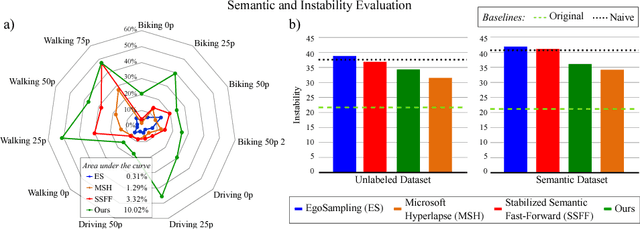

Abstract: Thanks to advances in low-cost digital camera technology and the popularity of the self-recording culture, the amount of visual data on the Internet keeps growing, moving in the opposite direction to the time and patience users have available. Thus, most uploaded videos are doomed to be forgotten and unwatched in a computer folder or on a website. In this work, we address the problem of creating smooth fast-forward videos without losing the relevant content. We present a new adaptive frame selection method, formulated as a weighted minimum reconstruction problem, which, combined with a smoothing frame transition method, accelerates first-person videos while emphasizing the relevant segments and avoiding visual discontinuities. The experiments show that our method fast-forwards videos while retaining as much relevant information and smoothness as state-of-the-art techniques, in less time. We also present a new 80-hour multimodal (RGB-D, IMU, and GPS) dataset of first-person videos with annotations for recorder profile, frame scene, activities, interaction, and attention.

Making a long story short: A Multi-Importance fast-forwarding egocentric videos with the emphasis on relevant objects

Mar 07, 2018

Abstract: The emergence of low-cost, high-quality personal wearable cameras, combined with the increasing storage capacity of video-sharing websites, has evoked a growing interest in first-person videos. Most of these videos, however, are long-running unedited streams that are usually tedious and unpleasant to watch. State-of-the-art semantic fast-forward methods currently face the challenge of providing an adequate balance between smoothness in visual flow and emphasis on the relevant parts. In this work, we present the Multi-Importance Fast-Forward (MIFF), a fully automatic methodology for fast-forwarding egocentric videos that addresses these challenges. The dilemma of defining what constitutes the semantic information of a video is addressed by a learning process based on the preferences of the user. Results show that the proposed method keeps over 3 times more semantic content than state-of-the-art fast-forward methods. Finally, we discuss the need for a particular video stabilization technique for fast-forward egocentric videos.
