Fabian Stephany

AI Skills Improve Job Prospects: Causal Evidence from a Hiring Experiment

Jan 19, 2026

Abstract: The growing adoption of artificial intelligence (AI) technologies has heightened interest in the labour market value of AI-related skills, yet causal evidence on their role in hiring decisions remains scarce. This study examines whether AI skills serve as a positive hiring signal and whether they can offset conventional disadvantages such as older age or lower formal education. We conduct an experimental survey with 1,700 recruiters from the United Kingdom and the United States. Using a paired conjoint design, recruiters evaluated hypothetical candidates represented by synthetically designed resumes. Across three occupations - graphic designer, office assistant, and software engineer - AI skills significantly increase interview invitation probabilities by approximately 8 to 15 percentage points. AI skills also partially or fully offset disadvantages related to age and lower education, with effects strongest for office assistants, where formal AI certification plays an additional compensatory role. Effects are weaker for graphic designers, consistent with more skeptical recruiter attitudes toward AI in creative work. Finally, recruiters' own background and AI usage significantly moderate these effects. Overall, the findings demonstrate that AI skills function as a powerful hiring signal and can mitigate traditional labour market disadvantages, with implications for workers' skill acquisition strategies and firms' recruitment practices.

Women Worry, Men Adopt: How Gendered Perceptions Shape the Use of Generative AI

Jan 07, 2026

Abstract: Generative artificial intelligence (GenAI) is diffusing rapidly, yet its adoption is strikingly unequal. Using nationally representative UK survey data from 2023 to 2024, we show that women adopt GenAI substantially less often than men because they perceive its societal risks differently. We construct a composite index capturing concerns about mental health, privacy, climate impact, and labor market disruption. This index explains between 9 and 18 percent of the variation in GenAI adoption and ranks among the strongest predictors for women across all age groups, surpassing digital literacy and education for young women. Intersectional analyses show that the largest disparities arise among younger, digitally fluent individuals with high societal risk concerns, where gender gaps in personal use exceed 45 percentage points. Using a synthetic twin panel design, we show that increased optimism about AI's societal impact raises GenAI use among young women from 13 percent to 33 percent, substantially narrowing the gender divide. These findings indicate that gendered perceptions of AI's social and ethical consequences, rather than access or capability, are the primary drivers of unequal GenAI adoption, with implications for productivity, skill formation, and economic inequality in an AI enabled economy.

Complement or substitute? How AI increases the demand for human skills

Dec 27, 2024

Abstract: The question of whether AI substitutes or complements human work is central to debates on the future of work. This paper examines the impact of AI on skill demand and compensation in the U.S. economy, analysing 12 million online job vacancies from 2018 to 2023. It investigates internal effects (within-job substitution and complementation) and external effects (across occupations, industries, and regions). Our findings reveal a significant increase in demand for AI-complementary skills, such as digital literacy, teamwork, and resilience, alongside rising wage premiums for these skills in AI roles like Data Scientist. Conversely, substitute skills, including customer service and text review, have declined in both demand and value within AI-related positions. Examining external effects, we find a notable rise in demand for complementary skills in non-AI roles linked to the growth of AI-related jobs in specific industries or regions. At the same time, there is a moderate decline in non-AI roles requiring substitute skills. Overall, AI's complementary effect is up to 50% larger than its substitution effect, resulting in net positive demand for skills. These results, replicated for the UK and Australia, highlight AI's transformative impact on workforce skill requirements. They suggest reskilling efforts should prioritise not only technical AI skills but also complementary skills like ethics and digital literacy.

The Economics of Human Oversight: How Norms and Incentives Affect Costs and Performance of AI Workers

Dec 22, 2023

Abstract: The global surge in AI applications is transforming industries, leading to displacement and complementation of existing jobs, while also giving rise to new employment opportunities. Human oversight of AI is an emerging task in which human workers interact with an AI model to improve its performance, safety, and compliance with normative principles. Data annotation, encompassing the labelling of images or annotating of texts, serves as a critical human oversight process, as the quality of a dataset directly influences the quality of AI models trained on it. Therefore, the efficiency of human oversight work stands as an important competitive advantage for AI developers. This paper delves into the foundational economics of human oversight, with a specific focus on the impact of norm design and monetary incentives on data quality and costs. An experimental study involving 307 data annotators examines six groups with varying task instructions (norms) and monetary incentives. Results reveal that annotators provided with clear rules exhibit higher accuracy rates, outperforming those with vague standards by 14%. Similarly, annotators receiving an additional monetary incentive perform significantly better, with the highest accuracy rate recorded in the group working with both clear rules and incentives (87.5% accuracy). However, both groups require more time to complete tasks, with a 31% increase in average task completion time compared to those working with standards and no incentives. These empirical findings underscore the trade-off between data quality and efficiency in data curation, shedding light on the nuanced impact of norm design and incentives on the economics of AI development. The paper contributes experimental insights to discussions on the economical, ethical, and legal considerations of AI technologies.

Skills or Degree? The Rise of Skill-Based Hiring for AI and Green Jobs

Dec 19, 2023

Abstract: For emerging professions, such as jobs in the field of Artificial Intelligence (AI) or sustainability (green), labour supply does not meet industry demand. In this scenario of labour shortages, our work aims to understand whether employers have started focusing on individual skills rather than on formal qualifications in their recruiting. By analysing a large time series dataset of around one million online job vacancies between 2019 and 2022 from the UK and drawing on diverse literature on technological change and labour market signalling, we provide evidence that employers have started so-called "skill-based hiring" for AI and green roles, as more flexible hiring practices allow them to increase the available talent pool. In our observation period the demand for AI roles grew twice as much as average labour demand. At the same time, the mention of university education for AI roles declined by 23%, while AI roles advertise five times as many skills as job postings on average. Our regression analysis also shows that university degrees no longer show an educational premium for AI roles, while for green positions the educational premium persists. In contrast, AI skills have a wage premium of 16%, similar to having a PhD (17%). Our work recommends making use of alternative skill building formats such as apprenticeships, on-the-job training, MOOCs, vocational education and training, micro-certificates, and online bootcamps to use human capital to its full potential and to tackle talent shortages.

How Many Online Workers are there in the World? A Data-Driven Assessment

Apr 19, 2021

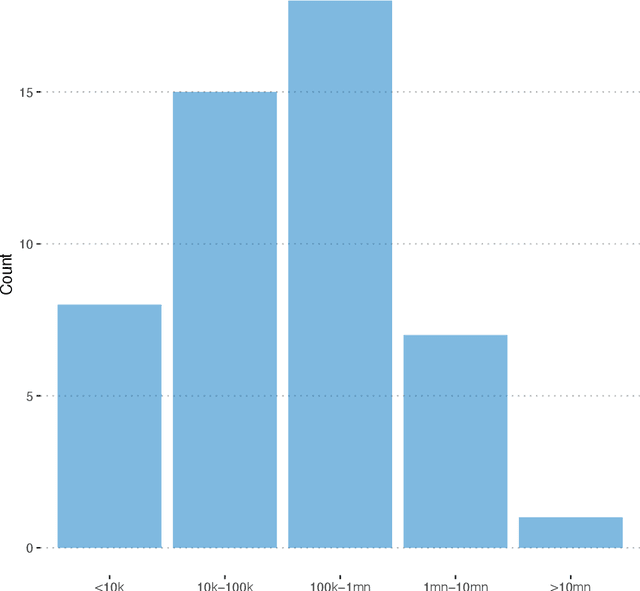

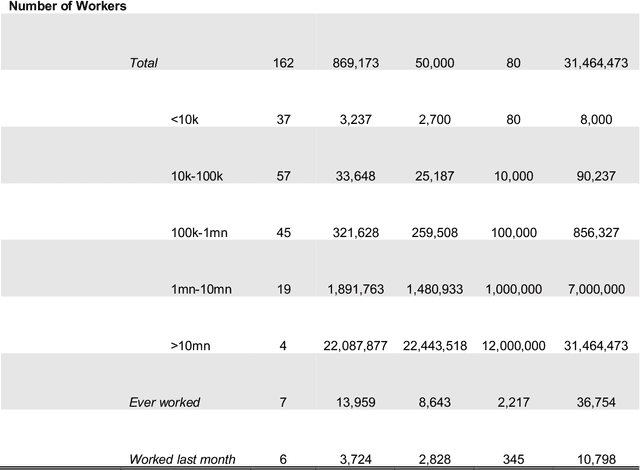

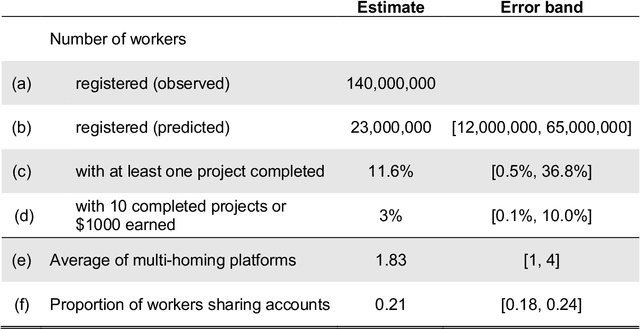

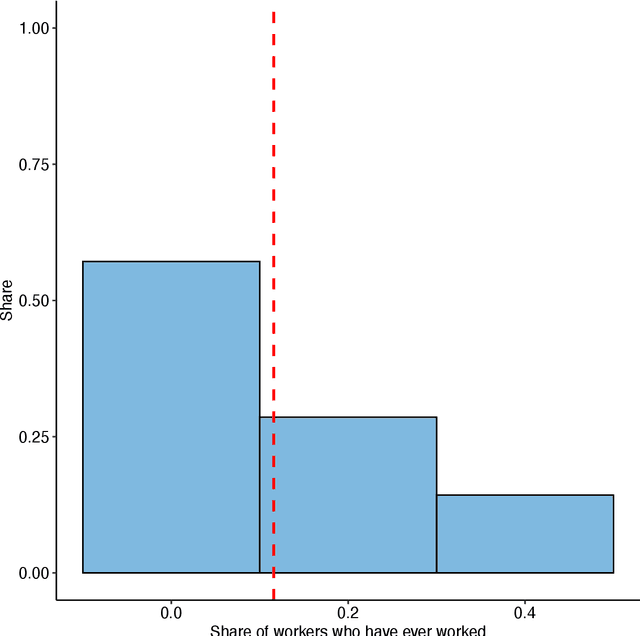

Abstract: An unknown number of people around the world are earning income by working through online labour platforms such as Upwork and Amazon Mechanical Turk. We combine data collected from various sources to build a data-driven assessment of the number of such online workers (also known as online freelancers) globally. Our headline estimate is that there are 163 million freelancer profiles registered on online labour platforms globally. Approximately 19 million of them have obtained work through the platform at least once, and 5 million have completed at least 10 projects or earned at least $1000. These numbers suggest a substantial growth from 2015 in registered worker accounts, but much less growth in amount of work completed by workers. Our results indicate that online freelancing represents a non-trivial segment of labour today, but one that is spread thinly across countries and sectors.
