Evan Miller

Adding Error Bars to Evals: A Statistical Approach to Language Model Evaluations

Nov 01, 2024

Abstract: Evaluations are critical for understanding the capabilities of large language models (LLMs). Fundamentally, evaluations are experiments; but the literature on evaluations has largely ignored the literature from other sciences on experiment analysis and planning. This article shows researchers with some training in statistics how to think about and analyze data from language model evaluations. Conceptualizing evaluation questions as having been drawn from an unseen super-population, we present formulas for analyzing evaluation data, measuring differences between two models, and planning an evaluation experiment. We make a number of specific recommendations for running language model evaluations and reporting experiment results in a way that minimizes statistical noise and maximizes informativeness.

Learning Abduction under Partial Observability

Nov 25, 2017

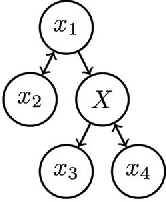

Abstract: Juba recently proposed a formulation of learning abductive reasoning from examples, in which both the relative plausibility of various explanations, as well as which explanations are valid, are learned directly from data. The main shortcoming of this formulation of the task is that it assumes access to full-information (i.e., fully specified) examples; relatedly, it offers no role for declarative background knowledge, as such knowledge is rendered redundant in the abduction task by complete information. In this work, we extend the formulation to utilize such partially specified examples, along with declarative background knowledge about the missing data. We show that it is possible to use implicitly learned rules together with the explicitly given declarative knowledge to support hypotheses in the course of abduction. We observe that when a small explanation exists, it is possible to obtain a much-improved guarantee in the challenging exception-tolerant setting. Such small, human-understandable explanations are of particular interest for potential applications of the task.
