Emilio Garcia-Fidalgo

MSC-VO: Exploiting Manhattan and Structural Constraints for Visual Odometry

Nov 05, 2021

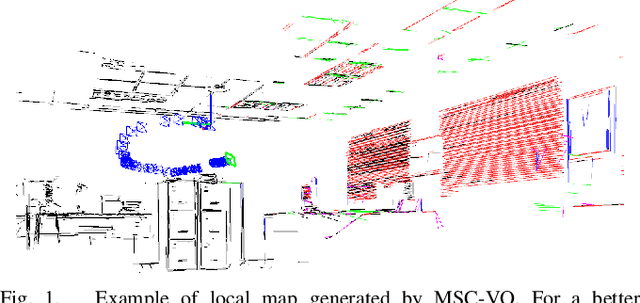

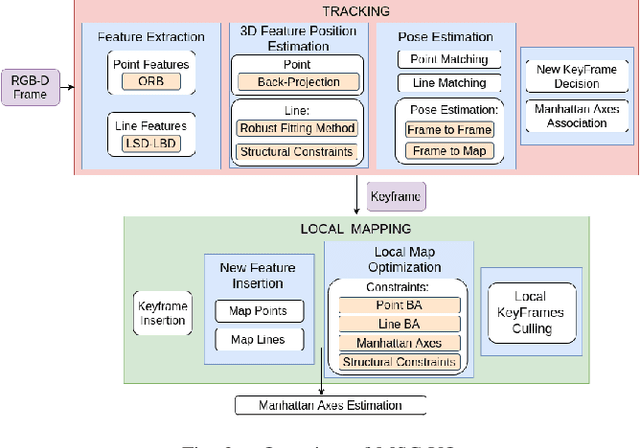

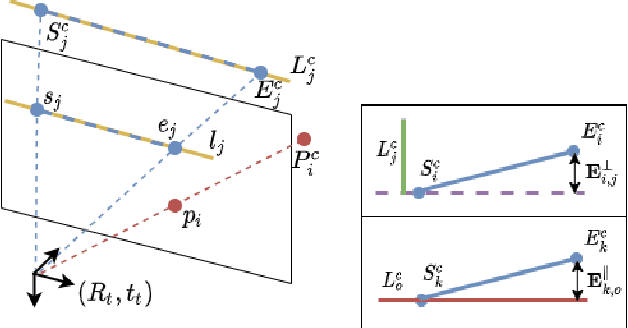

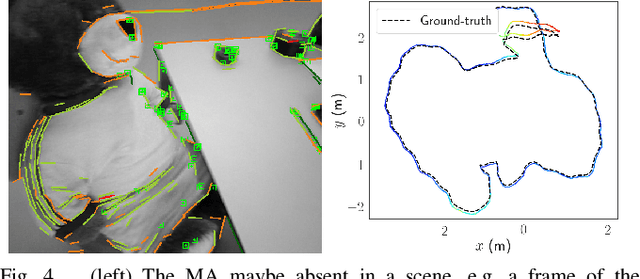

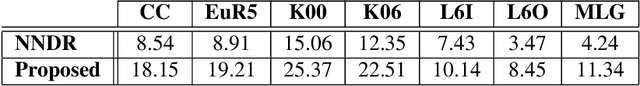

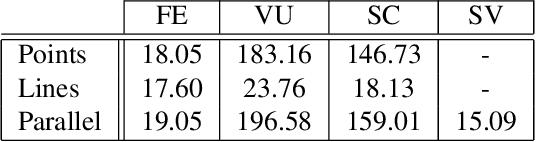

Abstract: Visual odometry algorithms tend to degrade when facing low-textured scenes (e.g., human-made environments), where it is often difficult to find a sufficient number of point features. Alternative geometric visual cues, such as lines, which are often present in these scenarios, can be particularly useful. Moreover, these scenarios typically exhibit structural regularities, such as parallelism or orthogonality, and satisfy the Manhattan World assumption. Under these premises, in this work we introduce MSC-VO, an RGB-D-based visual odometry approach that combines point and line features and leverages, where they exist, those structural regularities and the Manhattan axes of the scene. Within our approach, these structural constraints are first used to accurately estimate the 3D positions of the extracted lines. These constraints are then combined with the estimated Manhattan axes and the reprojection errors of points and lines to refine the camera pose by means of local map optimization. This combination enables our approach to operate even in the absence of the aforementioned constraints, allowing the method to work in a wider variety of scenarios. Furthermore, we propose a novel multi-view Manhattan axes estimation procedure that relies mainly on line features. MSC-VO is assessed using several public datasets, outperforming other state-of-the-art solutions and comparing favourably even with some SLAM methods.
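
The structural constraints mentioned above can be illustrated with direction-only residuals between 3D lines. The Python sketch below assumes each line is summarized by a direction vector and uses 1 - |cos θ| for nearly parallel pairs, |cos θ| for nearly orthogonal pairs, and the distance to the closest Manhattan axis. These residual forms, the 5° tolerance, and all function names are illustrative assumptions, not the exact cost terms used in MSC-VO.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    n = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / n, v[1] / n, v[2] / n)


def abs_cos(a: Vec3, b: Vec3) -> float:
    """Absolute cosine between two line directions (sign of a line is arbitrary)."""
    a, b = normalize(a), normalize(b)
    return abs(a[0] * b[0] + a[1] * b[1] + a[2] * b[2])


def structural_residuals(dirs: List[Vec3], tol_deg: float = 5.0) -> List[float]:
    """Residuals only for pairs already close to parallel or orthogonal.

    Pairs that satisfy neither relation add nothing, so the cost simply
    vanishes in scenes without structural regularities (assumed behaviour).
    """
    cos_tol = math.cos(math.radians(tol_deg))  # |cos| above this: near-parallel
    sin_tol = math.sin(math.radians(tol_deg))  # |cos| below this: near-orthogonal
    out: List[float] = []
    for i in range(len(dirs)):
        for j in range(i + 1, len(dirs)):
            c = abs_cos(dirs[i], dirs[j])
            if c >= cos_tol:
                out.append(1.0 - c)  # zero when exactly parallel
            elif c <= sin_tol:
                out.append(c)  # zero when exactly orthogonal
    return out


def manhattan_residual(d: Vec3, axes: List[Vec3]) -> float:
    """Misalignment of a line direction with its closest Manhattan axis."""
    return min(1.0 - abs_cos(d, ax) for ax in axes)
```

For example, with directions along x, almost x, y, and the xy diagonal, only the three near-parallel or near-orthogonal pairs contribute; the diagonal line is left unconstrained.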

LiODOM: Adaptive Local Mapping for Robust LiDAR-Only Odometry

Nov 05, 2021

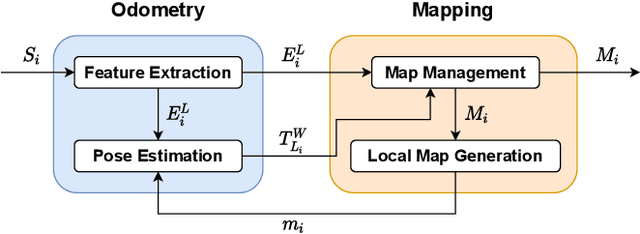

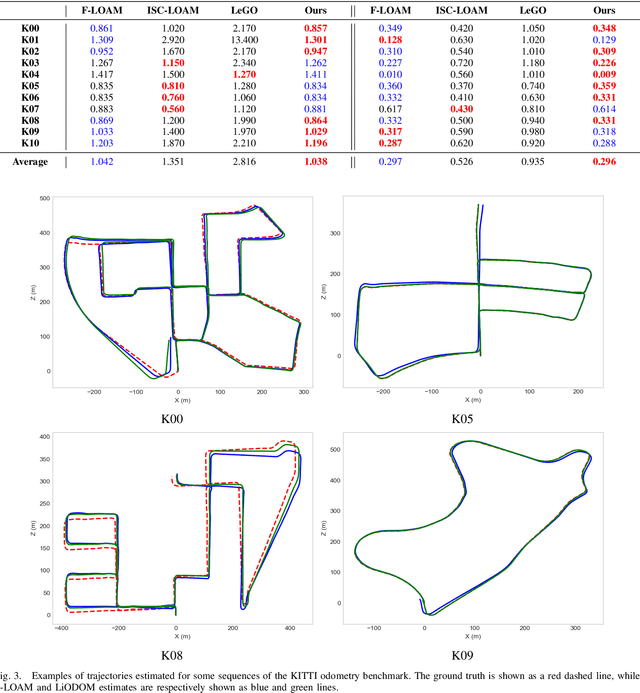

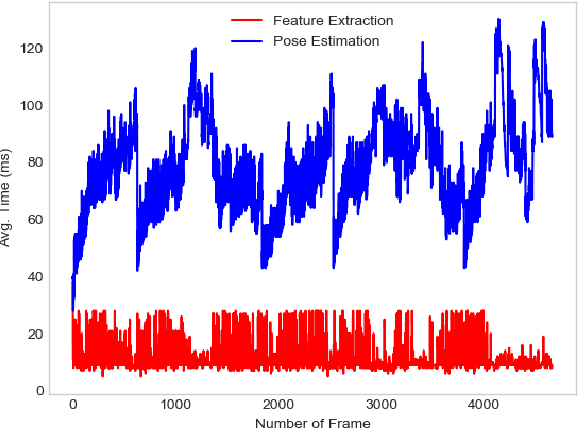

Abstract: In recent decades, Light Detection And Ranging (LiDAR) technology has been extensively explored as a robust alternative for self-localization and mapping. These approaches typically formulate ego-motion estimation as a non-linear optimization problem that depends on the correspondences established between the current point cloud and a map, whatever its scope, local or global. This paper proposes LiODOM, a novel LiDAR-only ODOmetry and Mapping approach for pose estimation and map building, based on minimizing a loss function derived from a set of weighted point-to-line correspondences with a local map abstracted from the set of available point clouds. Furthermore, this work places particular emphasis on map representation, given its relevance for fast data association. To efficiently represent the environment, we propose a data structure that, combined with a hashing scheme, allows fast access to any section of the map. LiODOM is validated by means of a set of experiments on public datasets, on which it compares favourably against other solutions. Its performance on board an aerial platform is also reported.
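
The two ingredients named in this abstract, a loss built from weighted point-to-line correspondences and a hashed map structure for fast neighbourhood access, can be sketched as follows. This is a hypothetical illustration only: the weights are passed in by the caller (LiODOM's weighting scheme is not reproduced), and `HashedVoxelMap`, a dict keyed by integer voxel indices, stands in for, but is not, the data structure proposed in the paper.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Point = Tuple[float, float, float]
Key = Tuple[int, int, int]


def _sub(a: Point, b: Point) -> Point:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Point, b: Point) -> Point:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _norm(a: Point) -> float:
    return math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)


def point_to_line_distance(p: Point, a: Point, b: Point) -> float:
    """Distance from scan point p to the infinite line through map points a and b."""
    return _norm(_cross(_sub(p, a), _sub(p, b))) / _norm(_sub(b, a))


def weighted_loss(corr: Iterable[Tuple[Point, Point, Point, float]]) -> float:
    """Sum of w * d^2 over (scan point, line point a, line point b, weight)."""
    return sum(w * point_to_line_distance(p, a, b) ** 2 for p, a, b, w in corr)


class HashedVoxelMap:
    """Hypothetical map: points bucketed by integer voxel index in a hash table."""

    def __init__(self, voxel_size: float) -> None:
        self.voxel_size = voxel_size
        self.cells: Dict[Key, List[Point]] = defaultdict(list)

    def key(self, p: Point) -> Key:
        return (math.floor(p[0] / self.voxel_size),
                math.floor(p[1] / self.voxel_size),
                math.floor(p[2] / self.voxel_size))

    def insert(self, p: Point) -> None:
        self.cells[self.key(p)].append(p)

    def neighbours(self, p: Point, r: int = 1) -> List[Point]:
        """Points in the (2r+1)^3 voxels around p; each lookup is an average O(1) hash access."""
        i, j, k = self.key(p)
        out: List[Point] = []
        for di in range(-r, r + 1):
            for dj in range(-r, r + 1):
                for dk in range(-r, r + 1):
                    # .get avoids creating empty cells in the defaultdict
                    out.extend(self.cells.get((i + di, j + dj, k + dk), []))
        return out
```

Because only the voxels around the query point are visited, the cost of finding candidate map points for a correspondence does not depend on how large the map has grown.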

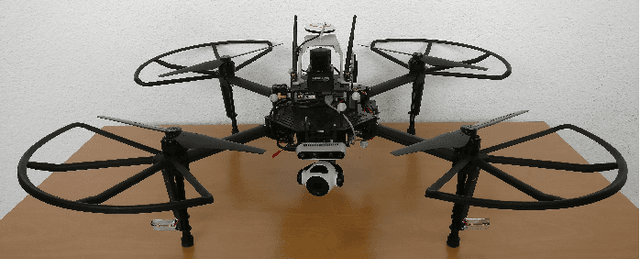

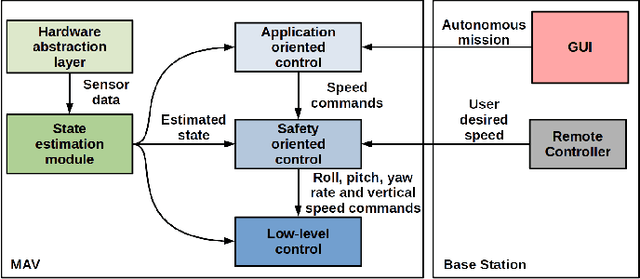

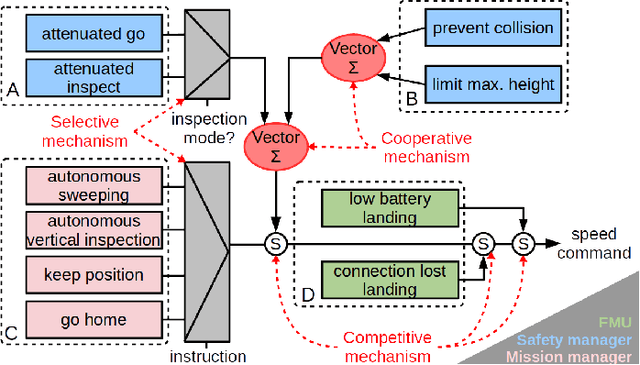

Evaluation of a Skill-based Control Architecture for a Visual Inspection-oriented Aerial Platform

Sep 03, 2020

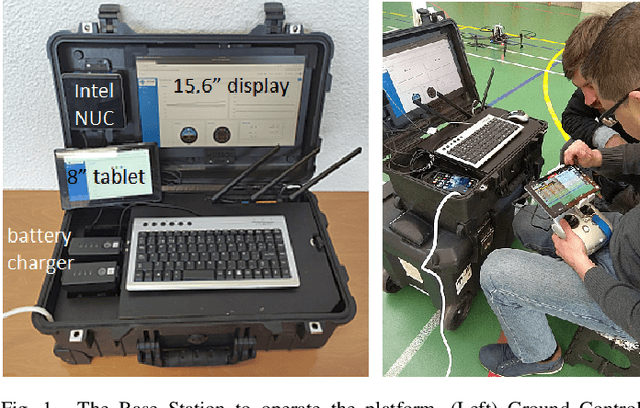

Abstract: The periodic inspection of vessels is a fundamental task to ensure their integrity and avoid maritime accidents. Currently, these inspections represent a high cost for the ship owner, in addition to the danger that this kind of hostile environment entails for the surveyors. In these situations, robotic platforms are useful not only for safety reasons, but also to reduce vessel downtime and simplify the inspection procedures. In this context, this paper reports on the evaluation of a new control architecture devised to drive an aerial platform during these inspection procedures. The control architecture, based on an extensive use of behaviour-based high-level control, implements visual inspection-oriented functionalities while relieving the operator of the complexities of inspection flights and ensuring the integrity of the platform. Apart from the control software, the full system comprises a multi-rotor platform equipped with a suitable set of sensors to permit teleporting the surveyor to the areas that need inspection. The paper provides an extensive set of test results in different scenarios, under different operational conditions and over real vessels, in order to demonstrate the suitability of the platform for this kind of task.
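
To make the idea of behaviour-based high-level control concrete, the sketch below shows a generic fixed-priority arbitration in which safety behaviours override the operator's request. It is not the architecture evaluated in the paper: the behaviours (`land_on_low_battery`, `keep_safe_distance`, `follow_operator`), the `State` fields, and the 1 m distance threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Command:
    vx: float  # forward velocity (m/s)
    vy: float  # lateral velocity (m/s)
    vz: float  # vertical velocity (m/s)


@dataclass
class State:
    operator_cmd: Command    # hypothetical high-level request from the surveyor
    front_distance_m: float  # hypothetical range to the inspected surface
    battery_low: bool


Behaviour = Callable[[State], Optional[Command]]


def land_on_low_battery(s: State) -> Optional[Command]:
    # Safety first: descend slowly regardless of what the operator asks for.
    return Command(0.0, 0.0, -0.3) if s.battery_low else None


def keep_safe_distance(s: State, min_dist_m: float = 1.0) -> Optional[Command]:
    # Cancel forward motion towards the surface but keep the rest of the request.
    if s.front_distance_m < min_dist_m and s.operator_cmd.vx > 0.0:
        return Command(0.0, s.operator_cmd.vy, s.operator_cmd.vz)
    return None


def follow_operator(s: State) -> Optional[Command]:
    return s.operator_cmd


# Earlier entries have higher priority and suppress later ones.
BEHAVIOURS: List[Behaviour] = [land_on_low_battery, keep_safe_distance, follow_operator]


def arbitrate(s: State, behaviours: List[Behaviour] = BEHAVIOURS) -> Command:
    for behaviour in behaviours:
        cmd = behaviour(s)
        if cmd is not None:
            return cmd
    return Command(0.0, 0.0, 0.0)  # no active behaviour: hover
```

With this ordering, the operator only ever issues simple motion requests, while the higher-priority behaviours keep the platform safe, which mirrors, in a very reduced form, the division of responsibilities described in the abstract.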

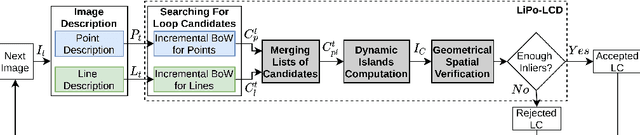

LiPo-LCD: Combining Lines and Points for Appearance-based Loop Closure Detection

Sep 03, 2020

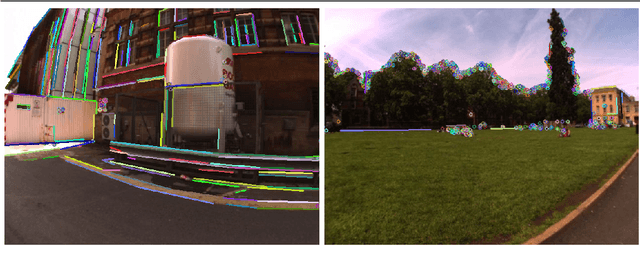

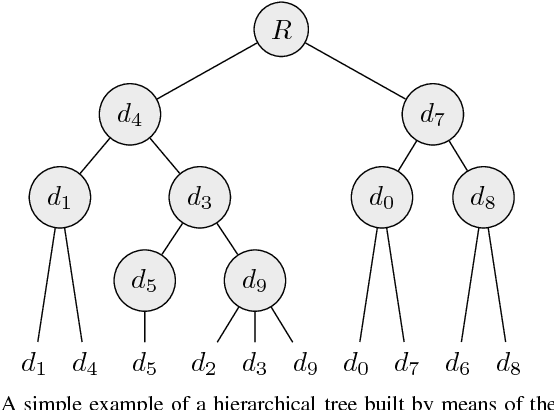

Abstract: Visual SLAM approaches typically depend on loop closure detection to correct the inconsistencies that may arise during the computation of the map and the camera trajectory, usually relying on point features to detect and close the existing loops. In low-textured scenarios, however, it is difficult to find enough point features and, hence, the performance of these solutions drops drastically. An alternative for human-made scenarios, given their structural regularity, is the use of geometric cues such as straight segments, which are frequently present in these environments. In this context, this paper introduces LiPo-LCD, a novel appearance-based loop closure detection method that integrates lines and points. Adopting the idea of incremental Bag-of-Binary-Words schemes, we build separate BoW models for each feature type and use them to retrieve previously seen images using a late fusion strategy. Additionally, a simple but effective mechanism, based on the concept of island, groups similar images close in time to reduce the candidate search effort. A final step geometrically validates the loop candidates by incorporating the detected lines, through a process comprising a line feature matching stage followed by a robust spatial verification stage, now combining both lines and points. As reported in the paper, LiPo-LCD compares well with several state-of-the-art solutions on a number of datasets involving different environmental conditions.
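
Late fusion means that each BoW model is queried on its own and only the resulting per-image scores are combined. The sketch below uses a weighted sum of min-max normalized scores, which is one common late-fusion rule; it is an assumption for illustration, and the normalization, the weight `alpha`, and the function names are not taken from LiPo-LCD.

```python
from typing import Dict


def min_max_normalize(scores: Dict[int, float]) -> Dict[int, float]:
    """Rescale one model's scores to [0, 1] so the two models become comparable."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {k: 1.0 for k in scores}
    return {k: (v - lo) / (hi - lo) for k, v in scores.items()}


def late_fusion(point_scores: Dict[int, float],
                line_scores: Dict[int, float],
                alpha: float = 0.5) -> Dict[int, float]:
    """Fuse per-image scores from two independently queried BoW models.

    An image retrieved by only one model receives 0 from the other one.
    """
    pn = min_max_normalize(point_scores)
    ln = min_max_normalize(line_scores)
    return {i: alpha * pn.get(i, 0.0) + (1.0 - alpha) * ln.get(i, 0.0)
            for i in set(pn) | set(ln)}
```

For instance, `late_fusion({1: 0.0, 2: 0.5, 3: 1.0}, {2: 0.0, 3: 1.0, 4: 0.5})` ranks image 3 first, since both models agree on it. Keeping the models separate until this point lets each feature type use its own vocabulary, and `alpha` trades off points against lines (e.g., favouring lines in low-textured scenes).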

iBoW-LCD: An Appearance-based Loop Closure Detection Approach using Incremental Bags of Binary Words

Jul 18, 2018

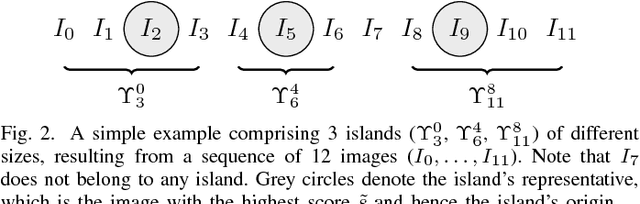

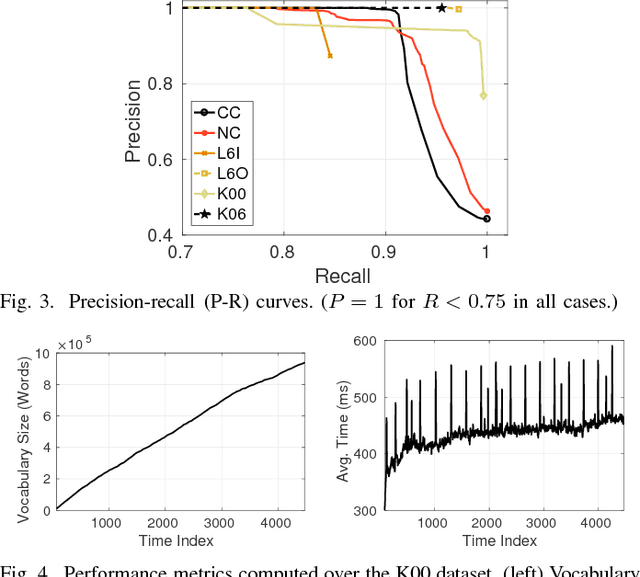

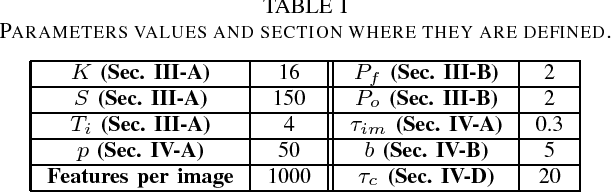

Abstract: In this paper, we introduce iBoW-LCD, a novel appearance-based loop closure detection method. The presented approach makes use of an incremental Bag-of-Words (BoW) scheme based on binary descriptors to retrieve previously seen similar images, avoiding the vocabulary training stage usually required by classic BoW models. In addition, to detect loop closures, iBoW-LCD builds on the concept of dynamic islands, a simple but effective mechanism to group similar images close in time, which reduces the computational times typically associated with Bayesian frameworks. Our approach is validated using several indoor and outdoor public datasets, taken under different environmental conditions, achieving high accuracy and outperforming other state-of-the-art solutions.
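
The two ideas in this abstract, a vocabulary that grows online from binary descriptors and the grouping of temporally close images into islands, can be sketched in a simplified form. Assumptions: descriptors are Python ints compared by Hamming distance, the vocabulary is a flat list with an inverted index (the actual method uses a hierarchical index), and islands use a fixed gap `max_gap` rather than the adaptive sizing of iBoW-LCD's dynamic islands. All names are hypothetical.

```python
from typing import Dict, List, Optional, Set, Tuple


def hamming(a: int, b: int) -> int:
    """Hamming distance between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


class IncrementalVocabulary:
    """Flat incremental binary BoW: no offline training stage.

    A descriptor joins its nearest existing word if it lies within
    `max_dist` bits; otherwise it becomes a new word.
    """

    def __init__(self, max_dist: int) -> None:
        self.max_dist = max_dist
        self.words: List[int] = []
        self.inverted: Dict[int, Set[int]] = {}  # word id -> image ids

    def _nearest(self, d: int) -> Optional[int]:
        best, best_dist = None, self.max_dist + 1
        for wid, w in enumerate(self.words):
            dist = hamming(d, w)
            if dist < best_dist:
                best, best_dist = wid, dist
        return best

    def add_image(self, image_id: int, descriptors: List[int]) -> None:
        for d in descriptors:
            wid = self._nearest(d)
            if wid is None:  # unseen appearance: grow the vocabulary
                self.words.append(d)
                wid = len(self.words) - 1
                self.inverted[wid] = set()
            self.inverted[wid].add(image_id)

    def query(self, descriptors: List[int]) -> Dict[int, int]:
        """Vote for every stored image that shares a word with the query."""
        votes: Dict[int, int] = {}
        for d in descriptors:
            wid = self._nearest(d)
            if wid is not None:
                for img in self.inverted[wid]:
                    votes[img] = votes.get(img, 0) + 1
        return votes


def build_islands(scores: Dict[int, float], max_gap: int = 2) -> List[Tuple[float, int, int]]:
    """Group image ids close in time into (score_sum, first_id, last_id) islands."""
    islands: List[Tuple[float, int, int]] = []
    for i in sorted(scores):
        if islands and i - islands[-1][2] <= max_gap:
            total, first, _ = islands[-1]
            islands[-1] = (total + scores[i], first, i)
        else:
            islands.append((scores[i], i, i))
    return islands
```

Scoring a whole island instead of individual images means that a run of consecutive, moderately similar frames can outweigh a single isolated match, which is the intuition behind grouping similar images close in time.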

* Accepted for publication in IEEE Robotics and Automation Letters (RA-L), 2018
