Emad A. Mohammed

Med-SegLens: Latent-Level Model Diffing for Interpretable Medical Image Segmentation

Feb 11, 2026Abstract:Modern segmentation models achieve strong predictive performance but remain largely opaque, limiting our ability to diagnose failures, understand dataset shift, or intervene in a principled manner. We introduce Med-SegLens, a model-diffing framework that decomposes segmentation model activations into interpretable latent features using sparse autoencoders trained on SegFormer and U-Net. Through cross-architecture and cross-dataset latent alignment across healthy, adult, pediatric, and sub-Saharan African glioma cohorts, we identify a stable backbone of shared representations, while dataset shift is driven by differential reliance on population-specific latents. We show that these latents act as causal bottlenecks for segmentation failures, and that targeted latent-level interventions can correct errors and improve cross-dataset adaption without retraining, recovering performance in 70% of failure cases and improving Dice score from 39.4% to 74.2%. Our results demonstrate that latent-level model diffing provides a practical and mechanistic tool for diagnosing failures and mitigating dataset shift in segmentation models.

Prostate Capsule Segmentation from Micro-Ultrasound Images using Adaptive Focal Loss

Sep 19, 2025

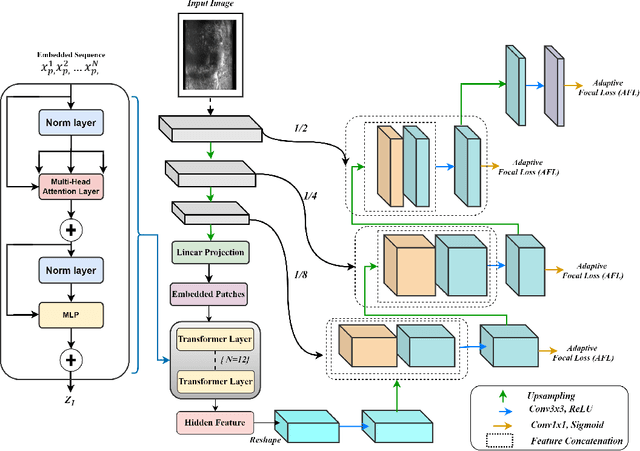

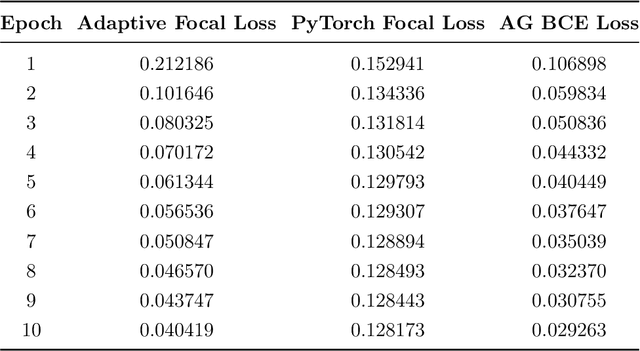

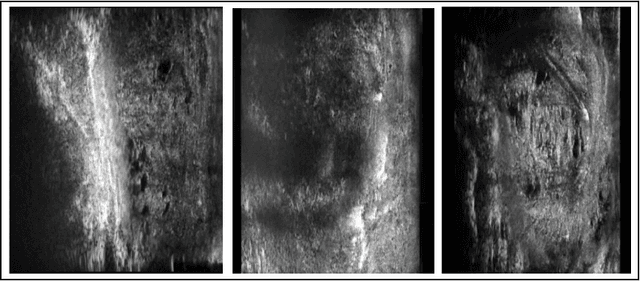

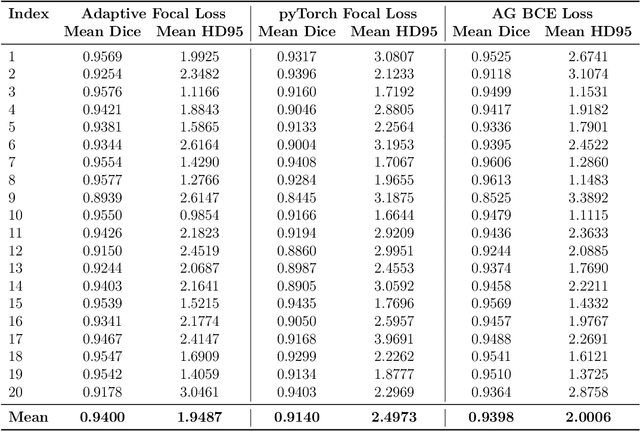

Abstract:Micro-ultrasound (micro-US) is a promising imaging technique for cancer detection and computer-assisted visualization. This study investigates prostate capsule segmentation using deep learning techniques from micro-US images, addressing the challenges posed by the ambiguous boundaries of the prostate capsule. Existing methods often struggle in such cases, motivating the development of a tailored approach. This study introduces an adaptive focal loss function that dynamically emphasizes both hard and easy regions, taking into account their respective difficulty levels and annotation variability. The proposed methodology has two primary strategies: integrating a standard focal loss function as a baseline to design an adaptive focal loss function for proper prostate capsule segmentation. The focal loss baseline provides a robust foundation, incorporating class balancing and focusing on examples that are difficult to classify. The adaptive focal loss offers additional flexibility, addressing the fuzzy region of the prostate capsule and annotation variability by dilating the hard regions identified through discrepancies between expert and non-expert annotations. The proposed method dynamically adjusts the segmentation model's weights better to identify the fuzzy regions of the prostate capsule. The proposed adaptive focal loss function demonstrates superior performance, achieving a mean dice coefficient (DSC) of 0.940 and a mean Hausdorff distance (HD) of 1.949 mm in the testing dataset. These results highlight the effectiveness of integrating advanced loss functions and adaptive techniques into deep learning models. This enhances the accuracy of prostate capsule segmentation in micro-US images, offering the potential to improve clinical decision-making in prostate cancer diagnosis and treatment planning.

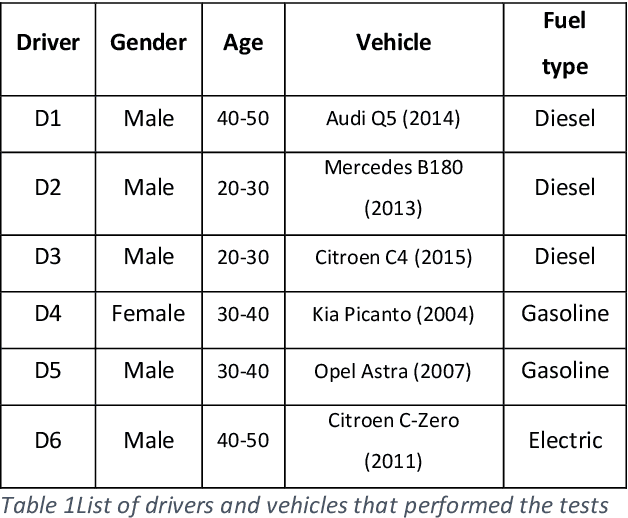

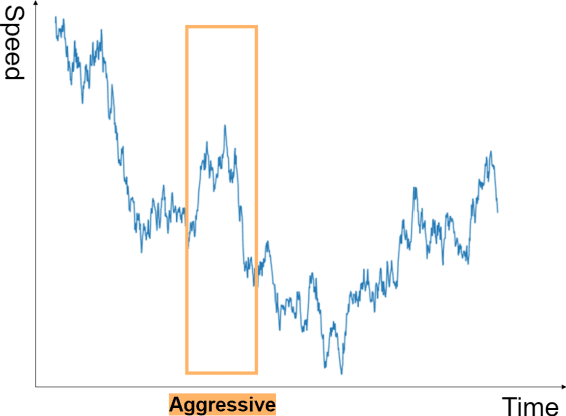

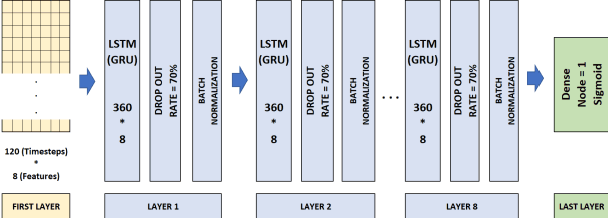

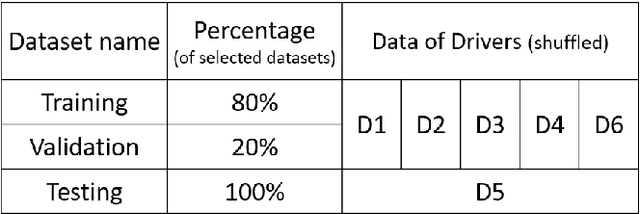

Deep Learning Approach for Aggressive Driving Behaviour Detection

Nov 08, 2021

Abstract:Driving behaviour is one of the primary causes of road crashes and accidents, and these can be decreased by identifying and minimizing aggressive driving behaviour. This study identifies the timesteps when a driver in different circumstances (rush, mental conflicts, reprisal) begins to drive aggressively. An observer (real or virtual) is needed to examine driving behaviour to discover aggressive driving occasions; we overcome this problem by using a smartphone's GPS sensor to detect locations and classify drivers' driving behaviour every three minutes. To detect timeseries patterns in our dataset, we employ RNN (GRU, LSTM) algorithms to identify patterns during the driving course. The algorithm is independent of road, vehicle, position, or driver characteristics. We conclude that three minutes (or more) of driving (120 seconds of GPS data) is sufficient to identify driver behaviour. The results show high accuracy and a high F1 score.

Dynamic and Systematic Survey of Deep Learning Approaches for Driving Behavior Analysis

Sep 18, 2021

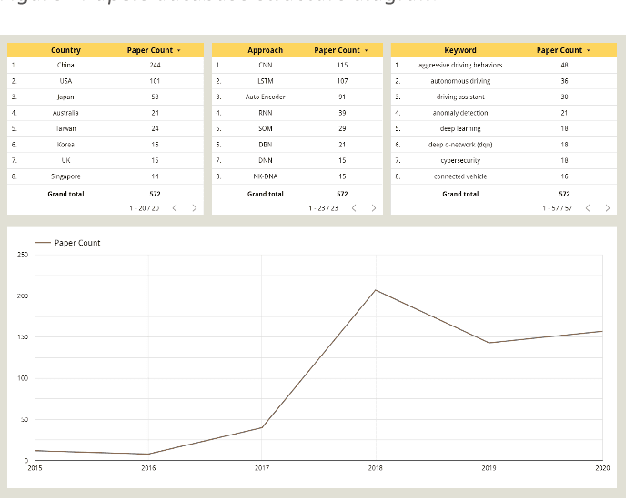

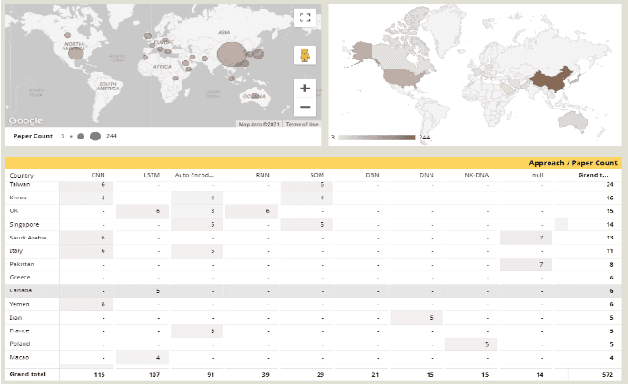

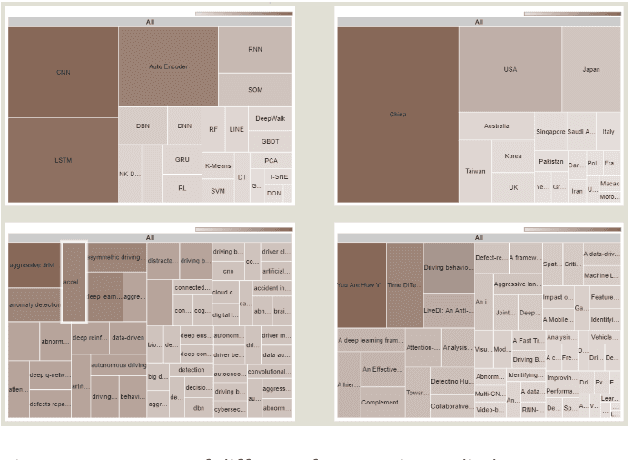

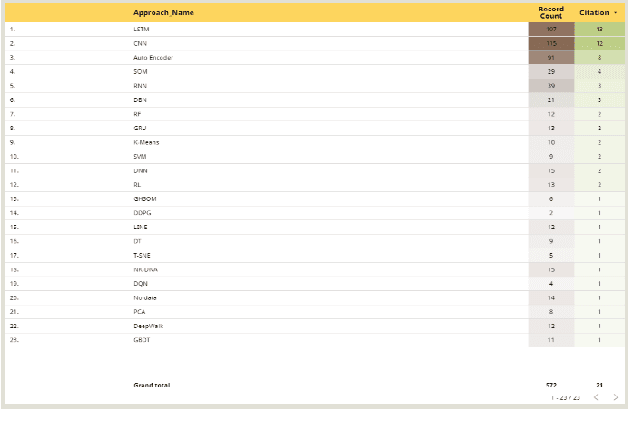

Abstract:Improper driving results in fatalities, damages, increased energy consumptions, and depreciation of the vehicles. Analyzing driving behaviour could lead to optimize and avoid mentioned issues. By identifying the type of driving and mapping them to the consequences of that type of driving, we can get a model to prevent them. In this regard, we try to create a dynamic survey paper to review and present driving behaviour survey data for future researchers in our research. By analyzing 58 articles, we attempt to classify standard methods and provide a framework for future articles to be examined and studied in different dashboards and updated about trends.

Detection of Alzheimer's Disease Using Graph-Regularized Convolutional Neural Network Based on Structural Similarity Learning of Brain Magnetic Resonance Images

Feb 25, 2021

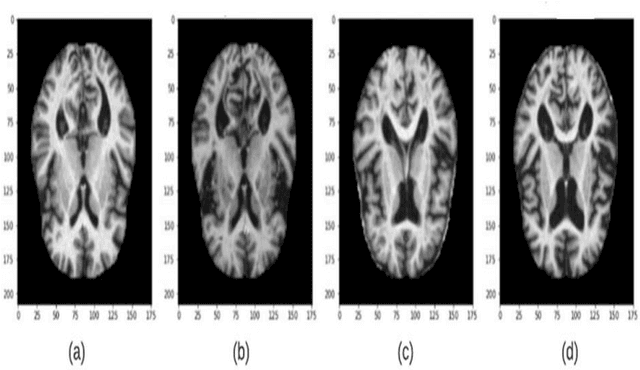

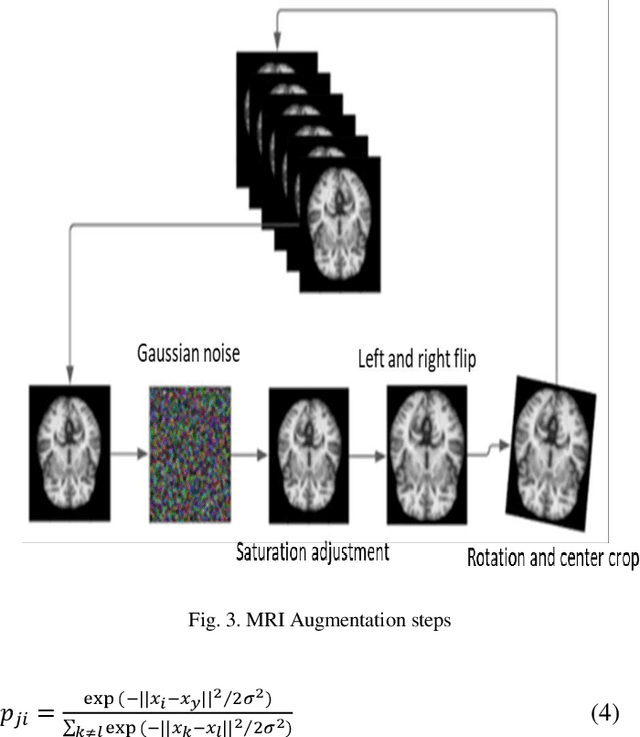

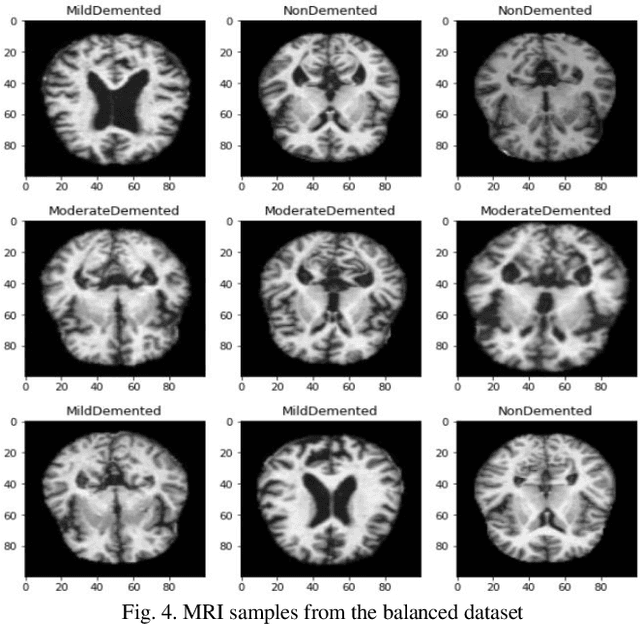

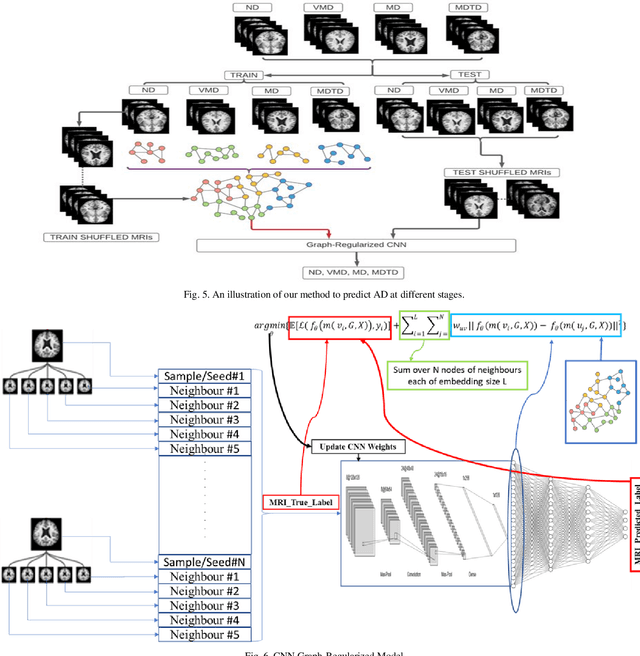

Abstract:Objective: This paper presents an Alzheimer's disease (AD) detection method based on learning structural similarity between Magnetic Resonance Images (MRIs) and representing this similarity as a graph. Methods: We construct the similarity graph using embedded features of the input image (i.e., Non-Demented (ND), Very Mild Demented (VMD), Mild Demented (MD), and Moderated Demented (MDTD)). We experiment and compare different dimension-reduction and clustering algorithms to construct the best similarity graph to capture the similarity between the same class images using the cosine distance as a similarity measure. We utilize the similarity graph to present (sample) the training data to a convolutional neural network (CNN). We use the similarity graph as a regularizer in the loss function of a CNN model to minimize the distance between the input images and their k-nearest neighbours in the similarity graph while minimizing the categorical cross-entropy loss between the training image predictions and the actual image class labels. Results: We conduct extensive experiments with several pre-trained CNN models and compare the results to other recent methods. Conclusion: Our method achieves superior performance on the testing dataset (accuracy = 0.986, area under receiver operating characteristics curve = 0.998, F1 measure = 0.987). Significance: The classification results show an improvement in the prediction accuracy compared to the other methods. We release all the code used in our experiments to encourage reproducible research in this area

A Review of Artificial Intelligence Technologies for Early Prediction of Alzheimer's Disease

Dec 22, 2020

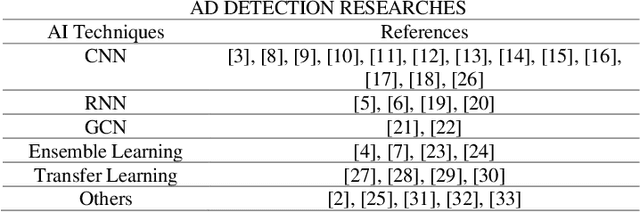

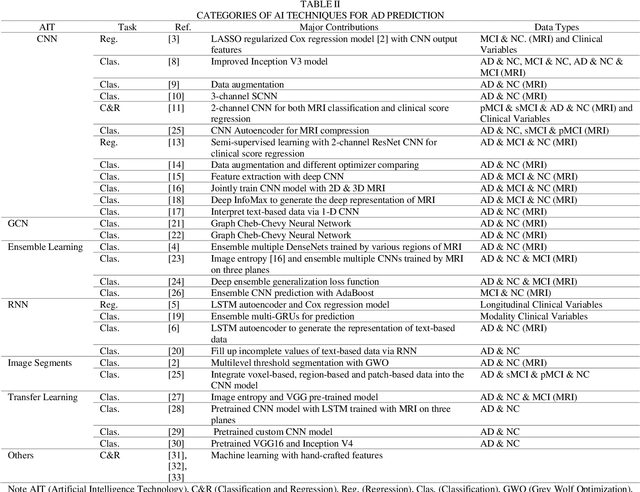

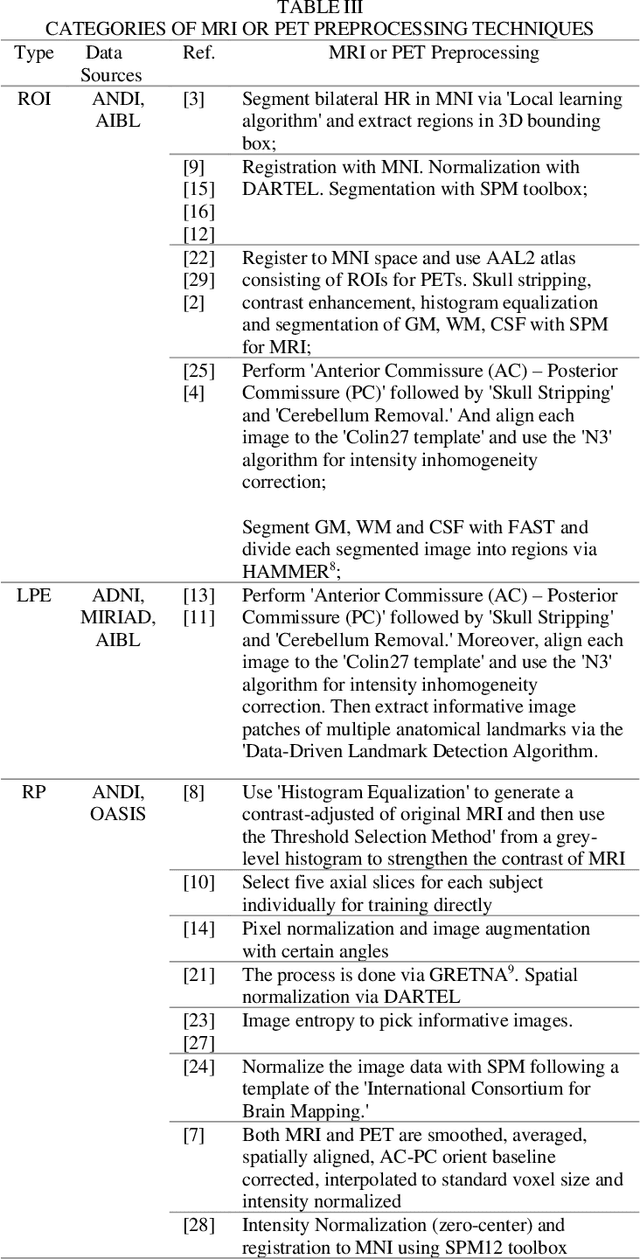

Abstract:Alzheimer's Disease (AD) is a severe brain disorder, destroying memories and brain functions. AD causes chronically, progressively, and irreversibly cognitive declination and brain damages. The reliable and effective evaluation of early dementia has become essential research with medical imaging technologies and computer-aided algorithms. This trend has moved to modern Artificial Intelligence (AI) technologies motivated by deeplearning success in image classification and natural language processing. The purpose of this review is to provide an overview of the latest research involving deep-learning algorithms in evaluating the process of dementia, diagnosing the early stage of AD, and discussing an outlook for this research. This review introduces various applications of modern AI algorithms in AD diagnosis, including Convolutional Neural Network (CNN), Recurrent Neural Network (RNN), Automatic Image Segmentation, Autoencoder, Graph CNN (GCN), Ensemble Learning, and Transfer Learning. The advantages and disadvantages of the proposed methods and their performance are discussed. The conclusion section summarizes the primary contributions and medical imaging preprocessing techniques applied in the reviewed research. Finally, we discuss the limitations and future outlooks.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge