Eley Ng

Diffusion Co-Policy for Synergistic Human-Robot Collaborative Tasks

May 20, 2023

Abstract: Modeling multimodal human behavior accurately has been a key barrier to increasing the level of interaction between human and robot, particularly for collaborative tasks. Our key insight is that the predictive accuracy of human behaviors on physical tasks is bottlenecked by the model for methods involving human behavior prediction. We present a method for training denoising diffusion probabilistic models on a dataset of collaborative human-human demonstrations and conditioning on past human partner actions to plan sequences of robot actions that synergize well with humans during test time. We demonstrate that the method outperforms other state-of-the-art learning methods on human-robot table-carrying, a continuous state-action task, in both simulation and real settings with a human in the loop. Moreover, we qualitatively highlight compelling robot behaviors that arise during evaluations and that demonstrate evidence of true human-robot collaboration, including mutual adaptation, shared task understanding, leadership switching, learned partner behaviors, and low levels of wasteful interaction forces arising from dissent. Project page coming soon.

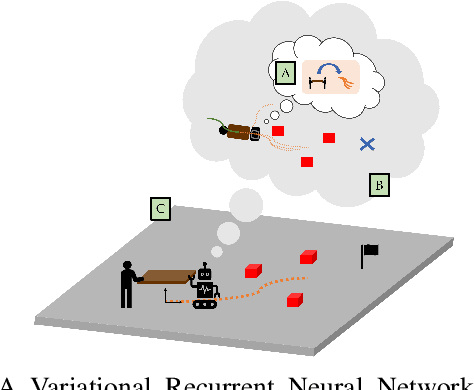

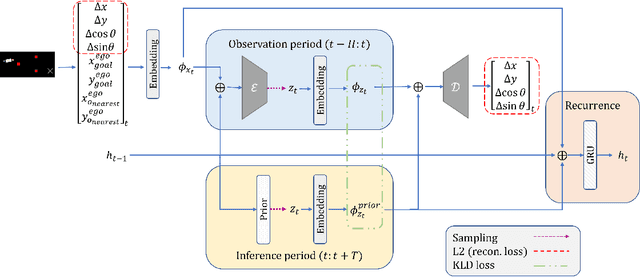

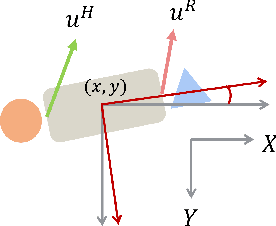

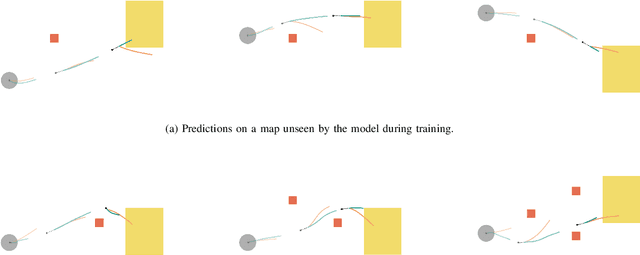

It Takes Two: Learning to Plan for Human-Robot Cooperative Carrying

Sep 26, 2022

Abstract: Collaborative table-carrying is a complex task due to the continuous nature of the action and state spaces, multimodality of strategies, existence of obstacles in the environment, and the need for instantaneous adaptation to other agents. In this work, we present a method for predicting realistic motion plans for cooperative human-robot teams on a table-carrying task. Using a Variational Recurrent Neural Network (VRNN) to model the variation in the trajectory of a human-robot team over time, we are able to capture the distribution over the team's future states while leveraging information from interaction history. The key to our approach is our model's ability to leverage human demonstration data and generate trajectories that synergize well with humans during test time. We show that the model generates more human-like motion compared to a baseline, centralized sampling-based planner, Rapidly-exploring Random Trees (RRT). Furthermore, we evaluate the VRNN planner with a human partner and show its ability to both generate more human-like paths and achieve a higher task success rate than RRT can while planning with a human. Finally, we demonstrate that a LoCoBot using the VRNN planner can complete the task successfully with a human controlling another LoCoBot.
