Dinh Q. Phung

A Slice Sampler for Restricted Hierarchical Beta Process with Applications to Shared Subspace Learning

Oct 16, 2012

Abstract: Hierarchical beta process has found interesting applications in recent years. In this paper we present a modified hierarchical beta process prior with applications to hierarchical modeling of multiple data sources. The novel use of the prior over a hierarchical factor model allows factors to be shared across different sources. We derive a slice sampler for this model, enabling tractable inference even when the likelihood and the prior over parameters are non-conjugate. This allows the application of the model in much wider contexts without restrictions. We present two different data generative models: a linear Gaussian-Gaussian model for real-valued data and a linear Poisson-gamma model for count data. Encouraging transfer learning results are shown for two real-world applications: text modeling and content-based image retrieval.

Ordinal Boltzmann Machines for Collaborative Filtering

May 09, 2012

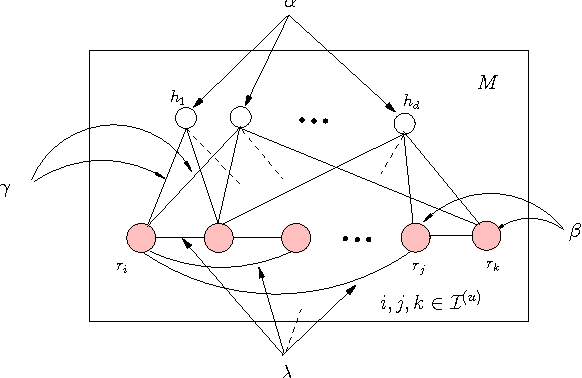

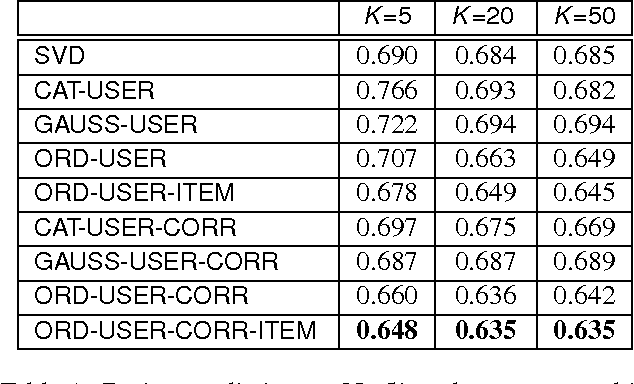

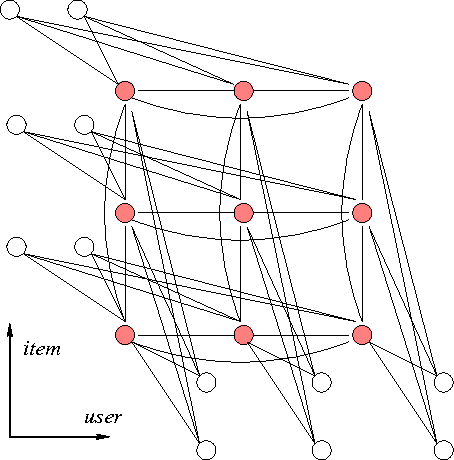

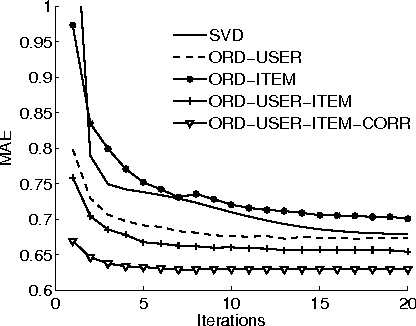

Abstract: Collaborative filtering is an effective recommendation technique wherein the preference of an individual can potentially be predicted based on preferences of other members. Early algorithms often relied on the strong locality in the preference data, that is, it is enough to predict preference of a user on a particular item based on a small subset of other users with similar tastes or of other items with similar properties. More recently, dimensionality reduction techniques have proved to be equally competitive, and these are based on the co-occurrence patterns rather than locality. This paper explores and extends a probabilistic model known as the Boltzmann Machine for collaborative filtering tasks. It seamlessly integrates both the similarity and co-occurrence in a principled manner. In particular, we study parameterisation options to deal with the ordinal nature of the preferences, and propose a joint modelling of both the user-based and item-based processes. Experiments on moderate and large-scale movie recommendation show that our framework rivals existing well-known methods.

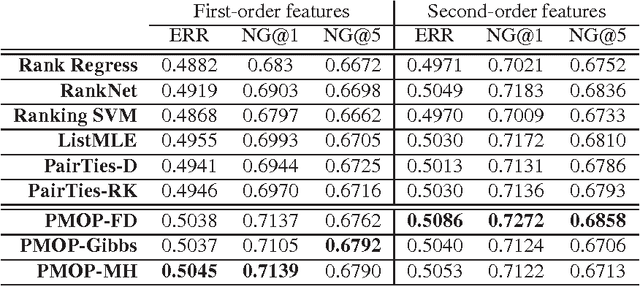

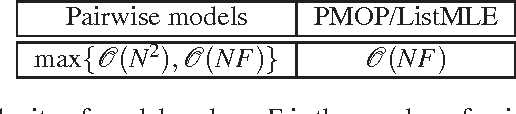

Probabilistic Models over Ordered Partitions with Application in Learning to Rank

Oct 04, 2010

Abstract: This paper addresses the general problem of modelling and learning rank data with ties. We propose a probabilistic generative model that models the process as permutations over partitions. This results in a super-exponential combinatorial state space with unknown numbers of partitions and unknown ordering among them. We approach the problem from the discrete choice theory, where subsets are chosen in a stagewise manner, reducing the state space at each stage significantly. Further, we show that with suitable parameterisation, we can still learn the models in linear time. We evaluate the proposed models on the problem of learning to rank with the data from the recently held Yahoo! challenge, and demonstrate that the models are competitive against well-known rivals.

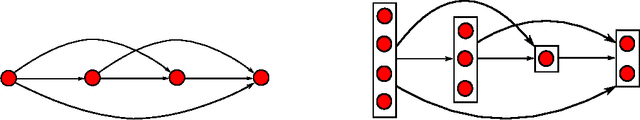

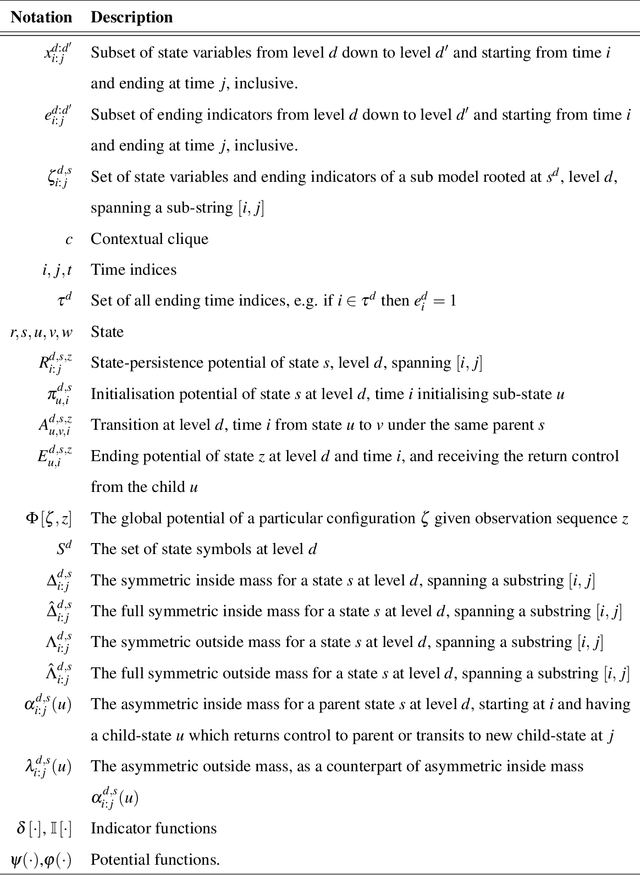

Hierarchical Semi-Markov Conditional Random Fields for Recursive Sequential Data

Sep 10, 2010

Abstract: Inspired by the hierarchical hidden Markov models (HHMM), we present the hierarchical semi-Markov conditional random field (HSCRF), a generalisation of embedded undirected Markov chains to model complex hierarchical, nested Markov processes. It is parameterised in a discriminative framework and has polynomial time algorithms for learning and inference. Importantly, we consider partially-supervised learning and propose algorithms for generalised partially-supervised learning and constrained inference. We demonstrate the HSCRF in two applications: (i) recognising human activities of daily living (ADLs) from indoor surveillance cameras, and (ii) noun-phrase chunking. We show that the HSCRF is capable of learning rich hierarchical models with reasonable accuracy in both fully and partially observed data cases.
