Diego Corrêa da Silva

Calibrated Recommendations: Survey and Future Directions

Jul 03, 2025

Abstract: The idea of calibrated recommendations is that the properties of the items suggested to users should match the distribution of their individual past preferences. Calibration techniques therefore help ensure that the recommendations provided to a user are not limited to a certain subset of the user's interests. Over the past few years, we have observed an increasing number of research works that use calibration for different purposes, including questions of diversity, biases, and fairness. In this work, we provide a survey of recent developments in the area of calibrated recommendations. We review existing technical approaches for calibration and provide an overview of empirical and analytical studies on the effectiveness of calibration for different use cases. Furthermore, we discuss limitations and common challenges when implementing calibration in practice.

Introducing a Framework and a Decision Protocol to Calibrate Recommender Systems

Apr 07, 2022

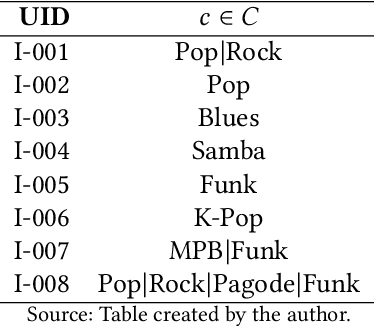

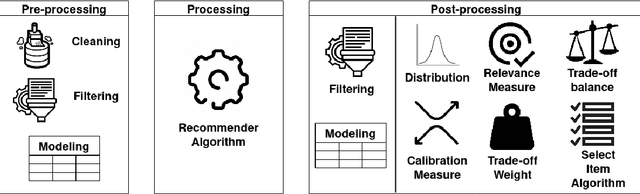

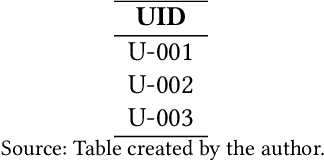

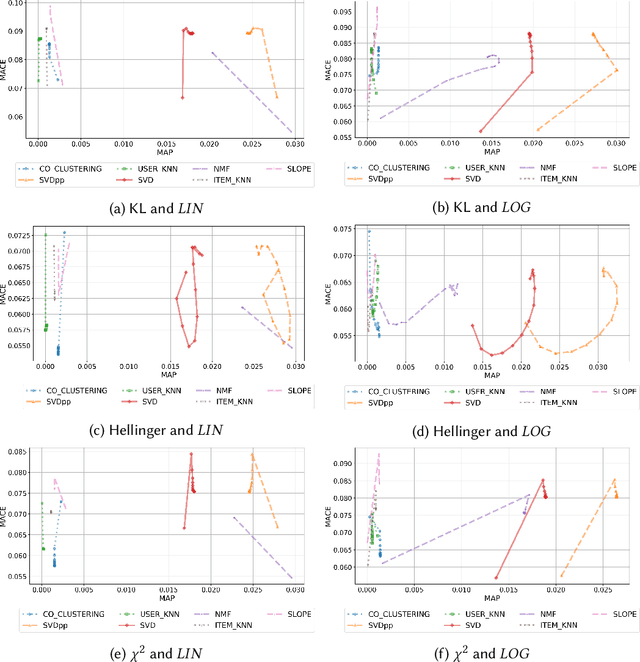

Abstract: Recommender systems use the user's profile to generate a recommendation list of unknown items for a target user. Although the primary goal of traditional recommender systems is to deliver the most relevant items, this effort can unintentionally cause collateral effects, including low diversity and unbalanced genres or categories, benefiting particular groups of categories. This paper proposes an approach to create recommendation lists with a calibrated balance of genres, avoiding disproportion between the user's profile interests and the recommendation list. The calibrated recommendations jointly consider the relevance and the divergence between the genre distributions extracted from the user's preferences and from the recommendation list. The main claim is that calibration can contribute positively to generating fairer recommendations. In particular, we propose a new trade-off equation, which considers the users' bias to provide a recommendation list that follows the users' tendencies. Moreover, we propose a conceptual framework and a decision protocol to generate more than one thousand combinations of calibrated systems in order to find the best combination. We compare our approach against state-of-the-art approaches using datasets from multiple domains, which are analyzed with ranking and calibration metrics. The results indicate that the trade-off, which considers the users' bias, has positive effects on precision and fairness, thus generating recommendation lists that respect the genre distribution. Through the decision protocol, we also found the best system for each dataset.
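To make the idea of weighing relevance against genre divergence concrete, the following is a minimal sketch of a greedy calibrated re-ranking. It is not the trade-off equation proposed in the paper, which additionally accounts for the users' bias and is not spelled out in the abstract. It assumes a plain linear trade-off with a fixed weight `lam` and a smoothed Kullback-Leibler divergence as the calibration measure. All function names, parameters, and the toy data are hypothetical.

```python
import math
from collections import Counter


def genre_distribution(items, item_genres):
    """Normalized genre distribution of a list of items.

    Assumption: each item spreads one unit of weight equally over its genres.
    """
    weights = Counter()
    for item in items:
        genres = item_genres[item]
        for genre in genres:
            weights[genre] += 1.0 / len(genres)
    total = sum(weights.values())
    return {g: w / total for g, w in weights.items()} if total else {}


def kl_divergence(p, q, alpha=0.01):
    """KL(p || q~), with q~ = (1 - alpha) * q + alpha * p to avoid zeros in q."""
    divergence = 0.0
    for genre, p_g in p.items():
        q_g = (1 - alpha) * q.get(genre, 0.0) + alpha * p_g
        divergence += p_g * math.log(p_g / q_g)
    return divergence


def calibrated_rerank(candidates, relevance, item_genres, profile_dist, k, lam=0.5):
    """Greedily build a top-k list maximizing (1 - lam) * relevance - lam * divergence.

    Assumption: lam is a fixed weight in [0, 1]; the paper's bias-aware
    trade-off is not reproduced here.
    """
    selected = []
    remaining = list(candidates)
    while remaining and len(selected) < k:

        def utility(item):
            trial = selected + [item]
            total_relevance = sum(relevance[i] for i in trial)
            list_dist = genre_distribution(trial, item_genres)
            return (1 - lam) * total_relevance - lam * kl_divergence(profile_dist, list_dist)

        best = max(remaining, key=utility)
        selected.append(best)
        remaining.remove(best)
    return selected


# Toy data (hypothetical): the user's profile is half action, half drama.
item_genres = {"a1": ["action"], "a2": ["action"], "a3": ["action"], "d1": ["drama"]}
relevance = {"a1": 0.9, "a2": 0.8, "a3": 0.7, "d1": 0.6}
profile = {"action": 0.5, "drama": 0.5}

by_relevance = calibrated_rerank(list(relevance), relevance, item_genres, profile, k=2, lam=0.0)
calibrated = calibrated_rerank(list(relevance), relevance, item_genres, profile, k=2, lam=0.9)
```

With `lam=0.0` the list is ranked purely by relevance and contains only action items. With a large `lam` the drama item displaces the second action item, so the list's genre mix matches the profile.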
