Dewei Yi

TD-TOG Dataset: Benchmarking Zero-Shot and One-Shot Task-Oriented Grasping for Object Generalization

Jun 05, 2025

Abstract: Task-oriented grasping (TOG) is an essential preliminary step for robotic task execution, which involves predicting grasps on regions of target objects that facilitate intended tasks. Despite large demand, few TOG datasets are available for training and benchmarking, and those that exist are often synthetic or have artifacts in mask annotations that hinder model performance. Moreover, TOG solutions often require affordance masks, grasps, and object masks for training; however, existing datasets typically provide only a subset of these annotations. To address these limitations, we introduce the Top-down Task-oriented Grasping (TD-TOG) dataset, designed to train and evaluate TOG solutions. TD-TOG comprises 1,449 real-world RGB-D scenes including 30 object categories and 120 subcategories, with hand-annotated object masks, affordances, and planar rectangular grasps. It also features a test set for a novel challenge that assesses a TOG solution's ability to distinguish between object subcategories. To help meet the demand for TOG solutions that can adapt to and manipulate previously unseen objects without re-training, we propose a novel TOG framework, Binary-TOG. Binary-TOG uses zero-shot learning for object recognition and one-shot learning for affordance recognition. Zero-shot learning enables Binary-TOG to identify objects in multi-object scenes through textual prompts, eliminating the need for visual references. In multi-object settings, Binary-TOG achieves an average task-oriented grasp accuracy of 68.9%. Lastly, this paper contributes a comparative analysis of one-shot and zero-shot learning for object generalization in TOG, intended to inform the development of future TOG solutions.

LLEDA -- Lifelong Self-Supervised Domain Adaptation

Nov 12, 2022

Abstract: Lifelong domain adaptation remains a challenging task in machine learning due to the differences among the domains and the unavailability of historical data. The ultimate goal is to learn the distributional shifts while retaining the previously gained knowledge. Inspired by the Complementary Learning Systems (CLS) theory, we propose a novel framework called Lifelong Self-Supervised Domain Adaptation (LLEDA). LLEDA addresses catastrophic forgetting by replaying hidden representations rather than raw data pixels, and by performing domain-agnostic knowledge transfer using self-supervised learning. LLEDA does not access labels from the source or the target domain and only has access to a single domain at any given time. Extensive experiments demonstrate that the proposed method outperforms several other methods and achieves long-term adaptation, while being less prone to catastrophic forgetting when transferred to new domains.

Brain-Inspired Deep Imitation Learning for Autonomous Driving Systems

Jul 30, 2021

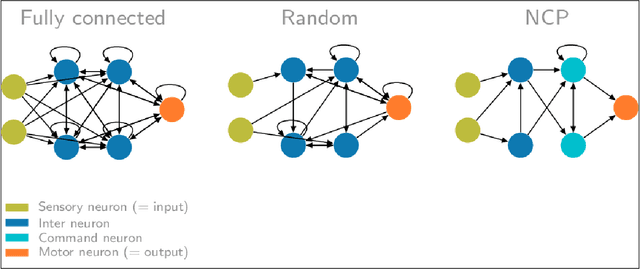

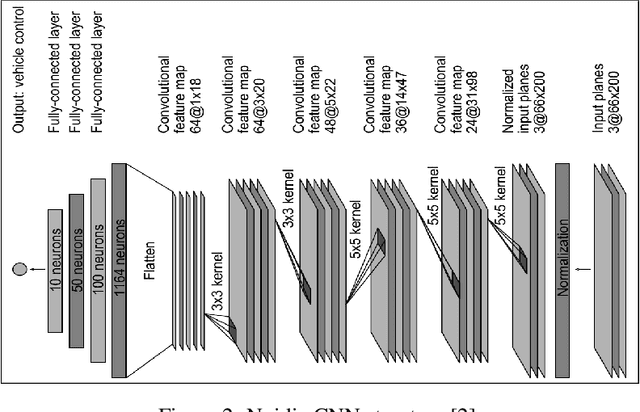

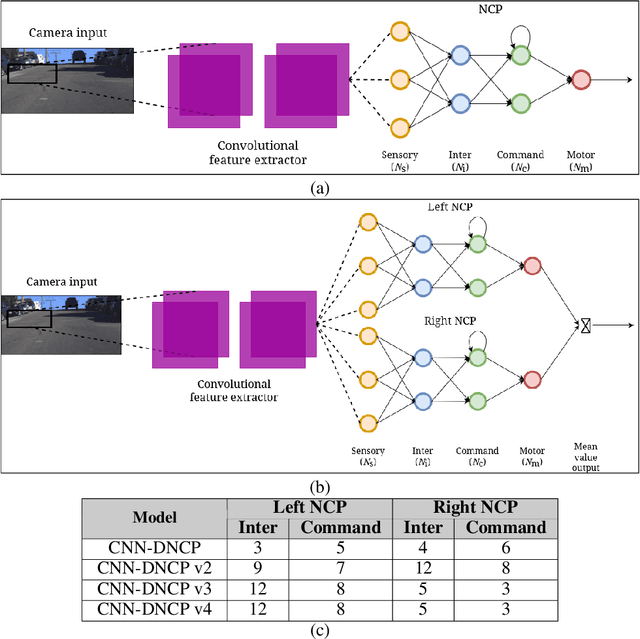

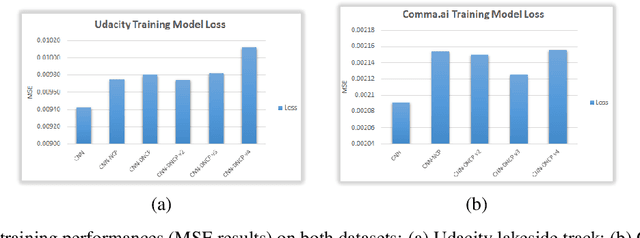

Abstract: Autonomous driving has attracted great attention from both academia and industry. To realise autonomous driving, Deep Imitation Learning (DIL) is treated as one of the most promising solutions, because it improves autonomous driving systems by automatically learning a complex mapping from human driving data, rather than relying on a manually designed driving policy. However, existing DIL methods cannot generalise well across domains; that is, a network trained on data from a source domain generalises poorly to data from a target domain. In the present study, we propose a novel brain-inspired deep imitation method that builds on evidence from human brain functions to improve the generalisation ability of deep neural networks, so that autonomous driving systems can perform well in various scenarios. Specifically, humans have a strong generalisation ability, which benefits from the structural and functional asymmetry of the two sides of the brain. Here, we design dual Neural Circuit Policy (NCP) architectures in deep neural networks based on the asymmetry of human neural networks. Experimental results demonstrate that our brain-inspired method outperforms existing methods regarding generalisation when dealing with unseen data. Our source code and pretrained models are available at https://github.com/Intenzo21/Brain-Inspired-Deep-Imitation-Learning-for-Autonomous-Driving-Systems.
