Derek Pang

MuZero with Self-competition for Rate Control in VP9 Video Compression

Feb 14, 2022

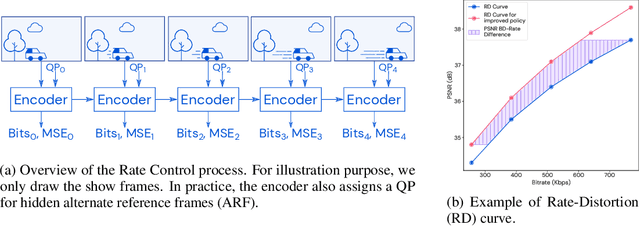

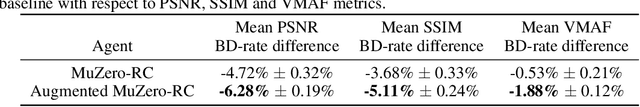

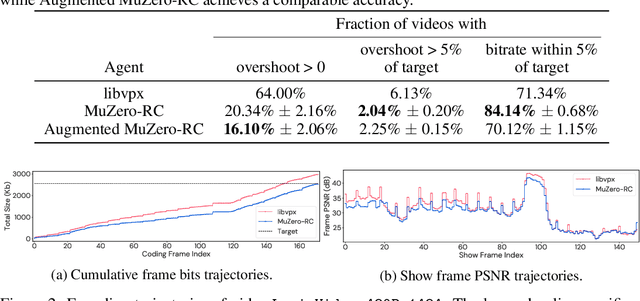

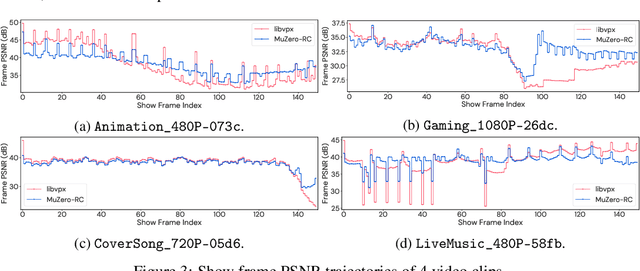

Abstract: Video streaming usage has seen a significant rise as entertainment, education, and business increasingly rely on online video. Optimizing video compression has the potential to increase access and quality of content to users, and reduce energy use and costs overall. In this paper, we present an application of the MuZero algorithm to the challenge of video compression. Specifically, we target the problem of learning a rate control policy to select the quantization parameters (QP) in the encoding process of libvpx, an open source VP9 video compression library widely used by popular video-on-demand (VOD) services. We treat this as a sequential decision-making problem to maximize the video quality with an episodic constraint imposed by the target bitrate. Notably, we introduce a novel self-competition-based reward mechanism to solve constrained RL with variable constraint satisfaction difficulty, which is challenging for existing constrained RL methods. We demonstrate that the MuZero-based rate control achieves an average 6.28% reduction in size of the compressed videos for the same delivered video quality level (measured as PSNR BD-rate) compared to libvpx's two-pass VBR rate control policy, while having better constraint satisfaction behavior.

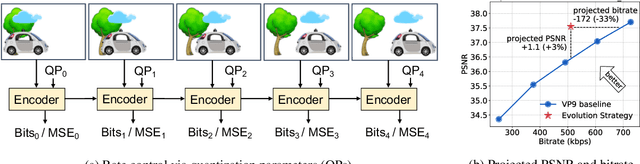

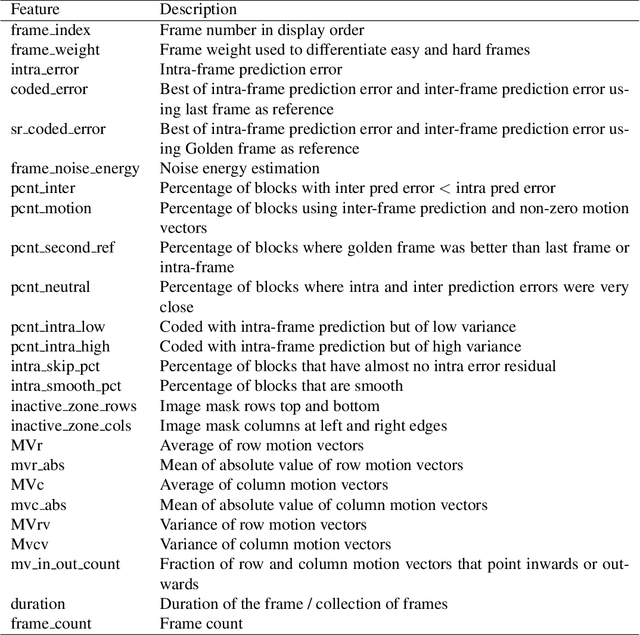

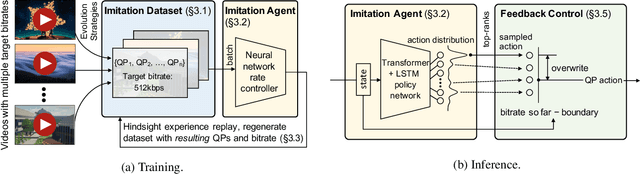

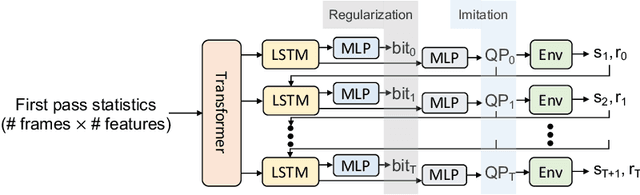

Neural Rate Control for Video Encoding using Imitation Learning

Dec 09, 2020

Abstract: In modern video encoders, rate control is a critical component and has been heavily engineered. It decides how many bits to spend to encode each frame, in order to optimize the rate-distortion trade-off over all video frames. This is a challenging constrained planning problem because of the complex dependency among decisions for different video frames and the bitrate constraint defined at the end of the episode. We formulate the rate control problem as a Partially Observable Markov Decision Process (POMDP), and apply imitation learning to learn a neural rate control policy. We demonstrate that by learning from optimal video encoding trajectories obtained through evolution strategies, our learned policy achieves better encoding efficiency and has minimal constraint violation. In addition to imitating the optimal actions, we find that additional auxiliary losses, data augmentation/refinement and inference-time policy improvements are critical for learning a good rate control policy. We evaluate the learned policy against the rate control policy in libvpx, a widely adopted open source VP9 codec library, in the two-pass variable bitrate (VBR) mode. We show that over a diverse set of real-world videos, our learned policy achieves 8.5% median bitrate reduction without sacrificing video quality.

A stochastic model of human visual attention with a dynamic Bayesian network

Apr 01, 2010

Abstract: Recent studies in the field of human vision science suggest that human responses to the stimuli on a visual display are non-deterministic. People may attend to different locations on the same visual input at the same time. Based on this knowledge, we propose a new stochastic model of visual attention by introducing a dynamic Bayesian network to predict the likelihood of where humans typically focus on a video scene. The proposed model is composed of a dynamic Bayesian network with 4 layers. Our model provides a framework that simulates and combines the visual saliency response and the cognitive state of a person to estimate the most probable attended regions. Sample-based inference with a Markov chain Monte Carlo based particle filter and stream processing with multi-core processors enable us to estimate human visual attention in near real time. Experimental results have demonstrated that our model performs significantly better in predicting human visual attention compared to the previous deterministic models.
