Deepak Ghimire

Comparison of Two Methods for Stationary Incident Detection Based on Background Image

Jun 17, 2025

Abstract: Background subtraction-based methods are generally used to detect moving objects in visual tracking applications. In this paper, we employ a background subtraction-based scheme to detect temporarily stationary objects. We propose two schemes for stationary object detection and compare them in terms of detection performance and computational complexity. The first approach uses a single background; the second uses dual backgrounds, generated with different learning rates, to detect temporarily stopped objects. Finally, we use normalized cross-correlation (NCC) based image comparison to monitor and track the detected stationary object in a video scene. The proposed method is robust to partial occlusion, short-term full occlusion, and illumination changes, and it can operate in real time.

* 8 pages, 6 figures
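
The dual-background idea and the NCC comparison in the abstract above can be illustrated with a minimal sketch. The abstract does not say how the two backgrounds are updated or combined, so the running-average update, the thresholding rule, the learning rates, and the function names `update_background`, `stationary_mask`, and `ncc` are all assumptions made for illustration, not the authors' implementation. Frames are flattened lists of grayscale values.

```python
from math import sqrt

def update_background(bg, frame, rate):
    """Running-average update (assumed form): bg <- (1 - rate) * bg + rate * frame."""
    return [(1.0 - rate) * b + rate * f for b, f in zip(bg, frame)]

def stationary_mask(fast_bg, slow_bg, frame, thresh=25.0):
    """Flag pixels already absorbed by the fast background but still
    different from the slow background (assumed rule; thresh is hypothetical)."""
    return [
        abs(f - fb) <= thresh and abs(f - sb) > thresh
        for f, fb, sb in zip(frame, fast_bg, slow_bg)
    ]

def ncc(a, b):
    """Zero-mean normalized cross-correlation of two equal-size patches."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    num = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    den = sqrt(sum((x - mean_a) ** 2 for x in a) * sum((y - mean_b) ** 2 for y in b))
    # A constant patch has zero variance; report no correlation in that case.
    return num / den if den > 0 else 0.0

if __name__ == "__main__":
    fast_bg = [10.0] * 4
    slow_bg = [10.0] * 4
    frame = [10.0, 200.0, 10.0, 10.0]  # an object stops on pixel 1
    for _ in range(10):
        fast_bg = update_background(fast_bg, frame, rate=0.5)
        slow_bg = update_background(slow_bg, frame, rate=0.01)
    print(stationary_mask(fast_bg, slow_bg, frame))
```

Because NCC subtracts the mean and divides by the patch energy, a uniform brightness gain or offset leaves the score unchanged, which is one plausible reason it suits tracking under illumination changes.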

Study on Real-Time Road Surface Reconstruction Using Stereo Vision

Apr 25, 2025

Abstract: Road surface reconstruction plays a crucial role in autonomous driving, providing essential information for safe and smooth navigation. This paper enhances the RoadBEV [1] framework for real-time inference on edge devices by optimizing both efficiency and accuracy. To achieve this, we apply Isomorphic Global Structured Pruning to the stereo feature extraction backbone, reducing network complexity while maintaining performance. Additionally, the head network is redesigned with an optimized hourglass structure, dynamic attention heads, reduced feature channels, mixed precision inference, and efficient probability volume computation. Our approach improves inference speed while achieving lower reconstruction error, making it well-suited for real-time road surface reconstruction in autonomous driving.

Real-Time Sleepiness Detection for Driver State Monitoring System

Apr 21, 2025

Abstract: A driver face monitoring system can detect driver fatigue, which is a significant factor in many accidents, using computer vision techniques. In this paper, we present a real-time technique for driver eye state detection. First, the face is detected, and the eyes are located within the face region for tracking. A normalized cross-correlation-based online dynamic template matching technique, combined with Kalman filter tracking, is proposed to track the detected eye positions in subsequent image frames. A support vector machine with histogram of oriented gradients (HOG) features is used to classify the state of the eyes as open or closed. If the eyes remain closed for a specified period, the driver is considered to be asleep, and an alarm is triggered.

* 8 pages, published in GST 2015
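
The final decision rule in the abstract above (eyes closed for a specified period triggers an alarm) can be sketched as a small run-length counter over per-frame eye states. The abstract gives neither the frame rate nor the closure period, so `fps`, `max_closed_seconds`, and the function name `drowsiness_alarms` are hypothetical; the per-frame open/closed labels are assumed to come from an upstream classifier such as the HOG and SVM stage.

```python
from math import ceil

def drowsiness_alarms(eye_closed, fps, max_closed_seconds):
    """Return the frame indices at which an alarm would fire.

    eye_closed: iterable of booleans, True when the eyes are classified closed.
    An alarm fires once per closure episode, at the frame where the run of
    closed frames first reaches the limit (assumed behavior).
    """
    limit = max(1, ceil(max_closed_seconds * fps))
    run = 0
    alarms = []
    for index, closed in enumerate(eye_closed):
        run = run + 1 if closed else 0  # any open frame resets the counter
        if run == limit:
            alarms.append(index)
    return alarms

if __name__ == "__main__":
    states = [False] * 5 + [True] * 10 + [False] * 3 + [True] * 4
    print(drowsiness_alarms(states, fps=5, max_closed_seconds=1.0))
```

Resetting the counter on every open frame keeps ordinary blinks, which last only a few frames, from accumulating toward the alarm.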

Facial expression recognition based on local region specific features and support vector machines

Apr 15, 2016

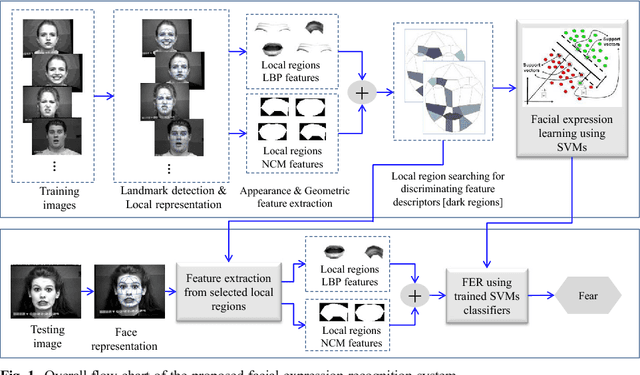

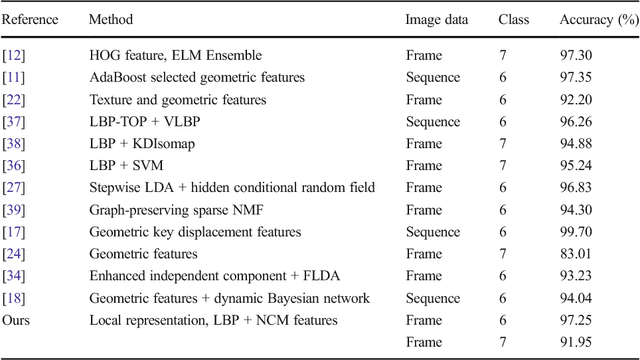

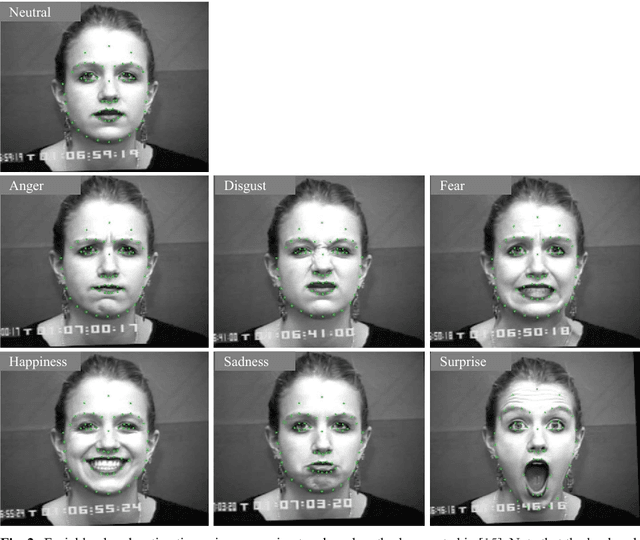

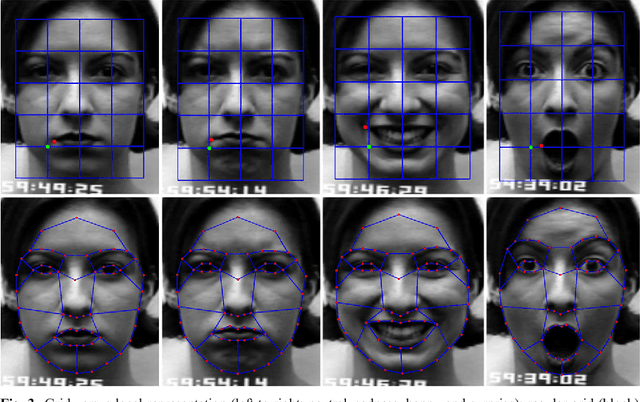

Abstract: Facial expressions are one of the most powerful, natural, and immediate means for human beings to communicate their emotions and intentions. Recognition of facial expressions has many applications, including human-computer interaction, cognitive science, human emotion analysis, and personality development. In this paper, we propose a new method for recognizing facial expressions from a single image frame that combines appearance and geometric features with support vector machine classification. In general, appearance features for facial expression recognition are computed by dividing the face region into a regular grid (holistic representation). In this paper, however, we extract region-specific appearance features by dividing the whole face region into domain-specific local regions. Geometric features are also extracted from the corresponding domain-specific regions. In addition, important local regions are determined using an incremental search approach, which reduces the feature dimension and improves recognition accuracy. The results of facial expression recognition using features from domain-specific regions are also compared with the results obtained using the holistic representation. The performance of the proposed facial expression recognition system has been validated on the publicly available extended Cohn-Kanade (CK+) facial expression data set.

* Facial expressions, Local representation, Appearance features, Geometric features, Support vector machines

Recognition of facial expressions based on salient geometric features and support vector machines

Apr 15, 2016

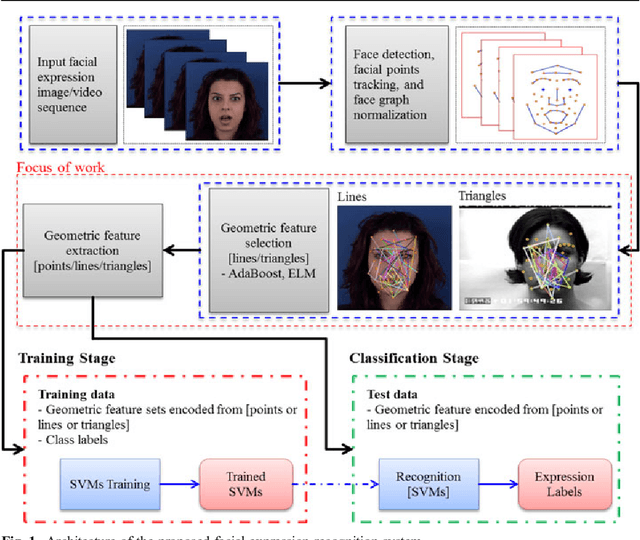

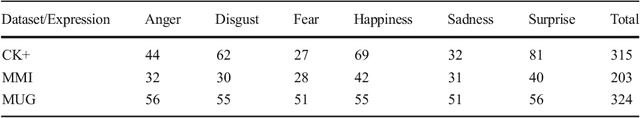

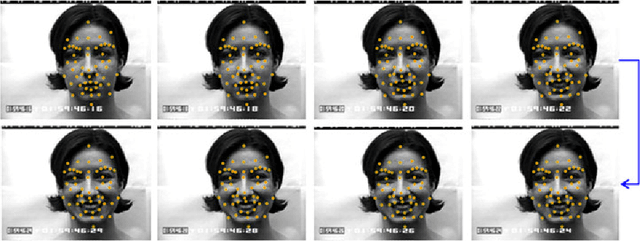

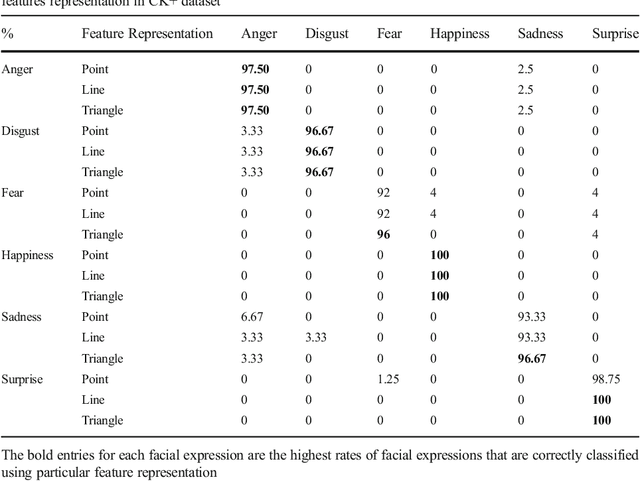

Abstract: Facial expressions convey nonverbal cues that play an important role in interpersonal relations, and are widely used in the behavioral interpretation of emotions, cognitive science, and social interactions. In this paper, we analyze different ways of representing geometric features and present a fully automatic facial expression recognition (FER) system using salient geometric features. In a geometric feature-based FER approach, the first important step is to initialize and track a dense set of facial points as the expression evolves over time in consecutive frames. In the proposed system, facial points are initialized using the elastic bunch graph matching (EBGM) algorithm, and tracking is performed using the Kanade-Lucas-Tomasi (KLT) tracker. We extract geometric features from points, lines, and triangles composed of the tracked facial points. The most discriminative line and triangle features are selected using feature-selective multi-class AdaBoost with the help of extreme learning machine (ELM) classification. Finally, the geometric features for FER are extracted from the boosted lines and triangles composed of facial points. The recognition accuracy using features from points, lines, and triangles is analyzed independently. The performance of the proposed FER system is evaluated on three different data sets: the CK+, MMI, and MUG facial expression data sets.

* Facial points, Geometric features, AdaBoost, Extreme learning machine, Support vector machines, Facial expression recognition
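
As a rough illustration of what point, line, and triangle features built from tracked facial points might look like, the sketch below computes a displacement, a segment length, and a triangle area. The abstract above does not define the features, so these particular measures and the function names `point_feature`, `line_feature`, and `triangle_feature` are assumptions chosen for simplicity, not the paper's definitions.

```python
from math import hypot

def point_feature(first, current):
    """Displacement of one tracked point relative to the first frame (assumed)."""
    return (current[0] - first[0], current[1] - first[1])

def line_feature(p, q):
    """Euclidean length of the segment joining two facial points (assumed)."""
    return hypot(q[0] - p[0], q[1] - p[1])

def triangle_feature(p, q, r):
    """Area of the triangle formed by three facial points (assumed),
    computed with the shoelace formula."""
    return abs(
        p[0] * (q[1] - r[1]) + q[0] * (r[1] - p[1]) + r[0] * (p[1] - q[1])
    ) / 2.0

if __name__ == "__main__":
    a, b, c = (0.0, 0.0), (3.0, 0.0), (0.0, 4.0)
    print(point_feature((1.0, 1.0), (4.0, 5.0)))
    print(line_feature(b, c))
    print(triangle_feature(a, b, c))
```

With n tracked points there are n(n-1)/2 candidate lines and n(n-1)(n-2)/6 candidate triangles, which suggests why a selection step such as boosting is needed before classification.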

Geometric Feature-Based Facial Expression Recognition in Image Sequences Using Multi-Class AdaBoost and Support Vector Machines

Apr 12, 2016

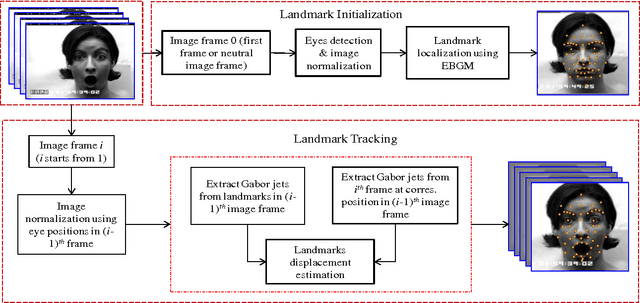

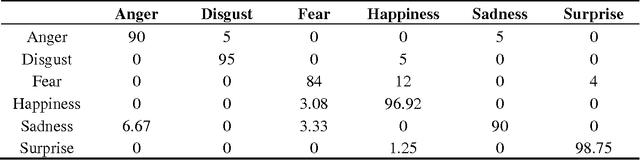

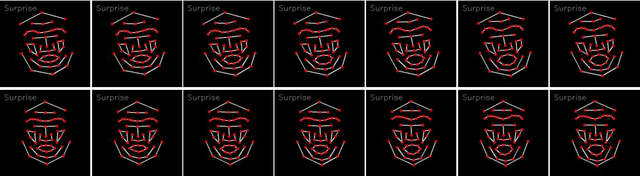

Abstract: Facial expressions are widely used in the behavioral interpretation of emotions, cognitive science, and social interactions. In this paper, we present a novel method for fully automatic facial expression recognition in facial image sequences. As the facial expression evolves over time, facial landmarks are automatically tracked in consecutive video frames using displacement estimation based on elastic bunch graph matching. Feature vectors are extracted from the tracking results of individual landmarks, as well as pairs of landmarks, and normalized with respect to the first frame in the sequence. The prototypical expression sequence for each class of facial expression is formed by taking the median of the landmark tracking results from the training facial expression sequences. Multi-class AdaBoost, with the dynamic time warping similarity distance between the feature vector of the input facial expression and that of the prototypical facial expression as a weak classifier, is used to select the subset of discriminative feature vectors. Finally, two methods for facial expression recognition are presented: multi-class AdaBoost with dynamic time warping, and a support vector machine on the boosted feature vectors. The results on the Cohn-Kanade (CK+) facial expression database show a recognition accuracy of 95.17% and 97.35% using multi-class AdaBoost and support vector machines, respectively.

* 21 pages, Sensors Journal, facial expression recognition
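
The median prototype and the dynamic time warping (DTW) distance mentioned in the abstract above can be sketched for one-dimensional feature sequences. This is a textbook DTW with an absolute-difference cost, followed by a plain nearest-prototype rule; the boosting stage is omitted, training sequences are assumed to have equal length, and the names `median_prototype`, `dtw_distance`, and `nearest_prototype` are hypothetical.

```python
from statistics import median

def median_prototype(sequences):
    """Per-time-step median over equal-length training sequences (assumed layout)."""
    return [median(values) for values in zip(*sequences)]

def dtw_distance(a, b):
    """Classic DTW distance between two 1-D sequences, absolute-difference cost."""
    n, m = len(a), len(b)
    inf = float("inf")
    table = [[inf] * (m + 1) for _ in range(n + 1)]
    table[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of the three admissible predecessor cells.
            table[i][j] = cost + min(
                table[i - 1][j], table[i][j - 1], table[i - 1][j - 1]
            )
    return table[n][m]

def nearest_prototype(sequence, prototypes):
    """Label of the prototype with the smallest DTW distance to the sequence."""
    return min(prototypes, key=lambda label: dtw_distance(sequence, prototypes[label]))

if __name__ == "__main__":
    prototypes = {"happy": [0, 1, 2, 3], "sad": [0, -1, -2, -3]}
    print(nearest_prototype([0, 1, 1, 2, 3], prototypes))
```

DTW tolerates expressions that unfold at different speeds, because a sequence that lingers on one value can be aligned to a single step of the prototype at no extra cost.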
