Debaditya Roy

Learning to Generate Long-term Future Narrations Describing Activities of Daily Living

Mar 03, 2025

Abstract:Anticipating future events is crucial for various application domains such as healthcare, smart home technology, and surveillance. Narrative event descriptions provide context-rich information, enhancing a system's future planning and decision-making capabilities. We propose a novel task: $\textit{long-term future narration generation}$, which extends beyond traditional action anticipation by generating detailed narrations of future daily activities. We introduce a visual-language model, ViNa, specifically designed to address this challenging task. ViNa integrates long-term videos and corresponding narrations to generate a sequence of future narrations that predict subsequent events and actions over extended time horizons. ViNa extends existing multimodal models, which perform only short-term predictions or describe observed videos, by generating long-term future narrations for a broader range of daily activities. We also present a novel downstream application of the generated narrations, future video retrieval, which helps users plan a task by visualizing the future. We evaluate future narration generation on Ego4D, the largest egocentric dataset.

Learning to Reason Iteratively and Parallelly for Complex Visual Reasoning Scenarios

Nov 20, 2024

Abstract:Complex visual reasoning and question answering (VQA) is a challenging task that requires compositional multi-step processing and higher-level reasoning capabilities beyond the immediate recognition and localization of objects and events. Here, we introduce a fully neural Iterative and Parallel Reasoning Mechanism (IPRM) that combines two distinct forms of computation (iterative and parallel) to better address complex VQA scenarios. Specifically, IPRM's "iterative" computation facilitates compositional step-by-step reasoning for scenarios in which individual operations need to be computed, stored, and recalled dynamically (e.g. when computing the query "determine the color of pen to the left of the child in red t-shirt sitting at the white table"). Meanwhile, its "parallel" computation allows for the simultaneous exploration of different reasoning paths and supports more robust and efficient execution of operations that are mutually independent (e.g. when counting individual colors for the query: "determine the maximum occurring color amongst all t-shirts"). We design IPRM as a lightweight and fully differentiable neural module that can be conveniently applied to both transformer and non-transformer vision-language backbones. It notably outperforms prior task-specific methods and transformer-based attention modules across various image and video VQA benchmarks testing distinct complex reasoning capabilities such as compositional spatiotemporal reasoning (AGQA), situational reasoning (STAR), multi-hop reasoning generalization (CLEVR-Humans) and causal event linking (CLEVRER-Humans). Further, IPRM's internal computations can be visualized across reasoning steps, aiding interpretability and diagnosis of its errors.

Effectively Leveraging CLIP for Generating Situational Summaries of Images and Videos

Jul 30, 2024

Abstract:Situation recognition refers to the ability of an agent to identify and understand various situations or contexts based on available information and sensory inputs. It involves the cognitive process of interpreting data from the environment to determine what is happening, what factors are involved, and what actions caused those situations. This interpretation of situations is formulated as a semantic role labeling problem in computer vision-based situation recognition. Situations depicted in images and videos hold pivotal information, essential for various applications like image and video captioning, multimedia retrieval, autonomous systems and event monitoring. However, existing methods often struggle with ambiguity and lack of context in generating meaningful and accurate predictions. Leveraging multimodal models such as CLIP, we propose ClipSitu, which sidesteps the need for full fine-tuning and achieves state-of-the-art results in situation recognition and localization tasks. ClipSitu harnesses CLIP-based image, verb, and role embeddings to predict nouns fulfilling all the roles associated with a verb, providing a comprehensive understanding of depicted scenarios. Through a cross-attention Transformer, ClipSitu XTF enhances the connection between semantic role queries and visual token representations, leading to superior performance in situation recognition. We also propose a verb-wise role prediction model with near-perfect accuracy to create an end-to-end framework for producing situational summaries for out-of-domain images. We show that situational summaries empower our ClipSitu models to produce structured descriptions with reduced ambiguity compared to generic captions. Finally, we extend ClipSitu to video situation recognition to showcase its versatility and produce comparable performance to state-of-the-art methods.
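The cross-attention step mentioned in the abstract above, in which semantic role queries attend over visual tokens, can be illustrated with a minimal single-head sketch. This is not the ClipSitu XTF implementation: the function name `cross_attention`, the token counts, and the embedding size are assumptions chosen for illustration, random arrays stand in for CLIP embeddings, and the learned query/key/value projections of a real Transformer layer are omitted.

```python
import numpy as np

def cross_attention(role_queries, visual_tokens):
    """Single-head scaled dot-product cross-attention (illustrative only).

    role_queries:  (R, d) array, one query vector per semantic role.
    visual_tokens: (T, d) array, one vector per visual token.
    Returns the attended features (R, d) and the attention weights (R, T).
    """
    d = role_queries.shape[-1]
    scores = role_queries @ visual_tokens.T / np.sqrt(d)
    # Subtract the row maximum before exponentiating for numerical stability.
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ visual_tokens, weights

# Hypothetical sizes: 3 roles, 5 visual tokens, 8-dimensional embeddings.
rng = np.random.default_rng(0)
role_queries = rng.normal(size=(3, 8))
visual_tokens = rng.normal(size=(5, 8))
attended, weights = cross_attention(role_queries, visual_tokens)
```

Each row of `weights` sums to 1 and indicates which visual tokens a given role query draws on; in a full model, the attended features would feed a classifier that predicts the noun for that role.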

ClipSitu: Effectively Leveraging CLIP for Conditional Predictions in Situation Recognition

Jul 02, 2023

Abstract:Situation Recognition is the task of generating a structured summary of what is happening in an image using an activity verb and the semantic roles played by actors and objects. In this task, the same activity verb can describe a diverse set of situations, and the same actor or object category can play a diverse set of semantic roles depending on the situation depicted in the image. Hence, a model needs to understand the context of the image and the visual-linguistic meaning of semantic roles. Therefore, we leverage the CLIP foundation model, which has learned the context of images via language descriptions. We show that deeper-and-wider multi-layer perceptron (MLP) blocks using CLIP image and text embedding features obtain noteworthy results for the situation recognition task and even outperform the state-of-the-art CoFormer, a Transformer-based model, thanks to the external implicit visual-linguistic knowledge encapsulated by CLIP and the expressive power of modern MLP block designs. Motivated by this, we design a cross-attention-based Transformer using CLIP visual tokens that models the relation between textual roles and visual entities. Our cross-attention-based Transformer, known as ClipSitu XTF, outperforms the existing state of the art by a large margin of 14.1% on semantic role labelling (value) for top-1 accuracy on the imSitu dataset. We will make the code publicly available.

Modelling Spatio-Temporal Interactions for Compositional Action Recognition

May 04, 2023

Abstract:Humans have the natural ability to recognize actions even if the objects involved in the action or the background are changed. Humans can abstract away the action from the appearance of the objects and their context which is referred to as compositionality of actions. Compositional action recognition deals with imparting human-like compositional generalization abilities to action-recognition models. In this regard, extracting the interactions between humans and objects forms the basis of compositional understanding. These interactions are not affected by the appearance biases of the objects or the context. But the context provides additional cues about the interactions between things and stuff. Hence we need to infuse context into the human-object interactions for compositional action recognition. To this end, we first design a spatial-temporal interaction encoder that captures the human-object (things) interactions. The encoder learns the spatio-temporal interaction tokens disentangled from the background context. The interaction tokens are then infused with contextual information from the video tokens to model the interactions between things and stuff. The final context-infused spatio-temporal interaction tokens are used for compositional action recognition. We show the effectiveness of our interaction-centric approach on the compositional Something-Else dataset where we obtain a new state-of-the-art result of 83.8% top-1 accuracy outperforming recent important object-centric methods by a significant margin. Our approach of explicit human-object-stuff interaction modeling is effective even for standard action recognition datasets such as Something-Something-V2 and Epic-Kitchens-100 where we obtain comparable or better performance than state-of-the-art.

Interaction Visual Transformer for Egocentric Action Anticipation

Nov 25, 2022

Abstract:Human-object interaction is one of the most important visual cues that has not been explored for egocentric action anticipation. We propose a novel Transformer variant that models interactions by computing the change in the appearance of objects and human hands due to the execution of actions, and uses those changes to refine the video representation. Specifically, we model interactions between hands and objects using Spatial Cross-Attention (SCA) and further infuse contextual information using Trajectory Cross-Attention to obtain environment-refined interaction tokens. Using these tokens, we construct an interaction-centric video representation for action anticipation. We term our model InAViT; it achieves state-of-the-art action anticipation performance on the large-scale egocentric datasets EPIC-KITCHENS-100 (EK100) and EGTEA Gaze+. InAViT outperforms other visual transformer-based methods, including those using object-centric video representations. On the EK100 evaluation server, InAViT is the top-performing method on the public leaderboard (at the time of submission), where it outperforms the second-best model by 3.3% on mean top-5 recall.
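For readers unfamiliar with the mean top-5 recall metric cited above, the sketch below computes a class-averaged top-k recall: recall is measured per ground-truth class and then averaged, so rare classes count as much as frequent ones. This follows the usual definition and is only an illustration; the function name `mean_topk_recall` and the toy data are assumptions, and the evaluation server's exact protocol may differ in details.

```python
import numpy as np

def mean_topk_recall(scores, labels, k=5):
    """Class-averaged top-k recall.

    scores: (N, C) array of per-class prediction scores for N samples.
    labels: (N,) array of integer ground-truth class indices.
    """
    # Indices of the k highest-scoring classes for each sample.
    topk = np.argsort(-scores, axis=1)[:, :k]
    # A sample is a hit if its true label is among its top-k predictions.
    hits = (topk == labels[:, None]).any(axis=1)
    # Recall per class present in the labels, then an unweighted mean.
    recalls = [hits[labels == c].mean() for c in np.unique(labels)]
    return float(np.mean(recalls))

# Toy data: 4 samples, 3 classes, evaluated with k=1 to keep it readable.
scores = np.array([
    [0.90, 0.06, 0.04],
    [0.20, 0.70, 0.10],
    [0.60, 0.30, 0.10],
    [0.10, 0.20, 0.70],
])
labels = np.array([0, 1, 1, 2])
result = mean_topk_recall(scores, labels, k=1)
```

In this toy case, classes 0 and 2 have recall 1.0 and class 1 has recall 0.5, so the class-averaged value is 2.5/3, whereas plain top-1 accuracy over the four samples would be 0.75.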

Predicting the Next Action by Modeling the Abstract Goal

Sep 12, 2022

Abstract:The problem of anticipating human actions is an inherently uncertain one. However, we can reduce this uncertainty if we have a sense of the goal that the actor is trying to achieve. Here, we present an action anticipation model that leverages goal information to reduce the uncertainty in future predictions. Since we do not possess goal information or the observed actions during inference, we resort to visual representation to encapsulate information about both actions and goals. Through this, we derive a novel concept called abstract goal, which is conditioned on observed sequences of visual features for action anticipation. We design the abstract goal as a distribution whose parameters are estimated using a variational recurrent network. We sample multiple candidates for the next action and introduce a goal consistency measure to determine the best candidate that follows from the abstract goal. Our method obtains impressive results on the very challenging Epic-Kitchens55 (EK55), EK100, and EGTEA Gaze+ datasets. We obtain absolute improvements of +13.69, +11.24, and +5.19 for Top-1 verb, Top-1 noun, and Top-1 action anticipation accuracy, respectively, over prior state-of-the-art methods for seen kitchens (S1) of EK55. Similarly, we obtain significant improvements on the unseen kitchens (S2) set for Top-1 verb (+10.75), noun (+5.84), and action (+2.87) anticipation. A similar trend is observed for the EGTEA Gaze+ dataset, where absolute improvements of +9.9, +13.1, and +6.8 are obtained for noun, verb, and action anticipation, respectively. With the submission of this paper, our method is the new state of the art for action anticipation on EK55 and EGTEA Gaze+: https://competitions.codalab.org/competitions/20071#results. Code is available at https://github.com/debadityaroy/Abstract_Goal

FlowCaps: Optical Flow Estimation with Capsule Networks For Action Recognition

Nov 08, 2020

Abstract:Capsule networks (CapsNets) have recently shown promise in most computer vision tasks, especially those pertaining to scene understanding. In this paper, we explore CapsNet's capabilities in optical flow estimation, a task at which convolutional neural networks (CNNs) have already outperformed other approaches. We propose a CapsNet-based architecture, termed FlowCaps, which attempts to a) achieve better correspondence matching via finer-grained, motion-specific, and more interpretable encoding crucial for optical flow estimation, b) perform optical flow estimation that generalizes better, c) use less ground-truth data, and d) significantly reduce the computational complexity needed to achieve good performance, compared with its CNN counterparts.

Defining Traffic States using Spatio-temporal Traffic Graphs

Jul 27, 2020

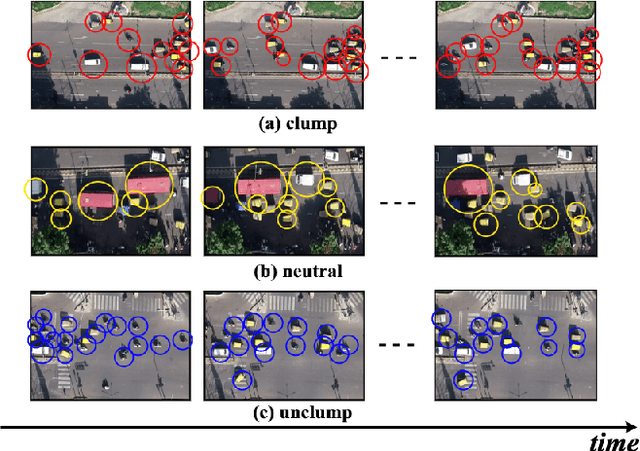

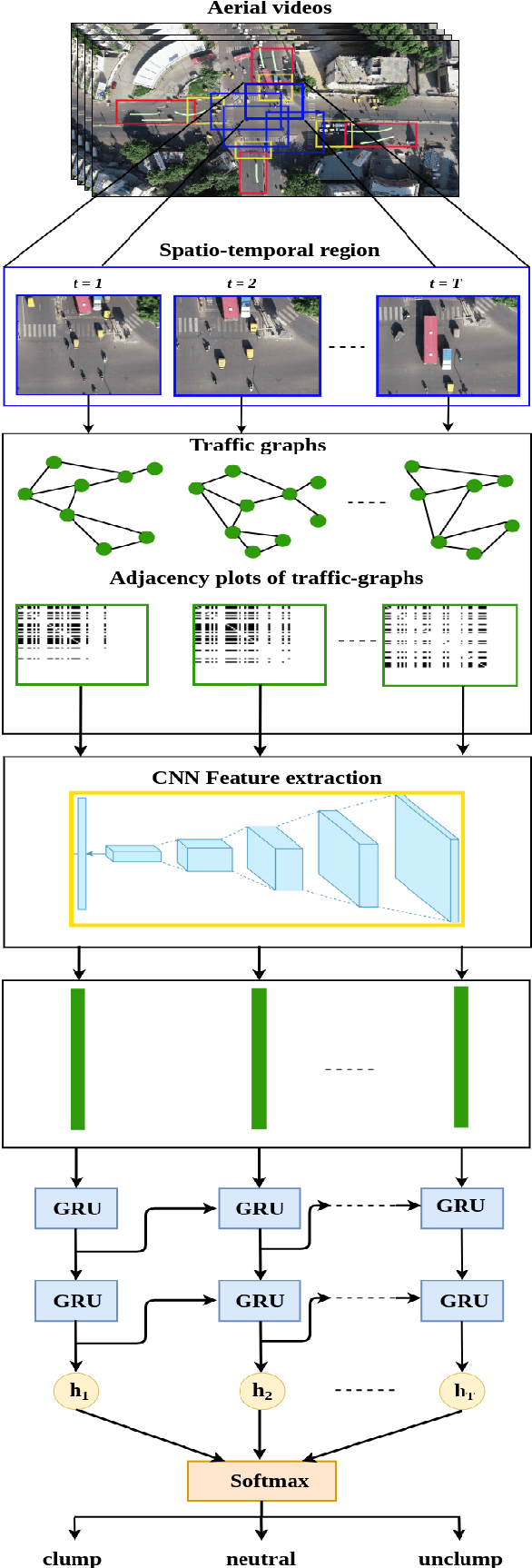

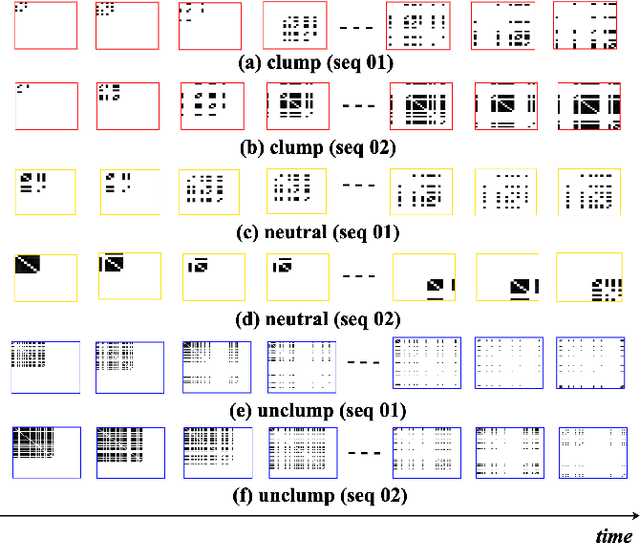

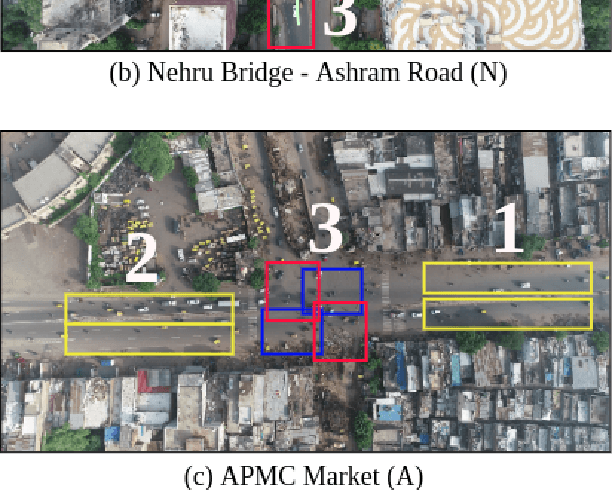

Abstract:Intersections are one of the main sources of congestion, and hence it is important to understand traffic behavior at intersections. Particularly in developing countries with high vehicle density, mixed traffic types, and lane-less driving behavior, it is difficult to distinguish between congested and normal traffic behavior. In this work, we propose a way to understand the traffic state of smaller spatial regions at intersections using traffic graphs. The way these traffic graphs evolve over time reveals different traffic states: a) congestion is forming (clumping), b) congestion is dispersing (unclumping), or c) traffic is flowing normally (neutral). We train a spatio-temporal deep network to identify these changes. We also introduce a large dataset called EyeonTraffic (EoT) containing 3 hours of aerial videos collected at 3 busy intersections in Ahmedabad, India. Our experiments on the EoT dataset show that the traffic graphs can help in correctly identifying congestion-prone behavior in different spatial regions of an intersection.
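To make the three traffic states concrete, here is a hand-written toy heuristic, not the spatio-temporal deep network the abstract describes. It assumes vehicle positions in a region are available as 2D coordinates at two time steps and labels the region by how the mean pairwise distance between vehicles changes. The function names and the tolerance `tol` are hypothetical.

```python
import numpy as np

def mean_pairwise_distance(positions):
    """Mean Euclidean distance over all vehicle pairs; positions is (V, 2), V >= 2."""
    diffs = positions[:, None, :] - positions[None, :, :]
    dists = np.linalg.norm(diffs, axis=-1)
    # Keep each unordered pair once (upper triangle, excluding the diagonal).
    upper = np.triu_indices(len(positions), k=1)
    return dists[upper].mean()

def traffic_state(prev_positions, curr_positions, tol=0.1):
    """Label a region from the change in vehicle spacing between two frames.

    tol is an assumed threshold in the same units as the positions.
    """
    change = (mean_pairwise_distance(curr_positions)
              - mean_pairwise_distance(prev_positions))
    if change < -tol:
        return "clumping"      # vehicles are moving closer together
    if change > tol:
        return "unclumping"    # vehicles are spreading apart
    return "neutral"

# Toy example: three vehicles whose spacing halves between frames.
spread = np.array([[0.0, 0.0], [10.0, 0.0], [0.0, 10.0]])
packed = spread * 0.5
state_forming = traffic_state(spread, packed)
state_dispersing = traffic_state(packed, spread)
state_steady = traffic_state(spread, spread)
```

A learned model can pick up much richer cues from how the graph evolves than this single statistic; the sketch only shows what the three labels mean in terms of vehicle spacing.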

Detection of Collision-Prone Vehicle Behavior at Intersections using Siamese Interaction LSTM

Dec 10, 2019

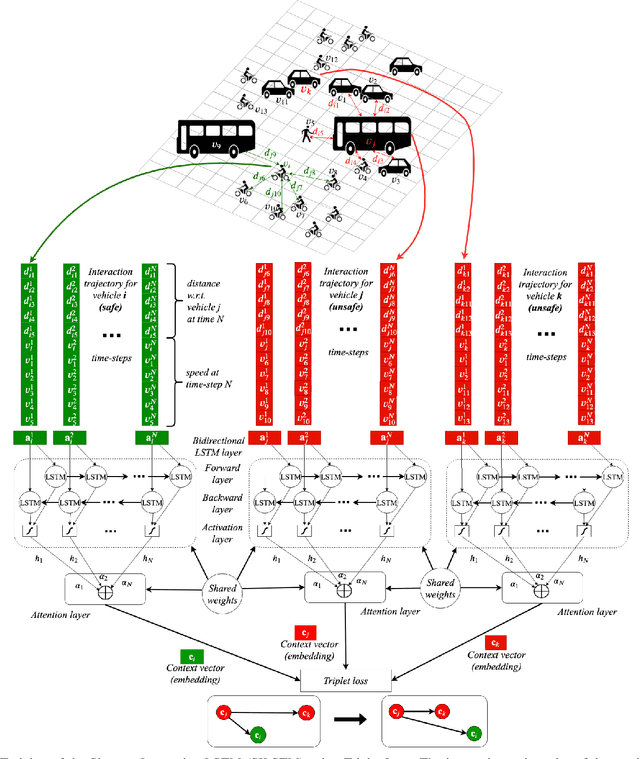

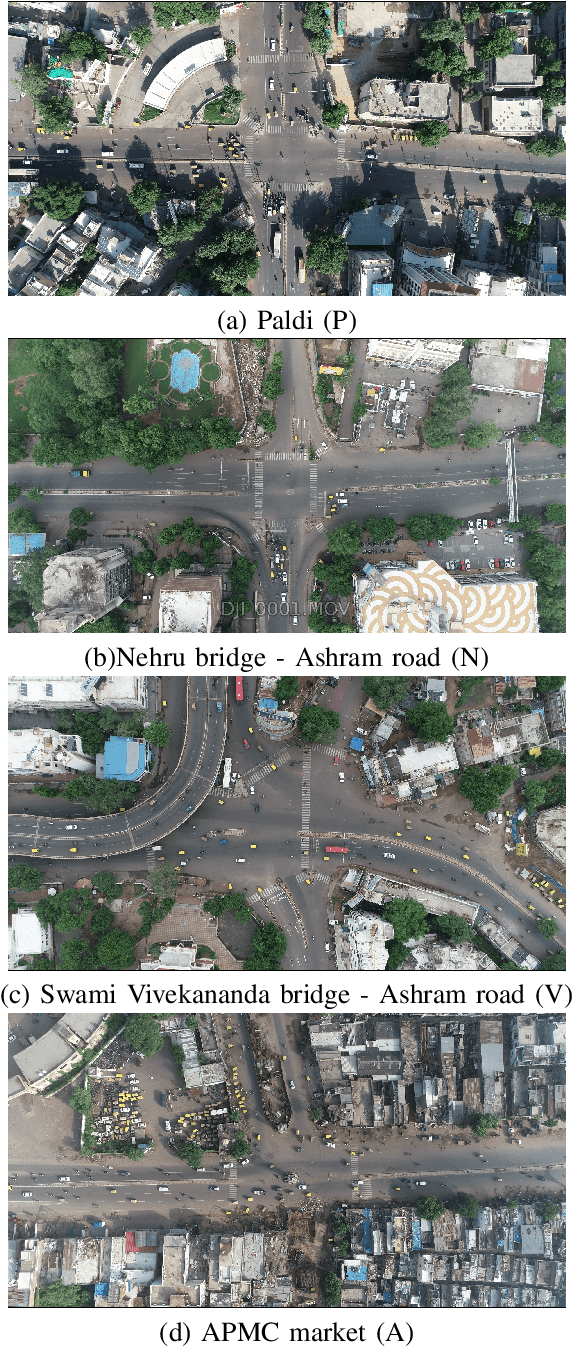

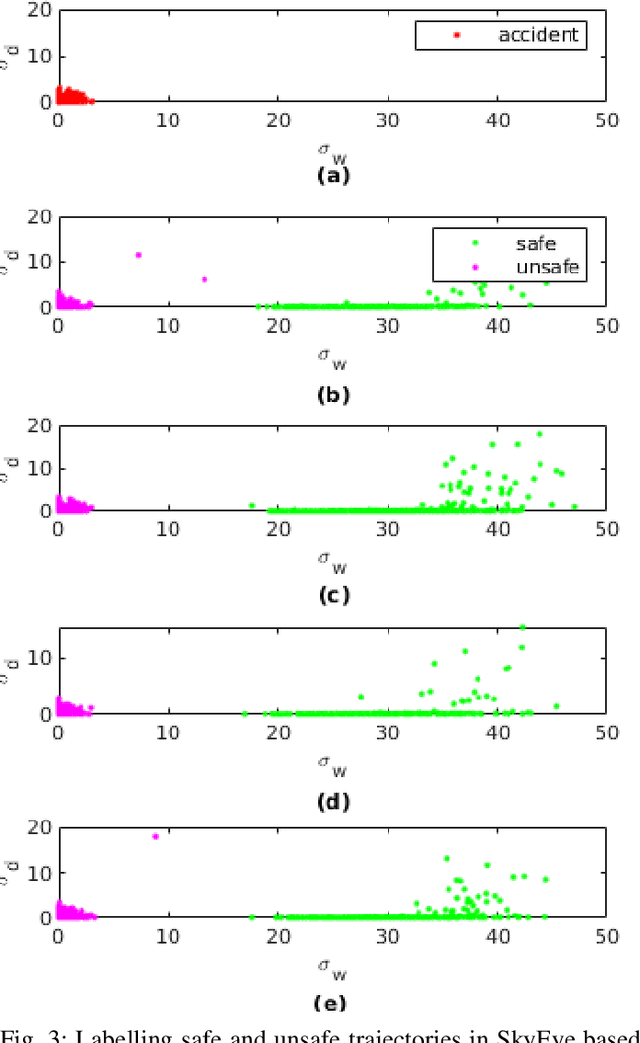

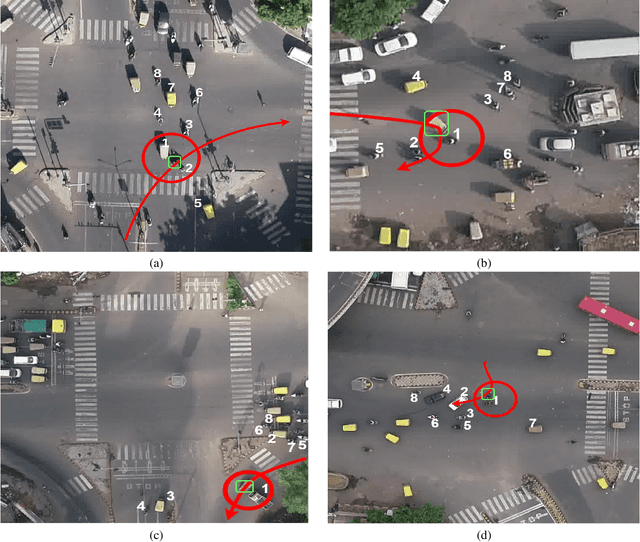

Abstract:As a large proportion of road accidents occur at intersections, monitoring the traffic safety of intersections is important. Existing approaches are designed to investigate accidents in lane-based traffic. However, such approaches are not suitable in a lane-less mixed-traffic environment where vehicles often ply very close to each other. Hence, we propose an approach called Siamese Interaction Long Short-Term Memory network (SILSTM) to detect collision-prone vehicle behavior. The SILSTM network learns the interaction trajectory of a vehicle, which describes the interactions of a vehicle with its neighbors at an intersection. Among the hundreds of interactions for every vehicle, only a few may be unsafe, and hence a temporal attention layer is used in the SILSTM network. Furthermore, the comparison of interaction trajectories requires labeling the trajectories as either unsafe or safe, but such a distinction is highly subjective, especially in lane-less traffic. Hence, in this work, we compute the characteristics of interaction trajectories involved in accidents using the collision energy model. The interaction trajectories that match accident characteristics are labeled as unsafe, while the rest are considered safe. Finally, there is no existing dataset that allows us to monitor a particular intersection for a long duration. Therefore, we introduce the SkyEye dataset, which contains 1 hour of continuous aerial footage from each of 4 chosen intersections in the city of Ahmedabad, India. A detailed evaluation of SILSTM on the SkyEye dataset shows that unsafe (collision-prone) interaction trajectories can be effectively detected at different intersections.
