Davide Scassola

Graph Conditional Flow Matching for Relational Data Generation

May 21, 2025

Abstract: Data synthesis is gaining momentum as a privacy-enhancing technology. While single-table tabular data generation has seen considerable progress, current methods for multi-table data often lack the flexibility and expressiveness needed to capture complex relational structures. In particular, they struggle with long-range dependencies and complex foreign-key relationships, such as tables with multiple parent tables or multiple types of links between the same pair of tables. We propose a generative model for relational data that generates the content of a relational dataset given the graph formed by the foreign-key relationships. We do this by learning a deep generative model of the content of the whole relational database by flow matching, where the neural network trained to denoise records leverages a graph neural network to obtain information from connected records. Our method is flexible, as it can support relational datasets with complex structures, and expressive, as the generation of each record can be influenced by any other record within the same connected component. We evaluate our method on several benchmark datasets and show that it achieves state-of-the-art performance in terms of synthetic data fidelity.

Conditioning Score-Based Generative Models by Neuro-Symbolic Constraints

Aug 31, 2023

Abstract: Score-based and diffusion models have emerged as effective approaches for both conditional and unconditional generation. Still, conditional generation is based on either a specific training of a conditional model or classifier guidance, which requires training a noise-dependent classifier, even when the classifier for uncorrupted data is given. We propose an approach to sample from unconditional score-based generative models enforcing arbitrary logical constraints, without any additional training. Firstly, we show how to manipulate the learned score in order to sample from an un-normalized distribution conditional on a user-defined constraint. Then, we define a flexible and numerically stable neuro-symbolic framework for encoding soft logical constraints. Combining these two ingredients, we obtain a general, but approximate, conditional sampling algorithm. We further develop effective heuristics aimed at improving the approximation. Finally, we show the effectiveness of our approach for various types of constraints and data: tabular data, images and time series.

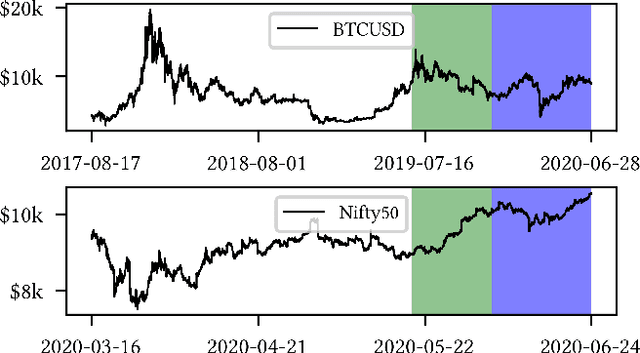

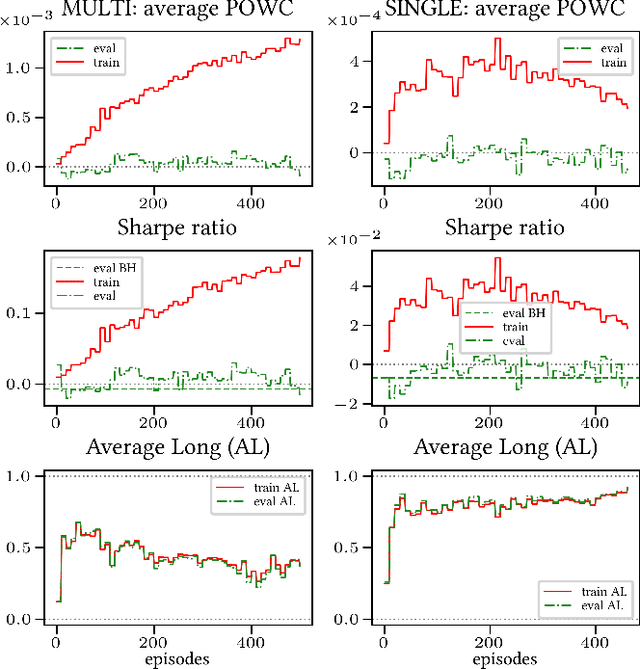

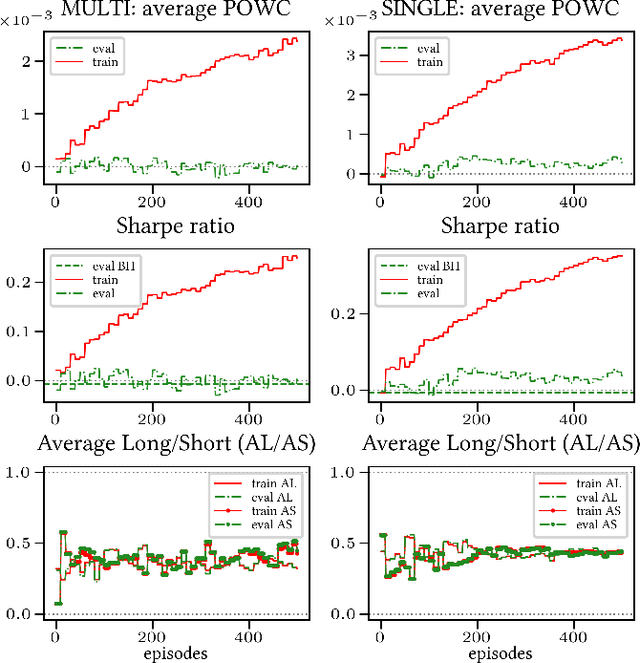

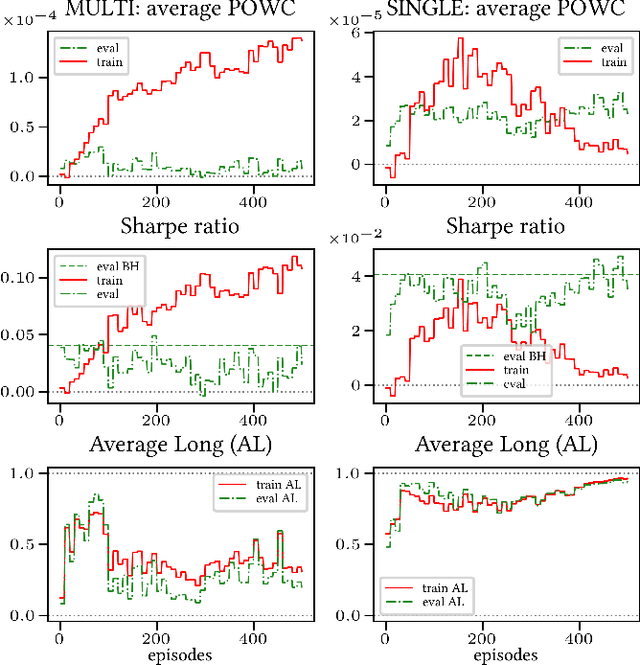

Multi-Objective reward generalization: Improving performance of Deep Reinforcement Learning for selected applications in stock and cryptocurrency trading

Mar 09, 2022

Abstract: We investigate the potential of Multi-Objective, Deep Reinforcement Learning for stock and cryptocurrency trading. More specifically, we build on the generalized setting à la Fontaine and Friedman (arXiv:1809.06364) (where the reward weighting mechanism is not specified a priori, but embedded in the learning process) by complementing it with computational speed-ups, and adding the cumulative reward's discount factor to the learning process. Firstly, we verify that the resulting Multi-Objective algorithm generalizes well, and we provide preliminary statistical evidence showing that its prediction is more stable than the corresponding Single-Objective strategy's. Secondly, we show that the Multi-Objective algorithm has a clear edge over the corresponding Single-Objective strategy when the reward mechanism is sparse (i.e., when non-null feedback is infrequent over time). Finally, we discuss the generalization properties of the discount factor. The entirety of our code is provided in open source format.
