David K. Elson

Consistency Training Helps Stop Sycophancy and Jailbreaks

Oct 31, 2025

Abstract: An LLM's factuality and refusal training can be compromised by simple changes to a prompt. Models often adopt user beliefs (sycophancy) or satisfy inappropriate requests that are wrapped within special text (jailbreaking). We explore consistency training, a self-supervised paradigm that teaches a model to be invariant to certain irrelevant cues in the prompt. Instead of teaching the model what exact response to give on a particular prompt, we aim to teach the model to behave identically across prompt data augmentations (like adding leading questions or jailbreak text). We try enforcing this invariance in two ways: over the model's external outputs (Bias-augmented Consistency Training (BCT) from Chua et al. [2025]) and over its internal activations (Activation Consistency Training (ACT), a method we introduce). Both methods reduce Gemini 2.5 Flash's susceptibility to irrelevant cues. Because consistency training uses responses from the model itself as training data, it avoids issues that arise from stale training data, such as degrading model capabilities or enforcing outdated response guidelines. While BCT and ACT reduce sycophancy equally well, BCT does better at jailbreak reduction. We think that BCT can simplify training pipelines by removing reliance on static datasets. We argue that some alignment problems are better viewed not in terms of optimal responses, but rather as consistency issues.
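As a rough illustration of the output-level idea described in the abstract, the sketch below builds training pairs in which the target for a cue-augmented prompt is the model's own response to the clean prompt. It is not the authors' implementation: `build_consistency_pairs`, `respond`, and `add_cue` are hypothetical names, and the toy stand-ins at the bottom only show the data flow.

```python
from typing import Callable, List, Tuple


def build_consistency_pairs(
    clean_prompts: List[str],
    respond: Callable[[str], str],   # hypothetical: returns the model's own response
    add_cue: Callable[[str], str],   # hypothetical: wraps a prompt with an irrelevant cue
) -> List[Tuple[str, str]]:
    """Pair each augmented prompt with the response to its clean version."""
    pairs = []
    for prompt in clean_prompts:
        target = respond(prompt)          # self-generated target, no static dataset
        pairs.append((add_cue(prompt), target))
    return pairs


# Toy stand-ins (assumptions for illustration only).
def toy_respond(prompt: str) -> str:
    return "ANSWER[" + prompt + "]"


def toy_add_cue(prompt: str) -> str:
    return "I am sure the answer is B. " + prompt


pairs = build_consistency_pairs(["What is 2 + 2?"], toy_respond, toy_add_cue)
print(pairs)
```

Fine-tuning on such pairs would push the model to answer the augmented prompt the way it already answers the clean one; the activation-level variant (ACT) is not shown here.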

Generating Different Story Tellings from Semantic Representations of Narrative

Aug 29, 2017

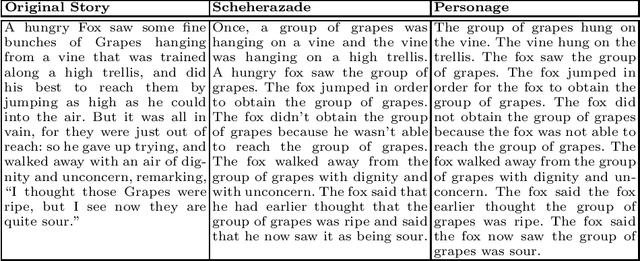

Abstract: In order to tell stories in different voices for different audiences, interactive story systems require: (1) a semantic representation of story structure, and (2) the ability to automatically generate story and dialogue from this semantic representation using some form of Natural Language Generation (NLG). However, there has been limited research on methods for linking story structures to narrative descriptions of scenes and story events. In this paper we present an automatic method for converting from Scheherazade's story intention graph, a semantic representation, to the input required by the Personage NLG engine. Using 36 Aesop Fables distributed in DramaBank, a collection of story encodings, we train translation rules on one story and then test these rules by generating text for the remaining 35. The results are measured in terms of the string similarity metrics Levenshtein Distance and BLEU score. The results show that we can generate the 35 stories with correct content: the test set stories on average are close to the output of the Scheherazade realizer, which was customized to this semantic representation. We provide some examples of story variations generated by Personage. In future work, we will experiment with measuring the quality of the same stories generated in different voices, and with techniques for making storytelling interactive.
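For readers unfamiliar with the first of the two metrics named above, here is a minimal sketch of character-level Levenshtein distance using the standard dynamic-programming recurrence. Whether the paper computes the distance over characters or words, and how it normalizes the result, is not stated in the abstract, so the character-level choice here is an assumption.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions,
    and substitutions needed to turn `a` into `b`."""
    # prev[j] holds the distance between the processed prefix of `a`
    # and the first j characters of `b`.
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        curr = [i]  # turning a[:i] into "" takes i deletions
        for j, cb in enumerate(b, 1):
            cost = 0 if ca == cb else 1
            curr.append(min(
                prev[j] + 1,         # delete ca
                curr[j - 1] + 1,     # insert cb
                prev[j - 1] + cost,  # substitute (or match)
            ))
        prev = curr
    return prev[-1]


print(levenshtein("kitten", "sitting"))  # 3
```

A lower distance between a generated story and the reference realization indicates closer surface text.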
