David A. Noever

Runway Extraction and Improved Mapping from Space Imagery

Dec 30, 2021

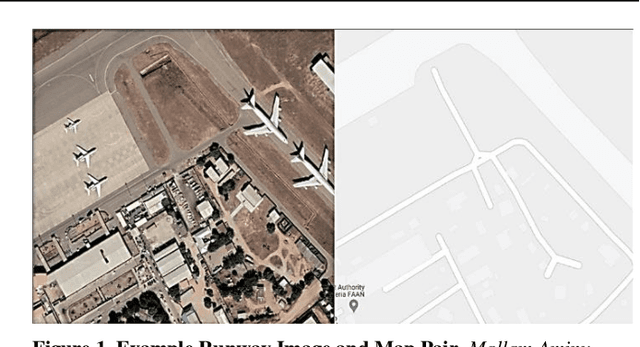

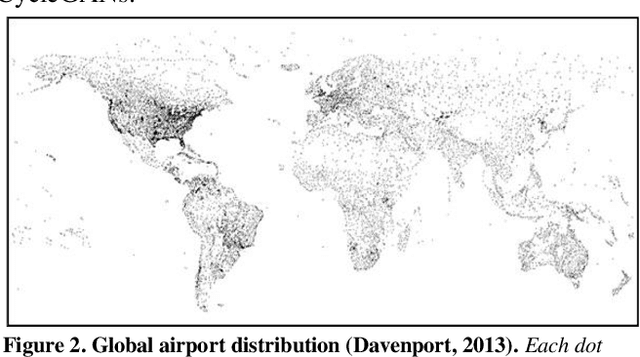

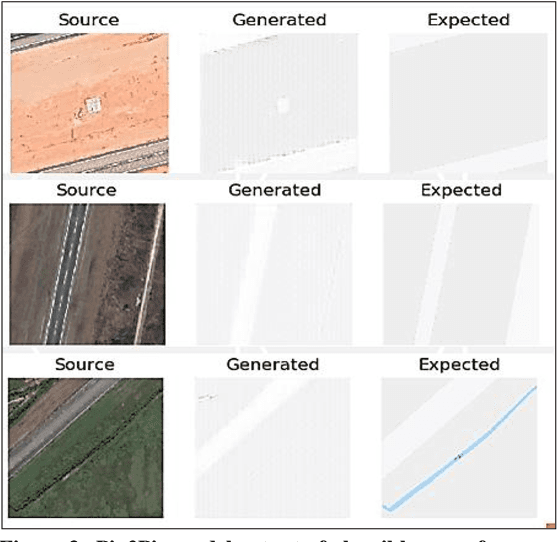

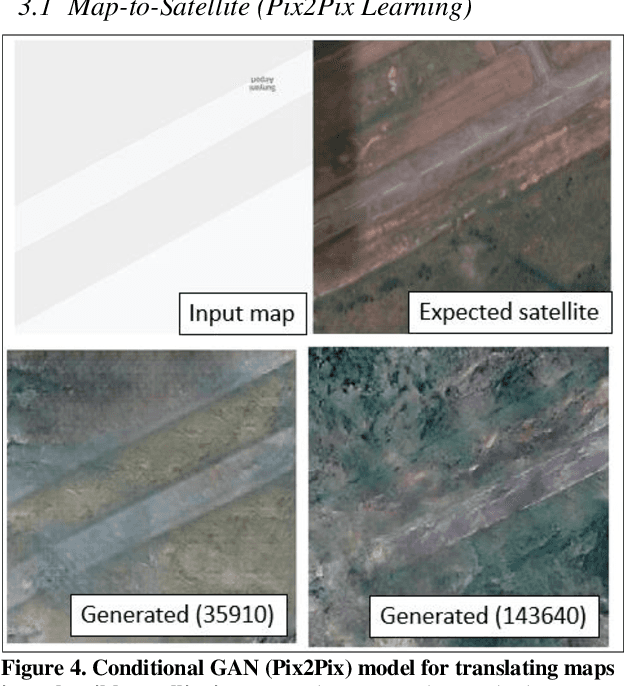

Abstract: Change detection methods applied to monitoring key infrastructure like airport runways represent an important capability for disaster relief and urban planning. The present work identifies two generative adversarial network (GAN) architectures that translate reversibly between plausible runway maps and satellite imagery. We illustrate the training capability using paired images (satellite-map) from the same point of view with the Pix2Pix architecture, a conditional GAN. In the absence of available pairs, we likewise show that CycleGAN architectures with four network heads (two discriminator-generator pairs) can also provide effective style transfer from raw image pixels to outline or feature maps. To emphasize the runway and tarmac boundaries, we experimentally show that the traditional grey-tan map palette is not a required training input and can be replaced by higher-contrast mapping palettes (red-black) for sharper runway boundaries. We preview a potentially novel use case (called "sketch2satellite") where a human roughly draws the current runway boundaries and the model automatically generates plausible satellite images. Finally, we identify examples of faulty runway maps where the published satellite imagery and mapped runways disagree, and show that an automated GAN-based update renders the correct map.
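For readers unfamiliar with the unpaired setting, the objective below is the standard CycleGAN formulation from the original CycleGAN paper, not a formula taken from this abstract. Here $X$ is assumed to stand for satellite images and $Y$ for maps, with generators $G: X \to Y$ and $F: Y \to X$ and discriminators $D_X$ and $D_Y$ (the four networks). Whether the authors used this objective unmodified, and what weight $\lambda$ they chose, is not stated in the abstract.

```latex
\mathcal{L}(G, F, D_X, D_Y)
  = \mathcal{L}_{\mathrm{GAN}}(G, D_Y, X, Y)
  + \mathcal{L}_{\mathrm{GAN}}(F, D_X, Y, X)
  + \lambda \, \mathcal{L}_{\mathrm{cyc}}(G, F),
\qquad
\mathcal{L}_{\mathrm{cyc}}(G, F)
  = \mathbb{E}_{x \sim X}\!\left[ \lVert F(G(x)) - x \rVert_1 \right]
  + \mathbb{E}_{y \sim Y}\!\left[ \lVert G(F(y)) - y \rVert_1 \right]
```

The cycle term is what makes the translation approximately reversible: an image mapped to a map and back should return close to the original.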

Reading Isn't Believing: Adversarial Attacks On Multi-Modal Neurons

Mar 18, 2021

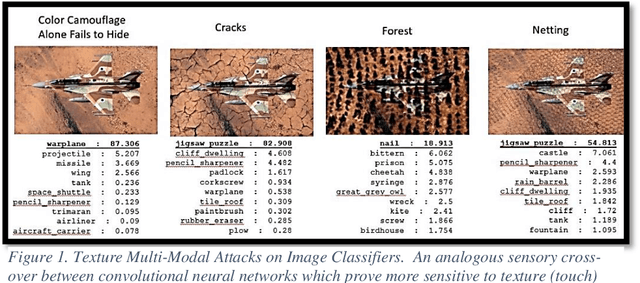

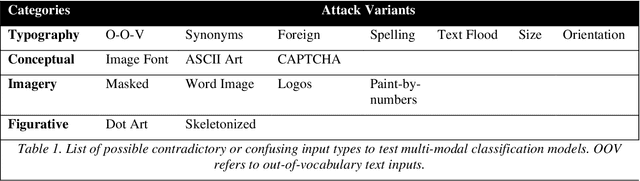

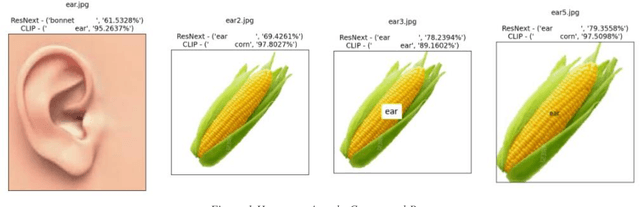

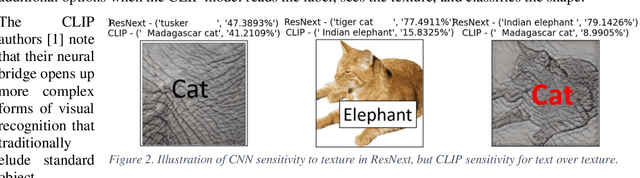

Abstract: With OpenAI's release of its CLIP model (Contrastive Language-Image Pre-training), multi-modal neural networks now provide accessible models that combine reading with visual recognition. The network offers novel ways to probe its dual abilities to read text while classifying visual objects. This paper demonstrates several new categories of adversarial attacks, spanning basic typographical, conceptual, and iconographic inputs generated to fool the model into making false or absurd classifications. We demonstrate that contradictory text and image signals can confuse the model into choosing false (visual) options. Like previous authors, we show by example that the CLIP model tends to read first and look later, a phenomenon we describe as "reading isn't believing".

Image Classifiers for Network Intrusions

Mar 13, 2021

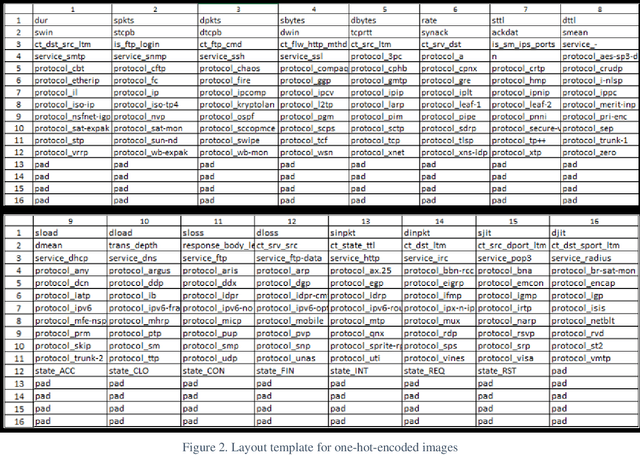

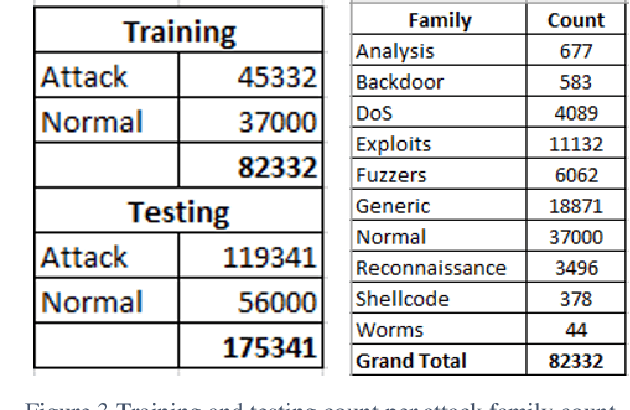

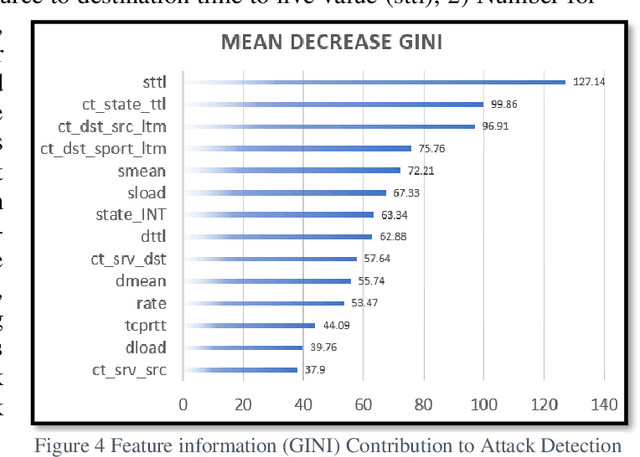

Abstract: This research recasts the network attack dataset from UNSW-NB15 as an intrusion detection problem in image space. Using one-hot encodings, the resulting grayscale thumbnails provide a quarter-million examples for deep learning algorithms. Applying the MobileNetV2 convolutional neural network architecture, the work demonstrates 97% accuracy in distinguishing normal and attack traffic. Further class refinement into 9 individual attack families (such as exploits, worms, and shellcode) shows an overall 56% accuracy. Using feature importance ranking, a random forest solution on subsets shows that source-destination factors are the most important and that the least important features are mainly obscure protocols. The dataset is available on Kaggle.
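The idea of turning a tabular network record into a grayscale thumbnail can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the function name `one_hot_thumbnail`, the tiny vocabulary, the 4x4 image size, and the restriction to categorical fields are all assumptions made for the example.

```python
import numpy as np

def one_hot_thumbnail(record, vocab, side):
    """Pack one-hot encoded categorical fields into a side x side grayscale image.

    Hypothetical helper: each category becomes one pixel (255 if active, else 0),
    and unused pixels are zero-padded.
    """
    bits = []
    for field, categories in vocab.items():
        vec = [0] * len(categories)
        vec[categories.index(record[field])] = 1  # mark the active category
        bits.extend(vec)
    if len(bits) > side * side:
        raise ValueError("thumbnail too small for the encoded record")
    pixels = np.zeros(side * side, dtype=np.uint8)
    pixels[: len(bits)] = np.array(bits, dtype=np.uint8) * 255
    return pixels.reshape(side, side)

# Illustrative vocabulary and record (values chosen for the example only).
vocab = {
    "proto": ["tcp", "udp", "arp"],
    "service": ["-", "http", "dns", "ftp"],
    "state": ["FIN", "CON", "INT"],
}
record = {"proto": "udp", "service": "dns", "state": "INT"}
thumb = one_hot_thumbnail(record, vocab, side=4)
```

Each record lights up exactly one pixel per categorical field, so an image classifier such as MobileNetV2 can then be trained on the thumbnails as it would be on any small grayscale image.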

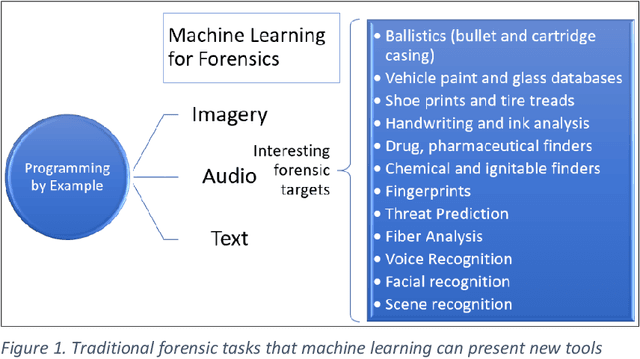

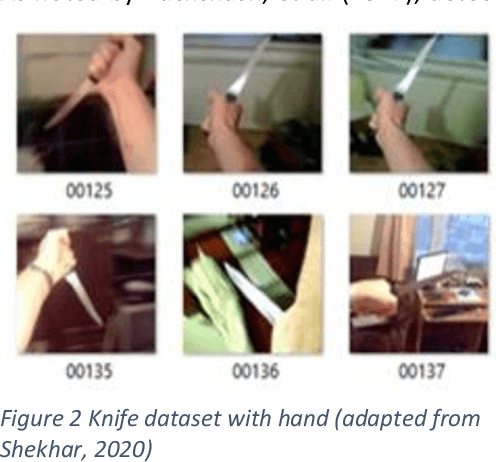

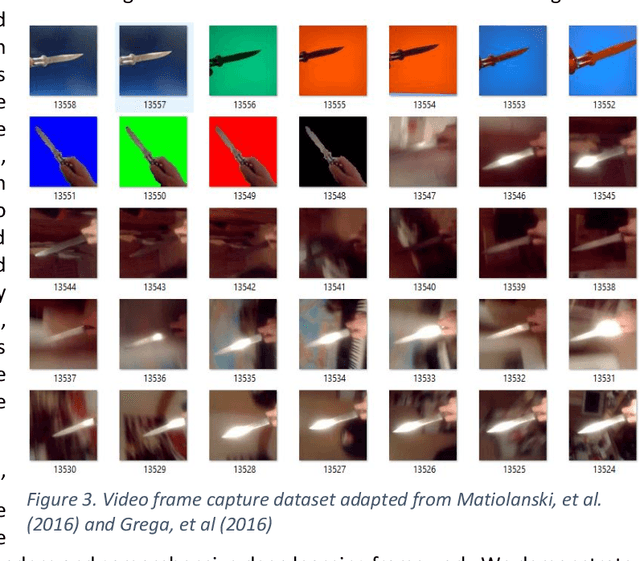

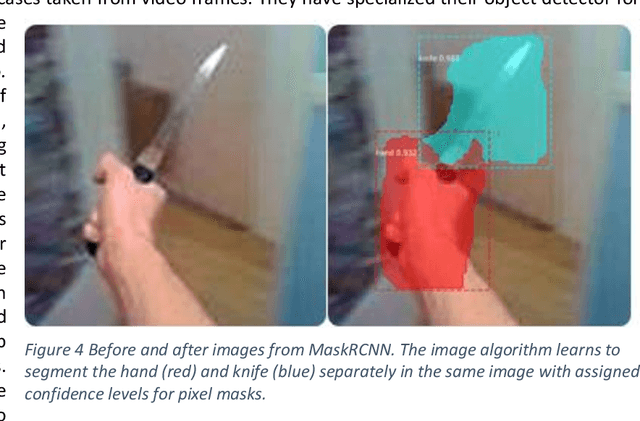

Knife and Threat Detectors

Apr 08, 2020

Abstract: Despite rapid advances in image-based machine learning, the threat identification of a knife-wielding attacker has not garnered substantial academic attention. This research gap is hard to justify given the high knife assault rate (>100,000 annually) and the increasing availability of public video surveillance to analyze and forensically document. We present three complementary methods for scoring automated threat identification using multiple knife image datasets, each with the goal of narrowing down possible assault intentions while minimizing false positives and risky false negatives. To alert an observer to the knife-wielding threat, we test and deploy a classifier built around MobileNet in a sparse and pruned neural network with a small memory requirement (< 2.2 megabytes) and 95% test accuracy. Second, we train a detection algorithm (MaskRCNN) to segment the hand from the knife in a single image and assign a confidence to their relative location. This segmentation provides not only localization with bounding boxes but also relative positions from which overhand threats can be inferred. A final model built on the PoseNet architecture assigns anatomical waypoints or skeletal features to narrow the threat characteristics and reduce misunderstood intentions. We further identify and fill existing data gaps that might blind a deployed knife threat detector, for example by collecting innocuous hand and fist images as important negative training sets. One original research contribution is this systematic survey of timely and readily available image-based alerts, automated on commodity hardware and software, that can task and prioritize crime prevention countermeasures prior to a tragic outcome.
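The use of relative hand and knife positions to flag an overhand grip can be illustrated with a simple geometric check. This is a hypothetical heuristic written for illustration, assuming bounding boxes in pixel coordinates such as a detector like MaskRCNN might output; the `Box` class, the `knife_above_hand` function, and the `margin` parameter are not from the paper.

```python
from dataclasses import dataclass

@dataclass
class Box:
    """Axis-aligned bounding box in image pixel coordinates (y grows downward)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    @property
    def center(self):
        return ((self.x_min + self.x_max) / 2, (self.y_min + self.y_max) / 2)

def knife_above_hand(hand, knife, margin=0.0):
    """Return True if the knife's center sits above the hand's center.

    In image coordinates a smaller y means higher in the frame, so the
    knife is 'above' when its center y is less than the hand's by at
    least `margin` pixels.
    """
    return knife.center[1] + margin < hand.center[1]

# Illustrative boxes (made-up coordinates, not detector output).
hand = Box(100, 200, 160, 260)
knife = Box(110, 80, 150, 190)
overhand = knife_above_hand(hand, knife)
```

A real system would likely combine a cue like this with detection confidence and pose keypoints rather than rely on a single geometric test.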
