Christian Grussler

Tractable downfall of basis pursuit in structured sparse optimization

Mar 24, 2025

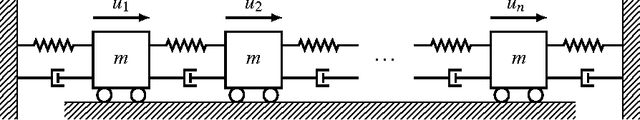

Abstract: The problem of finding the sparsest solution to an underdetermined linear system of equations, as it often appears in data analysis, optimal control and system identification problems, is considered. This non-convex problem is commonly solved by convexification via $\ell_1$-norm minimization, also known as basis pursuit. In this work, a class of structured matrices, representing the system of equations, is introduced for which the basis pursuit approach tractably fails to recover the sparsest solution. In particular, we are able to identify matrix columns that correspond to unrecoverable non-zero entries of the sparsest solution, as well as to conclude the uniqueness of the sparsest solution in polynomial time. These deterministic guarantees contrast with popular probabilistic ones and, as such, provide valuable insights into the a priori design of sparse optimization problems. As our matrix structure appears naturally in optimal control problems, we exemplify our findings by showing that it is possible to verify a priori that basis pursuit may fail in finding fuel-optimal regulators for a class of discrete-time linear time-invariant systems.
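For orientation, the two problems contrasted in this abstract can be written out as follows. The symbols $A$ (the matrix of the underdetermined system), $b$ (the right-hand side) and $x$ (the unknown) are assumed notation; they are not taken from the abstract. The sparsest-solution problem is

$$
\min_{x} \ \|x\|_0 \quad \text{subject to} \quad Ax = b,
$$

where $\|x\|_0$ counts the non-zero entries of $x$, and basis pursuit replaces it with the convex problem

$$
\min_{x} \ \|x\|_1 \quad \text{subject to} \quad Ax = b.
$$

Basis pursuit can be posed as a linear program by introducing an auxiliary vector $t$ that bounds $|x|$ entrywise:

$$
\min_{x,\,t} \ \mathbf{1}^{\top} t \quad \text{subject to} \quad -t \le x \le t, \quad Ax = b.
$$

Basis pursuit "fails" in the sense of the abstract when no minimizer of the $\ell_1$ problem is a sparsest solution.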

Efficient Proximal Mapping Computation for Unitarily Invariant Low-Rank Inducing Norms

Oct 17, 2018

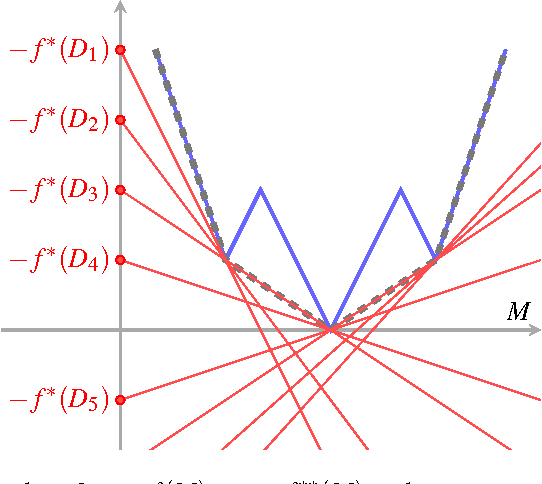

Abstract: Low-rank inducing unitarily invariant norms have been introduced to convexify problems with low-rank/sparsity constraints. They are the convex envelope of a unitarily invariant norm and the indicator function of an upper bounding rank constraint. The most well-known member of this family is the so-called nuclear norm. To solve optimization problems involving such norms with proximal splitting methods, efficient ways of evaluating the proximal mapping of the low-rank inducing norms are needed. This is known for the nuclear norm, but not for most other members of the low-rank inducing family. This work supplies a framework that reduces the proximal mapping evaluation to a nested binary search, in which each iteration requires the solution of a much simpler problem. This simpler problem can often be solved analytically, as is demonstrated for the so-called low-rank inducing Frobenius and spectral norms. Moreover, the framework allows one to compute the proximal mapping of compositions of these norms with increasing convex functions and the projections onto their epigraphs. This has the additional advantage that we can also deal with compositions of increasing convex functions and low-rank inducing norms in proximal splitting methods.
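As a point of reference for the case the abstract calls known, the proximal mapping of the nuclear norm can be evaluated by soft-thresholding the singular values. The sketch below is a minimal NumPy illustration of that standard result, not the nested binary search framework of the paper; the function name `prox_nuclear_norm` and the parameter `tau` are hypothetical names chosen for this example.

```python
import numpy as np


def prox_nuclear_norm(Z, tau):
    """Evaluate the prox of tau * (nuclear norm) at Z.

    Illustrative helper (name and signature are assumptions): it solves
    argmin_X 0.5 * ||X - Z||_F^2 + tau * ||X||_*
    by soft-thresholding the singular values of Z.
    """
    # Thin SVD: Z = U @ diag(s) @ Vt
    U, s, Vt = np.linalg.svd(Z, full_matrices=False)
    # Shrink each singular value by tau and clip at zero
    s_shrunk = np.maximum(s - tau, 0.0)
    # Scale the columns of U by the shrunk singular values and recombine
    return (U * s_shrunk) @ Vt
```

For example, applying it to `np.diag([3.0, 1.0])` with `tau = 2.0` shrinks the singular values 3 and 1 to 1 and 0, so the result has rank one.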

Low-Rank Inducing Norms with Optimality Interpretations

Jun 11, 2018

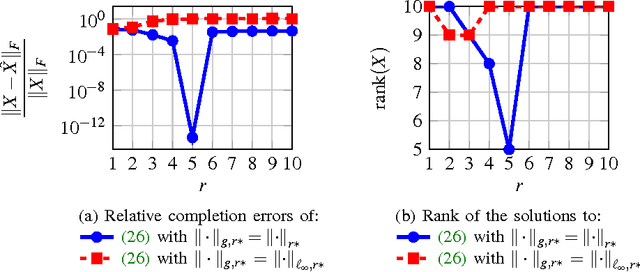

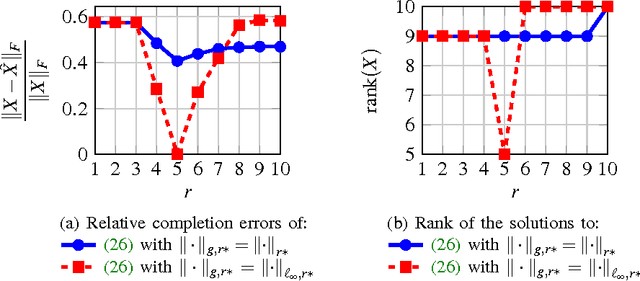

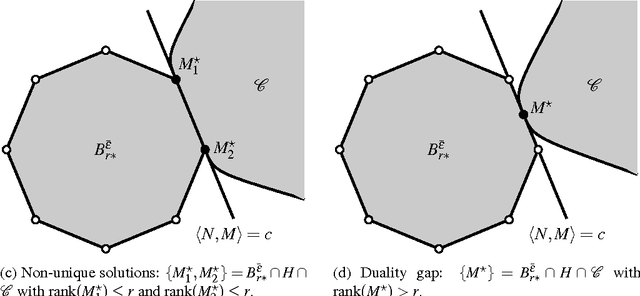

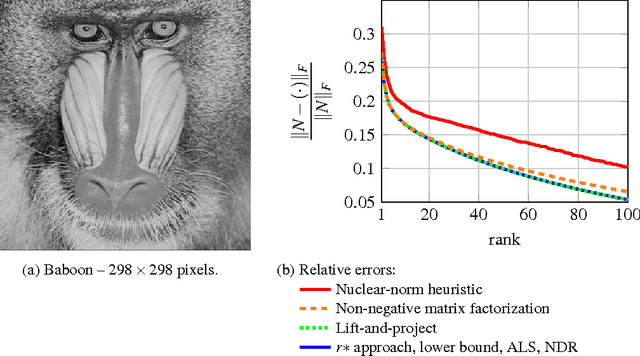

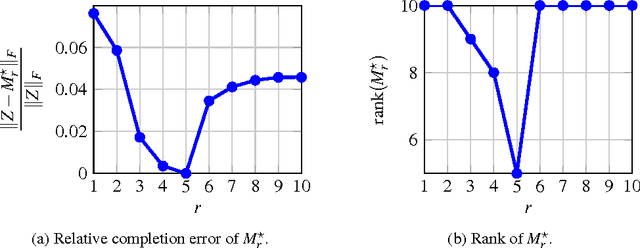

Abstract: Optimization problems with rank constraints appear in many diverse fields such as control, machine learning and image analysis. Since the rank constraint is non-convex, these problems are often approximately solved via convex relaxations. Nuclear norm regularization is the prevailing convexifying technique for dealing with this type of problem. This paper introduces a family of low-rank inducing norms and regularizers which includes the nuclear norm as a special case. A posteriori guarantees on solving an underlying rank constrained optimization problem with these convex relaxations are provided. We evaluate the performance of the low-rank inducing norms on three matrix completion problems. In all examples, the nuclear norm heuristic is outperformed by convex relaxations based on other low-rank inducing norms. For two of the problems there exist low-rank inducing norms that succeed in recovering the partially unknown matrix, while the nuclear norm fails. These low-rank inducing norms are shown to be representable as semi-definite programs. Moreover, these norms have cheaply computable proximal mappings, which makes it possible to also solve problems of large size using first-order methods.

Low-rank Optimization with Convex Constraints

Mar 06, 2018

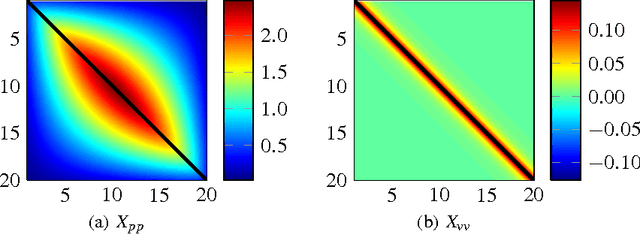

Abstract: The problem of low-rank approximation with convex constraints, which appears in data analysis, system identification, model order reduction, low-order controller design and low-complexity modelling, is considered. Given a matrix, the objective is to find a low-rank approximation that meets rank and convex constraints, while minimizing the distance to the matrix in the squared Frobenius norm. In many situations, this non-convex problem is convexified by nuclear norm regularization. However, we will see that the approximations obtained by this method may be far from optimal. In this paper, we propose an alternative convex relaxation that uses the convex envelope of the squared Frobenius norm and the rank constraint. With this approach, easily verifiable conditions are obtained under which the solutions to the convex relaxation and the original non-convex problem coincide. An SDP representation of the convex envelope is derived, which allows us to apply this approach to several known problems. Our example on optimal low-rank Hankel approximation/model reduction illustrates that the proposed convex relaxation performs consistently better than nuclear norm regularization and may outperform balanced truncation.

Local Convergence of Proximal Splitting Methods for Rank Constrained Problems

Oct 11, 2017

Abstract: We analyze the local convergence of proximal splitting algorithms for solving optimization problems that are convex apart from a rank constraint. For this, we show conditions under which the proximal operator of a function involving the rank constraint is locally identical to the proximal operator of its convex envelope, hence implying local convergence. The conditions imply that the non-convex algorithms locally converge to a solution whenever a convex relaxation involving the convex envelope can be expected to solve the non-convex problem.
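To make the phrase "proximal operator of a function involving the rank constraint" more concrete, consider the simplest such function, the indicator of the set of matrices with rank at most r. This choice is an assumption made for illustration; the abstract does not specify which functions the paper treats. For this indicator, the proximal operator is a projection onto the rank constraint, and by the Eckart-Young theorem a projection is obtained by truncating the singular value decomposition. The function name `project_rank` is hypothetical.

```python
import numpy as np


def project_rank(Z, r):
    """Return a closest matrix to Z (in Frobenius norm) with rank at most r.

    Illustrative helper (name and signature are assumptions). The result
    is unique only if the r-th and (r+1)-th singular values of Z differ.
    """
    # Thin SVD with singular values sorted in descending order
    U, s, Vt = np.linalg.svd(Z, full_matrices=False)
    # Keep the r largest singular values and their singular vectors
    return (U[:, :r] * s[:r]) @ Vt[:r, :]
```

Unlike the prox of a convex envelope, this mapping is set-valued when singular values tie, which is one reason the convergence statement in the abstract is a local one.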
