Carri K. Glide-Hurst

Modality-AGnostic Image Cascade (MAGIC) for Multi-Modality Cardiac Substructure Segmentation

Jun 12, 2025

Abstract: Cardiac substructures are essential in thoracic radiation therapy planning to minimize risk of radiation-induced heart disease. Deep learning (DL) offers efficient methods to reduce contouring burden but lacks generalizability across different modalities and overlapping structures. This work introduces and validates a Modality-AGnostic Image Cascade (MAGIC) for comprehensive and multi-modal cardiac substructure segmentation. MAGIC is implemented through replicated encoding and decoding branches of an nnU-Net-based, U-shaped backbone conserving the function of a single model. Twenty cardiac substructures (heart, chambers, great vessels (GVs), valves, coronary arteries (CAs), and conduction nodes) from simulation CT (Sim-CT), low-field MR-Linac, and cardiac CT angiography (CCTA) modalities were manually delineated and used to train (n=76), validate (n=15), and test (n=30) MAGIC. Twelve comparison models (four segmentation subgroups across three modalities) were equivalently trained. All methods were compared for training efficiency and against reference contours using the Dice Similarity Coefficient (DSC) and two-tailed Wilcoxon Signed-Rank test (threshold, p<0.05). Average DSC scores were 0.75(0.16) for Sim-CT, 0.68(0.21) for MR-Linac, and 0.80(0.16) for CCTA. MAGIC outperforms the comparison in 57% of cases, with limited statistical differences. MAGIC offers an effective and accurate segmentation solution that is lightweight and capable of segmenting multiple modalities and overlapping structures in a single model. MAGIC further enables clinical implementation by simplifying the computational requirements and offering unparalleled flexibility for clinical settings.
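The Dice Similarity Coefficient named in the abstract is conventionally defined as twice the overlap between two masks divided by their combined size. The following is a minimal sketch of that standard definition, not the authors' evaluation code. The function name `dice_similarity`, the flattened 0/1 mask representation, and the choice to return 1.0 when both masks are empty are all assumptions made for illustration.

```python
def dice_similarity(pred, ref):
    """Dice Similarity Coefficient between two binary masks.

    Both masks are assumed to be flattened sequences of 0/1 (or bool)
    values of equal length; this layout is an illustrative assumption.
    """
    pred = [bool(v) for v in pred]
    ref = [bool(v) for v in ref]
    if len(pred) != len(ref):
        raise ValueError("masks must have the same number of voxels")

    # Voxels labeled as foreground in both masks.
    overlap = sum(1 for p, r in zip(pred, ref) if p and r)
    total = sum(pred) + sum(ref)

    # Assumed convention: two empty masks count as a perfect match.
    if total == 0:
        return 1.0
    return 2.0 * overlap / total


if __name__ == "__main__":
    predicted = [1, 1, 0, 0]
    reference = [1, 0, 0, 0]
    print(dice_similarity(predicted, reference))
```

In the usage example, one voxel overlaps and the masks contain three foreground voxels in total, so the score is 2 x 1 / 3. Small structures are sensitive to single-voxel disagreements under this metric.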

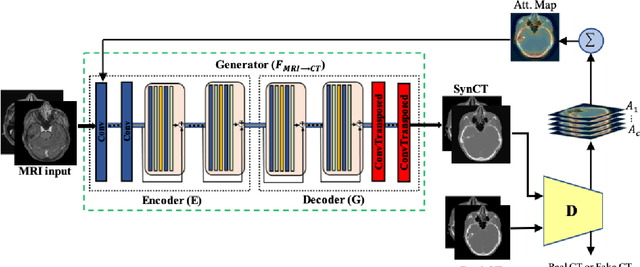

Attention-Guided Generative Adversarial Network to Address Atypical Anatomy in Modality Transfer

Jun 27, 2020

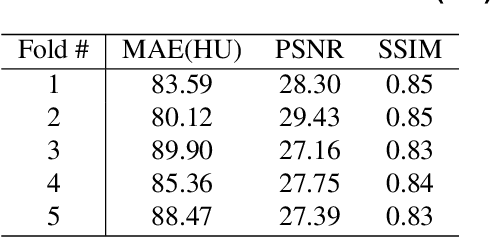

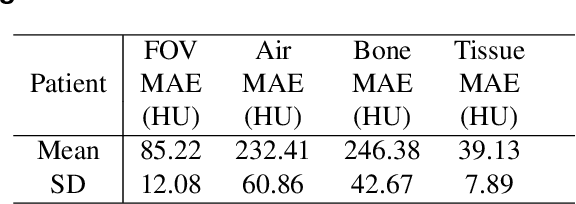

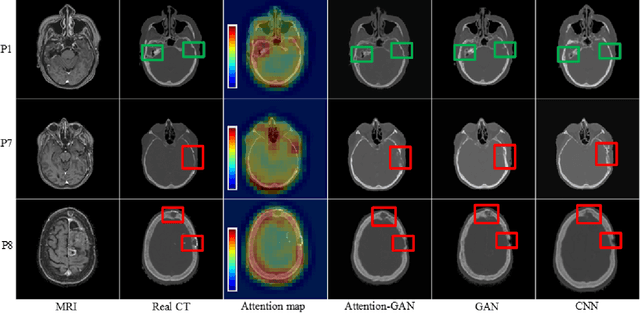

Abstract: Recently, interest in MR-only treatment planning using synthetic CTs (synCTs) has grown rapidly in radiation therapy. However, developing class solutions for medical images that contain atypical anatomy remains a major limitation. In this paper, we propose a novel spatial attention-guided generative adversarial network (attention-GAN) model to generate accurate synCTs using T1-weighted MRI images as the input to address atypical anatomy. Experimental results on fifteen brain cancer patients show that attention-GAN outperformed existing synCT models and achieved an average MAE of 85.22$\pm$12.08, 232.41$\pm$60.86, and 246.38$\pm$42.67 Hounsfield units between synCT and CT-SIM across the entire head, bone, and air regions, respectively. Qualitative analysis shows that attention-GAN has the ability to use spatially focused areas to better handle outliers, areas with complex anatomy or post-surgical regions, and thus offer strong potential for supporting near real-time MR-only treatment planning.
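The region-wise MAE figures above can be read as the mean absolute Hounsfield-unit difference between synCT and CT-SIM, taken only over voxels inside a region mask (entire head, bone, or air). The sketch below illustrates that generic calculation and is not the authors' evaluation code. The name `masked_mae`, the flattened voxel lists, and the toy values are hypothetical.

```python
def masked_mae(synct, ct, mask):
    """Mean absolute error in Hounsfield units inside a region mask.

    synct, ct: flattened sequences of HU values (assumed layout).
    mask: flattened sequence of 0/1 flags selecting the region.
    """
    if not (len(synct) == len(ct) == len(mask)):
        raise ValueError("inputs must have the same number of voxels")

    # Absolute HU differences for voxels inside the region only.
    diffs = [abs(s - c) for s, c, m in zip(synct, ct, mask) if m]
    if not diffs:
        raise ValueError("mask selects no voxels")
    return sum(diffs) / len(diffs)


if __name__ == "__main__":
    # Toy values, not data from the paper.
    synct_hu = [0, 100, 1000, -1000]
    ct_hu = [10, 80, 900, -1000]
    region = [1, 1, 1, 0]
    print(masked_mae(synct_hu, ct_hu, region))
```

Because the average is restricted to the mask, a small region with large errors (such as bone or air) can report a much higher MAE than the whole head, which matches the pattern in the reported numbers.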
