Brendan Hertel

University of Massachusetts Lowell

Robot Learning Using Multi-Coordinate Elastic Maps

May 09, 2025

Abstract: To learn manipulation skills, robots need to understand the features of those skills. An easy way for robots to learn is through Learning from Demonstration (LfD), where the robot learns a skill from an expert demonstrator. While the main features of a skill might be captured in one differential coordinate (i.e., Cartesian), they could have meaning in other coordinates. For example, an important feature of a skill may be its shape or velocity profile, which is difficult to discover in the Cartesian differential coordinate. In this work, we present a method that enables robots to learn skills from human demonstrations by encoding these skills into various differential coordinates and then determining the importance of each coordinate for reproducing the skill. We also introduce a modified form of Elastic Maps that includes multiple differential coordinates, combining statistical modeling of skills in these differential coordinate spaces. Elastic Maps, which are flexible and fast to compute, allow for the incorporation of several different types of constraints and the use of any number of demonstrations. Additionally, we propose methods for auto-tuning several parameters associated with the modified Elastic Map formulation. We validate our approach in several simulated experiments and a real-world writing task with a UR5e manipulator arm.

Parameter-Free Segmentation of Robot Movements with Cross-Correlation Using Different Similarity Metrics

May 09, 2025

Abstract: Often, robots are asked to execute primitive movements, whether as a single action or in a series of actions representing a larger, more complex task. These movements can be learned in many ways, but a common one is from demonstrations presented to the robot by a teacher. However, these demonstrations are not always simple movements themselves, and complex demonstrations must be broken down, or segmented, into primitive movements. In this work, we present a parameter-free approach to segmentation using techniques inspired by autocorrelation and cross-correlation from signal processing. In cross-correlation, a representative signal is found in some larger, more complex signal by correlating the representative signal with the larger signal. This same idea can be applied to segmenting robot motion and demonstrations, given a representative motion primitive. This results in a fast and accurate segmentation that requires no parameters. One of the main contributions of this paper is the modification of the cross-correlation process by employing similarity metrics that can capture features specific to robot movements. To validate our framework, we conduct several experiments on complex tasks both in simulation and in the real world. We also evaluate the effectiveness of our segmentation framework by comparing various similarity metrics.
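The idea of locating a representative primitive inside a longer signal can be illustrated with a minimal sketch. This is not the paper's implementation: the function names `sliding_similarity` and `neg_sse`, the one-dimensional toy signal, and the choice of negative sum of squared errors as the similarity metric are all assumptions made for illustration. The metric is passed in as a parameter to mirror the abstract's point that the correlation step can be swapped for other similarity measures.

```python
import numpy as np

def sliding_similarity(signal, template, metric):
    """Score the template against every same-length window of the signal."""
    n, m = len(signal), len(template)
    return np.array([metric(signal[i:i + m], template) for i in range(n - m + 1)])

def neg_sse(window, template):
    """Hypothetical similarity metric: negative sum of squared errors (higher is better)."""
    return -float(np.sum((window - template) ** 2))

# Toy 1-D "demonstration": a flat stretch, one bump (the primitive), another flat stretch.
template = np.sin(np.linspace(0.0, np.pi, 20))
signal = np.concatenate([np.zeros(15), template, np.zeros(25)])

# The best-scoring window marks where the primitive starts and ends.
scores = sliding_similarity(signal, template, neg_sse)
start = int(np.argmax(scores))
end = start + len(template)
print(start, end)
```

Because the metric is an argument, a different measure (for example, one sensitive to shape rather than position) could be substituted without changing the sliding-window logic.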

An Adaptive Framework for Manipulator Skill Reproduction in Dynamic Environments

May 24, 2024

Abstract: Robot skill learning and execution in uncertain and dynamic environments is a challenging task. This paper proposes an adaptive framework that combines Learning from Demonstration (LfD), environment state prediction, and high-level decision making. Proactive adaptation prevents the need for reactive adaptation, which lags behind changes in the environment rather than anticipating them. We propose a novel LfD representation, Elastic-Laplacian Trajectory Editing (ELTE), which continuously adapts the trajectory shape to predictions of future states. Then, a high-level reactive system using an Unscented Kalman Filter (UKF) and Hidden Markov Model (HMM) prevents unsafe execution in the current state of the dynamic environment based on a discrete set of decisions. We first validate our LfD representation in simulation, then experimentally assess the entire framework using a legged mobile manipulator in 36 real-world scenarios. We show the effectiveness of the proposed framework under different dynamic changes in the environment. Our results show that the proposed framework produces robust and stable adaptive behaviors.

A Framework for Learning and Reusing Robotic Skills

Apr 29, 2024

Abstract: In this paper, we present our work in progress towards creating a library of motion primitives. This library facilitates easier and more intuitive learning and reusing of robotic skills. Users can teach robots complex skills through Learning from Demonstration; the demonstrations are automatically segmented into primitives and stored in clusters of similar skills. We propose a novel multimodal segmentation method as well as a novel trajectory clustering method. Then, when needed for reuse, we transform primitives into new environments using trajectory editing. We present simulated results for our framework with demonstrations taken on real-world robots.

Confidence-Based Skill Reproduction Through Perturbation Analysis

May 04, 2023

Abstract: Several methods exist for teaching robots, with one of the most prominent being Learning from Demonstration (LfD). Many LfD representations can be formulated as constrained optimization problems. We propose a novel convex formulation of the LfD problem represented as elastic maps, which models reproductions as a series of connected springs. Relying on the properties of strong duality and perturbation analysis of the constrained optimization problem, we create a confidence metric. Our method allows the demonstrated skill to be reproduced with varying confidence levels, yielding different levels of smoothness and flexibility. Our confidence-based method provides reproductions of the skill that perform better for a given set of constraints. By analyzing the constraints, our method can also remove unnecessary constraints. We validate our approach in several simulated and real-world experiments using a Jaco2 7-DOF manipulator arm.

DECISIVE Benchmarking Data Report: sUAS Performance Results from Phase I

Jan 20, 2023

Abstract: This report reviews all results derived from performance benchmarking conducted during Phase I of the Development and Execution of Comprehensive and Integrated Subterranean Intelligent Vehicle Evaluations (DECISIVE) project by the University of Massachusetts Lowell, using the test methods specified in the DECISIVE Test Methods Handbook v1.1 for evaluating small unmanned aerial systems (sUAS) performance in subterranean and constrained indoor environments, spanning communications, field readiness, interface, obstacle avoidance, navigation, mapping, autonomy, trust, and situation awareness. Using those 20 test methods, over 230 tests were conducted across 8 sUAS platforms: Cleo Robotics Dronut X1P (P = prototype), FLIR Black Hornet PRS, Flyability Elios 2 GOV, Lumenier Nighthawk V3, Parrot ANAFI USA GOV, Skydio X2D, Teal Golden Eagle, and Vantage Robotics Vesper. Best-in-class criteria are specified for each applicable test method, and the sUAS that meet these criteria are named for each test method, including a high-level executive summary of their performance.

DECISIVE Test Methods Handbook: Test Methods for Evaluating sUAS in Subterranean and Constrained Indoor Environments, Version 1.1

Nov 01, 2022

Abstract: This handbook outlines all test methods developed under the Development and Execution of Comprehensive and Integrated Subterranean Intelligent Vehicle Evaluations (DECISIVE) project by the University of Massachusetts Lowell for evaluating small unmanned aerial systems (sUAS) performance in subterranean and constrained indoor environments, spanning communications, field readiness, interface, obstacle avoidance, navigation, mapping, autonomy, trust, and situation awareness. For sUAS deployment in subterranean and constrained indoor environments, the handbook puts forth two assumptions about applicable sUAS to be evaluated using these test methods: (1) the sUAS is able to operate without access to a GPS signal, and (2) its width from prop tip to prop tip does not exceed 91 cm (36 in) (i.e., it can physically fit through a typical doorway, although successful navigation through is not guaranteed). All test methods are specified using a common format: Purpose, Summary of Test Method, Apparatus and Artifacts, Equipment, Metrics, Procedure, and Example Data. All test methods are designed to be run in real-world environments (e.g., MOUT sites) or using fabricated apparatuses (e.g., test bays built from wood, or contained inside of one or more shipping containers).

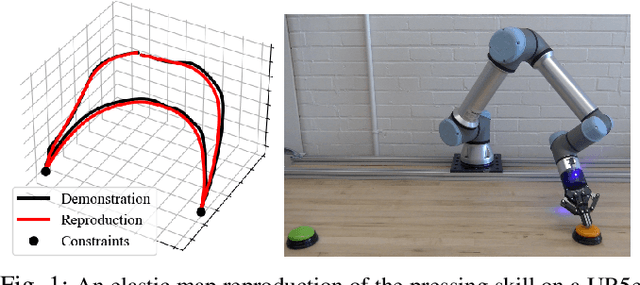

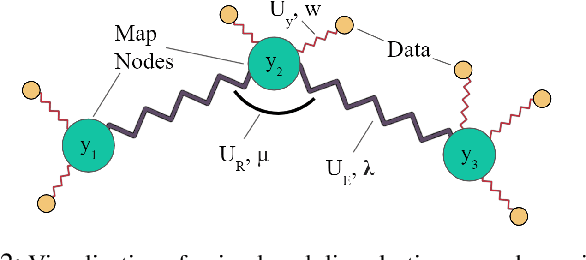

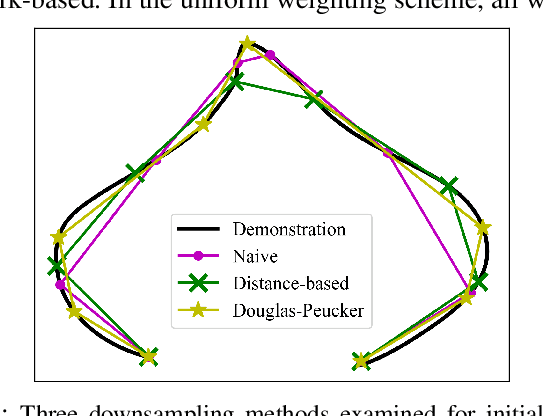

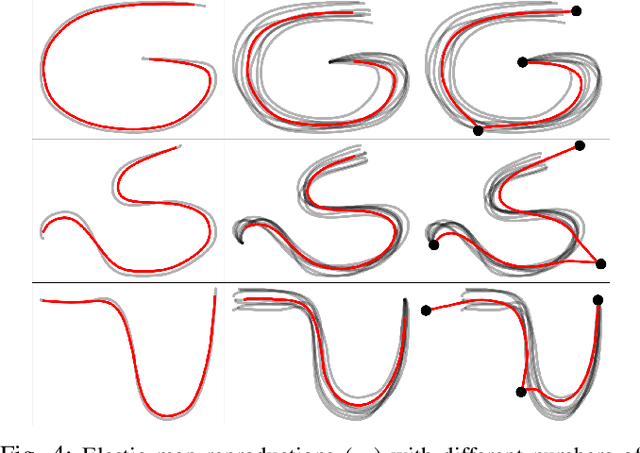

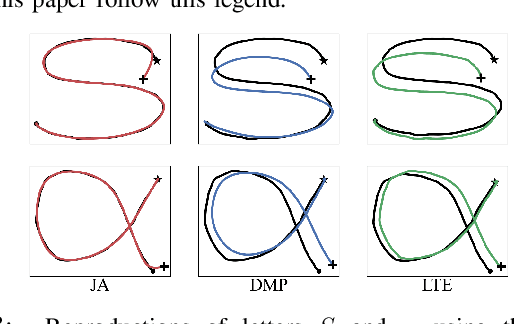

Robot Learning from Demonstration Using Elastic Maps

Aug 03, 2022

Abstract: Learning from Demonstration (LfD) is a popular method of reproducing and generalizing robot skills from human-provided demonstrations. In this paper, we propose a novel optimization-based LfD method that encodes demonstrations as elastic maps. An elastic map is a graph of nodes connected through a mesh of springs. We build a skill model by fitting an elastic map to the set of demonstrations. The formulated optimization problem in our approach includes three objectives with natural and physical interpretations. The main term penalizes the mean squared error in the Cartesian coordinate. The second term penalizes the non-equidistant distribution of points, resulting in the optimum total length of the trajectory. The third term rewards smoothness while penalizing nonlinearity. These quadratic objectives form a convex problem that can be solved efficiently with local optimizers. We examine nine methods for constructing and weighting the elastic maps and study their performance in robotic tasks. We also evaluate the proposed method in several simulated and real-world experiments using a UR5e manipulator arm, and compare it to other LfD approaches to demonstrate its benefits and flexibility across a variety of metrics.
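The three quadratic objectives can be illustrated with a simplified sketch. It is not the paper's formulation: it assumes a single demonstration, a chain-shaped map with one node per demonstration point, and hypothetical weights `stretch` and `bend`. Under those assumptions the energy is the squared fitting error plus `stretch` times the squared first differences plus `bend` times the squared second differences, and its minimizer solves one linear system.

```python
import numpy as np

def fit_chain(demo, stretch=1.0, bend=1.0):
    """Minimize ||x - demo||^2 + stretch*||D1 x||^2 + bend*||D2 x||^2 over node positions x."""
    n = len(demo)
    D1 = np.diff(np.eye(n), n=1, axis=0)  # first differences between neighboring nodes
    D2 = np.diff(np.eye(n), n=2, axis=0)  # second differences (discrete curvature)
    A = np.eye(n) + stretch * D1.T @ D1 + bend * D2.T @ D2
    return np.linalg.solve(A, demo)       # convex quadratic, so the stationary point is the minimum

def bending_energy(path):
    """Sum of squared second differences along the path."""
    return float(np.sum(np.diff(path, n=2, axis=0) ** 2))

# Hypothetical noisy 2-D demonstration of a half circle.
rng = np.random.default_rng(0)
t = np.linspace(0.0, np.pi, 50)
demo = np.column_stack([np.cos(t), np.sin(t)]) + 0.02 * rng.standard_normal((50, 2))

smooth = fit_chain(demo, stretch=0.0, bend=10.0)
print(bending_energy(demo), bending_energy(smooth))
```

Setting both weights to zero returns the demonstration unchanged, while raising `bend` trades fitting error for a smoother path; a larger `stretch` additionally pulls neighboring nodes together.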

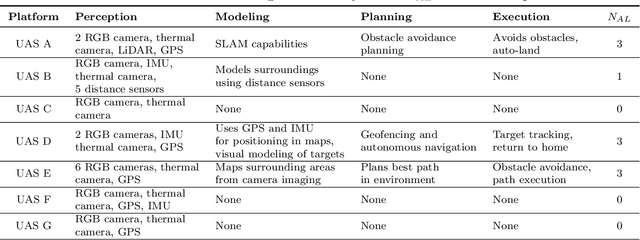

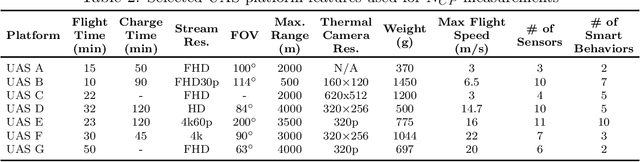

Methods for Combining and Representing Non-Contextual Autonomy Scores for Unmanned Aerial Systems

Nov 14, 2021

Abstract: Measuring an overall autonomy score for a robotic system requires combining a set of relevant aspects and features of the system that might be measured in different units, be qualitative, and/or be discordant. In this paper, we build upon an existing non-contextual autonomy framework that measures and combines the Autonomy Level and the Component Performance of a system as an overall autonomy score. We examine several methods of combining features, showing how some methods find different rankings of the same data, and we employ the weighted product method to resolve this issue. Furthermore, we introduce the non-contextual autonomy coordinate and represent the overall autonomy of a system with an autonomy distance. We apply our method to a set of seven Unmanned Aerial Systems (UAS) and obtain their absolute autonomy score as well as their relative score with respect to the best system.
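One way a combination method can rank the same data differently is through the choice of units, which the following sketch illustrates. The scores, weights, and function names are hypothetical and are not taken from the paper. It compares a weighted sum with the weighted product method, in which each system's score is the product of its feature values raised to the feature weights.

```python
import numpy as np

def weighted_product(scores, weights):
    """Weighted product score per row: prod_j scores[i, j] ** weights[j]."""
    return np.prod(np.asarray(scores, float) ** np.asarray(weights, float), axis=1)

def weighted_sum(scores, weights):
    """Weighted sum score per row: sum_j weights[j] * scores[i, j]."""
    return np.asarray(scores, float) @ np.asarray(weights, float)

def ranking(values):
    """Indices of the systems ordered from best (highest score) to worst."""
    return np.argsort(-values).tolist()

# Hypothetical data: 3 systems, 2 features where larger is better.
scores = np.array([[2.0, 30.0],
                   [3.0, 18.0],
                   [4.0, 10.0]])
weights = np.array([0.5, 0.5])

# The same data with the second feature expressed in a unit 100 times larger.
rescaled = scores * np.array([1.0, 0.01])

print(ranking(weighted_sum(scores, weights)), ranking(weighted_sum(rescaled, weights)))
print(ranking(weighted_product(scores, weights)), ranking(weighted_product(rescaled, weights)))
```

Rescaling a feature multiplies every weighted product score by the same constant, so the ordering is preserved, whereas the weighted sum ordering in this toy data reverses.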

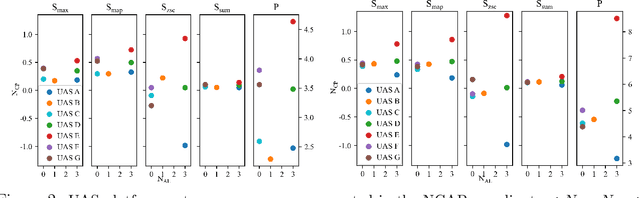

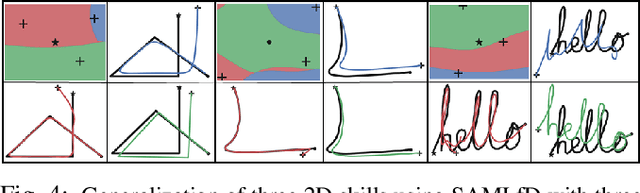

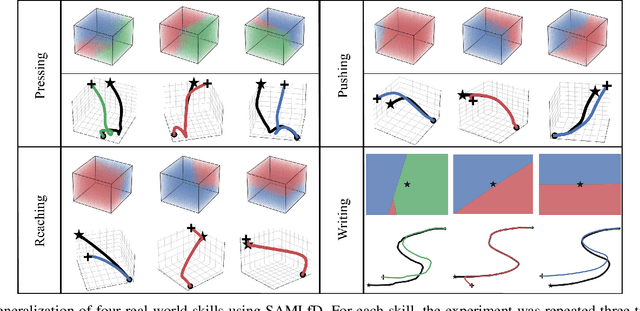

Similarity-Aware Skill Reproduction based on Multi-Representational Learning from Demonstration

Oct 28, 2021

Abstract: Learning from Demonstration (LfD) algorithms enable humans to teach new skills to robots through demonstrations. The learned skills can be robustly reproduced from identical or nearby boundary conditions (e.g., initial point). However, when generalizing a learned skill over boundary conditions with higher variance, the similarity of the reproductions changes from one boundary condition to another, and a single LfD representation cannot preserve a consistent similarity across a generalization region. We propose a novel similarity-aware framework including multiple LfD representations and a similarity metric that can improve skill generalization by finding reproductions with the highest similarity values for a given boundary condition. Given a demonstration of the skill, our framework constructs a similarity region around a point of interest (e.g., initial point) by evaluating individual LfD representations using the similarity metric. Any point within this region corresponds to a representation that reproduces the skill with the greatest similarity. We validate our multi-representational framework in three simulated and four sets of real-world experiments using a physical 6-DOF robot. We also evaluate 11 different similarity metrics and categorize them according to their biases in 286 simulated experiments.
