Bernd Ulmann

Learning Dynamics in Memristor-Based Equilibrium Propagation

Dec 13, 2025

Abstract: Memristor-based in-memory computing has emerged as a promising paradigm to overcome the constraints of the von Neumann bottleneck and the memory wall by enabling fully parallelisable and energy-efficient vector-matrix multiplications. We investigate the effect of nonlinear, memristor-driven weight updates on the convergence behaviour of neural networks trained with equilibrium propagation (EqProp). Six memristor models were characterised by their voltage-current hysteresis and integrated into the EBANA framework for evaluation on two benchmark classification tasks. EqProp can achieve robust convergence under nonlinear weight updates, provided that memristors exhibit a sufficiently wide resistance range of at least an order of magnitude.

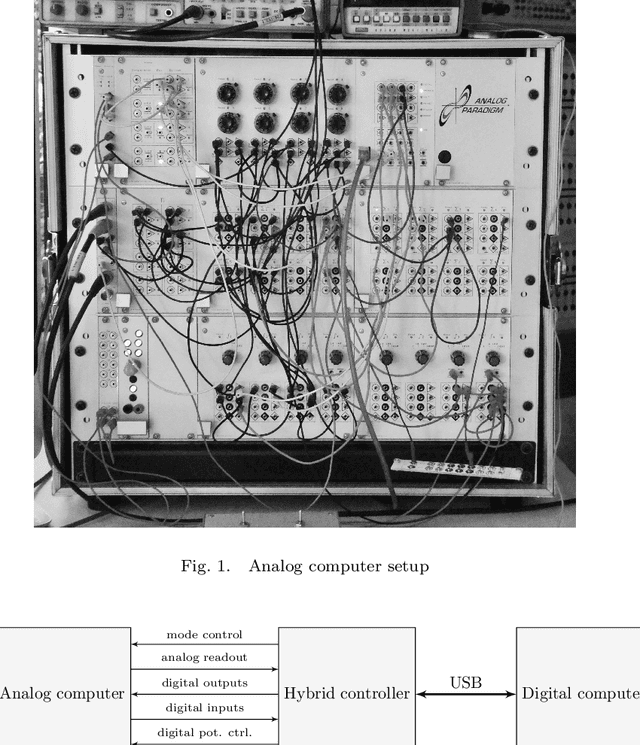

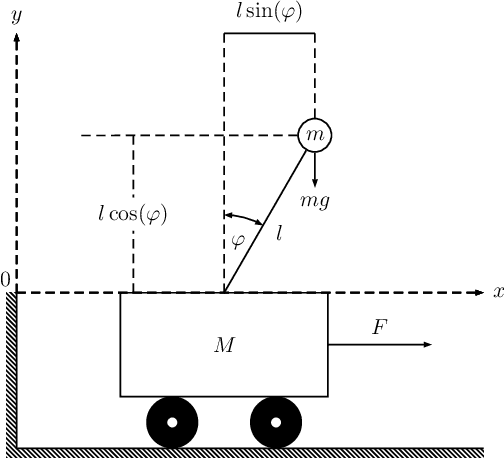

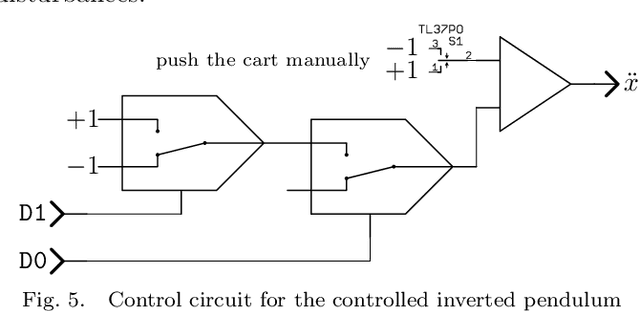

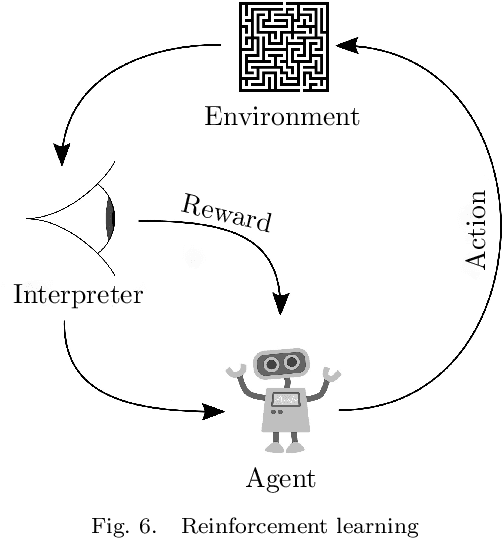

Hybrid computer approach to train a machine learning system

Mar 13, 2021

Abstract: This book chapter describes a novel approach to training machine learning systems by means of a hybrid computer setup, i.e., a digital computer tightly coupled with an analog computer. As an example, a reinforcement learning system is trained to balance an inverted pendulum that is simulated on an analog computer, thus demonstrating a solution to the major challenge of adequately simulating the environment for reinforcement learning.
