Ayman Khalafallah

Leveraging Foundational Models and Simple Fusion for Multi-modal Physiological Signal Analysis

Dec 17, 2025

Abstract:Physiological signals such as electrocardiograms (ECG) and electroencephalograms (EEG) provide complementary insights into human health and cognition, yet multi-modal integration is challenging due to limited multi-modal labeled data and modality-specific differences. In this work, we adapt the CBraMod encoder for large-scale self-supervised ECG pretraining, introducing a dual-masking strategy to capture intra- and inter-lead dependencies. To overcome the above challenges, we utilize a pre-trained CBraMod encoder for EEG and pre-train a symmetric ECG encoder, equipping each modality with a rich foundational representation. These representations are then fused via simple embedding concatenation, allowing the classification head to learn cross-modal interactions and enabling effective downstream learning despite limited multi-modal supervision. Evaluated on emotion recognition, our approach achieves near state-of-the-art performance, demonstrating that carefully designed physiological encoders, even with straightforward fusion, substantially improve downstream performance. These results highlight the potential of foundation-model approaches to harness the holistic nature of physiological signals, enabling scalable, label-efficient, and generalizable solutions for healthcare and affective computing.
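The fusion step described in this abstract (concatenating per-modality embeddings and passing them to a classification head) can be illustrated with a minimal sketch. This is not the authors' implementation: the function names `fuse_embeddings` and `linear_head`, the embedding sizes, and the weights are all hypothetical, and the encoders that would produce the embeddings are omitted.

```python
from typing import List, Sequence


def fuse_embeddings(eeg_emb: Sequence[float], ecg_emb: Sequence[float]) -> List[float]:
    """Fuse two modality embeddings by simple concatenation (hypothetical helper)."""
    return list(eeg_emb) + list(ecg_emb)


def linear_head(
    fused: Sequence[float],
    weights: Sequence[Sequence[float]],
    bias: Sequence[float],
) -> List[float]:
    """Return one logit per class from a single linear layer over the fused vector.

    Each row of `weights` spans both modalities, so the head can weight
    EEG and ECG features jointly. A real head would likely be deeper.
    """
    return [
        sum(w * x for w, x in zip(row, fused)) + b
        for row, b in zip(weights, bias)
    ]


# Toy embeddings standing in for encoder outputs (assumed values).
eeg = [1.0, 2.0]
ecg = [3.0]
fused = fuse_embeddings(eeg, ecg)

# Two-class head with made-up weights.
logits = linear_head(fused, weights=[[1.0, 0.0, 1.0], [0.0, 1.0, -1.0]], bias=[0.0, 0.5])
```

Because concatenation keeps the two embeddings side by side, any cross-modal interaction has to be learned by the layers that follow it, which is the design choice the abstract highlights.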

Clustering by Attention: Leveraging Prior Fitted Transformers for Data Partitioning

Jul 27, 2025

Abstract:Clustering is a core task in machine learning with wide-ranging applications in data mining and pattern recognition. However, its unsupervised nature makes it inherently challenging. Many existing clustering algorithms suffer from critical limitations: they often require careful parameter tuning, exhibit high computational complexity, lack interpretability, or yield suboptimal accuracy, especially when applied to large-scale datasets. In this paper, we introduce a novel clustering approach based on meta-learning. Our approach eliminates the need for parameter optimization while achieving accuracy that outperforms state-of-the-art clustering techniques. The proposed technique leverages a few pre-clustered samples to guide the clustering process for the entire dataset in a single forward pass. Specifically, we employ a pre-trained Prior-Data Fitted Transformer Network (PFN) to perform clustering. The algorithm computes attention between the pre-clustered samples and the unclustered samples, allowing it to infer cluster assignments for the entire dataset based on the learned relation. We theoretically and empirically demonstrate that, given just a few pre-clustered examples, the model can generalize to accurately cluster the rest of the dataset. Experiments on challenging benchmark datasets show that our approach can successfully cluster well-separated data without any pre-clustered samples, and significantly improves performance when a few clustered samples are provided. We show that our approach is superior to the state-of-the-art techniques. These results highlight the effectiveness and scalability of our approach, positioning it as a promising alternative to existing clustering techniques.
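The idea of attending from unclustered samples to a few pre-clustered ones can be illustrated with a toy sketch. This is not the paper's PFN: it swaps the learned transformer attention for a fixed softmax over negative squared distances, and the name `attention_assign` and the `temperature` parameter are assumptions made for illustration.

```python
import math
from typing import List, Sequence


def attention_assign(
    labeled: Sequence[Sequence[float]],
    labels: Sequence[int],
    queries: Sequence[Sequence[float]],
    temperature: float = 1.0,
) -> List[int]:
    """Assign each query to a cluster by attending over pre-clustered samples.

    Attention scores are negative squared Euclidean distances (a hand-written
    stand-in for learned attention). The softmax weights are summed per
    cluster, and the cluster with the largest total weight wins.
    """
    n_clusters = max(labels) + 1
    assignments = []
    for q in queries:
        scores = [
            -sum((a - b) ** 2 for a, b in zip(q, x)) / temperature
            for x in labeled
        ]
        top = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        votes = [0.0] * n_clusters
        for w, label in zip(weights, labels):
            votes[label] += w / total
        assignments.append(max(range(n_clusters), key=votes.__getitem__))
    return assignments


# Two pre-clustered points guide the assignment of two unclustered points.
pred = attention_assign(
    labeled=[(0.0, 0.0), (10.0, 10.0)],
    labels=[0, 1],
    queries=[(1.0, 0.0), (9.0, 11.0)],
)
```

All queries are assigned in one pass over the labeled set with no iterative optimization, which mirrors the single-forward-pass property the abstract describes, although the quality of a real PFN comes from its pre-trained attention rather than a fixed distance kernel.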

AlexU-AIC at Arabic Hate Speech 2022: Contrast to Classify

Jul 18, 2022

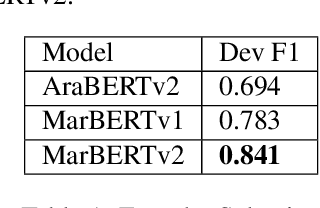

Abstract:Online presence on social media platforms such as Facebook and Twitter has become a daily habit for internet users. Despite the vast number of services the platforms offer, users suffer from cyber-bullying, which leads to mental abuse and may escalate to physical harm to individuals or targeted groups. In this paper, we present our submission to the Arabic Hate Speech 2022 Shared Task Workshop (OSACT5 2022) using the associated Arabic Twitter dataset. The shared task consists of three sub-tasks. Sub-task A focuses on detecting whether a tweet is offensive or not. For offensive tweets, sub-task B focuses on detecting whether the tweet is hate speech or not. Finally, for hate speech tweets, sub-task C focuses on detecting the fine-grained type of hate speech among six different classes. Transformer models have proven effective in classification tasks, but they tend to over-fit when fine-tuned on a small or imbalanced dataset. We overcome this limitation by investigating multiple training paradigms such as contrastive learning and multi-task learning, along with classification fine-tuning and an ensemble of our top 5 performers. Our proposed solution achieved macro-averaged F1 scores of 0.841, 0.817, and 0.476 in sub-tasks A, B, and C respectively.
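The results above are reported as macro-averaged F1, which averages per-class F1 scores with equal weight so that rare classes count as much as frequent ones. A minimal sketch of that metric follows; the function name `macro_f1` and the toy labels are illustrative and are not taken from the shared task's evaluation script.

```python
from typing import Sequence


def macro_f1(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Unweighted mean of per-class F1 over every class seen in either list."""
    classes = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in classes:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never occurs.
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Toy binary example: one class-0 tweet is misclassified as class 1.
score = macro_f1([0, 0, 1, 1], [0, 1, 1, 1])
```

On an imbalanced dataset a classifier that ignores a minority class gets an F1 of 0 for that class, which pulls the macro average down sharply; this is one reason the metric suits fine-grained tasks such as sub-task C.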
