Axel-Cyrille Ngonga Ngomo

Data Science Group, Paderborn University, Germany

Prediction of concept lengths for fast concept learning in description logics

Jul 10, 2021

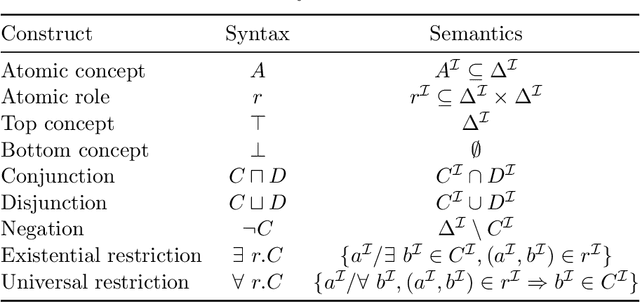

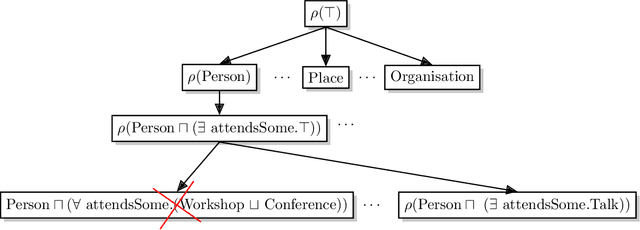

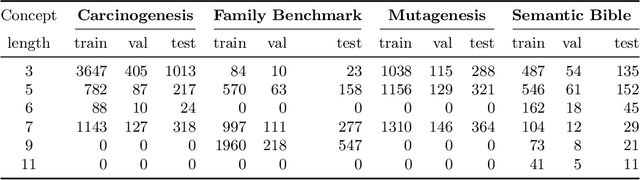

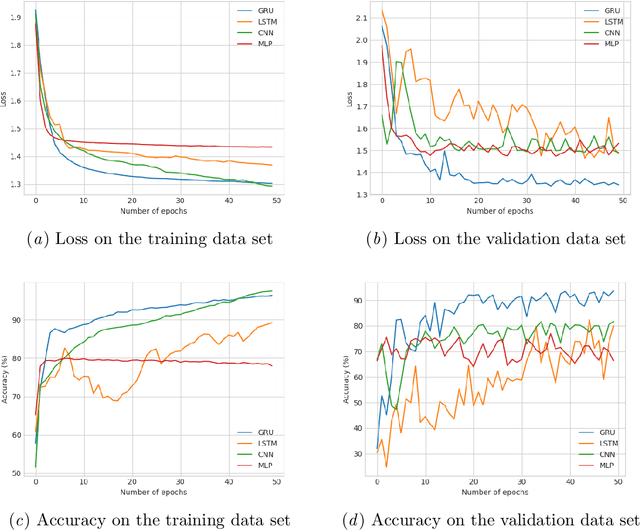

Abstract: Concept learning approaches based on refinement operators explore partially ordered solution spaces to compute concepts, which are used as binary classification models for individuals. However, the refinement trees spanned by these approaches can easily grow to millions of nodes for complex learning problems. As a result, refinement-based approaches often fail to detect optimal concepts efficiently. In this paper, we propose a supervised machine learning approach for learning concept lengths, which allows predicting the length of the target concept and therefore facilitates the reduction of the search space during concept learning. To achieve this goal, we compare four neural architectures and evaluate them on four benchmark knowledge graphs: Carcinogenesis, Mutagenesis, Semantic Bible, and Family Benchmark. Our evaluation results suggest that recurrent neural network architectures perform best at concept length prediction with an F-measure of up to 92%. We show that integrating our concept length predictor into the CELOE (Class Expression Learner for Ontology Engineering) algorithm improves CELOE's runtime by a factor of up to 13.4 without any significant changes to the quality of the results it generates. For reproducibility, we provide our implementation in the public GitHub repository at https://github.com/ConceptLengthLearner/ReproducibilityRepo.
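
To make the idea of a length bound concrete, the following minimal sketch computes the length of a class expression and uses a predicted length to discard overly long candidates. It assumes a length definition commonly used in the refinement-operator literature (atomic concepts count 1, negation adds 1, conjunction and disjunction add 1, existential and universal restrictions add 2); the abstract does not state the exact definition, and the tuple encoding and the names `concept_length` and `prune_by_length` are hypothetical.

```python
# Class expressions are encoded as nested tuples (an illustrative encoding):
#   "Person"                         atomic concept
#   ("not", C)                       negation
#   ("and", C, D) / ("or", C, D)     conjunction / disjunction
#   ("exists", role, C) / ("forall", role, C)   restrictions

def concept_length(expr):
    """Length of a class expression under the assumed definition."""
    if isinstance(expr, str):
        return 1
    op = expr[0]
    if op == "not":
        return 1 + concept_length(expr[1])
    if op in ("and", "or"):
        return 1 + concept_length(expr[1]) + concept_length(expr[2])
    if op in ("exists", "forall"):
        # one symbol for the quantifier, one for the role name
        return 2 + concept_length(expr[2])
    raise ValueError(f"unknown constructor: {op!r}")


def prune_by_length(candidates, predicted_length):
    """Keep only candidates no longer than the predicted target length."""
    return [c for c in candidates if concept_length(c) <= predicted_length]


candidates = [
    "Person",
    ("and", "Person", ("exists", "hasChild", "Female")),
    ("or", ("not", "Male"), ("forall", "hasChild", ("and", "Person", "Female"))),
]
kept = prune_by_length(candidates, predicted_length=5)
```

With a predicted length of 5, the first two candidates survive and the third (length 8) is dropped, which illustrates how a length predictor can shrink the portion of the refinement tree that needs to be explored.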

DRILL -- Deep Reinforcement Learning for Refinement Operators in $\mathcal{ALC}$

Jun 29, 2021

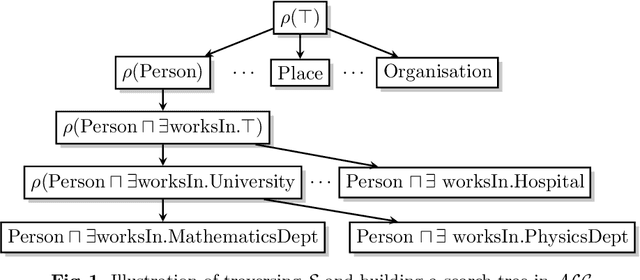

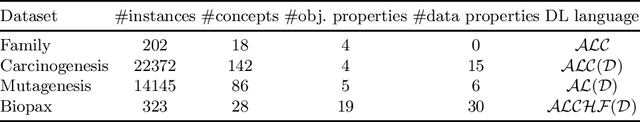

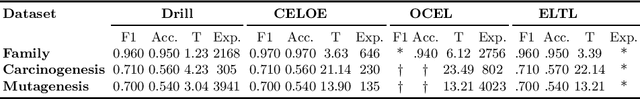

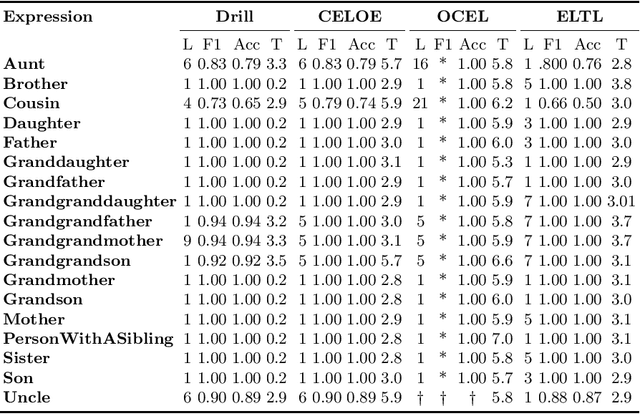

Abstract: Approaches based on refinement operators have been successfully applied to class expression learning on RDF knowledge graphs. These approaches often need to explore a large number of concepts to find adequate hypotheses. This need arguably stems from current approaches relying on myopic heuristic functions to guide their search through an infinite concept space. In turn, deep reinforcement learning provides effective means to address myopia by estimating the discounted cumulative future reward that each state promises. In this work, we leverage deep reinforcement learning to accelerate the learning of concepts in $\mathcal{ALC}$ by proposing DRILL -- a novel class expression learning approach that uses a convolutional deep Q-learning model to steer its search. By virtue of its architecture, DRILL is able to compute the expected discounted cumulative future reward of more than $10^3$ class expressions in a second on standard hardware. We evaluate DRILL on four benchmark datasets against state-of-the-art approaches. Our results suggest that DRILL converges to goal states at least 2.7$\times$ faster than state-of-the-art models on all benchmark datasets. We provide an open-source implementation of our approach, including training and evaluation scripts as well as pre-trained models.
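
The contrast between a myopic heuristic and a discounted future reward can be illustrated with a small sketch. It is not DRILL's model: the reward sequences, the discount factor, and the function name `discounted_return` are illustrative assumptions, and a deep Q-network would estimate such returns rather than compute them from known rewards.

```python
def discounted_return(rewards, gamma):
    """Sum of gamma**t * rewards[t], accumulated from the last step backwards."""
    total = 0.0
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# Two hypothetical refinement paths, each a sequence of per-step rewards.
path_a = [0.5, 0.0, 0.0]   # looks best after one step
path_b = [0.1, 0.0, 1.0]   # reaches a goal state two steps later

gamma = 0.9
myopic_choice = "a" if path_a[0] >= path_b[0] else "b"
farsighted_choice = (
    "a" if discounted_return(path_a, gamma) >= discounted_return(path_b, gamma) else "b"
)
```

A heuristic that only looks at the immediate reward prefers path `a`, whereas ranking by discounted return prefers path `b`, which is the kind of myopia the abstract refers to.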

Convolutional Hypercomplex Embeddings for Link Prediction

Jun 29, 2021

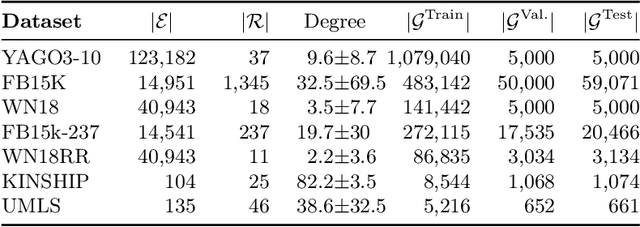

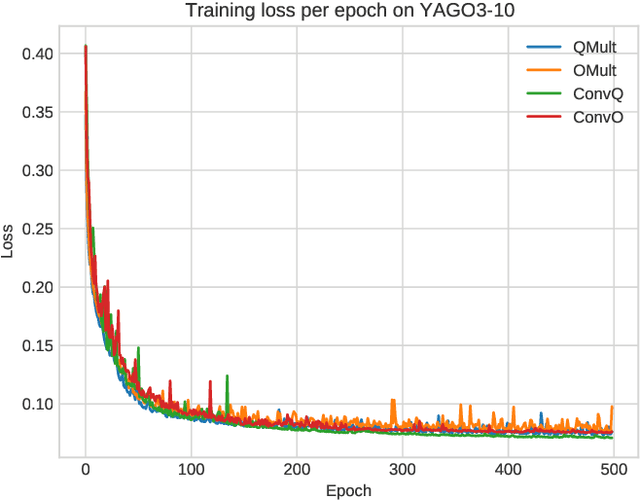

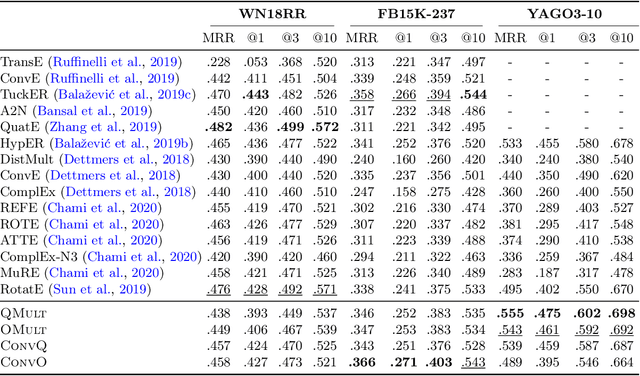

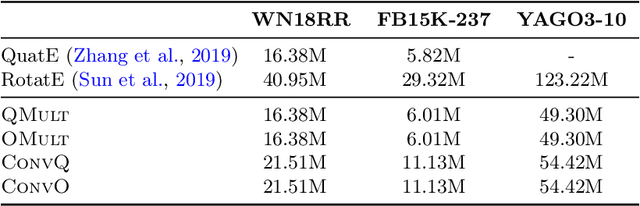

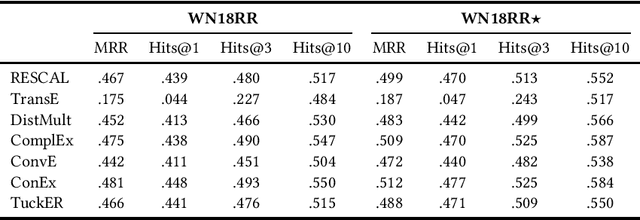

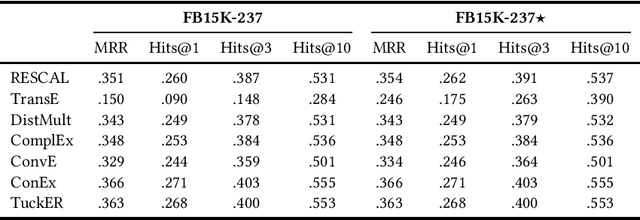

Abstract: Knowledge graph embedding research has mainly focused on the two smallest normed division algebras, $\mathbb{R}$ and $\mathbb{C}$. Recent results suggest that trilinear products of quaternion-valued embeddings can be a more effective means to tackle link prediction. In addition, models based on convolutions on real-valued embeddings often yield state-of-the-art results for link prediction. In this paper, we investigate a composition of convolution operations with hypercomplex multiplications. We propose the four approaches QMult, OMult, ConvQ and ConvO to tackle the link prediction problem. QMult and OMult can be considered as quaternion and octonion extensions of previous state-of-the-art approaches, including DistMult and ComplEx. ConvQ and ConvO build upon QMult and OMult by including convolution operations in a way inspired by the residual learning framework. We evaluated our approaches on seven link prediction datasets including WN18RR, FB15K-237 and YAGO3-10. Experimental results suggest that the benefits of learning hypercomplex-valued vector representations become more apparent as the size and complexity of the knowledge graph grow. ConvO outperforms state-of-the-art approaches on FB15K-237 in MRR, Hit@1 and Hit@3, while QMult, OMult, ConvQ and ConvO outperform state-of-the-art approaches on YAGO3-10 in all metrics. Results also suggest that link prediction performance can be further improved via prediction averaging. To foster reproducible research, we provide an open-source implementation of our approaches, including training and evaluation scripts as well as pretrained models.
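
As a rough illustration of what a quaternion-valued trilinear product looks like, the sketch below implements the Hamilton product and uses it to score a triple. The scoring function (Hamilton product of head and relation, followed by an inner product with the tail, summed over embedding dimensions) is an assumption made for illustration; the abstract does not spell out the exact formulation of QMult, and the names `hamilton` and `score` are hypothetical.

```python
# A quaternion a + b*i + c*j + d*k is stored as the tuple (a, b, c, d).

def hamilton(p, q):
    """Hamilton product of two quaternions (non-commutative)."""
    a1, b1, c1, d1 = p
    a2, b2, c2, d2 = q
    return (
        a1 * a2 - b1 * b2 - c1 * c2 - d1 * d2,
        a1 * b2 + b1 * a2 + c1 * d2 - d1 * c2,
        a1 * c2 - b1 * d2 + c1 * a2 + d1 * b2,
        a1 * d2 + b1 * c2 - c1 * b2 + d1 * a2,
    )


def score(head, relation, tail):
    """Sum over dimensions of <head_k (x) relation_k, tail_k> (assumed form)."""
    total = 0.0
    for h, r, t in zip(head, relation, tail):
        rotated = hamilton(h, r)
        total += sum(x * y for x, y in zip(rotated, t))
    return total


# One-dimensional toy embeddings: i * j = k, so the tail k matches exactly.
head = [(0.0, 1.0, 0.0, 0.0)]      # i
relation = [(0.0, 0.0, 1.0, 0.0)]  # j
tail = [(0.0, 0.0, 0.0, 1.0)]      # k
toy_score = score(head, relation, tail)
```

Because the Hamilton product is not commutative, swapping head and relation changes the result, which is one reason hypercomplex embeddings can model asymmetric relations.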

Out-of-Vocabulary Entities in Link Prediction

May 26, 2021

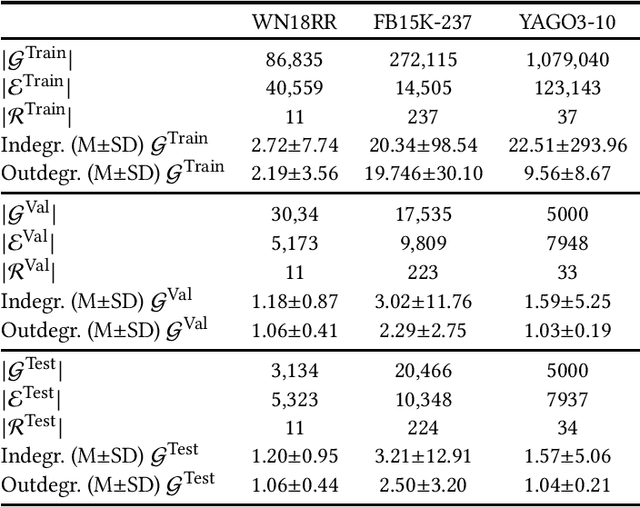

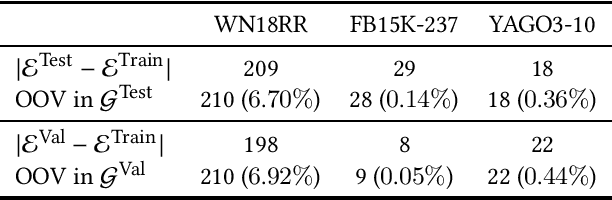

Abstract: Knowledge graph embedding techniques are key to making knowledge graphs amenable to the plethora of machine learning approaches based on vector representations. Link prediction is often used as a proxy to evaluate the quality of these embeddings. Given that the creation of benchmarks for link prediction is a time-consuming endeavor, most work on the subject matter uses only a few benchmarks. As benchmarks are crucial for the fair comparison of algorithms, ensuring their quality is tantamount to providing a solid ground for developing better solutions to link prediction and ipso facto embedding knowledge graphs. Initial studies of benchmarks pointed to limitations pertaining to information leaking from the development to the test fragments of some benchmark datasets. We spotted a further common limitation of three of the benchmarks commonly used for evaluating link prediction approaches: out-of-vocabulary entities in the test and validation sets. We provide an implementation of an approach for spotting and removing such entities and provide corrected versions of the datasets WN18RR, FB15K-237, and YAGO3-10. Our experiments on the corrected versions of WN18RR, FB15K-237, and YAGO3-10 suggest that the measured performance of state-of-the-art approaches is altered significantly with p-values <1%, <1.4%, and <1%, respectively. Overall, state-of-the-art approaches gain on average $3.29 \pm 0.24\%$ (absolute) in all metrics on WN18RR. This means that some of the conclusions reached in previous works might need to be revisited. We provide an open-source implementation of our experiments and corrected datasets at https://github.com/dice-group/OOV-In-Link-Prediction.
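
Spotting and removing out-of-vocabulary entities can be sketched in a few lines. The following is a minimal illustration, not the authors' implementation: it assumes that an entity counts as out-of-vocabulary when it never occurs in the training split, and the triples and the names `entities_of` and `remove_oov` are made up for the example.

```python
def entities_of(triples):
    """Collect every entity that occurs as head or tail of a triple."""
    seen = set()
    for head, _relation, tail in triples:
        seen.add(head)
        seen.add(tail)
    return seen


def remove_oov(train, split):
    """Drop triples from `split` that mention an entity unseen in `train`."""
    known = entities_of(train)
    return [(h, r, t) for h, r, t in split if h in known and t in known]


train = [("a", "likes", "b"), ("b", "knows", "c")]
test = [
    ("a", "knows", "c"),   # both entities seen during training
    ("a", "likes", "d"),   # "d" never occurs in train
    ("e", "knows", "b"),   # "e" never occurs in train
]
clean_test = remove_oov(train, test)
```

Only the first test triple survives. The same function would be applied to the validation split, since an embedding model has no learned representation for an entity that is absent from the training data.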

An Empirical Evaluation of Cost-based Federated SPARQL Query Processing Engines

Apr 02, 2021

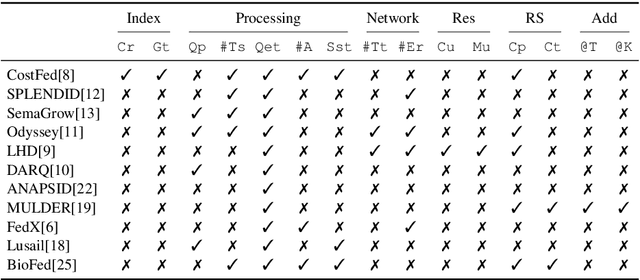

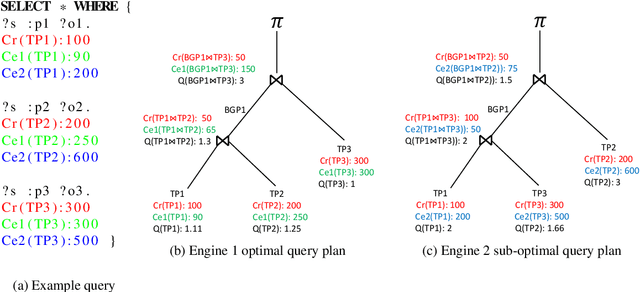

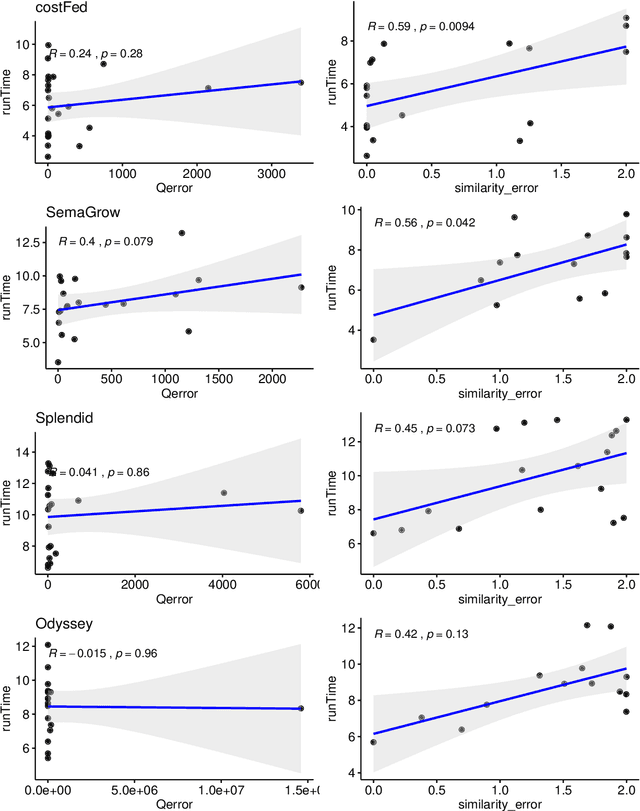

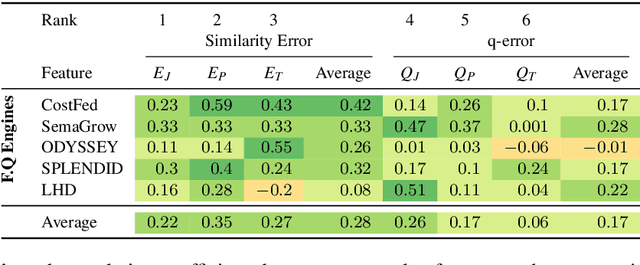

Abstract: Finding a good query plan is key to the optimization of query runtime. This holds in particular for cost-based federation engines, which make use of cardinality estimations to achieve this goal. A number of studies compare SPARQL federation engines across different performance metrics, including query runtime, result set completeness and correctness, number of sources selected and number of requests sent. Albeit informative, these metrics are generic and unable to quantify and evaluate the accuracy of the cardinality estimators of cost-based federation engines. To thoroughly evaluate cost-based federation engines, the effect of cardinality estimation errors on the overall query runtime performance must be measured. In this paper, we address this challenge by presenting novel evaluation metrics targeted at a fine-grained benchmarking of cost-based federated SPARQL query engines. We evaluate five cost-based federated SPARQL query engines on LargeRDFBench queries using existing as well as novel evaluation metrics. Our results provide a detailed analysis of the experimental outcomes that reveals novel insights, useful for the development of future cost-based federated SPARQL query processing engines.

* 24 pages, Semantic Web, 2020, #article

Knowledge Graph Question Answering using Graph-Pattern Isomorphism

Mar 11, 2021

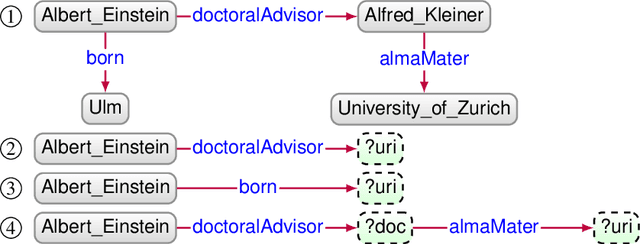

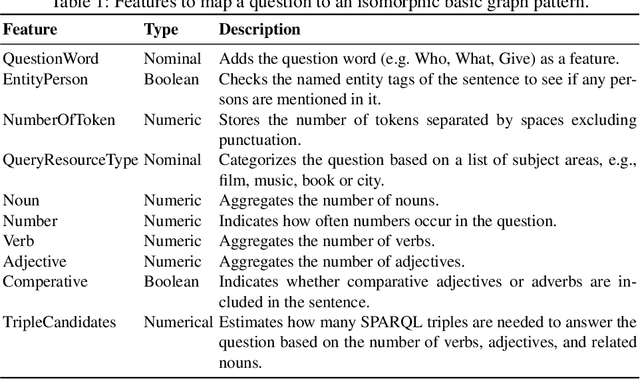

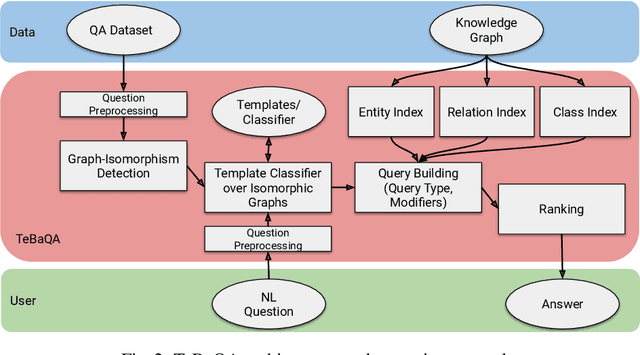

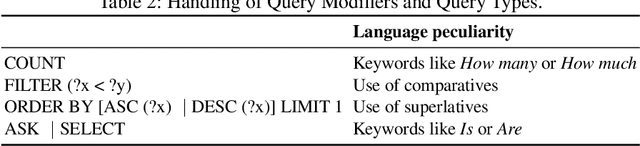

Abstract: Knowledge Graph Question Answering (KGQA) systems are based either on machine learning algorithms, which require thousands of question-answer pairs as training examples, or on natural language processing pipelines that need module fine-tuning. In this paper, we present a novel QA approach, dubbed TeBaQA. Our approach learns to answer questions based on graph isomorphisms from basic graph patterns of SPARQL queries. Learning basic graph patterns is efficient due to the small number of possible patterns. This novel paradigm reduces the amount of training data necessary to achieve state-of-the-art performance. TeBaQA also speeds up the domain adaptation process by transforming the QA system development task into a much smaller and easier data compilation task. In our evaluation, TeBaQA achieves state-of-the-art performance on QALD-8 and delivers comparable results on QALD-9 and LC-QuAD v1. Additionally, we performed a fine-grained evaluation on complex queries that deal with aggregation and superlative questions as well as an ablation study, highlighting future research challenges.

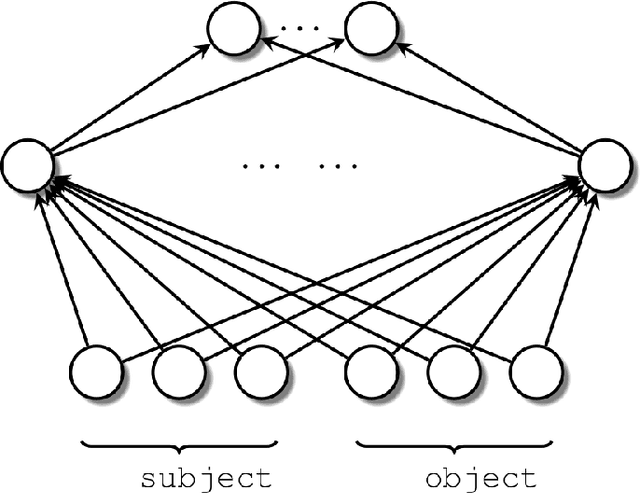

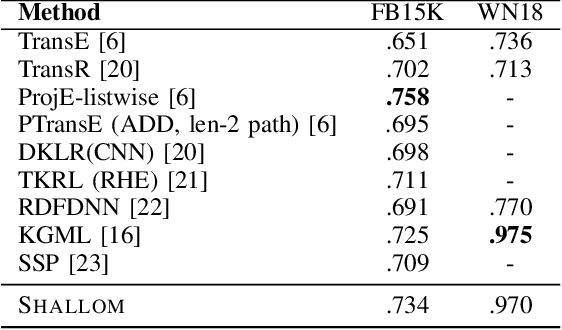

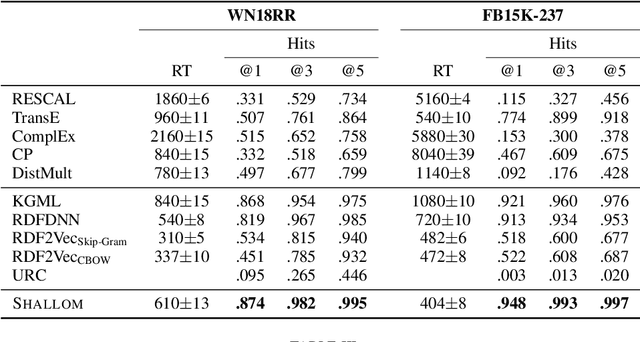

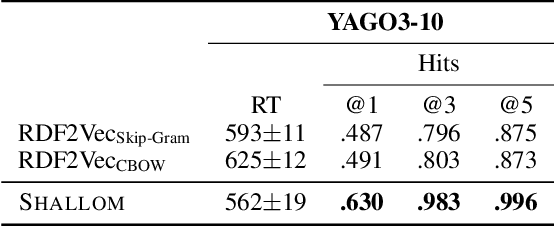

A shallow neural model for relation prediction

Jan 22, 2021

Abstract: Knowledge graph completion refers to predicting missing triples. Most approaches achieve this goal by predicting entities, given an entity and a relation. We predict missing triples via relation prediction. To this end, we frame the relation prediction problem as a multi-label classification problem and propose a shallow neural model (SHALLOM) that accurately infers missing relations from entities. SHALLOM is analogous to C-BOW as both approaches predict a central token (p) given surrounding tokens ((s,o)). Our experiments indicate that SHALLOM outperforms state-of-the-art approaches on FB15K-237 and WN18RR with margins of up to $3\%$ and $8\%$ (absolute), respectively, while requiring a maximum training time of 8 minutes on these datasets. We ensure the reproducibility of our results by providing an open-source implementation including training and evaluation scripts at https://github.com/dice-group/Shallom.
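
Framing relation prediction as multi-label classification means that, for a pair (s, o), the model outputs one independent probability per relation. The sketch below shows an untrained forward pass of a shallow network of that kind. The layer sizes, the single hidden ReLU layer, the omission of bias terms, and all names are assumptions for illustration and are not taken from the SHALLOM implementation.

```python
import math
import random


def make_matrix(rows, cols, rng):
    """Small random weight matrix, one row per output unit."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def affine(vector, weights):
    """Matrix-vector product (bias terms omitted for brevity)."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]


def relation_scores(s_id, o_id, entity_emb, w_hidden, w_out):
    """One sigmoid score per relation for the entity pair (s, o)."""
    features = entity_emb[s_id] + entity_emb[o_id]  # list concatenation
    hidden = [max(0.0, v) for v in affine(features, w_hidden)]  # ReLU
    return [1.0 / (1.0 + math.exp(-v)) for v in affine(hidden, w_out)]


rng = random.Random(0)
num_entities, num_relations, dim, hidden_dim = 5, 3, 4, 6
entity_emb = make_matrix(num_entities, dim, rng)
w_hidden = make_matrix(hidden_dim, 2 * dim, rng)
w_out = make_matrix(num_relations, hidden_dim, rng)

scores = relation_scores(0, 1, entity_emb, w_hidden, w_out)
```

Because each output passes through its own sigmoid rather than a shared softmax, several relations can receive a high score for the same entity pair, which is what distinguishes the multi-label setting from single-label classification. Training would typically minimize a binary cross-entropy loss per relation.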

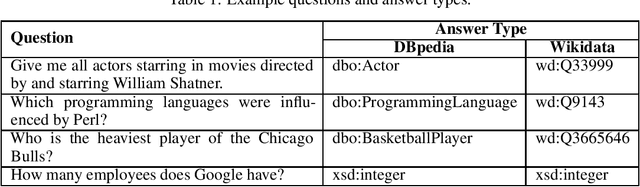

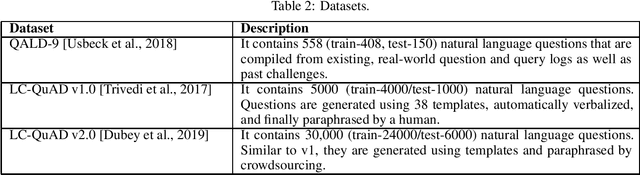

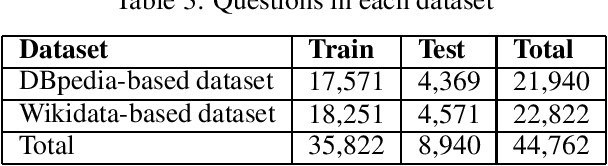

SeMantic AnsweR Type prediction task at ISWC 2020 Semantic Web Challenge

Dec 01, 2020

Abstract: Each year the International Semantic Web Conference accepts a set of Semantic Web Challenges to establish competitions that will advance state-of-the-art solutions in a given problem domain. The SeMantic AnsweR Type prediction task (SMART) was part of the ISWC 2020 challenges. Question type and answer type prediction can play a key role in knowledge base question answering systems, providing insights that are helpful to generate correct queries or rank the answer candidates. More concretely, given a question in natural language, the task of the SMART challenge is to predict the answer type using a target ontology (e.g., DBpedia or Wikidata).

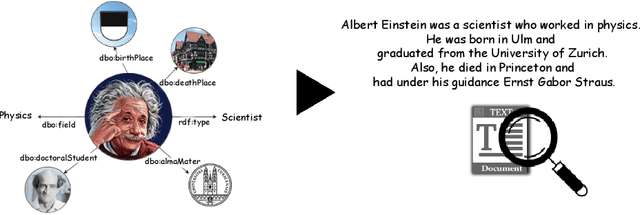

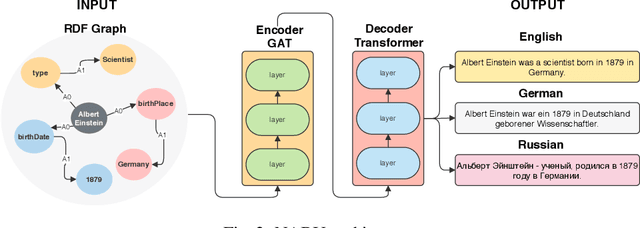

NABU - Multilingual Graph-based Neural RDF Verbalizer

Sep 21, 2020

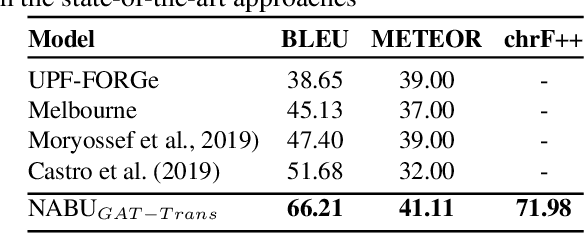

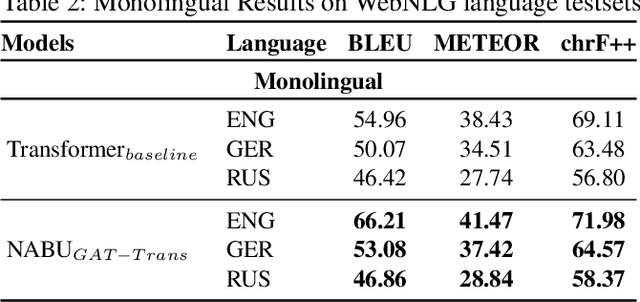

Abstract: The RDF-to-text task has recently gained substantial attention due to the continuous growth of Linked Data. In contrast to traditional pipeline models, recent studies have focused on neural models, which are now able to convert a set of RDF triples into text in an end-to-end style with promising results. However, English is the only language widely targeted. We address this research gap by presenting NABU, a multilingual graph-based neural model that verbalizes RDF data to German, Russian, and English. NABU is based on an encoder-decoder architecture, with an encoder inspired by Graph Attention Networks and a Transformer as decoder. Our approach relies on the fact that knowledge graphs are language-agnostic and can hence be used to generate multilingual text. We evaluate NABU in monolingual and multilingual settings on standard benchmarking WebNLG datasets. Our results show that NABU outperforms state-of-the-art approaches on English with 66.21 BLEU, and achieves consistent results across all languages in the multilingual scenario with 56.04 BLEU.

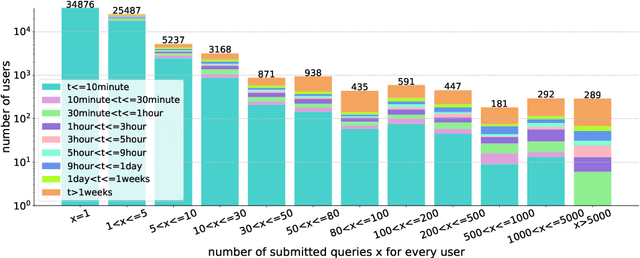

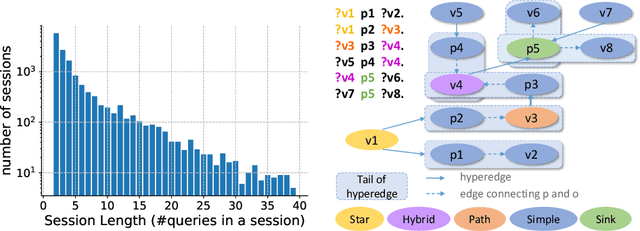

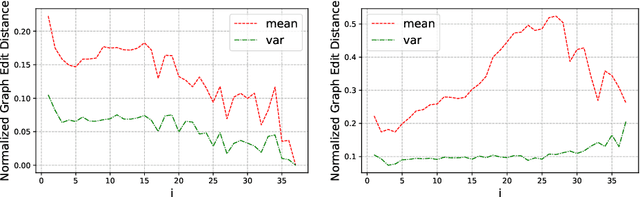

Revealing Secrets in SPARQL Session Level

Sep 13, 2020

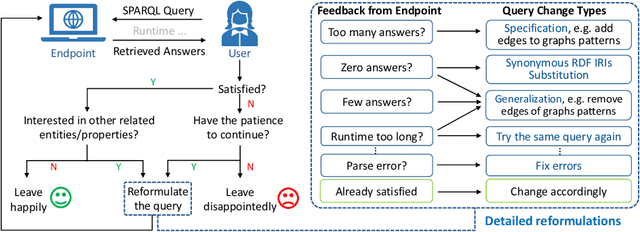

Abstract: Based on Semantic Web technologies, knowledge graphs help users to discover information of interest by using live SPARQL services. Answer-seekers often examine intermediate results iteratively and modify SPARQL queries repeatedly in a search session. In this context, understanding user behaviors is critical for effective intention prediction and query optimization. However, these behaviors have not yet been researched systematically at the SPARQL session level. This paper reveals the secrets of session-level user search behaviors by conducting a comprehensive investigation over massive real-world SPARQL query logs. In particular, we thoroughly assess query changes made by users w.r.t. structural and data-driven features of SPARQL queries. To illustrate the potential of our findings, we employ a proof-of-concept model to predict user intentions, i.e., future directions of the given session, and give reformulation suggestions based on the predicted intention. We hope the results presented here will help to devise efficient SPARQL caching, auto-completion, query suggestion, approximation, and relaxation techniques in the future.
