Avik W. Ghosh

Adaptive Synaptogenesis Implemented on a Nanomagnetic Platform

Apr 14, 2025

Abstract: The human brain functions very differently from artificial neural networks (ANNs) and possesses unique features that are absent in ANNs. An important one among them is "adaptive synaptogenesis", which modifies synaptic weights when needed to avoid catastrophic forgetting and promote lifelong learning. The key aspect of this algorithm is supervised Hebbian learning: weight modifications in the neocortex, driven by temporal coincidence, are accepted or vetoed by an additional control mechanism from the hippocampus during the training cycle, making distant synaptic connections highly sparse and strategic. In this work, we discuss various algorithmic aspects of adaptive synaptogenesis tailored to edge computing, demonstrate its function using simulations, and design nanomagnetic hardware accelerators for specific functions of synaptogenesis.
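The accept/veto idea behind supervised Hebbian learning can be illustrated with a minimal NumPy sketch. It is not the paper's algorithm: it assumes binary pre- and postsynaptic activity, a clipped additive weight update, and a per-neuron `gate` signal that stands in (hypothetically) for the hippocampal control mechanism. The function name `gated_hebbian_step` and its parameters `lr` and `w_max` are illustrative only.

```python
import numpy as np

def gated_hebbian_step(w, pre, post, gate, lr=0.1, w_max=1.0):
    """One supervised Hebbian update (illustrative sketch, not the paper's rule).

    w    : (n_post, n_pre) synaptic weight matrix
    pre  : (n_pre,) binary presynaptic activity at this time step
    post : (n_post,) binary postsynaptic activity at this time step
    gate : (n_post,) hypothetical control signal; 1 accepts and 0 vetoes
           the proposed change for that postsynaptic neuron
    """
    # Hebbian proposal: potentiate only where pre and post fire together
    proposal = lr * np.outer(post, pre)
    # Supervisory veto: rows whose gate is 0 keep their old weights
    accepted = gate[:, None] * proposal
    # Keep weights in an assumed bounded range [0, w_max]
    return np.clip(w + accepted, 0.0, w_max)

w0 = np.zeros((2, 3))
pre = np.array([1, 0, 1])
post = np.array([1, 1])
gate = np.array([1, 0])  # accept neuron 0's change, veto neuron 1's
w1 = gated_hebbian_step(w0, pre, post, gate)
print(w1)
```

Both postsynaptic neurons see the same coincidences here, but only the accepted row changes, so the resulting connectivity stays sparse; that is the qualitative effect the abstract attributes to the veto mechanism.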

Building Reservoir Computing Hardware Using Low Energy-Barrier Magnetics

Jul 06, 2020

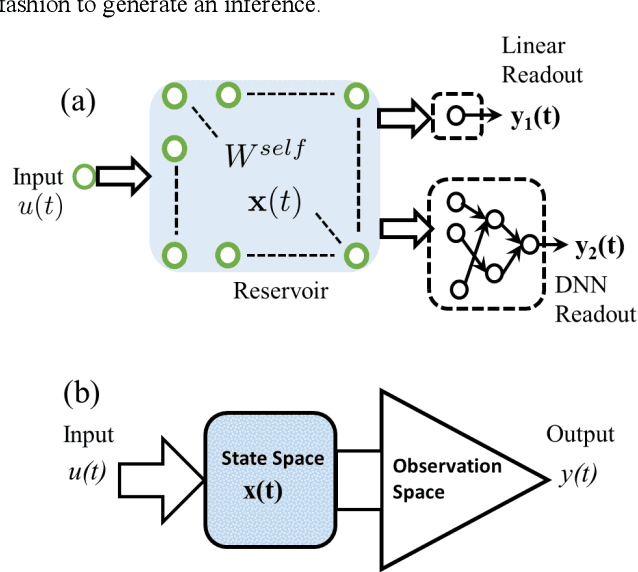

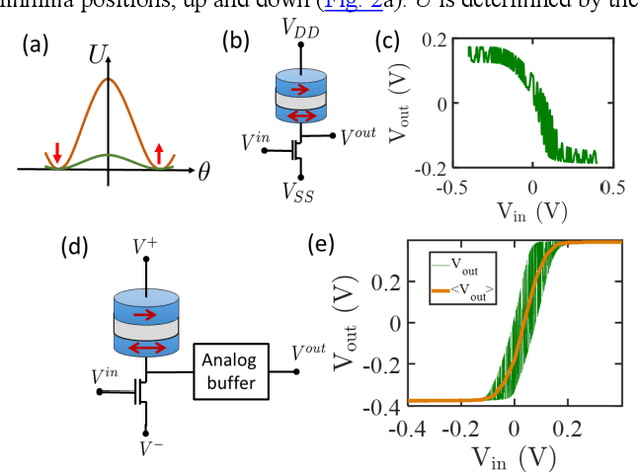

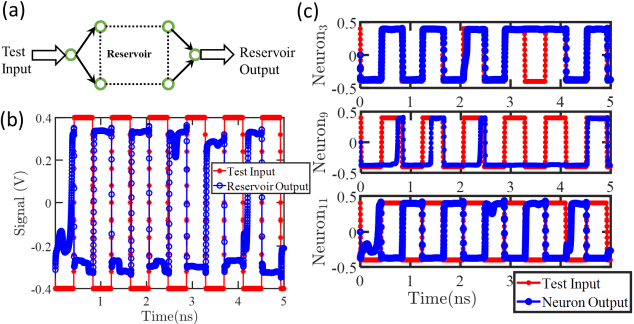

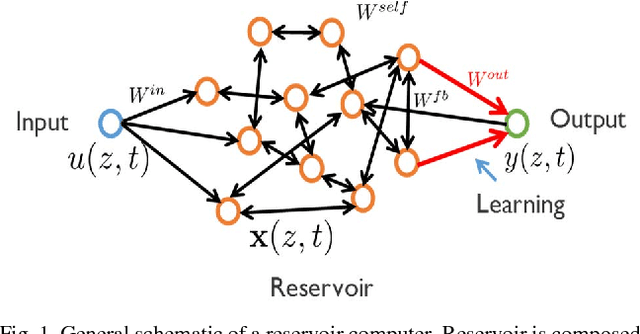

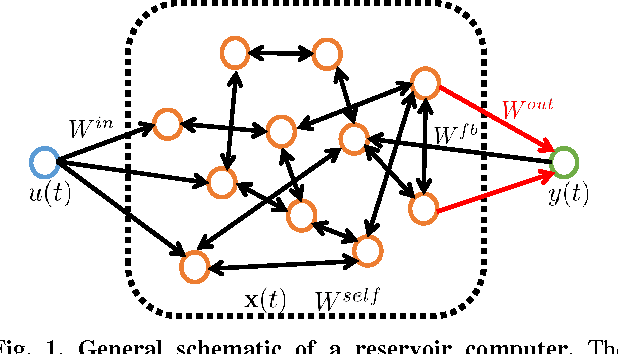

Abstract: Biologically inspired recurrent neural networks, such as reservoir computers, are of interest for designing spatio-temporal data processors in hardware because of their simple learning scheme and deep connections to Kalman filters. In this work, we use in-depth simulation studies to show how hardware reservoir computers can be constructed from an analog stochastic neuron cell built from a few transistors and a magnetic tunnel junction based on a low-energy-barrier magnet. This cell provides a physical embodiment of the mathematical model of reservoir computers. Reservoir computers built from such devices may enable compact, energy-efficient signal processors for standalone or in-situ machine cognition in edge devices.
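The mathematical model that such a neuron cell would embody can be sketched as a leaky echo-state reservoir. This is a hedged illustration, not the device model from the paper: it assumes that each analog stochastic neuron behaves like `tanh(a + noise * xi)` with Gaussian `xi`, a simple stand-in for the fluctuating output of a low-energy-barrier magnet. The names `make_reservoir`, `run_reservoir`, `leak`, `noise`, and `spectral_radius` are hypothetical.

```python
import numpy as np

def make_reservoir(n, n_in, spectral_radius=0.9, seed=0):
    """Random recurrent and input weights for an echo-state reservoir."""
    rng = np.random.default_rng(seed)
    w = rng.normal(size=(n, n))
    # Rescale so the largest eigenvalue magnitude equals spectral_radius
    w *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w)))
    w_in = rng.uniform(-1.0, 1.0, size=(n, n_in))
    return w, w_in

def run_reservoir(w, w_in, inputs, leak=0.3, noise=0.1, seed=1):
    """Leaky reservoir whose neurons are noisy tanh units.

    Each neuron computes tanh(a + noise * xi) with xi ~ N(0, 1); this noise
    model is an assumption standing in for a stochastic magnetic neuron.
    """
    rng = np.random.default_rng(seed)
    x = np.zeros(w.shape[0])
    states = []
    for u in inputs:
        a = w @ x + w_in @ np.atleast_1d(u)
        y = np.tanh(a + noise * rng.standard_normal(a.shape))
        # Leaky integration: a convex mix of old state and new output
        x = (1.0 - leak) * x + leak * y
        states.append(x.copy())
    return np.array(states)

w, w_in = make_reservoir(n=50, n_in=1)
u = np.sin(np.linspace(0, 8 * np.pi, 400))
X = run_reservoir(w, w_in, u)
print(X.shape)
```

Only a linear readout on the collected states `X` would be trained, which is the "simple learning scheme" that makes reservoirs attractive for hardware: the recurrent part, here the noisy neurons, never needs to be tuned weight by weight.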

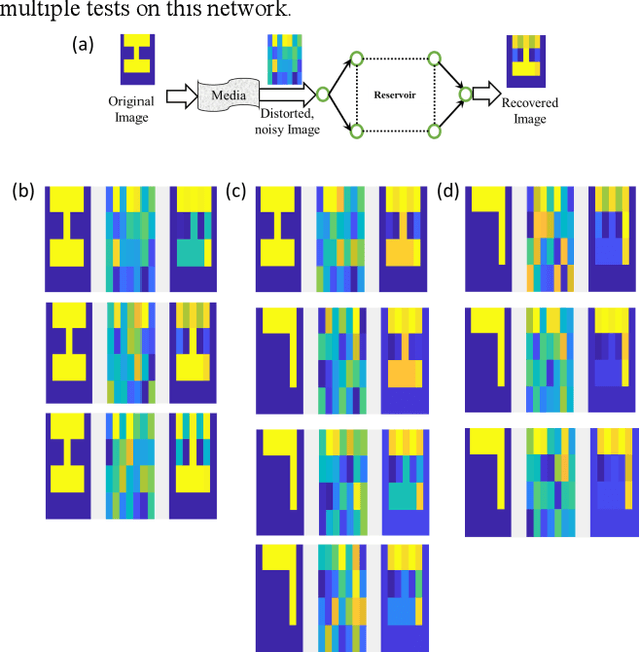

Reservoir Computing based Neural Image Filters

Sep 07, 2018

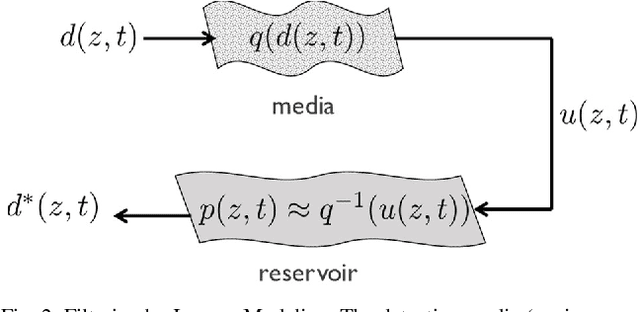

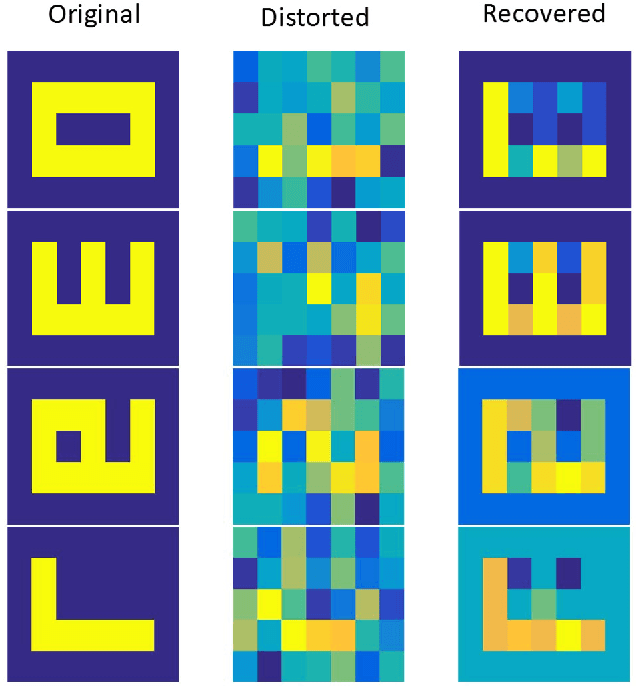

Abstract: Clean images are an important requirement for machine vision systems to recognize visual features correctly. However, the environment, optics, and electronics of physical imaging systems can introduce extreme distortions and noise into the acquired images. In this work, we explore the use of reservoir computing, a dynamical neural network model inspired by biological systems, to create dynamic image filtering systems that extract signal from noise using inverse modeling. We discuss the possibility of implementing these networks in hardware close to the sensors.
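Filtering by inverse modeling can be illustrated in one dimension: a reservoir is driven by the noisy signal, and a linear readout is trained by ridge regression to reproduce the clean signal. This sketch is an assumption-laden stand-in for the image filters in the paper (a 1D sine instead of images, additive Gaussian noise, and hypothetical names `reservoir_states`, `fit_readout`, and `snr_db`). The SNR is reported in dB before and after filtering.

```python
import numpy as np

def reservoir_states(inputs, n=100, spectral_radius=0.9, leak=0.5, seed=0):
    """Drive a random leaky tanh reservoir with a scalar input sequence."""
    rng = np.random.default_rng(seed)
    w = rng.normal(size=(n, n))
    w *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w)))
    w_in = rng.uniform(-0.5, 0.5, size=n)
    x = np.zeros(n)
    states = np.empty((len(inputs), n))
    for t, u in enumerate(inputs):
        x = (1 - leak) * x + leak * np.tanh(w @ x + w_in * u)
        states[t] = x
    return states

def fit_readout(states, inputs, target, ridge=1e-6):
    """Ridge-regression readout on the extended state [1, u(t), x(t)]."""
    X = np.column_stack([np.ones(len(inputs)), inputs, states])
    w_out = np.linalg.solve(X.T @ X + ridge * np.eye(X.shape[1]), X.T @ target)
    return X @ w_out

def snr_db(clean, estimate):
    """Signal-to-noise ratio of an estimate relative to the clean signal."""
    return 10 * np.log10(np.sum(clean**2) / np.sum((clean - estimate) ** 2))

rng = np.random.default_rng(42)
t = np.linspace(0, 20 * np.pi, 2000)
clean = np.sin(t)
noisy = clean + 0.3 * rng.standard_normal(t.shape)
states = reservoir_states(noisy)
filtered = fit_readout(states, noisy, clean)
print(f"SNR noisy: {snr_db(clean, noisy):.1f} dB, filtered: {snr_db(clean, filtered):.1f} dB")
```

The SNR here is measured on the same data used to fit the readout; a fair comparison would train on one noisy recording and evaluate on another. Including the raw input `u(t)` in the readout means the filter can never do worse than passing the noisy signal through unchanged (up to the tiny ridge penalty).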

Hardware based Spatio-Temporal Neural Processing Backend for Imaging Sensors: Towards a Smart Camera

Mar 23, 2018

Abstract: In this work, we show how to build a technology platform for cognitive imaging sensors using recent advances in recurrent neural network architectures and training methods inspired by biology. We demonstrate learning and processing tasks specific to imaging sensors, including enhancement of sensitivity and signal-to-noise ratio (SNR) purely through neural filtering, beyond the fundamental limits of sensor materials, as well as the inferencing and spatio-temporal pattern recognition capabilities of these networks, with applications in object detection, motion tracking, and prediction. We then show designs of unit hardware cells built using complementary metal-oxide-semiconductor (CMOS) and emerging materials technologies for ultra-compact and energy-efficient embedded neural processors for smart cameras.

Reservoir Computing using Stochastic p-Bits

Sep 29, 2017

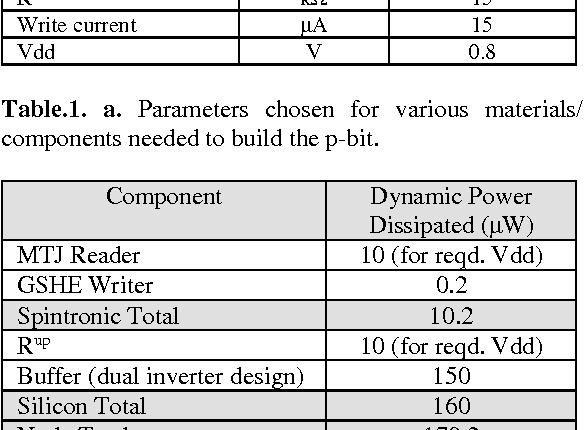

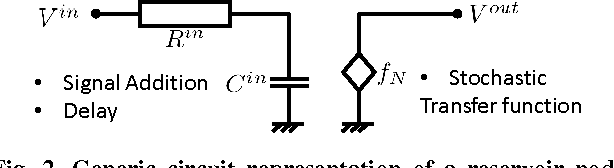

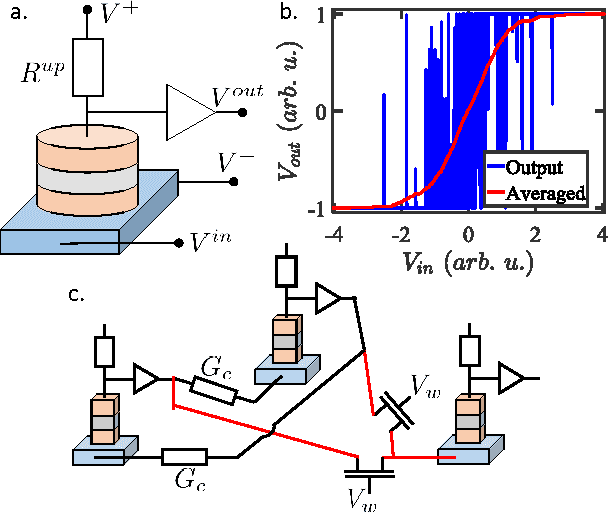

Abstract: We present a general hardware framework for building networks that directly implement Reservoir Computing, a popular software method for implementing and training Recurrent Neural Networks that is particularly suited for temporal inferencing and pattern recognition. We provide a specific example of a candidate hardware unit, based on a combination of soft magnets, spin-orbit materials, and CMOS transistors, that can implement these networks. Efficient non-von-Neumann hardware implementations of reservoir computers can open up a pathway for integrating temporal neural networks into a wide variety of emerging systems, such as the Internet of Things (IoT), industrial controls, bio- and photo-sensors, and self-driving vehicles.
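The behavior of a stochastic p-bit is often summarized by the idealized rule m = sgn(tanh(I) - r), with r drawn uniformly from (-1, 1), so that the time-averaged output equals tanh(I). The sketch below assumes this behavioral model rather than the specific soft-magnet, spin-orbit, and CMOS unit in the paper; the function name `pbit_sample` is hypothetical. It checks numerically that the mean of many binary samples tracks tanh(I).

```python
import numpy as np

def pbit_sample(input_current, n_samples, rng):
    """Idealized p-bit: m = sgn(tanh(I) - r), r ~ Uniform(-1, 1).

    P(m = +1) = P(r < tanh(I)) = (1 + tanh(I)) / 2, so E[m] = tanh(I).
    """
    r = rng.uniform(-1.0, 1.0, size=n_samples)
    return np.where(np.tanh(input_current) > r, 1.0, -1.0)

rng = np.random.default_rng(0)
for current in (-1.0, 0.0, 0.5, 2.0):
    m = pbit_sample(current, 200_000, rng)
    print(f"I={current:+.1f}  <m>={m.mean():+.3f}  tanh(I)={np.tanh(current):+.3f}")
```

In a reservoir built from such units, each neuron's binary output fluctuates, but its average supplies the tanh nonlinearity that the software model of reservoir computing expects.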