Ashley Davey

Deep Learning Methods for S-Shaped Utility Maximisation with a Random Reference Point

Oct 07, 2024

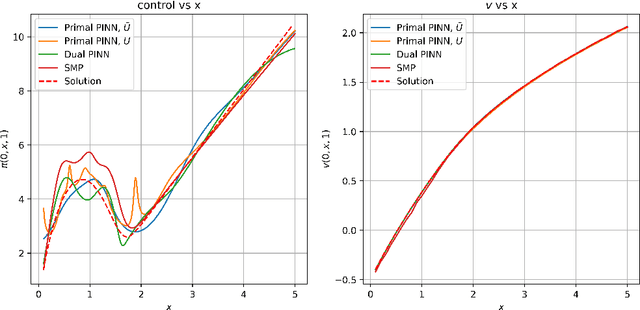

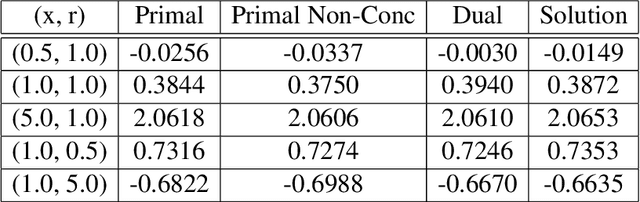

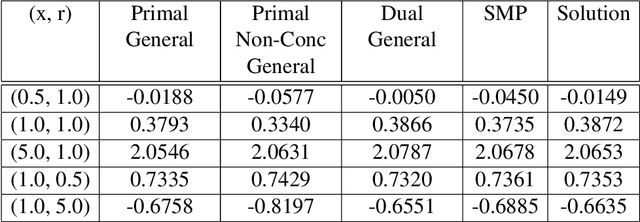

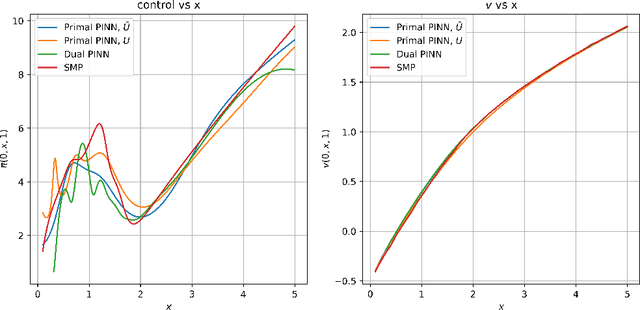

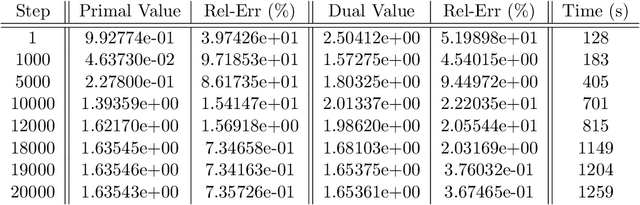

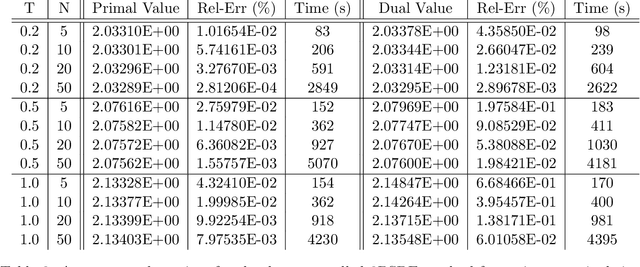

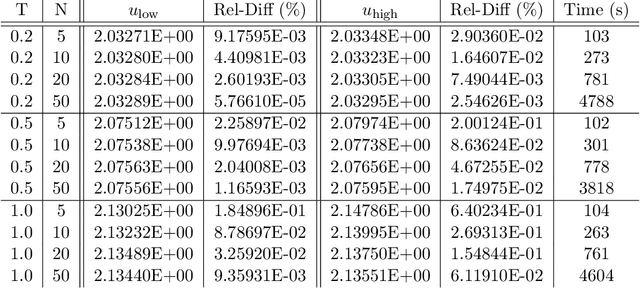

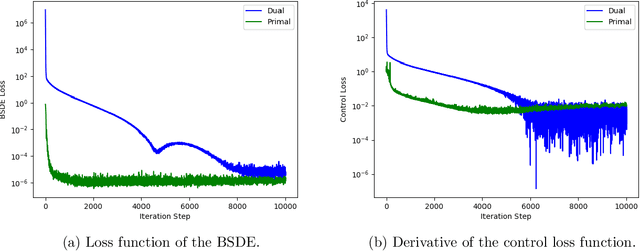

Abstract: We consider the portfolio optimisation problem where the terminal function is an S-shaped utility applied to the difference between the wealth and a random benchmark process. We develop several numerical methods for solving the problem using deep learning and duality methods. We use deep learning methods to solve the associated Hamilton-Jacobi-Bellman equation for both the primal and dual problems, and the adjoint equation arising from the stochastic maximum principle. We compare the solution of this non-concave problem to that of the problem with the concavified utility, which is a random function depending on the benchmark, in both complete and incomplete markets. We present numerical results for power and log utilities that demonstrate the accuracy of the proposed algorithms.
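For a fixed realisation B of the benchmark, the concavified utility is the smallest concave function of wealth x ≥ 0 that lies above x ↦ U(x − B). The following is a minimal sketch of computing it numerically on a grid via an upper convex hull. It assumes a Kahneman-Tversky-style S-shaped utility, U(z) = z^p for gains and −λ(−z)^p for losses; this functional form, the parameters p and λ, the benchmark value and the helper names `s_shaped_utility` and `concave_envelope` are all hypothetical illustrations, not taken from the paper.

```python
import numpy as np


def s_shaped_utility(z, p=0.5, lam=2.0):
    """Hypothetical S-shaped utility: concave power for gains, scaled convex power for losses."""
    z = np.asarray(z, dtype=float)
    return np.where(z >= 0, np.abs(z) ** p, -lam * np.abs(z) ** p)


def concave_envelope(x, y):
    """Smallest concave majorant of the points (x_i, y_i) on an increasing grid.

    Builds the upper convex hull left to right (Andrew's monotone chain) and
    linearly interpolates between hull vertices back onto the grid.
    """
    hull = []
    for xi, yi in zip(x, y):
        while len(hull) >= 2:
            (x0, y0), (x1, y1) = hull[-2], hull[-1]
            # Drop the middle point if it lies on or below the chord from hull[-2] to (xi, yi).
            if (x1 - x0) * (yi - y0) - (y1 - y0) * (xi - x0) >= 0:
                hull.pop()
            else:
                break
        hull.append((xi, yi))
    hx, hy = zip(*hull)
    return np.interp(x, hx, hy)


# Hypothetical benchmark realisation and wealth grid (wealth constrained to be nonnegative).
B = 1.0
x = np.linspace(0.0, 5.0, 2001)
u = s_shaped_utility(x - B)   # non-concave terminal utility of wealth
u_hat = concave_envelope(x, u)  # concavified utility for this B

print(f"at x = B: U = {u[400]:.4f}, concavified = {u_hat[400]:.4f}")
```

The envelope is linear from x = 0 up to a tangency point slightly above B and coincides with U beyond it. Because it depends on B, it has to be recomputed for each realisation of the benchmark, which is the sense in which the concavified utility is a random function.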

Deep Learning for Constrained Utility Maximisation

Aug 26, 2020

Abstract: This paper proposes two algorithms for solving stochastic control problems with deep reinforcement learning, with a focus on the utility maximisation problem. The first algorithm solves Markovian problems via the Hamilton-Jacobi-Bellman (HJB) equation. We solve this highly nonlinear partial differential equation (PDE) with a second order backward stochastic differential equation (2BSDE) formulation. The convex structure of the problem allows us to describe a dual problem that can either verify the original primal approach or bypass some of the complexity. The second algorithm utilises the full power of the duality method to solve non-Markovian problems, which are often beyond the scope of stochastic control solvers in the existing literature. We solve an adjoint BSDE that satisfies the dual optimality conditions. We apply these algorithms to problems with power, log and non-HARA utilities in the Black-Scholes, the Heston stochastic volatility, and path-dependent volatility models. Numerical experiments show highly accurate results with low computational cost, supporting our proposed algorithms.
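Power utility in the Black-Scholes model is a classical case with a closed-form (Merton) solution, and it also illustrates the duality mentioned above: the dual problem is posed in terms of the convex conjugate Ũ(y) = sup_{x>0} [U(x) − xy]. The sketch below is not the paper's 2BSDE or adjoint-BSDE algorithm; it only checks two textbook formulas numerically for U(x) = x^p/p, namely the optimal constant fraction π* = (μ − r)/((1 − p)σ²) and the conjugate Ũ(y) = ((1 − p)/p) y^{p/(p−1)}. All parameter values and function names are hypothetical.

```python
import numpy as np

# Hypothetical market and preference parameters (illustrative, not from the paper).
mu, r, sigma = 0.08, 0.02, 0.2   # stock drift, risk-free rate, volatility
p, T, x0 = 0.5, 1.0, 1.0         # power-utility exponent, horizon, initial wealth


def power_utility(x):
    """Power utility U(x) = x^p / p with 0 < p < 1."""
    return x ** p / p


def merton_fraction():
    """Optimal constant fraction of wealth held in the stock (Merton)."""
    return (mu - r) / ((1.0 - p) * sigma ** 2)


def expected_utility_constant_fraction(pi):
    """E[U(X_T)] when a constant fraction pi of wealth is held in the stock.

    Under Black-Scholes, X_T = x0 exp((r + pi (mu - r) - pi^2 sigma^2 / 2) T + pi sigma W_T),
    and E[exp(a W_T)] = exp(a^2 T / 2) gives E[X_T^p] in closed form.
    """
    drift = r + pi * (mu - r) - 0.5 * pi ** 2 * sigma ** 2
    return x0 ** p / p * np.exp(p * drift * T + 0.5 * p ** 2 * pi ** 2 * sigma ** 2 * T)


def dual_power_utility(y):
    """Convex conjugate U~(y) = sup_{x>0} [U(x) - x y] = (1 - p)/p * y^(p/(p-1))."""
    return (1.0 - p) / p * y ** (p / (p - 1.0))


# 1) A grid search over constant fractions should recover the Merton fraction.
pis = np.linspace(0.0, 6.0, 6001)
pi_grid = pis[np.argmax(expected_utility_constant_fraction(pis))]

# 2) A brute-force supremum over a wealth grid should match the closed-form conjugate.
y = 0.7
xs = np.linspace(1e-6, 50.0, 200001)
numeric_dual = np.max(power_utility(xs) - xs * y)

print(f"Merton fraction {merton_fraction():.4f}, grid search {pi_grid:.4f}")
print(f"dual value: closed form {dual_power_utility(y):.6f}, numeric {numeric_dual:.6f}")
```

A closed form like this gives a reference point against which a learned control or a learned dual value function can be compared before moving to models without such formulas.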