Armin Moin

Mining Software Repositories for Expert Recommendation

Apr 23, 2025

Abstract: We propose an automated approach to assigning bugs to developers in large open-source software projects. The approach assists human bug triagers, who are in charge of finding the developer with the right level of expertise in a particular area for each newly reported issue. Our approach is based on the history of software development as documented in the issue tracking systems. We deploy BERTopic and techniques from TopicMiner. The approach relies on the bug reports' features, such as the corresponding products and components, as well as their priority and severity levels. We sort developers based on their experience with specific combinations of new reports. The evaluation is performed using Top-k accuracy, and the results are compared with those reported in prior work, namely TopicMiner (MTM), BUGZIE, Bug Triaging via deep Reinforcement Learning (BT-RL), and LDA-SVM. The evaluation data come from various Eclipse and Mozilla projects, such as JDT, Firefox, and Thunderbird.
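
As an illustration of the Top-k accuracy metric named in the abstract, the following minimal sketch counts a recommendation as a hit when the developer who actually handled the bug appears among the first k ranked candidates. The function name, the developer names, and the toy data are hypothetical and are not taken from the paper.

```python
def top_k_accuracy(ranked_developers, actual_assignees, k):
    """Fraction of bug reports whose actual assignee is in the top-k ranking.

    ranked_developers: one ranked list of developer names per bug report.
    actual_assignees: the developer who actually handled each bug report.
    """
    if len(ranked_developers) != len(actual_assignees):
        raise ValueError("Each bug report needs one ranking and one assignee.")
    if not actual_assignees:
        return 0.0
    hits = sum(
        1
        for ranked, actual in zip(ranked_developers, actual_assignees)
        if actual in ranked[:k]
    )
    return hits / len(actual_assignees)


# Hypothetical rankings for three bug reports (best candidate first).
recommendations = [
    ["alice", "bob", "carol"],
    ["bob", "dave", "alice"],
    ["carol", "alice", "bob"],
]
actual = ["bob", "alice", "dave"]

for k in (1, 2, 3):
    print(f"Top-{k} accuracy: {top_k_accuracy(recommendations, actual, k):.2f}")
```

Larger values of k can only keep or increase the score, which is why results for this kind of task are usually reported for several values of k side by side.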

Automated Bug Report Prioritization in Large Open-Source Projects

Apr 22, 2025

Abstract: Large open-source projects receive a large number of issues (known as bugs), including software defect (i.e., bug) reports and new feature requests from their user and developer communities at a fast rate. The often limited project resources do not allow them to deal with all issues. Instead, they have to prioritize them according to the project's priorities and the issues' severities. In this paper, we propose a novel approach to automated bug prioritization based on the natural language text of the bug reports that are stored in the open bug repositories of the issue-tracking systems. We conduct topic modeling using a variant of LDA called TopicMiner-MTM and text classification with the BERT large language model to achieve a higher performance level compared to the state-of-the-art. Experimental results using an existing reference dataset containing 85,156 bug reports of the Eclipse Platform project indicate that we outperform existing approaches in terms of Accuracy, Precision, Recall, and F1-measure of the bug report priority prediction.

Bug Destiny Prediction in Large Open-Source Software Repositories through Sentiment Analysis and BERT Topic Modeling

Apr 22, 2025

Abstract: This study explores a novel approach to predicting key bug-related outcomes, including the time to resolution, time to fix, and ultimate status of a bug, using data from the Bugzilla Eclipse Project. Specifically, we leverage features available before a bug is resolved to enhance predictive accuracy. Our methodology incorporates sentiment analysis to derive both an emotionality score and a sentiment classification (positive or negative). Additionally, we integrate the bug's priority level and its topic, extracted using a BERTopic model, as features for a Convolutional Neural Network (CNN) and a Multilayer Perceptron (MLP). Our findings indicate that the combination of BERTopic and sentiment analysis can improve certain model performance metrics. Furthermore, we observe that balancing model inputs enhances practical applicability, albeit at the cost of a significant reduction in accuracy in most cases. To address our primary objectives, predicting time-to-resolution, time-to-fix, and bug destiny, we employ both binary classification and exact time value predictions, allowing for a comparative evaluation of their predictive effectiveness. Results demonstrate that sentiment analysis serves as a valuable predictor of a bug's eventual outcome, particularly in determining whether it will be fixed. However, its utility is less pronounced when classifying bugs into more complex or unconventional outcome categories.

TinyML for Speech Recognition

Apr 22, 2025

Abstract: We train and deploy a quantized 1D convolutional neural network model to conduct speech recognition on a highly resource-constrained IoT edge device. This can be useful in various Internet of Things (IoT) applications, such as smart homes and ambient assisted living for the elderly and people with disabilities, to name a few. In this paper, we first create a new dataset with over one hour of audio data that enables our research and will be useful to future studies in this field. Second, we utilize the technologies provided by Edge Impulse to enhance our model's performance and achieve an accuracy of up to 97% on our dataset. For the validation, we implement our prototype using the Arduino Nano 33 BLE Sense microcontroller board. This microcontroller board is specifically designed for IoT and AI applications, making it an ideal choice for our target use case scenarios. While most existing research focuses on a limited set of keywords, our model can process 23 different keywords, enabling complex commands.

Automated Duplicate Bug Report Detection in Large Open Bug Repositories

Apr 21, 2025

Abstract: Many users and contributors of large open-source projects report software defects or enhancement requests (known as bug reports) to the issue-tracking systems. However, they sometimes report issues that have already been reported. First, they may not have time to do sufficient research on existing bug reports. Second, they may not possess the right expertise in that specific area to realize that an existing bug report is essentially elaborating on the same matter, perhaps with a different wording. In this paper, we propose a novel approach based on machine learning methods that can automatically detect duplicate bug reports in an open bug repository based on the textual data in the reports. We present six alternative methods: topic modeling, Gaussian Naive Bayes, deep learning, time-based organization, clustering, and summarization using a generative pre-trained transformer large language model. Additionally, we introduce a novel threshold-based approach for duplicate identification, in contrast to the conventional top-k selection method that has been widely used in the literature. Our approach demonstrates promising results across all the proposed methods, achieving accuracy rates ranging from the high 70s to the low 90s in percentage terms. We evaluated our methods on a public dataset of issues belonging to an Eclipse open-source project.
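
The difference between top-k selection and a threshold-based rule for duplicate identification can be sketched as follows. This is only an illustration: the Jaccard token overlap used as the similarity measure, the function names, the threshold of 0.5, and the sample reports are all assumptions and do not reproduce any of the six methods in the paper.

```python
def jaccard(a, b):
    """Token-set overlap between two texts (a stand-in similarity measure)."""
    tokens_a, tokens_b = set(a.lower().split()), set(b.lower().split())
    if not tokens_a and not tokens_b:
        return 0.0
    return len(tokens_a & tokens_b) / len(tokens_a | tokens_b)


def top_k_candidates(new_report, existing, k):
    """Always return the k most similar reports, however weak the match."""
    scored = sorted(
        ((jaccard(new_report, text), report_id) for report_id, text in existing.items()),
        reverse=True,
    )
    return [report_id for _, report_id in scored[:k]]


def threshold_candidates(new_report, existing, threshold):
    """Return only reports whose similarity reaches the threshold."""
    return [
        report_id
        for report_id, text in existing.items()
        if jaccard(new_report, text) >= threshold
    ]


# Hypothetical repository contents.
existing_reports = {
    101: "editor crashes when opening large file",
    102: "dark theme colors are wrong in console",
    103: "add keyboard shortcut for rename",
}

duplicate = "editor crashes when opening a large file"
unrelated = "printing fails on linux"

print(top_k_candidates(duplicate, existing_reports, k=2))
print(threshold_candidates(duplicate, existing_reports, threshold=0.5))
print(top_k_candidates(unrelated, existing_reports, k=2))
print(threshold_candidates(unrelated, existing_reports, threshold=0.5))
```

With top-k selection, the unrelated report still receives two candidate duplicates, whereas the threshold rule returns an empty list, so a report with no plausible duplicate is not forced into a match.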

Stock Price Prediction Using a Hybrid LSTM-GNN Model: Integrating Time-Series and Graph-Based Analysis

Feb 19, 2025

Abstract: This paper presents a novel hybrid model that integrates Long Short-Term Memory (LSTM) networks and Graph Neural Networks (GNNs) to significantly enhance the accuracy of stock market predictions. The LSTM component adeptly captures temporal patterns in stock price data, effectively modeling the time series dynamics of financial markets. Concurrently, the GNN component leverages Pearson correlation and association analysis to model inter-stock relational data, capturing complex nonlinear polyadic dependencies influencing stock prices. The model is trained and evaluated using an expanding window validation approach, enabling continuous learning from increasing amounts of data and adaptation to evolving market conditions. Extensive experiments conducted on historical stock data demonstrate that our hybrid LSTM-GNN model achieves a mean square error (MSE) of 0.00144, representing a substantial reduction of 10.6% compared to the MSE of the standalone LSTM model of 0.00161. Furthermore, the hybrid model outperforms traditional and advanced benchmarks, including linear regression, convolutional neural networks (CNN), and dense networks. These compelling results underscore the significant potential of combining temporal and relational data through a hybrid approach, offering a powerful tool for real-time trading and financial analysis.
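
A minimal sketch of expanding window validation, together with a check of the relative MSE reduction quoted in the abstract, might look as follows. The window sizes and the function names are assumptions chosen for illustration; the abstract does not state the authors' actual split parameters.

```python
def expanding_window_splits(n_samples, initial_train, test_size):
    """Return (train_indices, test_indices) pairs with a growing training set.

    Each split trains on all samples before the cut-off and tests on the
    next test_size samples; the cut-off then moves forward by test_size.
    """
    splits = []
    train_end = initial_train
    while train_end + test_size <= n_samples:
        train_idx = range(0, train_end)
        test_idx = range(train_end, train_end + test_size)
        splits.append((train_idx, test_idx))
        train_end += test_size
    return splits


def relative_reduction_percent(baseline, improved):
    """Relative reduction of an error metric, in percent of the baseline."""
    return (baseline - improved) / baseline * 100


# Hypothetical series of 10 time steps: start with 4, test on 2 at a time.
for train_idx, test_idx in expanding_window_splits(10, initial_train=4, test_size=2):
    print(f"train on 0..{train_idx[-1]}, test on {test_idx[0]}..{test_idx[-1]}")

# MSE values as reported in the abstract: standalone LSTM vs. hybrid LSTM-GNN.
print(f"{relative_reduction_percent(0.00161, 0.00144):.1f}% lower MSE")
```

Because the training range always starts at index 0 and never includes later samples, each evaluation uses only data that would have been available at prediction time.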

Enhancing SQL Injection Detection and Prevention Using Generative Models

Feb 07, 2025

Abstract: SQL Injection (SQLi) continues to pose a significant threat to the security of web applications, enabling attackers to manipulate databases and access sensitive information without authorisation. Although advancements have been made in detection techniques, traditional signature-based methods still struggle to identify sophisticated SQL injection attacks that evade predefined patterns. As SQLi attacks evolve, the need for more adaptive detection systems becomes crucial. This paper introduces an innovative approach that leverages generative models to enhance SQLi detection and prevention mechanisms. By incorporating Variational Autoencoders (VAE), Conditional Wasserstein GAN with Gradient Penalty (CWGAN-GP), and U-Net, synthetic SQL queries were generated to augment training datasets for machine learning models. The proposed method demonstrated improved accuracy in SQLi detection systems by reducing both false positives and false negatives. Extensive empirical testing further illustrated the ability of the system to adapt to evolving SQLi attack patterns, resulting in enhanced precision and robustness.

AI-Enabled Software and System Architecture Frameworks: Focusing on smart Cyber-Physical Systems (CPS)

Aug 09, 2023

Abstract: Several architecture frameworks for software, systems, and enterprises have been proposed in the literature. They identified various stakeholders and defined architecture viewpoints and views to frame and address stakeholder concerns. However, the stakeholders with data science and Machine Learning (ML) related concerns, such as data scientists and data engineers, are yet to be included in existing architecture frameworks. Therefore, they failed to address the architecture viewpoints and views responsive to the concerns of the data science community. In this paper, we address this gap by establishing the architecture frameworks adapted to meet the requirements of modern applications and organizations where ML artifacts are both prevalent and crucial. In particular, we focus on ML-enabled Cyber-Physical Systems (CPSs) and propose two sets of merit criteria for their efficient development and performance assessment, namely the criteria for evaluating and benchmarking ML-enabled CPSs, and the criteria for evaluation and benchmarking of the tools intended to support users through the modeling and development pipeline. In this study, we deploy multiple empirical and qualitative research methods based on literature review and survey instruments including expert interviews and an online questionnaire. We collect, analyze, and integrate the opinions of 77 experts from more than 25 organizations in over 10 countries to devise and validate the proposed framework.

Model-Driven Quantum Federated Learning (QFL)

Apr 05, 2023

Abstract: Recently, several studies have proposed frameworks for Quantum Federated Learning (QFL). For instance, the Google TensorFlow Quantum (TFQ) and TensorFlow Federated (TFF) libraries have been deployed for realizing QFL. However, most developers are not yet familiar with Quantum Computing (QC) libraries and frameworks. A Domain-Specific Modeling Language (DSML) that provides an abstraction layer over the underlying QC and Federated Learning (FL) libraries would be beneficial. This could enable practitioners to carry out software development and data science tasks efficiently while deploying the state of the art in Quantum Machine Learning (QML). In this position paper, we propose extending existing domain-specific Model-Driven Engineering (MDE) tools for Machine Learning (ML) enabled systems, such as MontiAnna, ML-Quadrat, and GreyCat, to support QFL.

MDE for Machine Learning-Enabled Software Systems: A Case Study and Comparison of MontiAnna & ML-Quadrat

Sep 15, 2022

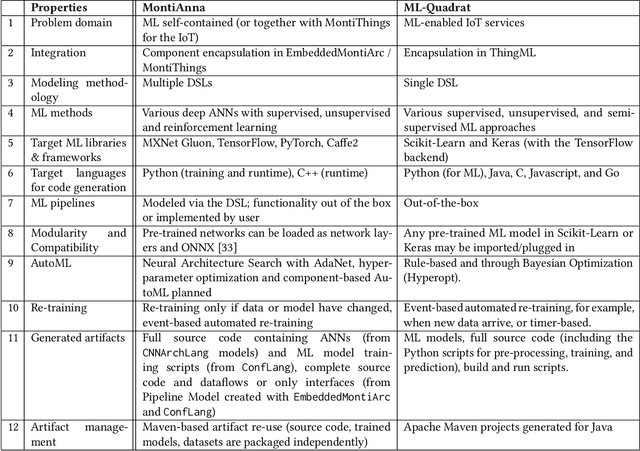

Abstract: In this paper, we propose to adopt the MDE paradigm for the development of Machine Learning (ML)-enabled software systems with a focus on the Internet of Things (IoT) domain. We illustrate how two state-of-the-art open-source modeling tools, namely MontiAnna and ML-Quadrat, can be used for this purpose, as demonstrated through a case study. The case study illustrates using ML, in particular deep Artificial Neural Networks (ANNs), for automated image recognition of handwritten digits using the MNIST reference dataset, and integrating the machine learning components into an IoT system. Subsequently, we conduct a functional comparison of the two frameworks, setting out an analysis base to include a broad range of design considerations, such as the problem domain, methods for the ML integration into larger systems, and supported ML methods, as well as topics of recent intense interest to the ML community, such as AutoML and MLOps. Accordingly, this paper is focused on elucidating the potential of the MDE approach in the ML domain. This supports the ML engineer in developing the (ML/software) model rather than implementing the code, and additionally enforces reusability and modularity of the design through enabling the out-of-the-box integration of ML functionality as a component of the IoT or cyber-physical systems.
