Ariel Larey

A Multi-Modal Approach for Face Anti-Spoofing in Non-Calibrated Systems using Disparity Maps

Oct 31, 2024

Abstract: Face recognition technologies are increasingly used in various applications, yet they are vulnerable to face spoofing attacks. These spoofing attacks often involve unique 3D structures, such as printed papers or mobile device screens. Although stereo-depth cameras can detect such attacks effectively, their high cost limits their widespread adoption. Conversely, two-sensor systems without extrinsic calibration offer a cost-effective alternative but are unable to calculate depth using stereo techniques. In this work, we propose a method to overcome this challenge in non-calibrated systems by leveraging facial attributes to derive disparity information and estimate relative depth for anti-spoofing purposes. We introduce a multi-modal anti-spoofing model, coined Disparity Model, that incorporates the derived disparity maps as a third modality alongside the two original sensor modalities. We demonstrate the effectiveness of the Disparity Model in countering various spoof attacks using a comprehensive dataset collected from the Intel RealSense ID Solution F455. Our method outperformed existing methods in the literature, achieving an Equal Error Rate (EER) of 1.71% and a False Negative Rate (FNR) of 2.77% at a False Positive Rate (FPR) of 1%. These errors are lower by 2.45% and 7.94% than the errors of the best comparison method, respectively. Additionally, we introduce a model ensemble that addresses 3D spoof attacks as well, achieving an EER of 2.04% and an FNR of 3.83% at an FPR of 1%. Overall, our work provides a state-of-the-art solution for the challenging task of anti-spoofing in non-calibrated systems that lack depth information.
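The two metrics quoted in this abstract, EER and FNR at a fixed FPR, can be computed from per-sample scores roughly as in the sketch below. It is only an illustration under stated assumptions: label 1 is taken to mean "spoof", a higher score is taken to mean "more spoof-like", and the function names are hypothetical rather than taken from the paper.

```python
import numpy as np

def error_rates(scores, labels, threshold):
    """Return (FPR, FNR) when samples with score >= threshold are flagged as spoof.

    Assumption: label 1 = spoof (positive), label 0 = genuine (negative).
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=int)
    flagged = scores >= threshold
    fpr = float(np.mean(flagged[labels == 0]))    # genuine samples flagged as spoof
    fnr = float(np.mean(~flagged[labels == 1]))   # spoof samples that were missed
    return fpr, fnr

def equal_error_rate(scores, labels):
    """Approximate EER: the operating point where FPR and FNR are closest."""
    rates = [error_rates(scores, labels, t) for t in np.unique(scores)]
    fpr, fnr = min(rates, key=lambda r: abs(r[0] - r[1]))
    return (fpr + fnr) / 2.0

def fnr_at_fpr(scores, labels, target_fpr=0.01):
    """Lowest FNR among thresholds whose FPR does not exceed target_fpr."""
    # Appending +inf adds the "flag nothing" point (FPR = 0), so a feasible
    # threshold always exists.
    thresholds = np.append(np.unique(scores), np.inf)
    rates = [error_rates(scores, labels, t) for t in thresholds]
    return min(fnr for fpr, fnr in rates if fpr <= target_fpr)
```

On a finite evaluation set the EER is only approximated, because FPR and FNR change in discrete steps as the threshold moves.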

Facial Expression Re-targeting from a Single Character

Jun 21, 2023

Abstract: Video retargeting for digital face animation is used in virtual reality, social media, gaming, movies, and video conferencing, aiming to animate avatars' facial expressions based on videos of human faces. The standard method to represent facial expressions for 3D characters is by blendshapes, a vector of weights representing the avatar's neutral shape and its variations under facial expressions, e.g., smile, puff, blinking. Datasets of paired frames with blendshape vectors are rare, and labeling can be laborious, time-consuming, and subjective. In this work, we developed an approach that handles the lack of appropriate datasets by using a synthetic dataset of only one character. To generalize to various characters, we re-represented each frame as facial landmarks. We developed a unique deep-learning architecture that groups landmarks for each facial organ and connects them to relevant blendshape weights. Additionally, we incorporated complementary methods for facial expressions that landmarks did not represent well and gave special attention to eye expressions. We have demonstrated the superiority of our approach over previous research in qualitative and quantitative metrics. Our approach achieved a higher MOS of 68% and a lower MSE of 44.2% when tested on videos with various users and expressions.

Harnessing Digital Pathology And Causal Learning To Improve Eosinophilic Esophagitis Dietary Treatment Assignment

Apr 16, 2023

Abstract: Eosinophilic esophagitis (EoE) is a chronic, food antigen-driven, allergic inflammatory condition of the esophagus associated with elevated esophageal eosinophils. EoE is a top cause of chronic dysphagia after GERD. Diagnosis of EoE relies on counting eosinophils in histological slides, a manual and time-consuming task that limits the ability to extract complex patient-dependent features. The treatment of EoE includes medication and food elimination. A personalized food elimination plan is crucial for engagement and efficiency, but previous attempts failed to produce significant results. In this work, we first utilize AI to infer histological features from the entire biopsy slide, features that cannot be extracted manually. We then develop causal learning models that can process this wealth of data. We applied our approach to the 'Six-Food vs. One-Food Eosinophilic Esophagitis Diet Study', where 112 symptomatic adults aged 18-60 years with active EoE were assigned to either a six-food elimination diet (6FED) or a one-food elimination diet (1FED) for six weeks. Our results show that the average treatment effect (ATE) of the 6FED treatment compared with the 1FED treatment is not significant, that is, neither diet was superior to the other. We examined several causal models and show that the best treatment strategy was obtained using a T-learner with two XGBoost modules. While 1FED only and 6FED only provide improvement for 35%-38% of the patients, which is not significantly different from a random treatment assignment, our causal model yields a significantly better improvement rate of 58.4%. This study illustrates the significance of AI in enhancing treatment planning by analyzing the distribution of molecular features in histological slides through causal learning. Our approach can be harnessed for other conditions that rely on histology for diagnosis and treatment.
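The T-learner named in this abstract fits one outcome model per treatment arm and takes the difference of their predictions as the estimated individual treatment effect. The sketch below illustrates that idea only: it swaps the paper's XGBoost modules for ordinary least squares to stay dependency-free, and all function names and the treatment coding (1 for one arm, 0 for the other) are assumptions made for illustration.

```python
import numpy as np

def fit_linear(X, y):
    """Least-squares fit with an intercept; a stand-in for the per-arm model."""
    A = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef

def predict_linear(coef, X):
    """Predict outcomes from the coefficients returned by fit_linear."""
    A = np.column_stack([np.ones(len(X)), X])
    return A @ coef

def t_learner_cate(X, treatment, y, X_new):
    """Estimate conditional treatment effects with a T-learner.

    One model is fit on treated samples, another on control samples;
    the effect estimate is the difference of their predictions on X_new.
    """
    X = np.asarray(X, dtype=float)
    treatment = np.asarray(treatment, dtype=int)
    y = np.asarray(y, dtype=float)
    X_new = np.asarray(X_new, dtype=float)
    coef_treated = fit_linear(X[treatment == 1], y[treatment == 1])
    coef_control = fit_linear(X[treatment == 0], y[treatment == 0])
    return predict_linear(coef_treated, X_new) - predict_linear(coef_control, X_new)

def assign_treatment(cate):
    """Recommend arm 1 where the estimated effect is positive, else arm 0."""
    return (np.asarray(cate) > 0).astype(int)
```

A per-patient recommendation then follows from the sign of the estimated effect, which is how a T-learner can outperform giving every patient the same diet even when the average treatment effect is not significant.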

Between Generating Noise and Generating Images: Noise in the Correct Frequency Improves the Quality of Synthetic Histopathology Images for Digital Pathology

Feb 13, 2023

Abstract: Artificial intelligence and machine learning techniques have the promise to revolutionize the field of digital pathology. However, these models demand considerable amounts of data, while the availability of unbiased training data is limited. Synthetic images can augment existing datasets to improve and validate AI algorithms. Yet, controlling the exact distribution of cellular features within them is still challenging. One solution is to harness conditional generative adversarial networks that take a semantic mask as input rather than random noise. Unlike in other domains, outlining the exact cellular structure of tissues is hard, and most input masks depict regions of cell types. However, using polygon-based masks introduces inherent artifacts within the synthetic images, due to the mismatch between the polygon size and the single-cell size. In this work, we show that introducing random single-pixel noise with the appropriate spatial frequency into a polygon semantic mask can dramatically improve the quality of the synthetic images. We used our platform to generate synthetic images of immunohistochemistry-treated lung biopsies. We test the quality of the images using a three-fold validation procedure. First, we show that adding the appropriate noise frequency yields 87% of the similarity metrics improvement that is obtained by adding the actual single-cell features. Second, we show that the synthetic images pass the Turing test. Finally, we show that adding these synthetic images to the training set improves AI performance on PD-L1 semantic segmentation. Our work suggests a simple and powerful approach for generating synthetic data on demand to unbias limited datasets, improve the algorithms' accuracy, and validate their robustness.

DEPAS: De-novo Pathology Semantic Masks using a Generative Model

Feb 13, 2023

Abstract: The integration of artificial intelligence into digital pathology has the potential to automate and improve various tasks, such as image analysis and diagnostic decision-making. Yet, the inherent variability of tissues, together with the need for image labeling, leads to biased datasets that limit the generalizability of algorithms trained on them. One of the emerging solutions for this challenge is synthetic histological images. However, debiasing real datasets requires not only generating photorealistic images but also the ability to control the features within them. A common approach is to use generative methods that perform image translation between semantic masks that reflect prior knowledge of the tissue and a histological image. However, unlike other image domains, the complex structure of the tissue prevents a simple creation of the histology semantic masks that are required as input to the image translation model, while semantic masks extracted from real images reduce the process's scalability. In this work, we introduce a scalable generative model, coined DEPAS, that captures tissue structure and generates high-resolution semantic masks with state-of-the-art quality. We demonstrate the ability of DEPAS to generate realistic semantic maps of tissue for three types of organs: skin, prostate, and lung. Moreover, we show that these masks can be processed using a generative image translation model to produce photorealistic histology images of two types of cancer with two different types of staining techniques. Finally, we harness DEPAS to generate multi-label semantic masks that capture the distributions of different cell types and use them to produce histological images with on-demand cellular features. Overall, our work provides a state-of-the-art solution for the challenging task of generating synthetic histological images while controlling their semantic information in a scalable way.

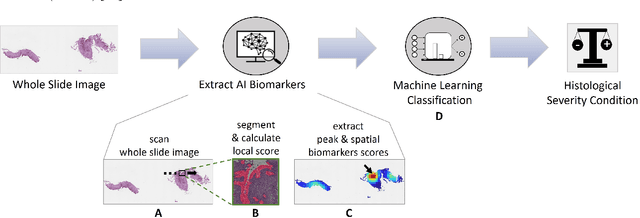

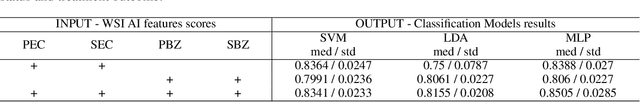

Harnessing Artificial Intelligence to Infer Novel Spatial Biomarkers for the Diagnosis of Eosinophilic Esophagitis

May 26, 2022

Abstract: Eosinophilic esophagitis (EoE) is a chronic allergic inflammatory condition of the esophagus associated with elevated esophageal eosinophils. Second only to gastroesophageal reflux disease, EoE is one of the leading causes of chronic refractory dysphagia in adults and children. EoE diagnosis requires enumerating the density of esophageal eosinophils in esophageal biopsies, a somewhat subjective and time-consuming task, which reduces the ability to process the complex tissue structure. Previous artificial intelligence (AI) approaches that aimed to improve histology-based diagnosis focused on recapitulating identification and quantification of the area of maximal eosinophil density. However, this metric does not account for the distribution of eosinophils or other histological features over the whole slide image. Here, we developed an artificial intelligence platform that infers local and spatial biomarkers based on semantic segmentation of intact eosinophils and basal zone distributions. Besides the maximal density of eosinophils (referred to as Peak Eosinophil Count [PEC]) and a maximal basal zone fraction, we identify two additional metrics that reflect the distribution of eosinophils and basal zone fractions. This approach enables a decision support system that predicts EoE activity and classifies the histological severity of EoE patients. We utilized a cohort that includes 1066 biopsy slides from 400 subjects to validate the system's performance and achieved a histological severity classification accuracy of 86.70%, sensitivity of 84.50%, and specificity of 90.09%. Our approach highlights the importance of systematically analyzing the distribution of biopsy features over the entire slide and paves the way towards a personalized decision support system that will not only assist in counting cells but can also potentially improve diagnosis and provide treatment prediction.

Prune Once for All: Sparse Pre-Trained Language Models

Nov 10, 2021

Abstract: Transformer-based language models are applied to a wide range of applications in natural language processing. However, they are inefficient and difficult to deploy. In recent years, many compression algorithms have been proposed to increase the implementation efficiency of large Transformer-based models on target hardware. In this work, we present a new method for training sparse pre-trained Transformer language models by integrating weight pruning and model distillation. These sparse pre-trained models can be used for transfer learning on a wide range of tasks while maintaining their sparsity pattern. We demonstrate our method with three known architectures to create sparse pre-trained BERT-Base, BERT-Large and DistilBERT. We show how the compressed sparse pre-trained models we trained transfer their knowledge to five different downstream natural language tasks with minimal accuracy loss. Moreover, we show how to further compress the sparse models' weights to 8-bit precision using quantization-aware training. For example, with our sparse pre-trained BERT-Large fine-tuned on SQuADv1.1 and quantized to 8-bit, we achieve a compression ratio of $40$X for the encoder with less than $1\%$ accuracy loss. To the best of our knowledge, our results show the best compression-to-accuracy ratio for BERT-Base, BERT-Large, and DistilBERT.
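The two compression steps mentioned in this abstract, weight pruning and 8-bit quantization, can be illustrated with the simplified sketch below. It is not the paper's training procedure: it uses one-shot magnitude pruning and post-training symmetric quantization as stand-ins for pruning during pre-training with distillation and for quantization-aware training. The function names are hypothetical, and the 90% sparsity used in the arithmetic is an illustrative assumption, since the abstract does not state the sparsity level.

```python
import numpy as np

def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude weights (one-shot, unstructured).

    Returns the pruned weights and the boolean keep-mask. Ties at the
    threshold are pruned too, so slightly more than the target may be removed.
    """
    w = np.asarray(weights, dtype=np.float32)
    k = int(round(sparsity * w.size))             # number of weights to remove
    if k == 0:
        return w.copy(), np.ones(w.shape, dtype=bool)
    threshold = np.sort(np.abs(w), axis=None)[k - 1]
    mask = np.abs(w) > threshold
    return w * mask, mask

def quantize_int8(weights):
    """Symmetric per-tensor quantization to int8; returns (int8 values, scale)."""
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale

def ideal_compression_ratio(sparsity, bits_before=32, bits_after=8):
    """Idealized ratio that ignores the cost of storing sparse indices."""
    return (bits_before / bits_after) / (1.0 - sparsity)
```

Under these assumptions, keeping 10% of the weights and moving from 32-bit to 8-bit storage gives an idealized ratio of $10 \times 4 = 40$, which is consistent with the $40$X figure quoted above, although a real sparse format also has to store the positions of the surviving weights.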

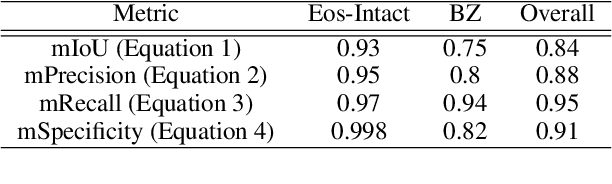

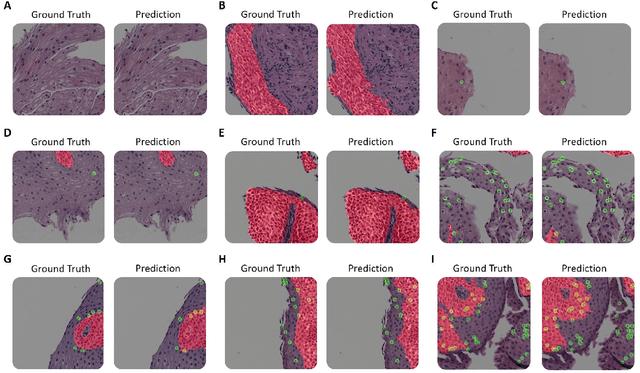

PECNet: A Deep Multi-Label Segmentation Network for Eosinophilic Esophagitis Biopsy Diagnostics

Mar 02, 2021

Abstract: Background. Eosinophilic esophagitis (EoE) is an allergic inflammatory condition of the esophagus associated with elevated numbers of eosinophils. Disease diagnosis and monitoring require determining the concentration of eosinophils in esophageal biopsies, a time-consuming, tedious and somewhat subjective task currently performed by pathologists. Methods. Herein, we aimed to use machine learning to identify, quantitate and diagnose EoE. We labeled more than 100M pixels of 4345 images obtained by scanning whole slides of H&E-stained sections of esophageal biopsies derived from 23 EoE patients. We used this dataset to train a multi-label segmentation deep network. To validate the network, we examined a replication cohort of 1089 whole slide images from 419 patients derived from multiple institutions. Findings. PECNet segmented both intact and not-intact eosinophils with a mean intersection over union (mIoU) of 0.93. This segmentation was able to quantitate intact eosinophils with a mean absolute error of 0.611 eosinophils and classify EoE disease activity with an accuracy of 98.5%. Using whole slide images from the validation cohort, PECNet achieved an accuracy of 94.8%, sensitivity of 94.3%, and specificity of 95.14% in reporting EoE disease activity. Interpretation. We have developed a deep learning multi-label semantic segmentation network that successfully addresses two of the main challenges in EoE diagnostics and digital pathology: the need to detect several types of small features simultaneously and the ability to analyze whole slides efficiently. Our results pave the way for an automated diagnosis of EoE and can be utilized for other conditions with similar challenges.
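The mean intersection over union (mIoU) reported in this abstract can be computed for a multi-label segmentation roughly as sketched below. The layout is an assumption made for illustration: each class is a separate binary mask channel, with arrays shaped (classes, height, width), and classes absent from both prediction and ground truth are skipped. The function name is hypothetical.

```python
import numpy as np

def mean_iou(pred_masks, true_masks):
    """Mean IoU over class channels of binary masks shaped (C, H, W).

    Channels that are empty in both prediction and ground truth are
    skipped, so they neither reward nor penalize the score.
    """
    pred = np.asarray(pred_masks, dtype=bool)
    true = np.asarray(true_masks, dtype=bool)
    ious = []
    for p, t in zip(pred, true):
        union = np.logical_or(p, t).sum()
        if union == 0:
            continue
        intersection = np.logical_and(p, t).sum()
        ious.append(intersection / union)
    return float(np.mean(ious)) if ious else float("nan")
```

Whether empty classes are skipped or counted as a perfect match is a convention that changes the reported number, so it should be stated alongside any mIoU value.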
