Aria Nourbakhsh

IDMC

Cluster Purge Loss: Structuring Transformer Embeddings for Equivalent Mutants Detection

Jul 26, 2025

Abstract:Recent pre-trained transformer models achieve superior performance in various code processing objectives. However, although effective at optimizing decision boundaries, common approaches for fine-tuning them for downstream classification tasks - distance-based methods or training an additional classification head - often fail to thoroughly structure the embedding space to reflect nuanced intra-class semantic relationships. Equivalent code mutant detection is one of these tasks, where the quality of the embedding space is crucial to the performance of the models. We introduce a novel framework that integrates cross-entropy loss with a deep metric learning objective, termed Cluster Purge Loss. This objective, unlike conventional approaches, concentrates on adjusting fine-grained differences within each class, encouraging the separation of instances based on semantical equivalency to the class center using dynamically adjusted borders. Employing UniXCoder as the base model, our approach demonstrates state-of-the-art performance in the domain of equivalent mutant detection and produces a more interpretable embedding space.

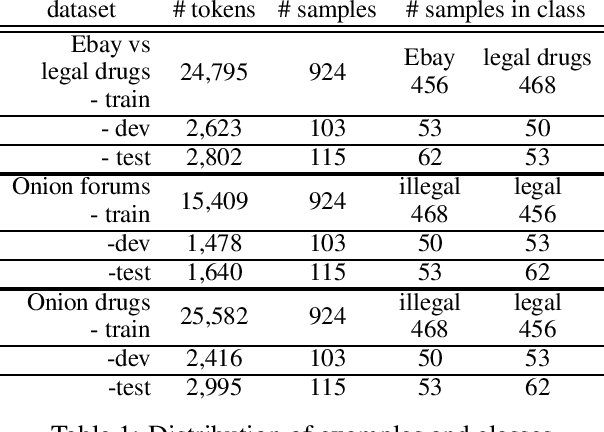

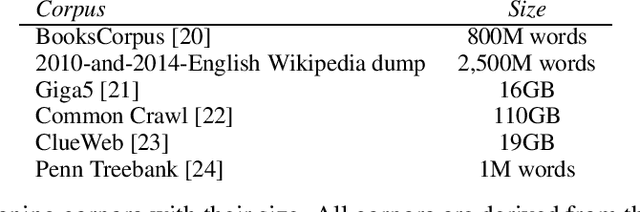

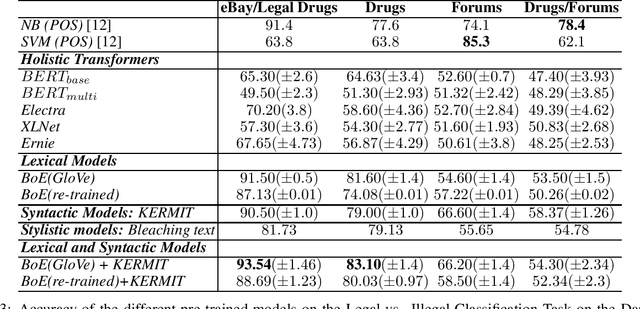

The Dark Side of the Language: Pre-trained Transformers in the DarkNet

Feb 09, 2022

Abstract:Pre-trained Transformers are challenging human performance in many natural language processing tasks. The gigantic datasets used for pre-training seem to be the key to their success on existing tasks. In this paper, we explore how a range of pre-trained natural language understanding models perform on truly novel and unexplored data, provided by classification tasks over a DarkNet corpus. Surprisingly, results show that syntactic and lexical neural networks largely outperform pre-trained Transformers. This seems to suggest that pre-trained Transformers have serious difficulties in adapting to radically novel texts.

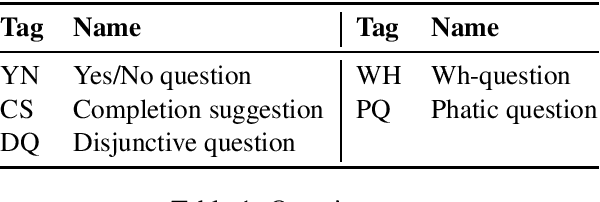

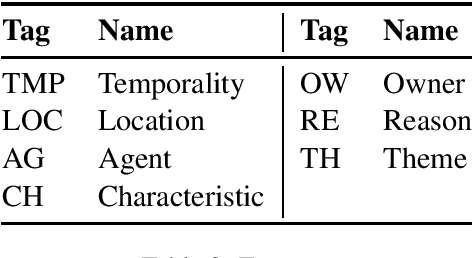

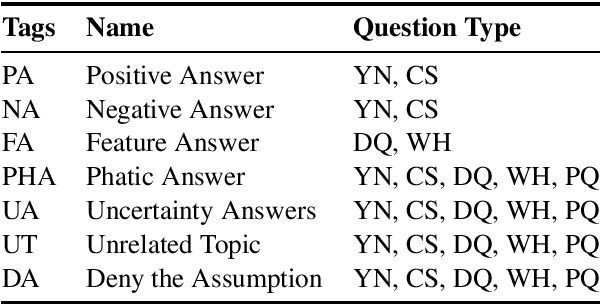

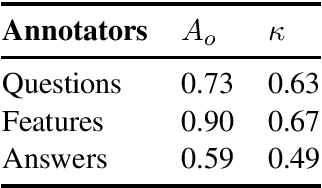

Toward Dialogue Modeling: A Semantic Annotation Scheme for Questions and Answers

Aug 23, 2019

Abstract:The present study proposes an annotation scheme for classifying the content and discourse contribution of question-answer pairs. We propose detailed guidelines for using the scheme and apply them to dialogues in English, Spanish, and Dutch. Finally, we report on initial machine learning experiments for automatic annotation.
