Apratim Majumder

Wavefront Coding for Accommodation-Invariant Near-Eye Displays

Oct 14, 2025

Abstract: We present a new computational near-eye display method that addresses the vergence-accommodation conflict problem in stereoscopic displays through accommodation-invariance. Our system integrates a refractive lens eyepiece with a novel wavefront coding diffractive optical element, operating in tandem with a pre-processing convolutional neural network. We employ end-to-end learning to jointly optimize the wavefront-coding optics and the image pre-processing module. To implement this approach, we develop a differentiable retinal image formation model that accounts for limiting aperture and chromatic aberrations introduced by the eye optics. We further integrate the neural transfer function and the contrast sensitivity function into the loss model to account for related perceptual effects. To tackle off-axis distortions, we incorporate position dependency into the pre-processing module. In addition to conducting rigorous analysis based on simulations, we also fabricate the designed diffractive optical element and build a benchtop setup, demonstrating accommodation-invariance for depth ranges of up to four diopters.

HD snapshot diffractive spectral imaging and inferencing

Jun 25, 2024

Abstract: We present a novel high-definition (HD) snapshot diffractive spectral imaging system utilizing a diffractive filter array (DFA) to capture a single image that encodes both spatial and spectral information. This single diffractogram can be computationally reconstructed into a spectral image cube, providing a high-resolution representation of the scene across 25 spectral channels in the 440-800 nm range at 1304x744 spatial pixels (~1 MP). This unique approach offers numerous advantages including snapshot capture, a form of optical compression, flexible offline reconstruction, the ability to select the spectral basis after capture, and high light throughput due to the absence of lossy filters. We demonstrate a 30-50 nm spectral resolution and compare our reconstructed spectra against ground truth obtained by conventional spectrometers. Proof-of-concept experiments in diverse applications including biological tissue classification, food quality assessment, and simulated stellar photometry validate our system's capability to perform robust and accurate inference. These results establish the DFA-based imaging system as a versatile and powerful tool for advancing scientific and industrial imaging applications.
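As a rough illustration of the cube dimensions quoted in the abstract, the short sketch below works out the spatial pixel count and the total number of samples in the reconstructed spectral cube. The channel spacing it reports assumes evenly spaced channel centers with both band edges included; the abstract does not state how the 25 channels are distributed, so that figure is only an assumption. The function name `cube_summary` is hypothetical.

```python
# Figures taken from the abstract.
WIDTH, HEIGHT = 1304, 744            # spatial pixels
CHANNELS = 25                        # spectral channels
LAMBDA_MIN_NM, LAMBDA_MAX_NM = 440.0, 800.0


def cube_summary():
    """Return (spatial pixels, cube samples, assumed channel spacing in nm)."""
    spatial_pixels = WIDTH * HEIGHT
    cube_samples = spatial_pixels * CHANNELS
    # Assumption (not stated in the abstract): channel centers are evenly
    # spaced and include both ends of the 440-800 nm range.
    spacing_nm = (LAMBDA_MAX_NM - LAMBDA_MIN_NM) / (CHANNELS - 1)
    return spatial_pixels, cube_samples, spacing_nm


if __name__ == "__main__":
    pixels, samples, spacing = cube_summary()
    print(pixels)    # 970176, i.e. roughly 1 MP
    print(samples)   # 24254400 samples in the full cube
    print(spacing)   # 15.0 nm under the even-spacing assumption
```

Under that assumption the channel spacing (15 nm) is finer than the demonstrated 30-50 nm spectral resolution, so neighboring channels would not be fully independent measurements.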

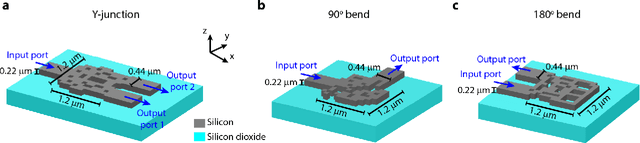

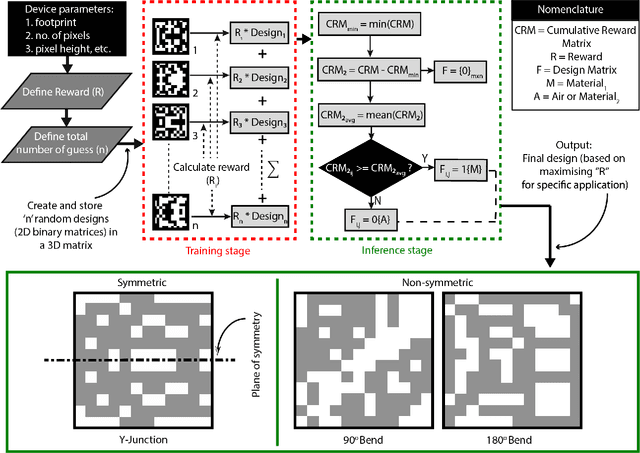

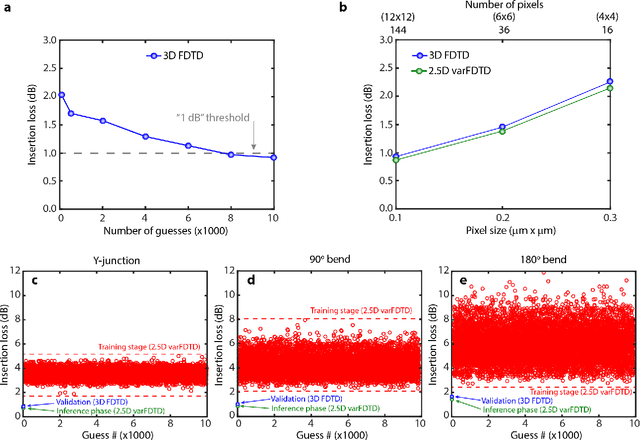

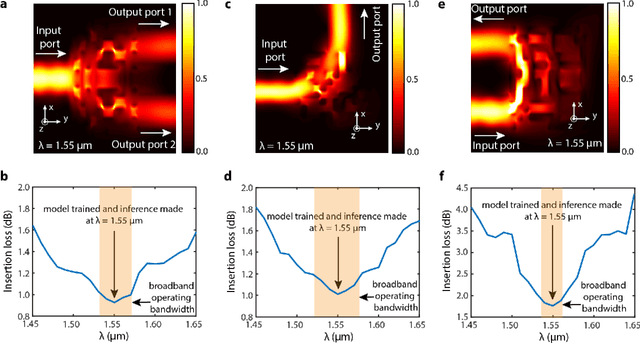

Machine Learning enables Ultra-Compact Integrated Photonics through Silicon-Nanopattern Digital Metamaterials

Nov 27, 2020

Abstract: In this work, we demonstrate three ultra-compact integrated-photonics devices, which are designed via a machine-learning algorithm coupled with finite-difference time-domain (FDTD) modeling. Because the design domain is digitized into "binary pixels," these digital metamaterials are also readily manufacturable. By showing a variety of devices (beamsplitters and waveguide bends), we showcase the generality of our approach. With an area footprint smaller than $\lambda_0^2$, our designs are amongst the smallest reported to date. Our method combines machine learning with digital metamaterials to enable ultra-compact, manufacturable devices, which could power a new "Photonics Moore's Law."
